Lecture 7 homework for 刘二大人 (Liu Er)'s *PyTorch Deep Learning* course

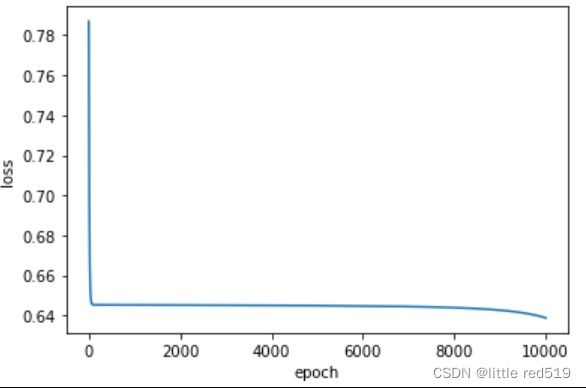

Sigmoid (the approach used in the lecture):

```python
import numpy as np
import torch
import matplotlib.pyplot as plt

# Raw string so the backslashes in the Windows path are not read as escape sequences
xy = np.loadtxt(r'E:\study\PyTorch\diabetes.csv', delimiter=',', dtype=np.float32)
x_data = torch.from_numpy(xy[:, :-1])   # every column except the last: the 8 features
y_data = torch.from_numpy(xy[:, [-1]])  # [-1] in brackets keeps an (N, 1) matrix instead of an (N,) vector
# torch.from_numpy creates tensors that share memory with the NumPy arrays

class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = torch.nn.Linear(8, 6)
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        self.sigmoid = torch.nn.Sigmoid()

    def forward(self, x):
        x = self.sigmoid(self.linear1(x))
        x = self.sigmoid(self.linear2(x))
        x = self.sigmoid(self.linear3(x))
        return x

model = Model()

criterion = torch.nn.BCELoss(reduction='mean')
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

loss_list = []
for epoch in range(10000):
    # Forward
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())
    loss_list.append(loss.item())

    # Backward
    optimizer.zero_grad()
    loss.backward()

    # Update
    optimizer.step()

epoch_list = list(range(10000))
plt.plot(epoch_list, loss_list)
plt.xlabel('epoch')
plt.ylabel('loss')
plt.show()
```
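The two slicing comments above are about array shapes. A quick NumPy sketch makes the difference visible; the array here is synthetic (an assumption standing in for diabetes.csv, with 8 feature columns plus one label column, as the `Linear(8, 6)` input layer implies):

```python
import numpy as np

# Synthetic stand-in for diabetes.csv (assumption): 5 rows, 8 feature columns + 1 label column
xy = np.arange(5 * 9, dtype=np.float32).reshape(5, 9)

x_data = xy[:, :-1]   # all columns except the last -> features
y_vec = xy[:, -1]     # an integer index drops the column axis -> 1-D vector
y_mat = xy[:, [-1]]   # a list index keeps the column axis -> 2-D matrix

print(x_data.shape, y_vec.shape, y_mat.shape)  # (5, 8) (5,) (5, 1)
```

`BCELoss` expects the prediction and the target to have the same shape, and the model outputs `(N, 1)`, which is why the label column is sliced with `[-1]` rather than `-1`.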

Homework:

1. Heaviside

Official docs: https://pytorch.org/docs/stable/generated/torch.heaviside.html#torch.heaviside

Only the Model part is shown; everything else is the same as above:

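```python
class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = torch.nn.Linear(8, 6)  # 8-dim -> 6-dim
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        self.sigmoid = torch.nn.Sigmoid()

    def forward(self, x):
        # torch.heaviside(input, values): 0 for input < 0, 1 for input > 0, `values` (0.5 here) at exactly 0.
        # .detach() cuts these outputs out of the autograd graph, so linear1 and linear2
        # receive no gradient and only linear3 is actually trained.
        x = torch.heaviside(self.linear1(x).clone().detach(), torch.tensor([0.5]))
        x = torch.heaviside(self.linear2(x).clone().detach(), torch.tensor([0.5]))
        # The output layer keeps sigmoid so BCELoss receives probabilities in (0, 1)
        x = self.sigmoid(self.linear3(x))
        return x

model = Model()
```

Result: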

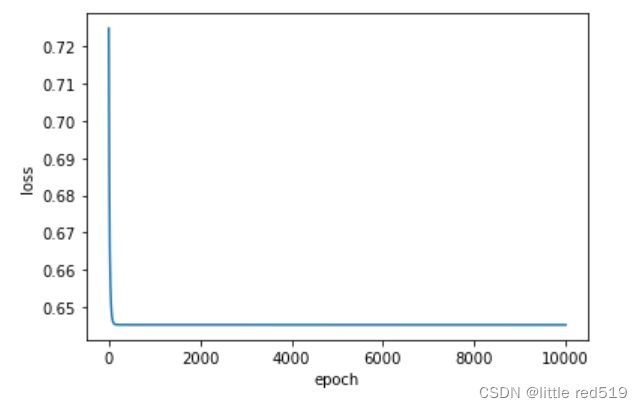
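To see what `torch.heaviside` computes and what the `.detach()` calls do to training, here is a small sketch. The layer sizes mirror the model above, but the random inputs and labels are hypothetical stand-ins for the diabetes data. As far as I know, autograd has no backward formula for `torch.heaviside`, which is presumably why the original code detaches its input; the side effect is that `linear1` and `linear2` never get a gradient. The sketch uses plain `.detach()`, since the extra `.clone()` is not needed when the result is never modified in place.

```python
import torch

# torch.heaviside(input, values): 0 where input < 0, `values` where input == 0, 1 where input > 0
z = torch.tensor([-1.0, 0.0, 2.0])
step = torch.heaviside(z, torch.tensor([0.5]))
print(step)  # values: 0.0, 0.5, 1.0

# Hypothetical mini version of the homework model, to see which layers receive gradients
torch.manual_seed(0)
linear1 = torch.nn.Linear(8, 6)
linear2 = torch.nn.Linear(6, 4)
linear3 = torch.nn.Linear(4, 1)

x = torch.randn(16, 8)                         # random stand-in features (assumption)
y = torch.randint(0, 2, (16, 1)).float()       # random stand-in 0/1 labels (assumption)

h = torch.heaviside(linear1(x).detach(), torch.tensor([0.5]))
h = torch.heaviside(linear2(h).detach(), torch.tensor([0.5]))
y_pred = torch.sigmoid(linear3(h))
loss = torch.nn.functional.binary_cross_entropy(y_pred, y)
loss.backward()

print(linear1.weight.grad, linear2.weight.grad)  # None None: no gradient reaches these layers
print(linear3.weight.grad is not None)           # True: only the last layer learns
```

With only `linear3` trainable, this network is effectively a logistic regression on fixed, randomly initialized step features, which is worth keeping in mind when comparing its loss curve with the others.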

2. Sign

Official docs: https://pytorch.org/docs/stable/generated/torch.sign.html?highlight=sign#torch.sign

```python
class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = torch.nn.Linear(8, 6)  # 8-dim -> 6-dim
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        self.sigmoid = torch.nn.Sigmoid()

    def forward(self, x):
        # torch.sign maps each value to -1, 0 or +1; .detach() again stops gradients
        # from reaching linear1 and linear2
        x = torch.sign(self.linear1(x).clone().detach())
        x = torch.sign(self.linear2(x).clone().detach())
        x = self.sigmoid(self.linear3(x))
        return x

model = Model()
```
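Unlike `torch.heaviside`, `torch.sign` does have a backward in autograd, but because the function is piecewise constant its gradient is zero everywhere. So even without `.detach()`, `linear1` and `linear2` would only ever receive zero gradients. A quick check on a hand-picked tensor:

```python
import torch

# torch.sign: -1 for negatives, 0 for zero, +1 for positives
z = torch.tensor([-2.0, 0.0, 3.0], requires_grad=True)
s = torch.sign(z)
s.sum().backward()

print(s.detach())  # values: -1., 0., 1.
print(z.grad)      # all zeros: no learning signal flows back through sign
```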

3. Linear

That is, f(x) = x. This one is really just for fun, so you can simply remove the activation function (Liu Er's own words [doge]).

```python
class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = torch.nn.Linear(8, 6)
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        # Keep the sigmoid: forward() still uses it on the output layer,
        # and BCELoss needs predictions in (0, 1)
        self.sigmoid = torch.nn.Sigmoid()

    def forward(self, x):
        # No activation on the hidden layers (f(x) = x)
        x = self.linear1(x)
        x = self.linear2(x)
        x = self.sigmoid(self.linear3(x))
        return x

model = Model()
```

Result:

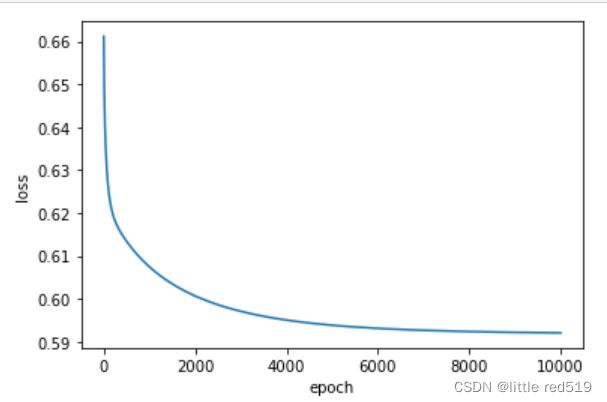
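Why dropping the activation makes the hidden layers pointless: `nn.Linear` computes `x @ W.T + b`, so three stacked linear layers compose into a single affine map, and the whole model reduces to logistic regression on the 8 features. A small sketch with randomly initialized layers (random input is an assumption for illustration) confirms the collapse numerically:

```python
import torch

torch.manual_seed(0)
l1 = torch.nn.Linear(8, 6)
l2 = torch.nn.Linear(6, 4)
l3 = torch.nn.Linear(4, 1)

x = torch.randn(10, 8)  # random stand-in input (assumption)

with torch.no_grad():
    # Three stacked layers with no activation in between
    stacked = l3(l2(l1(x)))

    # Equivalent single layer: W = W3 W2 W1, b = W3 (W2 b1 + b2) + b3
    W = l3.weight @ l2.weight @ l1.weight                      # shape (1, 8)
    b = l3.weight @ (l2.weight @ l1.bias + l2.bias) + l3.bias  # shape (1,)
    single = x @ W.T + b

print(torch.allclose(stacked, single, atol=1e-5))  # True
```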

4. Piece-wise linear (Hardsigmoid)

Official docs: https://pytorch.org/docs/stable/generated/torch.nn.Hardsigmoid.html#torch.nn.Hardsigmoid

```python
class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = torch.nn.Linear(8, 6)  # 8-dim -> 6-dim
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        self.sigmoid = torch.nn.Sigmoid()           # output layer
        self.activate = torch.nn.Hardsigmoid()      # hidden layers

    def forward(self, x):
        x = self.activate(self.linear1(x))
        x = self.activate(self.linear2(x))
        x = self.sigmoid(self.linear3(x))
        return x

model = Model()
```

Result:

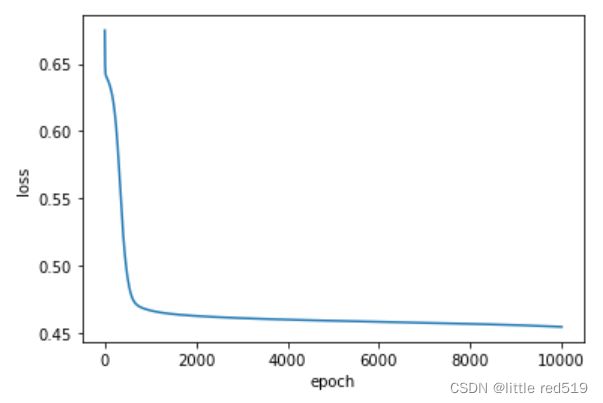
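Per the official docs linked above, Hardsigmoid is 0 for x <= -3, 1 for x >= 3, and x/6 + 1/2 in between, i.e. a clamped straight line that roughly tracks the sigmoid. A minimal check against that formula, on hand-picked inputs:

```python
import torch

z = torch.tensor([-4.0, -3.0, 0.0, 1.5, 3.0, 4.0])
hard = torch.nn.Hardsigmoid()(z)

# Piece-wise linear definition: clamp(x / 6 + 1/2, 0, 1)
manual = torch.clamp(z / 6 + 0.5, min=0.0, max=1.0)

print(hard)                          # values: 0, 0, 0.5, 0.75, 1, 1
print(torch.allclose(hard, manual))  # True
```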

5. Hyperbolic tangent (tanh):

Official docs: https://pytorch.org/docs/stable/generated/torch.nn.Tanh.html#torch.nn.Tanh

```python
class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = torch.nn.Linear(8, 6)
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        self.sigmoid = torch.nn.Sigmoid()    # output layer
        self.activate = torch.nn.Tanh()      # hidden layers

    def forward(self, x):
        x = self.activate(self.linear1(x))
        x = self.activate(self.linear2(x))
        x = self.sigmoid(self.linear3(x))
        return x

model = Model()
```
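tanh is a rescaled sigmoid, tanh(x) = 2·sigmoid(2x) - 1: same S shape, but zero-centered with outputs in (-1, 1) instead of (0, 1). A quick numerical check on an evenly spaced grid:

```python
import torch

z = torch.linspace(-5, 5, steps=11)
t = torch.nn.Tanh()(z)

# Same curve expressed through the sigmoid
via_sigmoid = 2 * torch.sigmoid(2 * z) - 1

print(torch.allclose(t, via_sigmoid, atol=1e-5))  # True
print(t.abs().max().item() < 1)                   # True: output stays inside (-1, 1)
```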

6. ReLU:

Official docs: https://pytorch.org/docs/stable/generated/torch.nn.ReLU.html#torch.nn.ReLU
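ReLU is max(0, x). In contrast to Heaviside and Sign, its gradient is 1 for positive inputs, so no `.detach()` is needed and every layer trains; for inputs at or below 0 the gradient is 0 (PyTorch uses 0 at exactly x = 0). A small check on hand-picked values:

```python
import torch

z = torch.tensor([-2.0, -0.5, 0.0, 0.5, 2.0], requires_grad=True)
r = torch.nn.ReLU()(z)
r.sum().backward()

print(r.detach())  # values: 0, 0, 0, 0.5, 2
print(z.grad)      # values: 0, 0, 0, 1, 1
```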

```python
class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = torch.nn.Linear(8, 6)
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        self.sigmoid = torch.nn.Sigmoid()    # output layer
        self.activate = torch.nn.ReLU()      # hidden layers

    def forward(self, x):
        x = self.activate(self.linear1(x))
        x = self.activate(self.linear2(x))
        x = self.sigmoid(self.linear3(x))
        return x

model = Model()
```

7. Softplus

Official docs: https://pytorch.org/docs/stable/generated/torch.nn.Softplus.html#torch.nn.Softplus

```python
class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = torch.nn.Linear(8, 6)  # 8-dim -> 6-dim
        self.linear2 = torch.nn.Linear(6, 4)
        self.linear3 = torch.nn.Linear(4, 1)
        self.sigmoid = torch.nn.Sigmoid()        # output layer
        self.activate = torch.nn.Softplus()      # hidden layers

    def forward(self, x):
        x = self.activate(self.linear1(x))
        x = self.activate(self.linear2(x))
        x = self.sigmoid(self.linear3(x))
        return x

model = Model()
```
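Per the official docs, Softplus(x) = (1/β)·log(1 + exp(βx)) with defaults `beta=1` and `threshold=20` (above the threshold it switches to the identity for numerical stability). It is a smooth version of ReLU, and its derivative is exactly the sigmoid. A minimal check of both facts on hand-picked inputs:

```python
import torch

z = torch.tensor([-3.0, 0.0, 3.0, 25.0])
sp = torch.nn.Softplus()(z)            # defaults: beta=1, threshold=20
manual = torch.log1p(torch.exp(z))     # log(1 + e^x)

print(torch.allclose(sp, manual))      # True
print(sp[1].item())                    # log(2), about 0.6931

# The derivative of softplus is the sigmoid
x = torch.tensor([-3.0, 0.0, 3.0], requires_grad=True)
torch.nn.Softplus()(x).sum().backward()
print(torch.allclose(x.grad, torch.sigmoid(x.detach())))  # True
```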