[Paper Reading] Deep Cocktail Network: Multi-source Unsupervised Domain Adaptation with Category Shift

SUMMARY@ 2020/5/12

Table of Contents

- 1. Method abstract
  - during training, two alternating adaptation steps:
- 2. Motivation
- 3. Challenges /Problem to be solved
- 4. Contribution
- 5. Related work
  - 5.1 Unsupervised domain adaptation with single source
  - 5.2 Domain adaptation with multiple sources
  - 5.3 two branches of transfer learning closely relate to MDA (supervised)
- 6. Settings
- 7. Compared with Open Set DA
- 8. DCTN: framework details
  - 8.1 Feature extractor $F$
  - 8.2 (Multi-source) domain discriminator $D$
  - 8.3 (Multi-source) category classifier $C$
  - 8.4 Target classification operator
  - 8.5 Connection to distribution weighted combining rule

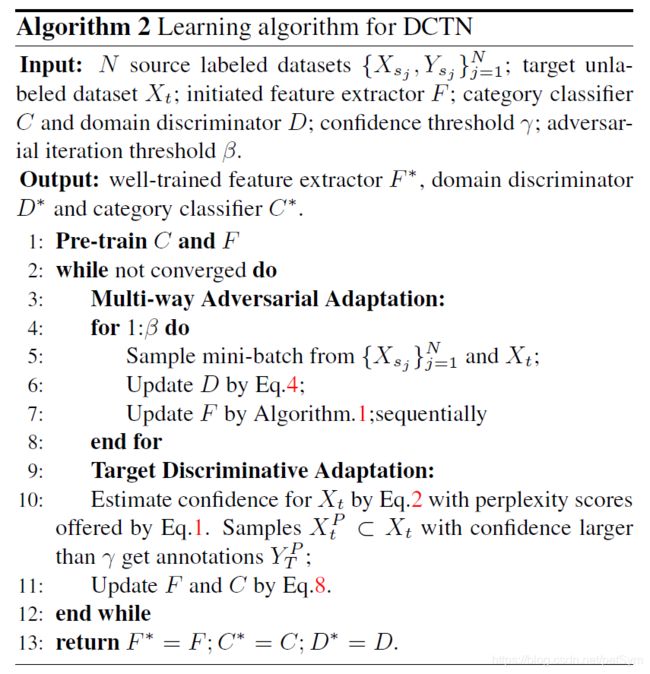

- 9 Learning
  - 9.1 Pre-training C and F
  - 9.2 Multi-way Adversarial Adaptation
    - Online hard domain batch mining
  - 9.3 Target Discriminative Adaptation
- 10. Experiments
  - 10.1 Benchmarks
  - 10.2 Evaluations in the vanilla(common) setting
  - 10.3 Evaluations in the category shift setting
- 11. Further Analysis
  - 11.1 Feature visualization.
  - 11.2 Ablation study
  - 11.3 Convergence analysis

1. Method abstract

Inspired by the distribution weighted combining rule in [33], the target distribution can be represented as the weighted combination of the multi-source distributions.

An ideal target predictor can be obtained by integrating all source predictions based on the corresponding source distribution weights.

Besides the feature extractor, DCTN includes:

- a (multi-source) category classifier that predicts the class from the different sources
- a (multi-source) domain discriminator that produces multiple source-target-specific perplexity scores, used as an approximation of the source distribution weights

during training, two alternating adaptation steps:

- domain discriminator:
  - deploys multi-way adversarial learning to minimize the discrepancy between the target and each of the multiple source domains
  - also predicts the source-specific perplexity scores, which denote the possibilities that a target sample belongs to the different source domains
  - the multi-way adversarial adaptation implicitly reduces the domain shifts among those sources
- feature extractor and category classifier:
  - the multi-source category classifiers are integrated with the perplexity scores to classify target samples, and the pseudo-labeled target samples together with the source samples are used to update the multi-source category classifier and the feature extractor

2. Motivation

This paper focuses on the problem of multi-source domain adaptation, where there is category shift between diverse sources.

Category shift is a new protocol in MDA, where domain shift and categorical disalignment co-exist among the sources.

This paper addresses domain shift and category shift jointly.

3. Challenges /Problem to be solved

- we cannot simply apply single-source UDA by combining all source domains, since there may be domain shifts among the sources
- eliminating the distribution discrepancy between the target and each source may be too strict, and even harmful
- category shift among the sources

4. Contribution

- We present a novel and realistic MDA protocol termed category shift, which relaxes the requirement of a shared category set among the source domains.
- Inspired by the distribution weighted combining rule, we propose the deep cocktail network (DCTN) together with an alternating adaptation algorithm to learn transferable and discriminative representations.
- We conduct comprehensive experiments on three well-known benchmarks, and evaluate our model in both the vanilla and the category shift settings. Our method achieves state-of-the-art results across most transfer tasks.

5. Related work

5.1 Unsupervised domain adaptation with single source

- domain discrepancy based methods: reduce the domain shift between the source and the target

- deep-model-based

- adversarial learning based

- others: semi-supervised method [42], domain reconstruction [14], duality [19], alignments [9] [50] [44], manifold learning [15], tensor methods [24],[31], etc.

5.2 Domain adaptation with multiple sources

- originates from A-SVM[49]

- shallow models[8] [22] [27]

- theoretical
  - learning bound for multi-source DA [3]
  - distribution weighted combining rule [33]

5.3 two branches of transfer learning closely relate to MDA (supervised)

- continual transfer learning (CTL) [43], [39]
  - CTL trains the learner to sequentially master multiple tasks across multiple domains.
- domain generalization (DG)
  - uses the existing multiple labeled domains for training, regardless of the unlabeled target samples [13, 35]

6. Settings

- Suppose the classifier for each source domain is known
- Vanilla MDA: samples from the diverse sources share the same category set
- Category shift: the categories of different sources may also differ
- $N$ different underlying source distributions $\{p_{s_j}(x,y)\}_{j=1}^{N}$
  - $X_{s_j}=\{x_i^{s_j}\}_{i=1}^{|X_{s_j}|}$
  - $Y_{s_j}=\{y_i^{s_j}\}_{i=1}^{|Y_{s_j}|}$
- one target distribution $p_t(x,y)$, without labels
  - $X_t=\{x_i^t\}_{i=1}^{|X_t|}$
- training set ensemble: $N+1$ datasets
- testing set: drawn from the target distribution
- the target domain is labeled by the union of all categories in the sources:

$$\mathcal{C}_t=\bigcup_{j=1}^{N}\mathcal{C}_{s_j}$$

7. Compared with Open Set DA

- In open set DA, the uncommon classes are unified as a negative category called "unknown".
- In contrast, category shift considers the specific misaligned categories among the multiple sources, in order to enrich the classification during transfer.

8. DCTN: framework details

8.1 Feature extractor $F$

- deep convolutional nets as the backbone
- shared weights: maps all images from the $N$ sources and the target into a common feature space
- employs adversarial learning to obtain the optimal mapping
  - because it can successfully learn both domain-invariant features and each target-source-specific relation

8.2 (Multi-source) domain discriminator $D$

- $N$ source-specific discriminators: $\{D_{s_j}\}_{j=1}^{N}$
- Given an image $x$ from source $j$ or from the target domain, the domain discriminator $D$ receives the feature $F(x)$ and classifies whether it comes from source $j$ or from the target
- for the data flow from each target instance $x^t$, the domain discriminator $D$ yields $N$ source-specific discriminative results $\{D_{s_j}(F(x^t))\}_{j=1}^{N}$
- target-source perplexity scores:

$$\mathcal{S}_{cf}\left(x^t;F,D_{s_j}\right)=-\log\left(1-D_{s_j}\left(F\left(x^t\right)\right)\right)+\alpha_{s_j}$$

$\alpha_{s_j}$ is the source-specific concentration constant. It is obtained by averaging the source $j$ discriminator losses over $X_{s_j}$. In the supplementary material, a different score is used, with a different $\alpha$:

$$\alpha_{s_j}=\frac{1}{N_T}\sum_{i}^{N_T}\left(D_{s_j}\left(1-F\left(x_i^{s_j}\right)\right)\right)^2$$

$N_T$ denotes how many times the target samples have been visited to train the model.

$x_i^{s_j}$ denotes the source $j$ instance that comes along with the coupled target instance in the adversarial learning.
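The first perplexity score above can be sketched in a few lines of plain Python. This is only an illustration under assumptions: the discriminator outputs and the per-sample source losses are hypothetical numbers, the function names are made up for this note, and the `eps` guard is an addition that is not part of the paper's formula.

```python
import math

def concentration_constant(source_losses):
    """alpha_{s_j}: mean of the source-j discriminator losses (assumed precomputed)."""
    return sum(source_losses) / len(source_losses)

def perplexity_score(d_out, alpha, eps=1e-12):
    """S_cf = -log(1 - D_{s_j}(F(x^t))) + alpha_{s_j} for one target sample.

    d_out is the discriminator output in (0, 1); eps only guards against log(0).
    """
    return -math.log(max(1.0 - d_out, eps)) + alpha

# Hypothetical numbers: one target sample scored by two source discriminators.
d_outputs = [0.9, 0.2]
alphas = [concentration_constant([0.1, 0.3]),
          concentration_constant([0.2, 0.2])]
scores = [perplexity_score(d, a) for d, a in zip(d_outputs, alphas)]
```

With these inputs the first source receives the larger score, since its discriminator output for the target sample is closer to 1.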

8.3 (Multi-source) category classifier $C$

- a multi-output net composed of $N$ source-specific predictors $\{C_{s_j}\}_{j=1}^{N}$
- each predictor is a softmax classifier
- for an image from source $j$: only the output of $C_{s_j}$ is activated and provides the gradient for training
- for a target image $x^t$, instead, all source-specific predictors provide $N$ categorization results $\{C_{s_j}(F(x^t))\}_{j=1}^{N}$ to the target classification operator

8.4 Target classification operator

- for each target feature $F(x^t)$, the target classification operator takes each source perplexity score $\mathcal{S}_{cf}\left(x^t;F,D_{s_j}\right)$ to re-weight the corresponding source-specific prediction $C_{s_j}(F(x^t))$

The confidence that $x^t$ belongs to class $c$ is

$$\mathrm{Confidence}\left(c\mid x^t\right):=\sum_{c\in\mathcal{C}_{s_j}}\frac{\mathcal{S}_{cf}\left(x^t;F,D_{s_j}\right)}{\sum\limits_{c\in\mathcal{C}_{s_k}}\mathcal{S}_{cf}\left(x^t;F,D_{s_k}\right)}\,C_{s_j}\left(c\mid F\left(x^t\right)\right),\quad c\in\bigcup_{j=1}^{N}\mathcal{C}_{s_j}\tag{2}$$

- $C_{s_j}\left(c\mid F\left(x^t\right)\right)$ denotes the softmax value of source $j$ corresponding to class $c$
- $\sum\limits_{c\in\mathcal{C}_{s_j}}$ means that only the sources containing class $c$ join the perplexity score weighting
- $\sum\limits_{c\in\mathcal{C}_{s_k}}$ in the denominator normalizes over the sources $k$ whose category set contains $c$ (all the sources in the vanilla setting)
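Eq. 2 can be illustrated with a small sketch. It reads both sums as running over the sources whose category set contains $c$, which is an interpretation of the notation. The class names, scores and softmax values are hypothetical, and `target_confidence` is a made-up helper name.

```python
def target_confidence(scores, probs, class_sets):
    """Confidence(c | x^t) for every class c in the union of the source category sets.

    scores[j]     : perplexity score S_cf of source j for this target sample (assumed > 0)
    probs[j]      : dict {class: softmax value} produced by C_{s_j}
    class_sets[j] : set of categories covered by source j
    """
    all_classes = set().union(*class_sets)
    confidence = {}
    for c in sorted(all_classes):
        # only the sources that contain class c take part in the weighting
        owners = [j for j, classes in enumerate(class_sets) if c in classes]
        norm = sum(scores[j] for j in owners)
        confidence[c] = sum(scores[j] / norm * probs[j][c] for j in owners)
    return confidence

# Hypothetical category-shift example: the two sources only share "dog".
class_sets = [{"cat", "dog"}, {"dog", "bird"}]
probs = [{"cat": 0.7, "dog": 0.3}, {"dog": 0.4, "bird": 0.6}]
scores = [3.0, 1.0]

confidence = target_confidence(scores, probs, class_sets)
prediction = max(confidence, key=confidence.get)
```

For the shared class "dog" the two predictions are mixed with weights 0.75 and 0.25, while "cat" and "bird" are each decided by the single source that contains them.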

8.5 Connection to distribution weighted combining rule

- In the distribution weighted combining rule [33], the target distribution is treated as a mixture of the multi-source distributions, weighted by unknown positive coefficients $\{\lambda_j\}_{j=1}^{N}$, namely $\mathcal{D}_t(x)=\sum_{k=1}^{N}\lambda_k\mathcal{D}_{s_k}(x)$. In the notation of [33], with $k$ sources, the combined hypothesis is

$$h_\lambda(x)=\sum_{i=1}^{k}\frac{\lambda_i D_i(x)}{\sum_{j=1}^{k}\lambda_j D_j(x)}\,h_i(x)$$

Note that each hypothesis there has a one-dimensional output, $h_i(x)\in\mathbb{R}$.

- in this paper

The ideal target classifier is the weighted combination of the source classifiers. Note that here the classifier $C_{s_j}$ of each source outputs a multi-class softmax result.

$$C_t\left(c\mid x^t\right)=\sum_{c\in\mathcal{C}_{s_j}}\frac{\lambda_j\mathcal{D}_{s_j}\left(x^t\right)}{\sum_{c\in\mathcal{C}_{s_k}}\lambda_k\mathcal{D}_{s_k}\left(x^t\right)}\,C_{s_j}\left(c\mid F\left(x^t\right)\right)$$

As the probability that $x^t$ comes from source $j$ increases, $D_{s_j}\left(F\left(x^t\right)\right)\rightarrow 1$ and $\mathcal{D}_{s_j}\left(x^t\right)\rightarrow 1$, so

$$\lambda_j\mathcal{D}_{s_j}\left(x^t\right)\propto\mathcal{S}_{cf}\left(x^t;F,D_{s_j}\right)=-\log\left(1-D_{s_j}\left(F\left(x^t\right)\right)\right)+\alpha_{s_j}$$

- so the perplexity score replaces the distribution weighting
- target images should be categorized by the classifiers from the multiple sources; the more similar a source's features are to the target's, the more trustworthy that source classifier's prediction is

9 Learning

9.1 Pre-training C and F

- take all source images to jointly train the feature extractor $F$ and the category classifier $C$
- pseudo labels for the target: these networks and the target classification operator then predict categories for all target images and annotate those with high confidence
- since the domain discriminator has not been trained yet, the uniform simplex weights are used as the perplexity scores in the target classification operator
- finally, the pre-trained feature extractor and category classifier are obtained by further fine-tuning them with the sources and the pseudo-labeled target images

For object recognition, DCTN is initialized in the same way as DAN (start with an AlexNet model pre-trained on ImageNet 2012 and fine-tune it).

For digit recognition, DCTN is trained from scratch.
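The pseudo-labeling step of the pre-training stage can be sketched as follows. The sketch assumes the vanilla setting (all sources share one category set), so that uniform perplexity scores reduce to a plain average of the source predictions. The softmax vectors and the threshold value 0.8 are hypothetical; the notes do not state the threshold actually used.

```python
def uniform_pseudo_labels(source_probs, threshold):
    """Annotate target samples using uniform weights over the N source classifiers.

    source_probs[i][j] is the softmax vector of source classifier j on target sample i.
    Returns (sample index, pseudo label, confidence) for the confident samples only.
    """
    selected = []
    for i, per_source in enumerate(source_probs):
        n_sources = len(per_source)
        n_classes = len(per_source[0])
        # uniform weights: every source contributes 1/N to the confidence
        avg = [sum(p[c] for p in per_source) / n_sources for c in range(n_classes)]
        label = max(range(n_classes), key=avg.__getitem__)
        if avg[label] >= threshold:
            selected.append((i, label, avg[label]))
    return selected

# Hypothetical softmax outputs: 2 target samples, 2 sources, 3 classes.
source_probs = [
    [[0.90, 0.05, 0.05], [0.80, 0.10, 0.10]],  # both sources agree confidently
    [[0.40, 0.30, 0.30], [0.30, 0.40, 0.30]],  # ambiguous sample
]
selected = uniform_pseudo_labels(source_probs, threshold=0.8)
```

Only the first sample passes the threshold here; the ambiguous one is left unlabeled and does not take part in the fine-tuning.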

9.2 Multi-way Adversarial Adaptation

ref: the ADDA paper, Adversarial Discriminative Domain Adaptation

- original GAN ($M$ denotes the mapping / feature extractor):

$$\mathcal{L}_{\mathrm{adv}_D}\left(\mathbf{X}_s,\mathbf{X}_t,M_s,M_t\right)=-\mathbb{E}_{\mathbf{x}_s\sim\mathbf{X}_s}\left[\log D\left(M_s\left(\mathbf{x}_s\right)\right)\right]-\mathbb{E}_{\mathbf{x}_t\sim\mathbf{X}_t}\left[\log\left(1-D\left(M_t\left(\mathbf{x}_t\right)\right)\right)\right]$$

$$\mathcal{L}_{\mathrm{adv}_M}=-\mathcal{L}_{\mathrm{adv}_D}$$

$$\min_{D}\mathcal{L}_{\mathrm{adv}_D}\left(\mathbf{X}_s,\mathbf{X}_t,M_s,M_t\right),\qquad\min_{M_s,M_t}\mathcal{L}_{\mathrm{adv}_M}\left(\mathbf{X}_s,\mathbf{X}_t,D\right)$$

- change 1: early in training the discriminator converges quickly, which causes the gradient to vanish. The fix changes the generator objective and splits the optimization into two independent objectives, one for the generator and one for the discriminator:

$$\mathcal{L}_{\mathrm{adv}_M}\left(\mathbf{X}_s,\mathbf{X}_t,D\right)=-\mathbb{E}_{\mathbf{x}_t\sim\mathbf{X}_t}\left[\log D\left(M_t\left(\mathbf{x}_t\right)\right)\right]\tag{**}$$

- change 2: in the setting where both distributions are changing, this objective leads to oscillation: when the mapping converges to its optimum, the discriminator can simply flip the sign of its prediction in response.

Tzeng et al. instead proposed the domain confusion objective, under which the mapping is trained using a cross-entropy loss against a uniform distribution. This loss ensures that the adversarial discriminator views the two domains identically.

Confusing the discriminator means leaving it "half in doubt", so that the marginal distributions of the source and the target after the mapping are as close as possible. The probability that a sample comes from the source or from the target is each close to one half (equivalently, the true domain label of every source and target sample is treated as 1 or 0 with probability one half each). Minimizing this cross-entropy loss pushes the discriminator's predictions on the mapped source and target samples toward the uniform distribution; the source and the target can then be regarded as mapped into very similar domains, and the DA task is accomplished.

$$\mathcal{L}_{\mathrm{adv}_M}\left(\mathbf{X}_s,\mathbf{X}_t,D\right)=-\sum_{d\in\{s,t\}}\mathbb{E}_{\mathbf{x}_d\sim\mathbf{X}_d}\left[\frac{1}{2}\log D\left(M_d\left(\mathbf{x}_d\right)\right)+\frac{1}{2}\log\left(1-D\left(M_d\left(\mathbf{x}_d\right)\right)\right)\right]\tag{*}$$

Note: equation (*) is not actually used in the ADDA method; it only appears there in the discussion of related work, and ADDA itself uses (**).

Equation (*) was proposed in Simultaneous Deep Transfer Across Domains and Tasks.

The ADDA paper changes the generator objective to (**).
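To compare the three mapping objectives discussed above, here is a minimal sketch that evaluates them on lists of discriminator outputs. It assumes that $D(\cdot)$ is the predicted probability of "source", and that the expectations are replaced by batch means; the function names and the numbers are hypothetical.

```python
import math

def disc_loss(d_src, d_tgt):
    """L_advD = -E[log D(M_s(x_s))] - E[log(1 - D(M_t(x_t)))]."""
    return (-sum(math.log(d) for d in d_src) / len(d_src)
            - sum(math.log(1.0 - d) for d in d_tgt) / len(d_tgt))

def gen_loss_minimax(d_src, d_tgt):
    """Original GAN mapping objective: L_advM = -L_advD."""
    return -disc_loss(d_src, d_tgt)

def gen_loss_inverted_label(d_tgt):
    """Objective (**): -E[log D(M_t(x_t))], used by ADDA."""
    return -sum(math.log(d) for d in d_tgt) / len(d_tgt)

def gen_loss_confusion(d_src, d_tgt):
    """Objective (*): cross-entropy against the uniform label, summed over both domains."""
    def ce_uniform(outputs):
        return -sum(0.5 * math.log(d) + 0.5 * math.log(1.0 - d)
                    for d in outputs) / len(outputs)
    return ce_uniform(d_src) + ce_uniform(d_tgt)

# Hypothetical discriminator outputs.
confident = gen_loss_confusion([0.9], [0.1])  # discriminator separates the domains
confused = gen_loss_confusion([0.5], [0.5])   # discriminator is "half in doubt"
```

The confusion objective is smallest when every output equals 0.5, where it takes the value $2\log 2$; any confident discriminator output on either domain increases it.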

in this paper

- minimax adversarial domain adaptation

$$\min_{F}\max_{D}V(F,D;\bar{C})=\mathcal{L}_{adv}(F,D)+\mathcal{L}_{cls}(F,\bar{C})\tag{4}$$

  - the classifier $C$ is fixed as $\bar{C}$ to provide stable gradient values
  - the first term is the adversarial mechanism
  - the second term is the multi-source classification loss

The optimization based on Eq. 4 works well for $D$ but not for $F$.

Since the feature extractor learns the mapping from the multiple sources and the target, the domain distributions change simultaneously during the adversarial game, which results in an oscillation that spoils the feature extractor.

When the source and target feature mappings share their architecture, the domain confusion objective can be introduced to replace the adversarial objective; it learns the mapping $F$ stably.

- multi-domain confusion loss

$$\mathcal{L}_{adv}(F,D)=\frac{1}{N}\sum_{j=1}^{N}\Big(\mathbb{E}_{x\sim X_{s_j}}\mathcal{L}_{cf}\left(x;F,D_{s_j}\right)+\mathbb{E}_{x\sim X_t}\mathcal{L}_{cf}\left(x;F,D_{s_j}\right)\Big)\tag{6}$$

where

$$\mathcal{L}_{cf}\left(x;F,D_{s_j}\right)=\frac{1}{2}\log D_{s_j}(F(x))+\frac{1}{2}\log\left(1-D_{s_j}(F(x))\right)\tag{7}$$

i.e.

$$\begin{aligned}\mathcal{L}_{adv}(F,D)=&\frac{1}{N}\sum_{j=1}^{N}\mathbb{E}_{x\sim X_{s_j}}\Big[\frac{1}{2}\log D_{s_j}(F(x))+\frac{1}{2}\log\left(1-D_{s_j}(F(x))\right)\Big]\\&+\frac{1}{N}\sum_{j=1}^{N}\mathbb{E}_{x\sim X_t}\Big[\frac{1}{2}\log D_{s_j}(F(x))+\frac{1}{2}\log\left(1-D_{s_j}(F(x))\right)\Big]\end{aligned}$$

Differences from (*):

- there is no minus sign
- the setting is multi-source, so there are $N$ discriminators, each performing the domain discrimination between one source and the target
- in (*) the source and target mappings are different; here the feature extractor is shared
- this paper directly turns (*) into a loss function shared by the discriminator and the generator (more precisely its negation, since the value is negative), computed between the target and each source

Cross-entropy measures the difference between two distributions; note that a cross-entropy is always non-negative.

- maximizing Eq. 6 is equivalent to minimizing the cross-entropy loss, which is what optimizes the discriminator
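Eqs. 6 and 7 can be evaluated directly on discriminator outputs, as in the sketch below. It assumes that the expectations are replaced by batch means and that every discriminator output lies strictly between 0 and 1; the function names and the numbers are hypothetical.

```python
import math

def l_cf(d):
    """Eq. 7 for a single sample: 0.5*log D + 0.5*log(1 - D); never above -log 2."""
    return 0.5 * math.log(d) + 0.5 * math.log(1.0 - d)

def multi_domain_confusion(d_src, d_tgt):
    """Eq. 6 with batch means in place of expectations.

    d_src[j] : outputs of D_{s_j} on a batch of source-j features
    d_tgt[j] : outputs of D_{s_j} on a batch of target features
    """
    n_sources = len(d_src)
    total = 0.0
    for j in range(n_sources):
        total += sum(l_cf(d) for d in d_src[j]) / len(d_src[j])
        total += sum(l_cf(d) for d in d_tgt[j]) / len(d_tgt[j])
    return total / n_sources

# Hypothetical outputs for N = 2 sources.
confused = multi_domain_confusion([[0.5, 0.5], [0.5]], [[0.5], [0.5, 0.5]])
sharp = multi_domain_confusion([[0.9], [0.8]], [[0.1], [0.3]])
```

The value is largest, namely $-2\log 2$, when every discriminator outputs 0.5, which matches the remark that maximizing Eq. 6 minimizes the cross-entropy against the uniform label.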

Online hard domain batch mining

- samples from the different sources are sometimes useless for improving the adaptation to the target, and as training proceeds, more and more redundant source samples drag down the performance of the whole model
- minibatch: sample a batch of $M$ samples for the target and for each source domain
- the loss of each source-target discriminator $D_{s_j}$ is viewed as the degree to which it distinguishes the $M$ target samples $x_i^t$ from the $M$ samples of the $j$-th source:

$$\sum_{i}^{M}-\log D_{s_j}\left(F\left(x_i^{s_j}\right)\right)-\log\left(1-D_{s_j}\left(F\left(x_i^t\right)\right)\right)$$

This is a cross-entropy loss in the original GAN form. A larger value means a larger loss, i.e. the discriminator is worse at telling whether the $M$ source samples and the $M$ target samples come from source $j$ or from the target domain; in other words, the discriminator of source $j$ performs poorly.

- find the hard source domain: the feature extractor $F$ performs the worst at transforming the target samples to confuse the $j^*$-th source

$$j^*=\arg\max_{j}\Big\{\sum_{i}^{M}-\log D_{s_j}\left(F\left(x_i^{s_j}\right)\right)-\log\left(1-D_{s_j}\left(F\left(x_i^t\right)\right)\right)\Big\}_{j=1}^{N}$$

- the source $j^*$ samples and the target samples in the minibatch are then used to train the feature extractor
- the following is used in Algorithm 1 to iteratively update and find the best feature extractor:

$$\mathcal{L}_{adv}^{s_{j^*}}(F,D)=\sum_{i}^{M}\mathcal{L}_{cf}\left(x_i^{s_{j^*}};F,D_{s_{j^*}}\right)+\mathcal{L}_{cf}\left(x_i^t;F,D_{s_{j^*}}\right)$$

$$\min_{F}\max_{D}V(F,D;\bar{C})=\mathcal{L}_{adv}^{s_{j^*}}(F,D)+\mathcal{L}_{cls}(F,\bar{C})\tag{4}$$
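The selection of the hard source domain $j^*$ can be sketched as an arg-max over per-source discriminator batch losses. The discriminator outputs below are hypothetical, the function names are made up for this note, and each source is assumed to contribute a batch of the same size $M$ as the target.

```python
import math

def discriminator_batch_loss(d_src, d_tgt):
    """sum_i -log D_{s_j}(F(x_i^{s_j})) - log(1 - D_{s_j}(F(x_i^t))) over one minibatch."""
    return sum(-math.log(ds) - math.log(1.0 - dt) for ds, dt in zip(d_src, d_tgt))

def hardest_source(d_src_per_source, d_tgt_per_source):
    """Return (j_star, losses): the index of the source with the largest batch loss."""
    losses = [discriminator_batch_loss(s, t)
              for s, t in zip(d_src_per_source, d_tgt_per_source)]
    j_star = max(range(len(losses)), key=losses.__getitem__)
    return j_star, losses

# Hypothetical outputs for N = 2 sources and M = 2 samples per batch.
d_src_per_source = [[0.9, 0.8], [0.6, 0.5]]  # D_{s_j} on source-j samples
d_tgt_per_source = [[0.1, 0.2], [0.5, 0.4]]  # D_{s_j} on target samples
j_star, losses = hardest_source(d_src_per_source, d_tgt_per_source)
```

Here the second source is selected, and only its samples together with the target samples of the minibatch would enter the confusion loss for the feature extractor update.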

9.3 Target Discriminative Adaptation

- aided by the multi-way adversary, DCTN is able to obtain good domain-invariant features, which are nevertheless not guaranteed to be classifiable in the target domain
- auto-labeling strategy: annotate target samples, then jointly train the feature extractor and the multi-source category classifier with source and target images using their (pseudo-) labels
- classification losses from the multiple source images and from the target images with pseudo labels:

$$\min_{F,C}\mathcal{L}_{cls}(F,C)=\sum_{j=1}^{N}\mathbb{E}_{(x,y)\sim\left(X_{s_j},Y_{s_j}\right)}\left[\mathcal{L}\left(C_{s_j}(F(x)),y\right)\right]+\mathbb{E}_{\left(x^t,\hat{y}\right)\sim\left(X_t^p,Y_t^p\right)}\left[\sum_{\hat{y}\in\mathcal{C}_{\hat{s}}}\mathcal{L}\left(C_{\hat{s}}\left(F\left(x^t\right)\right),\hat{y}\right)\right]\tag{8}$$

The target classification operator is applied to assign pseudo labels, and the samples whose confidence is higher than a preset threshold are selected into $X_t^p$.

Given a target instance $x^t$ with pseudo-labeled class $\hat{y}$, we find the sources $\hat{s}$ that include this class $(\hat{y}\in\mathcal{C}_{\hat{s}})$, then update the network via the sum of the multi-source classification losses.
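The target term of Eq. 8 can be sketched for a single pseudo-labeled sample. The sketch assumes that $\mathcal{L}$ is the usual cross-entropy on softmax outputs; the class names and probabilities are hypothetical, and `target_pseudo_loss` is a made-up helper name.

```python
import math

def cross_entropy(probs, label):
    """Cross-entropy of one softmax output (dict {class: prob}) against a hard label."""
    return -math.log(probs[label])

def target_pseudo_loss(target_probs, pseudo_label, class_sets):
    """Sum of the classification losses over the sources s_hat that contain y_hat.

    target_probs[j] : softmax output of C_{s_j} on the target feature F(x^t)
    class_sets[j]   : set of categories covered by source j
    """
    return sum(cross_entropy(probs, pseudo_label)
               for probs, classes in zip(target_probs, class_sets)
               if pseudo_label in classes)

# Hypothetical category-shift example: the two sources only share "dog".
class_sets = [{"cat", "dog"}, {"dog", "bird"}]
target_probs = [{"cat": 0.2, "dog": 0.8}, {"dog": 0.5, "bird": 0.5}]

loss_dog = target_pseudo_loss(target_probs, "dog", class_sets)    # both sources contribute
loss_bird = target_pseudo_loss(target_probs, "bird", class_sets)  # only the second source
```

A pseudo label from a shared class updates every classifier that knows the class, whereas a private class only updates the classifier of the source that owns it.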

10. Experiments

10.1 Benchmarks

- 3 widely used UDA benchmarks
  - Office-31 [41]:
    - an object recognition benchmark with 31 categories and 4652 images unevenly spread across three visual domains: A (Amazon), D (DSLR), W (Webcam)
  - ImageCLEF-DA:
    - 50 images in each category
    - 600 images in total for each domain
    - derived from the ImageCLEF 2014 domain adaptation challenge, and organized by selecting 12 object categories (aeroplane, bike, bird, boat, bottle, bus, car, dog, horse, monitor, motorbike, and people) shared by three well-known real-world datasets: I (ImageNet ILSVRC 2012), P (Pascal VOC 2012), C (Caltech-256)
  - Digits-five
    - five digit image sets, sampled respectively from the following public datasets:
      - mt (MNIST) [26]
      - mm (MNIST-M) [11]
      - sv (SVHN) [36]
      - up (USPS)
      - sy (Synthetic Digits) [11]
    - for MNIST, MNIST-M, SVHN and Synthetic Digits, 25000 images are drawn for training and 9000 for testing in each dataset
    - there are only 9298 images in USPS, so the entire dataset is used as the domain

10.2 Evaluations in the vanilla(common) setting

baselines

- multi-source: two shallow methods
  - sparse FRAME (sFRAME) [46]
    - a non-stationary Markov random field model that reproduces the observed statistical properties of filter responses at a subset of selected locations, scales and orientations
    - it can represent a wide variety of object patterns in natural images, and the learned models are useful for object classification
  - SGF [16]
    - motivated by incremental learning, it creates intermediate representations of the data between the two domains by viewing the generative subspaces (of the same dimension) created from these domains as points on the Grassmann manifold, and sampling points along the geodesic between them to obtain subspaces that provide a meaningful description of the underlying domain shift
- single-source models applied to multiple sources: conventional (TCA, GFK) / deep

  Since those methods work in the single-source setting, two MDA standards are introduced for different purposes:
  - Source combine: all source domains are combined into a traditional single-source vs. target setting
    - this standard tests whether the multiple sources are valuable to exploit
  - Single best: among the multiple source domains, the single-source transfer result that performs best on the test set is reported
    - this standard tests whether the best single-source UDA can be further improved by introducing another source transfer
- source only
  - used as baselines in the Source combine and multi-source standards
  - use all images from the sources to train backbone-based multi-source classifiers and directly apply them to classify target images

10.3 Evaluations in the category shift setting

- split all categories into two non-overlapping class sets, defined as the private classes
  - overlap
  - disjoint
- DAN also suffers negative transfer gains in most situations, which indicates that the transferability of DAN is crippled under category shift
- In contrast, DCTN reduces the performance drop compared to the model in the vanilla setting, and obtains positive transfer gains in all situations. This reveals that DCTN can resist the negative transfer caused by category shift

11. Further Analysis

11.1 Feature visualization.

Visualize the DCTN activations before and after adaptation.

- DCTN can successfully learn transferable features with multiple sources
- the features learned by DCTN attain a desirable discriminative property

11.2 Ablation study

- the adversarial-only model excludes the pseudo labels and updates the category classifier with source samples only
- the pseudo-only model drops the adversary and categorizes target samples with the averaged multi-source results
- a variant without the domain batch mining technique

11.3 Convergence analysis

Despite frequent fluctuations, the classification loss, the adversarial loss and the testing error gradually converge.