Realsense D455: Calibration + Running VINS-MONO

Reference for running: https://blog.csdn.net/qq_41839222/article/details/86552367

Reference for calibration: https://blog.csdn.net/xiaoxiaoyikesu/article/details/105646064

Installing kalibr: https://github.com/ethz-asl/kalibr/wiki/installation

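Problem 1: Installing the dependency with `sudo pip install python-igraph --upgrade` failed with:

```
Command "python setup.py egg_info" failed with error code 1 in /tmp/pip-build-HgfXHE/python-igraph/
```

After trying many approaches, I finally installed it with apt-get. (It also seems that this package cannot be installed in a Python 3.7 environment; it only worked after I deactivated Anaconda and used the default Python 2.7.)

```shell
sudo apt-get install python-igraph
```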

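Problem 2: When building kalibr from source, downloading suitesparse took far too long.

Solution: download the corresponding release from GitHub: https://github.com/jluttine/suitesparse/releases

After downloading and extracting the suitesparse archive, the extracted folder is named "suitesparse-4.2.1". Rename it to "SuiteSparse" and place it under "<ROS workspace>/build/kalibr/suitesparse/suitesparse_src-prefix/src/suitesparse_src".

(Based on the comment by 走火入魔 at https://blog.csdn.net/u010003609/article/details/104715475)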

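Problem 3: Building kalibr failed with:

```
Errors << aslam_cv_python:make /home/yasaburo3/project/kalibr-workspace/logs/aslam_cv_python/build.make.000.log

/usr/include/boost/python/detail/destroy.hpp:20:9: error: ‘Eigen::MatrixBase::~MatrixBase() [with Derived = Eigen::Matrix

p->~T();
```

Reference: https://github.com/ethz-asl/kalibr/issues/396

The cause appears to be that newer versions of Eigen changed something that used to be public (judging from the error, the `MatrixBase` destructor) to protected. After applying the changes from the answer in that issue, the build succeeded. (In principle, switching to an older Eigen version should also work.)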

After installing code_utils and imu_utils and following the calibration reference above, I obtained this IMU calibration result:

```yaml
%YAML:1.0
---
type: IMU
name: d455
Gyr:
   unit: " rad/s"
   avg-axis:
      gyr_n: 2.4619970724548633e-03
      gyr_w: 3.3803458569191471e-05
   x-axis:
      gyr_n: 1.7344090512858039e-03
      gyr_w: 3.0020282041206071e-05
   y-axis:
      gyr_n: 3.8089577973404579e-03
      gyr_w: 5.9955836202839161e-05
   z-axis:
      gyr_n: 1.8426243687383285e-03
      gyr_w: 1.1434257463529169e-05
Acc:
   unit: " m/s^2"
   avg-axis:
      acc_n: 2.3490554641910726e-02
      acc_w: 7.1659587470836986e-04
   x-axis:
      acc_n: 2.0733442788182205e-02
      acc_w: 6.7470235021600312e-04
   y-axis:
      acc_n: 2.2826384734378355e-02
      acc_w: 9.0457613257271044e-04
   z-axis:
      acc_n: 2.6911836403171623e-02
      acc_w: 5.7050914133639622e-04
```
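For this report, each `avg-axis` entry is simply the arithmetic mean of the x, y and z values (for example, (1.734e-03 + 3.809e-03 + 1.843e-03) / 3 ≈ 2.462e-03 for `gyr_n`), and these averaged values are what the VINS-MONO config further down uses for `acc_n`, `gyr_n`, `acc_w` and `gyr_w`. The C++ sketch below reproduces that averaging. `AxisNoise`, `averageAxes` and `printVinsImuParams` are hypothetical helper names, not part of imu_utils or VINS-MONO, and using a single averaged value per sensor is only the choice made in this post.

```cpp
#include <cassert>
#include <cstdio>
#include <vector>

// One axis of an imu_utils report (hypothetical helper type).
struct AxisNoise {
    double n;  // white-noise term: gyr_n or acc_n
    double w;  // bias random-walk term: gyr_w or acc_w
};

// Arithmetic mean over the per-axis values; for the report above this
// reproduces the avg-axis entries.
AxisNoise averageAxes(const std::vector<AxisNoise>& axes) {
    assert(!axes.empty());
    AxisNoise avg{0.0, 0.0};
    for (const AxisNoise& a : axes) {
        avg.n += a.n;
        avg.w += a.w;
    }
    const double count = static_cast<double>(axes.size());
    avg.n /= count;
    avg.w /= count;
    return avg;
}

// Print the averaged values using the key names of the VINS-MONO config.
void printVinsImuParams(const AxisNoise& acc, const AxisNoise& gyr) {
    std::printf("acc_n: %.16e\n", acc.n);
    std::printf("gyr_n: %.16e\n", gyr.n);
    std::printf("acc_w: %.16e\n", acc.w);
    std::printf("gyr_w: %.16e\n", gyr.w);
}
```

Feeding in the x/y/z gyroscope and accelerometer values from the report gives numbers that match its `avg-axis` lines to the printed precision.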

https://blog.csdn.net/Duke_Star/article/details/115942252

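For the camera intrinsics I simply used the parameters from RealSense's factory calibration, read from the camera_info topic:

```shell
rostopic echo /camera/color/camera_info
```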

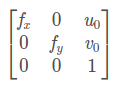

Intrinsic matrix K:
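In the ROS `sensor_msgs/CameraInfo` message, the `K` field holds the 3x3 intrinsic matrix flattened in row-major order, [fx 0 cx; 0 fy cy; 0 0 1], while `D` holds the distortion coefficients. The minimal C++ sketch below shows how the nine `K` values map onto the `projection_parameters` keys of the VINS-MONO config. It assumes the numbers have been copied out of the `rostopic echo` output by hand; `PinholeIntrinsics` and `fromCameraInfoK` are hypothetical names.

```cpp
#include <array>
#include <cassert>

// Pinhole intrinsics as named in the VINS-MONO config (hypothetical type).
struct PinholeIntrinsics {
    double fx, fy, cx, cy;
};

// CameraInfo K is row-major:
//   [fx  0 cx]
//   [ 0 fy cy]
//   [ 0  0  1]
PinholeIntrinsics fromCameraInfoK(const std::array<double, 9>& K) {
    assert(K[8] == 1.0);  // bottom-right element of a pinhole K is 1
    return PinholeIntrinsics{K[0], K[4], K[2], K[5]};
}
```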

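Alternatively, the camera intrinsics and the camera-to-IMU extrinsics can be obtained with the following method (reference: https://www.freesion.com/article/47171367483/), which does not use kalibr.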

CMakeLists.txt:

```cmake
cmake_minimum_required(VERSION 3.4.1)
project(calib)

set(CMAKE_CXX_FLAGS "-std=c++11")

find_package(OpenCV REQUIRED)
find_package(realsense2 REQUIRED)

include_directories(${OpenCV_INCLUDE_DIRS})
include_directories(${realsense2_INCLUDE_DIRS})

add_executable(calib calib.cpp)

target_link_libraries(calib
    ${OpenCV_LIBS}
    ${realsense2_LIBRARY}
)
```
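calib.cpp itself is not reproduced in this post. As a hedged illustration of one step that is easy to get wrong when turning librealsense output into the `extrinsicRotation` entry: librealsense documents `rs2_extrinsics::rotation` as a column-major 3x3 matrix, whereas the `data:` list of an `!!opencv-matrix` is read row by row. The sketch below uses a stand-in struct `ExtrinsicsColMajor` that mirrors the layout of `rs2_extrinsics` so it compiles without the SDK. With the SDK, the camera-to-IMU transform would presumably come from calling `get_extrinsics_to()` on the color stream profile with the IMU profile as the target; that direction is an assumption worth checking against the librealsense documentation. `isOrthonormal` is a quick sanity check on whatever ends up in the config.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Stand-in mirroring rs2_extrinsics: column-major rotation, translation in meters.
struct ExtrinsicsColMajor {
    float rotation[9];
    float translation[3];
};

using Mat3RowMajor = std::array<double, 9>;

// Reorder column-major storage into the row-major order of an
// !!opencv-matrix `data:` list.
Mat3RowMajor toRowMajor(const ExtrinsicsColMajor& e) {
    Mat3RowMajor r{};
    for (int row = 0; row < 3; ++row)
        for (int col = 0; col < 3; ++col)
            r[row * 3 + col] = e.rotation[col * 3 + row];
    return r;
}

// Map a point from the source frame (camera) to the target frame (IMU):
// p_imu = R * p_cam + t
std::array<double, 3> transformPoint(const ExtrinsicsColMajor& e,
                                     const std::array<double, 3>& p) {
    const Mat3RowMajor R = toRowMajor(e);
    std::array<double, 3> out{};
    for (int row = 0; row < 3; ++row) {
        out[row] = e.translation[row];
        for (int col = 0; col < 3; ++col)
            out[row] += R[row * 3 + col] * p[col];
    }
    return out;
}

// A rotation matrix must satisfy R^T R = I (checked column by column).
bool isOrthonormal(const Mat3RowMajor& R, double tol) {
    assert(tol > 0.0);
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) {
            double dot = 0.0;  // dot product of columns i and j
            for (int k = 0; k < 3; ++k) dot += R[k * 3 + i] * R[k * 3 + j];
            if (std::fabs(dot - (i == j ? 1.0 : 0.0)) > tol) return false;
        }
    return true;
}
```

For example, the nine `extrinsicRotation` values in the configuration below pass `isOrthonormal(R, 1e-5)`.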

The resulting configuration is as follows:

```yaml
%YAML:1.0

#common parameters
imu_topic: "/camera/imu"
image_topic: "/camera/color/image_raw"
output_path: "/home/tony-ws1/output/"

#camera calibration
model_type: PINHOLE
camera_name: camera
image_width: 640
image_height: 480
distortion_parameters:
   k1: -5.58381e-02
   k2: 6.70407e-02
   p1: -2.42111e-04
   p2: -2.12417e-02
projection_parameters:
   fx: 3.7958282470703125e+02
   fy: 3.793941955566406e+02
   cx: 3.1927392578125e+02
   cy: 2.471514129638672e+02

# Extrinsic parameter between IMU and Camera.
estimate_extrinsic: 0   # 0  Have accurate extrinsic parameters. We will trust the following imu^R_cam, imu^T_cam, don't change it.
                        # 1  Have an initial guess about extrinsic parameters. We will optimize around your initial guess.
                        # 2  Don't know anything about extrinsic parameters. You don't need to give R,T. We will try to calibrate it. Do some rotation movement at beginning.
#If you choose 0 or 1, you should write down the following matrix.
#Rotation from camera frame to imu frame, imu^R_cam
extrinsicRotation: !!opencv-matrix
   rows: 3
   cols: 3
   dt: d
   data: [0.999998, 0.000727753, -0.0018542,
          -0.000731598, 0.999998, -0.00207414,
          0.00185269, 0.00207549, 0.999996]
#Translation from camera frame to imu frame, imu^T_cam
extrinsicTranslation: !!opencv-matrix
   rows: 3
   cols: 1
   dt: d
   data: [-0.0591689, -6.45117e-05, 0.000440176]

#feature tracker parameters
max_cnt: 150            # max feature number in feature tracking
min_dist: 25            # min distance between two features
freq: 10                # frequency (Hz) of publishing tracking results. At least 10 Hz for good estimation. If set to 0, the frequency will be the same as the raw image
F_threshold: 1.0        # ransac threshold (pixel)
show_track: 1           # publish tracking image as topic
equalize: 0             # if the image is too dark or too bright, turn on equalize to find enough features
fisheye: 0              # if using a fisheye lens, turn it on. A circle mask will be loaded to remove noisy edge points

#optimization parameters
max_solver_time: 0.04   # max solver iteration time (s), to guarantee real time
max_num_iterations: 8   # max solver iterations, to guarantee real time
keyframe_parallax: 10.0 # keyframe selection threshold (pixel)

#imu parameters       The more accurate parameters you provide, the better performance
acc_n: 2.3490554641910726e-02  # accelerometer measurement noise standard deviation. #0.2
gyr_n: 2.4619970724548633e-03  # gyroscope measurement noise standard deviation. #0.05
acc_w: 7.1659587470836986e-04  # accelerometer bias random walk noise standard deviation. #0.02
gyr_w: 3.3803458569191471e-05  # gyroscope bias random walk noise standard deviation. #4.0e-5
g_norm: 9.794   # gravity magnitude in Shanghai
```
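To make `distortion_parameters` and `projection_parameters` concrete: the PINHOLE model in VINS-MONO uses radial-tangential distortion with k1, k2 (radial) and p1, p2 (tangential). The C++ sketch below projects a camera-frame point using the standard form of that model; it is written from the textbook equations rather than copied from VINS-MONO's camera model code, and `PinholeRadtan` and `project` are hypothetical names. When the numbers come from librealsense, note that `rs2_intrinsics::coeffs` has five entries (k1, k2, p1, p2, k3 for the Brown-Conrady models), so it is worth confirming that calib.cpp maps the right entries onto k1, k2, p1, p2. Here p2 (-2.12e-02) is nearly two orders of magnitude larger than p1, which is unusually large for a tangential term and may deserve a second look.

```cpp
#include <array>
#include <cassert>

// Hypothetical container for the config values above.
struct PinholeRadtan {
    double fx, fy, cx, cy;  // projection_parameters
    double k1, k2, p1, p2;  // distortion_parameters
};

// Project a 3D point (camera frame, meters) to pixel coordinates with the
// pinhole model plus radial-tangential distortion.
std::array<double, 2> project(const PinholeRadtan& c, double X, double Y, double Z) {
    assert(Z > 0.0);  // the point must lie in front of the camera
    const double x = X / Z, y = Y / Z;  // normalized image plane
    const double r2 = x * x + y * y;
    const double radial = 1.0 + c.k1 * r2 + c.k2 * r2 * r2;
    const double xd = x * radial + 2.0 * c.p1 * x * y + c.p2 * (r2 + 2.0 * x * x);
    const double yd = y * radial + c.p1 * (r2 + 2.0 * y * y) + 2.0 * c.p2 * x * y;
    return std::array<double, 2>{{c.fx * xd + c.cx, c.fy * yd + c.cy}};
}
```

A point on the optical axis, e.g. `project(cam, 0.0, 0.0, 2.0)`, lands exactly on (cx, cy) regardless of the distortion coefficients, which is a quick way to check that the principal point was entered correctly.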

After that, everything can be launched (each command in its own terminal):

```shell
roslaunch realsense2_camera rs_camera.launch
roslaunch vins_estimator realsense_color.launch
roslaunch vins_estimator vins_rviz.launch
```

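The results are not especially good. In my first experiment the drift was very severe; this time it was much milder, possibly because I moved more slowly. When there are not enough feature points, the visual odometry fails and motion is estimated from the IMU alone, which quickly produces very large errors. In addition, the IMU-camera extrinsics were not calibrated with kalibr; I used the values obtained automatically by the program, which may be another source of error. I plan to calibrate them with kalibr later and compare.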
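Why IMU-only tracking degrades so quickly can be seen from double integration: an accelerometer bias b that is no longer corrected by visual measurements produces a velocity error of b·t and a position error of roughly ½·b·t². The C++ sketch below integrates a constant bias for a stationary IMU. The bias value and the 200 Hz rate in the usage note are assumptions for illustration, not D455 measurements, and errors in the estimated gravity direction (which add further drift) are ignored.

```cpp
#include <cassert>

// Dead-reckon a stationary IMU whose accelerometer has a constant,
// uncorrected bias `bias` (m/s^2), sampled every `dt` seconds for `n` steps.
// Returns the resulting position error in meters.
double positionDriftFromBias(double bias, double dt, int n) {
    assert(dt > 0.0 && n >= 0);
    double v = 0.0, p = 0.0;
    for (int i = 0; i < n; ++i) {
        v += bias * dt;  // velocity error grows linearly in time
        p += v * dt;     // position error grows quadratically in time
    }
    return p;  // approximately 0.5 * bias * (n * dt)^2
}
```

With an assumed uncorrected bias of 0.01 m/s², `positionDriftFromBias(0.01, 0.005, 2000)` (10 s at 200 Hz) returns about 0.50 m, and doubling the duration roughly quadruples the error, which is consistent with drift getting much worse whenever visual tracking drops out for more than a moment.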