【论文阅读】Delving into Deep Imbalanced Regression

论文下载

Github

bib:

@INPROCEEDINGS{yang2021delving,

title = {Delving into Deep Imbalanced Regression},

author = {Yuzhe Yang and Kaiwen Zha and Ying-Cong Chen and Hao Wang and Dina Katabi},

booktitle = {ICML},

year = {2021},

pages = {1--23}

}

1. 摘要

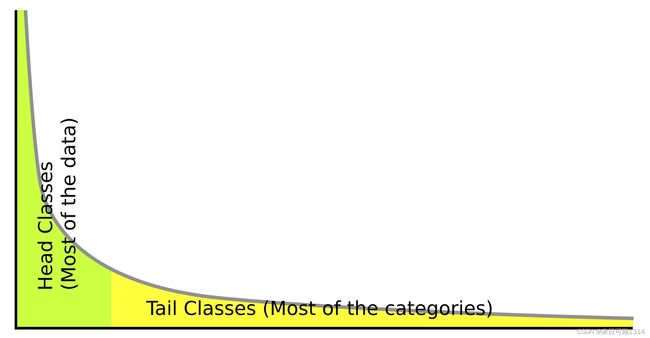

Real-world data often exhibit imbalanced distributions, where certain target values have significantly fewer observations.

Existing techniques for dealing with imbalanced data focus on targets with categorical indices, i.e., different classes.

However, many tasks involve continuous targets, where hard boundaries between classes do not exist.

We define Deep Imbalanced Regression (DIR) as learning from such imbalanced data with continuous targets, dealing with potential missing data for certain target values, and generalizing to the entire target range.

Motivated by the intrinsic difference between categorical and continuous label space, we propose distribution smoothing for both labels and features, which explicitly acknowledges the effects of nearby targets, and calibrates both label and learned feature distributions.

We curate and benchmark large-scale DIR datasets from common real-world tasks in computer vision, natural language processing, and healthcare domains.

Extensive experiments verify the superior performance of our strategies. Our work fills the gap in benchmarks and techniques for practical imbalanced regression problems. Code and data are available at: https://github.com/ YyzHarry/imbalanced-regression.

Notes:

- 现实数据存在不平衡分布,即某些目标值的观测量明显较少。然而现有的技术集中在分类问题上,在连续目标的预测(回归问题)很少讨论。言简意赅的引出研究问题。

- 本文的两个贡献:

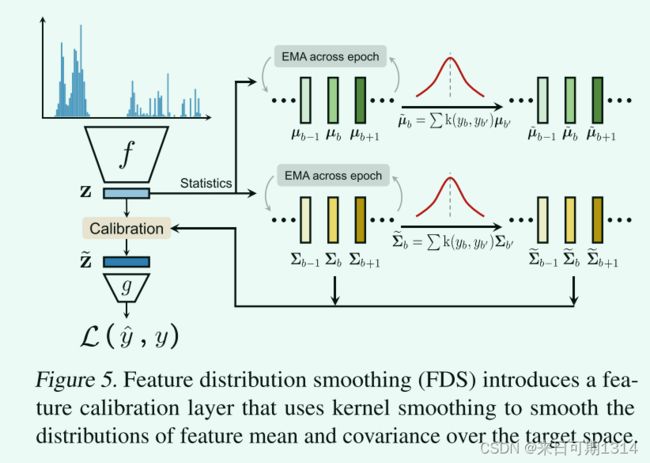

LDS: Label Distribution Smoothing. 获得影响回归预测错误率的真实标签分布(连续标签影响)。这一点在分类问题中不存在,分类的标签分布就是影响分类预测的真实分布。FDS·:Feature Distribution Smoothing. 平滑标签分布,它的提出是由于一个直觉:相似标签的样本在特征空间也距离近。

2. 前置知识

分类问题中的不平衡问题,一个直观的理解是有些类别样本数量多,而有的类别样本少,甚至没有样本。相比于回归问题中的不平衡问题,分类中的不平衡问题有很多成熟的处理方案, 主要是两种:1. 重采样; 2. 重加权。参考

2.1. 重采样(re-sampling)

简单来说就是不是所有的样本都是等概率的参与运算,降低对应很多样本类别的样本参与运算的概率,提高对应很少样本类别的样本参与运算的概率。这种欠采样方案减少了参与训练的样本。与之相反的还有过采样。

2.2. 重加权(re-weighting)

简单来说就是对不同类别的loss重新加权。

2. 算法描述

Missing value:在很大的一个区间中存在未观测的样本。Imbalance:有的区域的观测样本很多,而有的区间观测样本很少(稀疏)。

2.1. Label Distribution Smoothing

p ~ ( y ′ ) ≜ ∫ Y k ( y , y ′ ) p ( y ) d y (1) \widetilde{p}(y') \triangleq \int_{\mathcal{Y}}k(y, y')p(y)dy \tag{1} p (y′)≜∫Yk(y,y′)p(y)dy(1)

可以理解为标签维度的一个平均,类似于KNN, 只是它是针对全局的。

2.2. Feature Distribution Smoothing

μ b = 1 N b ∑ i = 1 N b z i (2) \bm{\mu}_b = \frac{1}{N_b}\sum_{i =1}^{N_b}\bm{z}_i \tag{2} μb=Nb1i=1∑Nbzi(2)

Σ b = 1 N b − 1 ∑ i = 1 N b ( z i − μ b ) ( z i − μ b ) T (3) \bm{\Sigma}_b = \frac{1}{N_b-1}\sum_{i =1}^{N_b}(\bm{z}_i - \bm{\mu}_b) (\bm{z}_i - \bm{\mu}_b)^\mathsf{T} \tag{3} Σb=Nb−11i=1∑Nb(zi−μb)(zi−μb)T(3)

这两个公式表示对于每个类别特征的均值与方差的计算。

μ ~ b = ∑ b ′ ∈ B k ( y b , y b ′ ) μ b ′ (4) \widetilde{\bm{\mu}}_b = \sum_{b' \in \mathcal{B}} k(y_b, y_{b'})\bm{\mu}_b' \tag{4} μ b=b′∈B∑k(yb,yb′)μb′(4)

Σ ~ b = ∑ b ′ ∈ B k ( y b , y b ′ ) Σ b ′ (5) \widetilde{\bm{\Sigma}}_b = \sum_{b' \in \mathcal{B}} k(y_b, y_{b'})\bm{\Sigma}_{b'} \tag{5} Σ b=b′∈B∑k(yb,yb′)Σb′(5)

μ ~ b , Σ ~ b \widetilde{\bm{\mu}}_b, \widetilde{\bm{\Sigma}}_b μ b,Σ b表示平滑后的类别特征均值与方差。

z ~ = Σ ~ b 1 2 Σ b − 1 2 ( z − μ b ) + μ ~ b \widetilde{\bm{z}} = \widetilde{\bm{\Sigma}}_b^{\frac{1}{2}} \bm{\Sigma}_b^{-\frac{1}{2}}(\bm{z}-\bm{\mu}_b) + \widetilde{\bm{\mu}}_b z =Σ b21Σb−21(z−μb)+μ b

这里注意,感觉原有的公式存在歧义, z \bm{z} z特征只会与它所属类别的均值与方差进行转化(类似白化操作),在代码里面是这样的。

for label in torch.unique(labels):

if label > self.bucket_num - 1 or label < self.bucket_start:

continue

elif label == self.bucket_start:

pass

elif label == self.bucket_num - 1:

pass

else:

# 筛选对应类别的特征进行平滑处理

features[labels == label] = calibrate_mean_var(

features[labels == label],

self.running_mean_last_epoch[int(label - self.bucket_start)],

self.running_var_last_epoch[int(label - self.bucket_start)],

self.smoothed_mean_last_epoch[int(label - self.bucket_start)],

self.smoothed_var_last_epoch[int(label - self.bucket_start)])

return features

3. 总结

这是一篇很容易理解的工作(写得很清晰),而且实验做得很充分。值得注意的是,这篇论文值得我这种不懂高大上理论推导的人学习,从实验观测到处理方法,巧妙的绕过了自己的短板-理论推导,整个文章行文流畅,代码也写得很漂亮,真是太优美了。

文章中提出的两个方法,LDS, FDS其实可以看作是两个独立的trick(技巧),从伪代码中看出没有明显重叠的地方。LDS是re-weighting在回归问题的延伸,FDS有点像BatchNormal。