A Collection of Kubernetes (k8s) Errors and Fixes

Contents

- Error: name: Invalid value: "openstack_controller"
  - Command executed:
  - Solution:
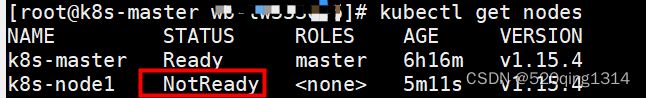

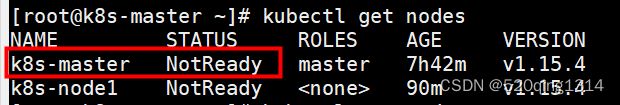

- Error: on k8s-master, kubectl get nodes shows every node as NotReady (workaround)
  - Errors in /var/log/messages:
  - Solution:
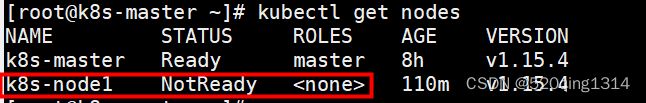

- Error: on k8s-node, kubectl get nodes shows every node as NotReady (workaround)
  - What was done before the error?
  - Solution:
- Error: on k8s-master, kubectl get nodes shows every node as NotReady (root-cause fix)
  - Solution:
- Error: on k8s-node, kubectl get nodes shows every node as NotReady (root-cause fix)
  - Solution:
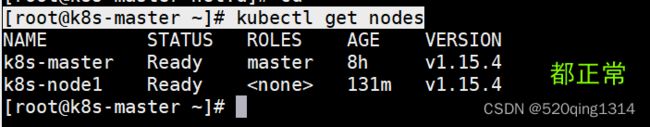

  - Finally, run on k8s-master:
- Error: preflight failures when a node joins the Kubernetes master
  - Error output:
  - Solution:
- Error: The connection to the server 11.89.46.19:6443 was refused - did you specify the right host or port?
  - Command executed:
  - Error output:
  - Solution:
- Error: error execution phase preflight: couldn't validate the identity of the API Server: abort connecting
  - Command executed:
  - Error details:
  - Solution 1:
  - Solution 2:
- Error: error execution phase preflight: unable to fetch the kubeadm-config ConfigMap: failed to get config map: Unauthorized
  - Operation performed:
  - Solution:
- Error: error execution phase kubelet-start: configmaps "kubelet-config-1.19" is forbidden: User "system:bootstrap:xvnp3x" cannot get resource "configmaps" in API group "" in the namespace "kube-system"
  - The node fails to join the k8s cluster
- Error: mismatched kubelet, kubeadm, and kubectl versions on k8s-master and k8s-node cause the node to fail to join the master
  - What was done:
  - Error output:
  - Cause:
  - Solution:
- Error: /etc/kubernetes/kubelet.conf already exists
  - Cause:
  - Solution:
- Rejoining a k8s-node to k8s-master
  - 1. Reset the k8s node
  - 2. Delete the configuration
  - 3. Rejoin the node to the k8s cluster
  - 4. Create the directory and configuration

Error: name: Invalid value: "openstack_controller"

name: Invalid value: "openstack_controller": a DNS-1123 subdomain must consist of lower case alphanumeric characters, '-' or '.', and must start and end with an alphanumeric character (e.g. 'example.com', regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*')

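Command executed:

kubeadm init --apiserver-advertise-address=192.168.2.111 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.15.4 \
  --service-cidr=10.1.0.0/16 \
  --pod-network-cidr=10.244.0.0/16

Solution:

Rename the k8s master node. The hostname "openstack_controller" contains an underscore, which a DNS-1123 subdomain does not allow; the new name may contain only lowercase letters, digits, '-' and '.'.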
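As a rough sketch, a candidate hostname can be checked against the regex quoted in the error message before running kubeadm init. The function name is_dns1123_subdomain and the sample name k8s-master are illustrative assumptions, and the check covers only the regex, not any length limit.

```shell
#!/bin/sh
# Return 0 if the argument matches the DNS-1123 subdomain regex quoted
# in the kubeadm error message, 1 otherwise.
is_dns1123_subdomain() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*$'
}

# Check the rejected name and one candidate replacement.
for name in openstack_controller k8s-master; do
    if is_dns1123_subdomain "$name"; then
        echo "$name: valid"
    else
        echo "$name: invalid"
    fi
done

# To rename the host (run as root; "k8s-master" is only an example name):
# hostnamectl set-hostname k8s-master
```

Of the two sample names, only k8s-master passes, because the underscore in openstack_controller is outside the allowed character set.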

Error: on k8s-master, kubectl get nodes shows every node as NotReady (workaround)

Errors in /var/log/messages:

[root@k8s-master ]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady master 15h v1.15.4

[root@k8s-master root]# cat /var/log/messages| tail -100

Jun 11 16:50:45 k8s-master kubelet: W0611 16:50:45.022114 3843 cni.go:213] Unable to update cni config: No networks found in /etc/cni/net.d

Jun 11 16:50:46 k8s-master kubelet: E0611 16:50:46.182436 3843 kubelet.go:2173] Container runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized

Jun 11 16:50:46 k8s-master su: (to root) root on none

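Solution:

Edit /var/lib/kubelet/kubeadm-flags.env (for example with vim /var/lib/kubelet/kubeadm-flags.env) and delete --network-plugin=cni from this line:

KUBELET_KUBEADM_ARGS=--cgroup-driver=systemd --cni-bin-dir=/opt/cni/bin --cni-conf-dir=/etc/cni/net.d --network-plugin=cni

Delete --network-plugin=cni on both the master node and the worker nodes, then restart the kubelet: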

systemctl restart kubelet
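The manual edit could also be scripted with sed. The sketch below is a hedged illustration: the function name strip_network_plugin_flag is made up here, and it runs against a temporary copy of the line rather than the real /var/lib/kubelet/kubeadm-flags.env, so it only prints what the edited file would look like.

```shell
#!/bin/sh
# Print the contents of a kubeadm-flags.env style file with the
# --network-plugin=cni flag (and the whitespace before it) removed.
strip_network_plugin_flag() {
    sed -E 's/[[:space:]]*--network-plugin=cni//' "$1"
}

# Demonstrate on a temporary file that holds the line shown above.
demo_file=$(mktemp)
printf '%s\n' 'KUBELET_KUBEADM_ARGS=--cgroup-driver=systemd --cni-bin-dir=/opt/cni/bin --cni-conf-dir=/etc/cni/net.d --network-plugin=cni' > "$demo_file"
strip_network_plugin_flag "$demo_file"
rm -f "$demo_file"
```

To change the real file, the same sed expression would be applied in place to /var/lib/kubelet/kubeadm-flags.env, after taking a backup, and followed by the kubelet restart.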

Error: on k8s-node, kubectl get nodes shows every node as NotReady (workaround)

What was done before the error?

1) Ran kubeadm join xxx on k8s-node, which successfully joined k8s-node to the k8s-master cluster.

2) Ran kubectl get nodes on k8s-master, which showed k8s-node as NotReady.

Solution:

Run on k8s-node:

1) Edit /var/lib/kubelet/kubeadm-flags.env (for example with vim) and delete --network-plugin=cni.

2) systemctl restart kubelet

Error: on k8s-master, kubectl get nodes shows every node as NotReady (root-cause fix)

Solution:

Download and apply the flannel manifest (on k8s-master):

[root@k8s-master ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[root@k8s-master ~]# kubectl apply -f kube-flannel.yml

Error: on k8s-node, kubectl get nodes shows every node as NotReady (root-cause fix)

Solution:

[root@k8s-node ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[root@k8s-node ~]# kubectl apply -f kube-flannel.yml

If this fails with:

unable to recognize "kube-flannel.yml": Get http://localhost:8080/api?timeout=32s: dial tcp [::1]:8080: connect: connection refused

Run on k8s-master:

scp /etc/kubernetes/admin.conf k8s-node:/etc/kubernetes/

Then continue on the k8s-node server:

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

Then run the apply again on k8s-node:

[root@k8s-node ~]# kubectl apply -f kube-flannel.yml

If an error appears again:

unable to recognize "kube-flannel.yml": Get http://localhost:8080/api?timeout=32s: dial tcp [::1]:8080: connect: connection refused

Check the kubelet log on the node:

journalctl -f -u kubelet.service

-- Logs begin at Sun 2022-05-22 15:56:39 CST. --

Jun 12 01:58:25 k8s-node1 kubelet[18185]: W0612 01:58:25.304325 18185 cni.go:213] Unable to update cni config: No networks found in /etc/cni/net.d # this is the key line

Jun 12 01:58:28 k8s-node1 kubelet[18185]: E0612 01:58:28.680599 18185 kubelet.go:2173] Container runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized

Jun 12 01:58:40 k8s-node1 kubelet[18185]: I0612 01:58:40.262575 18185 reconciler.go:203] operationExecutor.VerifyControllerAttachedVolume started for volume "config-volume" (UniqueName: "kubernetes.io/configmap/e044783d-f0c9-41d8-8072-e9689e183041-config-volume") pod "coredns-bccdc95cf-6nsqm" (UID: "e044783d-f0c9-41d8-8072-e9689e183041")

Jun 12 01:58:40 k8s-node1 kubelet[18185]: I0612 01:58:40.262765 18185 reconciler.go:203] operationExecutor.VerifyControllerAttachedVolume started for volume "coredns-token-crdjx" (UniqueName: "kubernetes.io/secret/e044783d-f0c9-41d8-8072-e9689e183041-coredns-token-crdjx") pod "coredns-bccdc95cf-6nsqm" (UID: "e044783d-f0c9-41d8-8072-e9689e183041")

Jun 12 01:58:40 k8s-node1 kubelet[18185]: I0612 01:58:40.362972 18185 reconciler.go:203] operationExecutor.VerifyControllerAttachedVolume started for volume "config-volume" (UniqueName: "kubernetes.io/configmap/1807838b-7ad6-4cb6-8058-06cad3b5ea95-config-volume") pod "coredns-bccdc95cf-s4j8z" (UID: "1807838b-7ad6-4cb6-8058-06cad3b5ea95")

Jun 12 01:58:40 k8s-node1 kubelet[18185]: I0612 01:58:40.363029 18185 reconciler.go:203] operationExecutor.VerifyControllerAttachedVolume started for volume "coredns-token-crdjx" (UniqueName: "kubernetes.io/secret/1807838b-7ad6-4cb6-8058-06cad3b5ea95-coredns-token-crdjx") pod "coredns-bccdc95cf-s4j8z" (UID: "1807838b-7ad6-4cb6-8058-06cad3b5ea95")

Jun 12 01:58:40 k8s-node1 kubelet[18185]: W0612 01:58:40.752013 18185 pod_container_deletor.go:75] Container "658a0cf5b5996d943d4d96001849bb5125cfa6271b537944e8215794c6820684" not found in pod's containers

Solution: copy the files under /etc/cni/net.d on the master to the affected node.
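Before and after copying, it may help to confirm whether the node's CNI directory actually contains a config file. The sketch below assumes that a CNI config is any file ending in .conf, .conflist or .json; the function name has_cni_config is illustrative, and the scp line in the comment is an assumed way to do the copy, not a command from the original notes.

```shell
#!/bin/sh
# Return 0 if the directory holds at least one CNI config file
# (.conf, .conflist or .json), 1 otherwise.
has_cni_config() {
    for f in "$1"/*.conf "$1"/*.conflist "$1"/*.json; do
        # An unmatched glob stays literal and fails the -e test.
        [ -e "$f" ] && return 0
    done
    return 1
}

if has_cni_config /etc/cni/net.d; then
    echo "CNI config found in /etc/cni/net.d"
else
    echo "no CNI config in /etc/cni/net.d"
fi

# Assumed copy step, run on the affected node (needs SSH access to the master):
# scp 'k8s-master:/etc/cni/net.d/*' /etc/cni/net.d/
```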

Finally, run on k8s-master:

Error: preflight failures when a node joins the Kubernetes master

Error output:

[root@k8s-master ~]# kubeadm join --token vnjgrg.olh7ojs0x3jp4wnr 111.167.19.21:6443 --discovery-token-ca-cert-hash sha256:ae85df1d602c969dea5a047dc04fdd3603045053df6b8e5f662472dfe4f9d017

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.14. Latest validated version: 18.09

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR DirAvailable--etc-kubernetes-manifests]: /etc/kubernetes/manifests is not empty

[ERROR FileAvailable--etc-kubernetes-kubelet.conf]: /etc/kubernetes/kubelet.conf already exists

[ERROR Port-10250]: Port 10250 is in use

[ERROR FileAvailable--etc-kubernetes-pki-ca.crt]: /etc/kubernetes/pki/ca.crt already exists

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

Solution:

On the node, first run the following command to clean up what kubeadm did earlier, then rerun the join command: kubeadm reset
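To see which of the files named in the preflight errors are still present, before or after kubeadm reset, something like the following could be used. It is a minimal sketch: list_kubeadm_leftovers and its optional root-prefix argument are hypothetical helpers for dry runs, and the port 10250 check is deliberately left out.

```shell
#!/bin/sh
# Print the kubeadm leftovers that the preflight check complains about.
# $1 is an optional root prefix (hypothetical, useful for testing);
# with no argument the real filesystem is inspected.
list_kubeadm_leftovers() {
    root="${1:-}"
    for f in /etc/kubernetes/kubelet.conf /etc/kubernetes/pki/ca.crt; do
        [ -e "$root$f" ] && echo "$f"
    done
    manifests="$root/etc/kubernetes/manifests"
    # Report the manifests directory only when it is not empty.
    if [ -d "$manifests" ] && [ -n "$(ls -A "$manifests")" ]; then
        echo "/etc/kubernetes/manifests"
    fi
    return 0
}

list_kubeadm_leftovers
```

An empty result suggests the file-related preflight checks should pass on the next kubeadm join.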

Error: The connection to the server 11.89.46.19:6443 was refused - did you specify the right host or port?

Command executed:

[root@k8s-master root]# kubectl get nodes

Error output (on k8s-master):

The connection to the server 11.89.46.19:6443 was refused - did you specify the right host or port?

Solution:

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config # the key step

chown $(id -u):$(id -g) $HOME/.kube/config

Error: error execution phase preflight: couldn't validate the identity of the API Server: abort connecting

Command executed:

Joining k8s-node to k8s-master.

Error details:

[root@k8s-node1 root]# kubeadm join 11.17.16.13:6443 --token y7t804.giub7rn6rldhpxig --discovery-token-ca-cert-hash sha256:1b8ad1f8e140740d325beb52e6717f44abfx4319a0baf09d10cf38695cbd5d94

[preflight] Running pre-flight checks

error execution phase preflight: couldn't validate the identity of the API Server: Get "https://11.167.169.13:6443/api/v1/namespaces/kube-public/configmaps/cluster-info?timeout=10s": dial tcp 11.167.169.13:6443: connect: connection refused

To see the stack trace of this error execute with --v=5 or higher

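Solution 1:

Cause: the token has expired.

In this case, generate a new token with kubeadm.

[root@master ~]# kubeadm token generate # generate a token

7r3l16.5yzfksso5ty2zzie # this value is used in the next command

[root@master ~]# kubeadm token create 7r3l16.5yzfksso5ty2zzie --print-join-command --ttl=0 # create the token and print the join command (--ttl=0 means the token never expires)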

W0604 10:35:00.523781 14568 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0604 10:35:00.523827 14568 validation.go:28] Cannot validate kubelet config - no validator is available

kubeadm join 192.168.254.100:6443 --token 7r3l16.5yzfksso5ty2zzie --discovery-token-ca-cert-hash sha2

Then run the kubeadm join command printed above on the node you want to add.
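A copied join command is easy to truncate or mistype, so a quick format check on the token and CA hash can catch that before running kubeadm join. This is a sketch under stated assumptions: the function names are made up, the token pattern is the kubeadm bootstrap token format (six characters, a dot, sixteen characters), and the hash is assumed to be sha256: followed by 64 hexadecimal characters. It checks format only and cannot tell whether a token has expired.

```shell
#!/bin/sh
# Bootstrap tokens have the form [a-z0-9]{6}.[a-z0-9]{16}.
is_valid_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# The discovery CA hash is "sha256:" plus 64 hexadecimal characters.
is_valid_ca_hash() {
    printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-fA-F]{64}$'
}

# The token generated above is well formed.
is_valid_token "7r3l16.5yzfksso5ty2zzie" && echo "token format ok"

# A hash cut off during copy and paste is rejected.
is_valid_ca_hash "sha2" || echo "hash is truncated or malformed"
```

Whether a well-formed token is still valid can be checked on the master with kubeadm token list.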

Solution 2:

Cause: the k8s API server is unreachable.

In this case, check firewalld and SELinux on every server and turn them off:

[root@master ~]# setenforce 0

[root@master ~]# sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

[root@master ~]# systemctl disable firewalld --now

Error: error execution phase preflight: unable to fetch the kubeadm-config ConfigMap: failed to get config map: Unauthorized

Operation performed:

The error occurs when k8s-node joins k8s-master:

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

error execution phase preflight: unable to fetch the kubeadm-config ConfigMap: failed to get config map: Unauthorized

Cause: the token has expired.

Solution:

# Run on the master

[root@k8s-master ~]# kubeadm token create # generate a new token

56ehzj.hpxea29zdu2w45hf

[root@k8s-master ~]# kubeadm token create --print-join-command # print the command for joining the cluster

kubeadm join 192.168.191.133:6443 --token wagma2.huev9ihugawippas --discovery-token-ca-cert-hash sha256:9f90161043001c0c75fac7d61590734f844ee507526e948f3647d7b9cfc1362d

# Run on the node

[root@k8s-node2 ~]# kubeadm join 192.168.191.133:6443 --token wagma2.huev9ihugawippas --discovery-token-ca-cert-hash sha256:9f90161043001c0c75fac7d61590734f844ee507526e948f3647d7b9cfc1362d

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.14" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

# Success!

# On the master, you can check whether the node has joined:

[root@k8s-master ~]# kubectl get nodes


Error: error execution phase kubelet-start: configmaps "kubelet-config-1.19" is forbidden: User "system:bootstrap:xvnp3x" cannot get resource "configmaps" in API group "" in the namespace "kube-system"

The node fails to join the k8s cluster:

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.19" ConfigMap in the kube-system namespace

error execution phase kubelet-start: configmaps "kubelet-config-1.19" is forbidden: User "system:bootstrap:xvnp3x" cannot get resource "configmaps" in API group "" in the namespace "kube-system"

Error: mismatched kubelet, kubeadm, and kubectl versions on k8s-master and k8s-node cause the node to fail to join the master

What was done:

[root@k8s-node1 root]# kubeadm join 11.15.42.9:6443 --token 14wipl.w7quasjzx1um2nn3 --discovery-token-ca-cert-hash sha256:1b8ad1f8e140740d325beb52e6717f44abf34319a0baf09d10cf38695cbd5d94

Error output:

[root@k8s-node1 root]# kubeadm join 11.15.4.19:6443 --token 14wipl.w7quasjzx1um2nn3 --discovery-token-ca-cert-hash sha256:1b8ad1f8e140740d325beb52e6717f44abf34319a0baf09d10cf38695cbd5d94

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

W0611 23:56:55.887488 26950 utils.go:69] The recommended value for "resolvConf" in "KubeletConfiguration" is: /run/systemd/resolve/resolv.conf; the provided value is: /etc/resolv.conf

error execution phase preflight: unable to fetch the kubeadm-config ConfigMap: this version of kubeadm only supports deploying clusters with the control plane version >= 1.22.0. Current version: v1.15.4

To see the stack trace of this error execute with --v=5 or higher

Cause:

The key line in the error log is:

this version of kubeadm only supports deploying clusters with the control plane version >= 1.22.0. Current version: v1.15.4

The kubelet version on the node does not match the master (version on the master in the example below: kubelet-1.14.0).
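The comparison behind that error message can be sketched in shell. The helper version_at_least is an illustrative name, and the approach assumes sort -V (version sort, as in GNU coreutils) is available; the two version strings are taken from the error log above.

```shell
#!/bin/sh
# Return 0 if version $1 is >= version $2 (a leading "v" is ignored).
# Uses sort -V so that 1.9.0 sorts before 1.22.0.
version_at_least() {
    have="${1#v}"
    want="${2#v}"
    [ "$(printf '%s\n%s\n' "$want" "$have" | sort -V | head -n 1)" = "$want" ]
}

control_plane="v1.15.4"   # "Current version" in the error message
minimum="1.22.0"          # minimum control plane version this kubeadm supports

if version_at_least "$control_plane" "$minimum"; then
    echo "kubeadm on the node can join this control plane"
else
    echo "control plane $control_plane is older than $minimum: install matching kubeadm/kubelet/kubectl on the node"
fi
```

With the versions from the log the else branch runs, which matches the fix: install the packages on the node at the master's version.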

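Solution: on the node, uninstall the current packages and install the versions that match the master (1.14.0 in this example):

yum -y remove kubelet kubeadm kubectl # uninstall the currently installed kube packages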

yum -y install kubelet-1.14.0

yum -y install kubectl-1.14.0

yum -y install kubeadm-1.14.0

systemctl start kubelet && systemctl enable kubelet

kubeadm join 192.168.191.133:6443 --token xvnp3x.pl6i8ikcdoixkaf0 \

--discovery-token-ca-cert-hash sha256:9f90161043001c0c75fac7d61590734f844ee507526e948f3647d7b9cfc1362d

Error: /etc/kubernetes/kubelet.conf already exists

[preflight] Running pre-flight checks

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileAvailable--etc-kubernetes-kubelet.conf]: /etc/kubernetes/kubelet.conf already exists

[ERROR FileAvailable--etc-kubernetes-pki-ca.crt]: /etc/kubernetes/pki/ca.crt already exists

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

Cause:

Leftover files are still present on the node.

Solution:

Run on k8s-node:

rm -rf /etc/kubernetes/kubelet.conf /etc/kubernetes/pki/ca.crt # delete the leftover k8s config file and certificate file

kubeadm join 192.168.191.133:6443 --token xvnp3x.pl6i8ikcdoixkaf0 \

--discovery-token-ca-cert-hash sha256:9f90161043001c0c75fac7d61590734f844ee507526e948f3647d7b9cfc1362d

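Rejoining a k8s-node to k8s-master

When using k8s, many people have run kubeadm join to add a node to the master and hit the error "error execution phase preflight: couldn't validate the identity of the API Server: abort connecting to API servers after timeout of 5m0s". In other words, the node cannot be brought under management, and the connection attempt is abandoned after the five-minute timeout. The details are as follows: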

[root@node1 ~]# kubeadm join 19.18.24.100:6443 --token 7r3l16.5yzfksxd5ty2zzie --discovery-token-ca-cert-hash sha256:56281a8be264fa334bb98cac5206aa190527xc3180c9f397c253ece41d997e8a

W0604 10:35:39.924306 13660 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

error execution phase preflight: couldn't validate the identity of the API Server: abort connecting to API servers after timeout of 5m0s

To see the stack trace of this error execute with --v=5 or higher

1. Reset the k8s node

[root@k8s-master root]# kubeadm reset

2. Delete the configuration

[root@k8s-master root]# rm -rf $HOME/.kube/config

[root@k8s-master root]# rm -rf /var/lib/etcd

3. Rejoin the node to the k8s cluster

[root@k8s-node root]# kubeadm join 12.18.26.10:16443 --token abcdef.0123456789abcdef

4. Create the directory and configuration

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config