SAM: Segment Anything

Meta: Segment Anything

Overview: https://ai.facebook.com/research/publications/segment-anything/

Demo: https://segment-anything.com/demo#

Paper: https://arxiv.org/abs/2304.02643

GitHub: https://github.com/facebookresearch/segment-anything

Abstract

We introduce the Segment Anything (SA) project: a new task, model, and dataset for image segmentation. Using our efficient model in a data collection loop, we built the largest segmentation dataset to date (by far), with over 1 billion masks on 11M licensed and privacy respecting images. The model is designed and trained to be promptable, so it can transfer zero-shot to new image distributions and tasks. We evaluate its capabilities on numerous tasks and find that its zero-shot performance is impressive – often competitive with or even superior to prior fully supervised results. We are releasing the Segment Anything Model (SAM) and corresponding dataset (SA-1B) of 1B masks and 11M images at https://segment-anything.com to foster research into foundation models for computer vision.
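The abstract describes SAM as promptable. The paper says that in the fully automatic stage of data collection, the model was prompted with a regular 32×32 grid of points, and each point produced candidate masks. The pure-Python sketch below only shows how such a grid of normalized point prompts could be built and scaled to pixel coordinates. The function names `build_point_grid` and `to_pixel_prompts` are hypothetical helpers for illustration, not the `segment_anything` API, and the model call itself is omitted. Label `1` marks a foreground point, following the usual foreground/background point-label convention.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def build_point_grid(n_per_side: int) -> List[Point]:
    """Return n_per_side**2 normalized (x, y) points, one at the center of each grid cell."""
    if n_per_side <= 0:
        raise ValueError("n_per_side must be positive")
    step = 1.0 / n_per_side
    # Offset by half a cell so no point lies on the image border.
    coords = [step * (i + 0.5) for i in range(n_per_side)]
    # Row-major order: y varies slowest, x fastest.
    return [(x, y) for y in coords for x in coords]


def to_pixel_prompts(points: List[Point], width: int, height: int) -> List[Tuple[Point, int]]:
    """Scale normalized points to pixel coordinates; each becomes a foreground (label 1) point prompt."""
    return [((x * width, y * height), 1) for x, y in points]


if __name__ == "__main__":
    grid = build_point_grid(32)  # 32 x 32 grid, as described for SAM's fully automatic stage
    prompts = to_pixel_prompts(grid, width=1024, height=768)
    print(len(prompts))  # 1024
    print(prompts[0])  # ((16.0, 12.0), 1)
```

Each grid point would then be sent to the model as a single-point prompt. According to the paper, the resulting masks are filtered for confidence and stability, and duplicates are removed with non-maximal suppression.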

SEEM: Segment Everything Everywhere All At Once

![SEEM](https://files.mdnice.com/user/44170/30a091e7-8ab4-4dc7-9b00-5d275d504d95.png)

Demo: https://huggingface.co/spaces/xdecoder/SEEM

Instructions:

Try our default examples first (sketches are not automatically drawn on the input and example images);

For the video demo, processing takes about 30-60 s; please refresh if you encounter an error while uploading;

Upload an image/video (if you want to use a referred region from another image, check “Example” and upload the other image in the referring image panel);

Select at least one type of prompt (if you want to use a referred region from another image, check “Example”);

Remember to provide the actual prompt for each prompt type you select, otherwise you will get an error (e.g., remember to draw on the referring image);

By default, our model supports the vocabulary of the 133 COCO categories; other objects will be classified as ‘others’ or misclassified.

SEEM: Microsoft's new work built on a large vision model, segmenting “Everything Everywhere All at Once”

Paper title:

Segment Everything Everywhere All at Once

Paper link:

https://arxiv.org/abs/2304.06718

Project:

https://github.com/ux-decoder/segment-everything-everywhere-all-at-once

Demo:

https://36771ee9c49a4631.gradio.app/

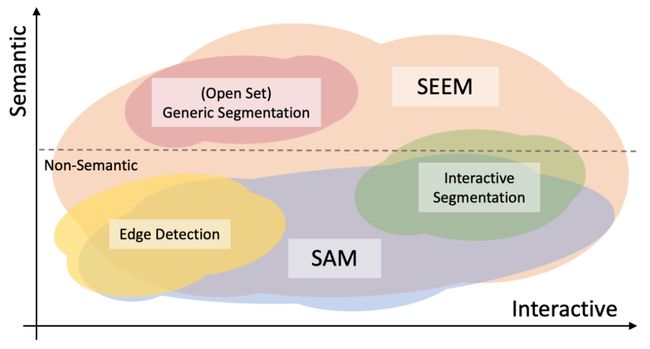

Image segmentation: SAM and SEEM

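In segmentation, SAM, released by Meta in April 2023, offers a general-purpose, fully automatic image segmentation method. Its novelty is that a single model performs both interactive segmentation and automatic segmentation. Its flexible prompt interface also lets it adapt to new tasks and domains. It addresses two limitations of traditional methods: they need large amounts of manual annotation, and they are restricted to specific object categories. As a result, SAM is broadly applicable and scalable.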
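In automatic mode, the SAM paper keeps only confident and stable masks. A mask counts as stable if thresholding its probability map at 0.5 − δ and 0.5 + δ gives similar masks. The pure-Python sketch below computes such a stability score as the IoU of the two binarized masks and uses it to filter candidates. The helper names, the default δ = 0.05, and the `min_score` cutoff are illustrative assumptions, not values or functions taken from the `segment_anything` repository.

```python
from typing import List, Sequence

ProbMap = List[List[float]]  # per-pixel foreground probabilities in [0, 1]
BinMask = List[List[bool]]


def binarize(prob_map: ProbMap, threshold: float) -> BinMask:
    """Mark a pixel as foreground when its probability exceeds the threshold."""
    return [[p > threshold for p in row] for row in prob_map]


def mask_iou(a: BinMask, b: BinMask) -> float:
    """Intersection over union of two same-sized binary masks (0.0 if both are empty)."""
    pairs = [(x, y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    inter = sum(1 for x, y in pairs if x and y)
    union = sum(1 for x, y in pairs if x or y)
    return inter / union if union else 0.0


def stability_score(prob_map: ProbMap, delta: float = 0.05) -> float:
    """IoU between the masks obtained by thresholding at 0.5 + delta and 0.5 - delta."""
    return mask_iou(binarize(prob_map, 0.5 + delta), binarize(prob_map, 0.5 - delta))


def keep_stable(prob_maps: Sequence[ProbMap], min_score: float, delta: float = 0.05) -> List[int]:
    """Indices of the candidate masks whose stability score reaches min_score."""
    return [i for i, m in enumerate(prob_maps) if stability_score(m, delta) >= min_score]


if __name__ == "__main__":
    sharp = [[0.99, 0.98], [0.02, 0.01]]  # confident: same mask at both thresholds
    blurry = [[0.9, 0.5], [0.52, 0.1]]  # borderline pixels flip between thresholds
    print(stability_score(sharp))  # 1.0
    print(stability_score(blurry))  # 0.3333333333333333
    print(keep_stable([sharp, blurry], min_score=0.9))  # [0]
```

This sketch scores an all-background map as 0.0 so that empty masks are discarded. That choice is an assumption of the example, not something stated in the paper.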
