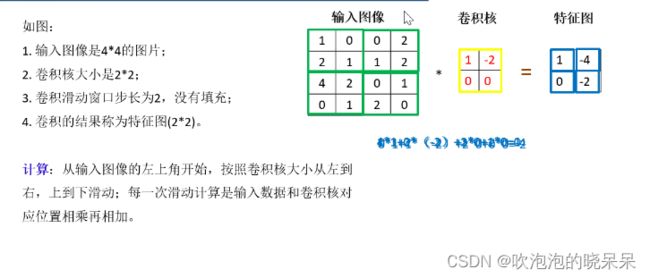

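Convolutional Neural Network Notes

Pay attention to dimensionality reduction throughout this process.

Handwritten digit recognition with fully connected layers:

(1) Build the dataset: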

import gzip
import os
import struct

import numpy as np

# import cv2

def load_images(filename):
    """Load images from a gzipped IDX file.

    filename: the name of the file containing data
    return -- an array of shape (num, rows, columns) holding the images
    """
    with gzip.GzipFile(filename) as g_file:
        data = g_file.read()
    # Header: magic number, image count, rows, columns (big-endian int32)
    magic, num, rows, columns = struct.unpack('>iiii', data[:16])
    dimension = rows * columns
    X = np.zeros((num, rows, columns), dtype='uint8')
    offset = 16
    for i in range(num):
        a = np.frombuffer(data, dtype=np.uint8, count=dimension, offset=offset)
        X[i] = a.reshape((rows, columns))
        # X[i] = cv2.resize(a.reshape((rows, columns)), (32, 32), interpolation=cv2.INTER_AREA)  # resize to 32x32
        offset += dimension
    return X

def load_labels(filename):
    """Load labels from a gzipped IDX file.

    filename: the name of the file containing data
    return -- a row vector containing labels
    """
    with gzip.GzipFile(filename) as g_file:
        data = g_file.read()
    # Header: magic number, label count (big-endian int32)
    magic, num = struct.unpack('>ii', data[:8])
    d = np.frombuffer(data, dtype=np.uint8, count=num, offset=8)
    return d

def load_data(foldername):
    """Load the MNIST dataset.

    foldername: the name of the folder containing datasets
    return -- train_X, train_y, test_X, test_y
        train_X: training images
        train_y: labels for the training images
        test_X: test images
        test_y: labels for the test images
    """
    # filenames of datasets
    train_X_name = "train-images-idx3-ubyte.gz"
    train_y_name = "train-labels-idx1-ubyte.gz"
    test_X_name = "t10k-images-idx3-ubyte.gz"
    test_y_name = "t10k-labels-idx1-ubyte.gz"
    train_X = load_images(os.path.join(foldername, train_X_name))
    train_y = load_labels(os.path.join(foldername, train_y_name))
    test_X = load_images(os.path.join(foldername, test_X_name))
    test_y = load_labels(os.path.join(foldername, test_y_name))
    return train_X, train_y, test_X, test_y

class DatasetTrans():
    """Split training data into batches."""

    def __init__(self, X, y, batch_size):
        """
        param:
            X, y: data read from file
            batch_size: size of each batch
        """
        self.x = X
        self.y = y
        self.batch_size = batch_size
        self.total = self.x.shape[0]
        self.nbatch = int(self.total / batch_size)

    def get_batch(self, n):
        """Get one batch of data.

        param: n, the index of the batch
        return: inputs, one batch of images with shape (N, rows, columns);
                label2, the matching one-hot labels with shape (N, 10)
        """
        start = n * self.batch_size
        end = (1 + n) * self.batch_size
        if end >= self.total:
            inputs = self.x[start:]
            label = self.y[start:]
        else:
            inputs = self.x[start:end]
            label = self.y[start:end]
        # Optional flattening of the images to (N, 784), left disabled:
        # inputs2 = np.zeros((inputs.shape[0], inputs.shape[1] * inputs.shape[2]))
        # for i in range(inputs.shape[0]):
        #     inputs2[i] = inputs[i].flatten()
        # One-hot encode the labels to (N, 10)
        label2 = np.zeros((label.shape[0], 10))
        for i in range(label.shape[0]):
            v = label[i]
            label2[i][v] = 1
        return inputs, label2

if __name__ == "__main__":
    train_X, train_y, test_X, test_y = load_data("./data/MNIST")
    print("===: ", train_X.shape, train_y.shape, test_X.shape, test_y.shape)

    import cv2

    # Export every training image as "<index>_<label>.jpg"
    dir_name = "../MNIST/train/"
    os.makedirs(dir_name, exist_ok=True)
    for i in range(train_X.shape[0]):
        cv2.imwrite(os.path.join(dir_name, "{}_{}.jpg".format(i, train_y[i])), train_X[i])

    # Export every test image the same way
    dir_name = "../MNIST/test/"
    os.makedirs(dir_name, exist_ok=True)
    for i in range(test_X.shape[0]):
        cv2.imwrite(os.path.join(dir_name, "{}_{}.jpg".format(i, test_y[i])), test_X[i])
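The loaders above rely on the IDX layout: a big-endian header (magic number, item count, and for image files the row and column counts) followed by raw unsigned bytes. As a minimal sketch of that layout, the snippet below builds a tiny synthetic IDX image file in memory and parses it in one vectorized step rather than with a per-image loop. The helper names `make_idx_images` and `parse_idx_images` and the synthetic data are assumptions made for illustration; they are not part of the original code.

```python
import gzip
import struct

import numpy as np


def make_idx_images(images):
    """Hypothetical helper: encode a (num, rows, cols) uint8 array as gzipped IDX bytes."""
    num, rows, cols = images.shape
    # 2051 is the magic number used by IDX image files such as the MNIST ones
    header = struct.pack('>iiii', 2051, num, rows, cols)
    return gzip.compress(header + images.astype(np.uint8).tobytes())


def parse_idx_images(blob):
    """Hypothetical helper: decode gzipped IDX image bytes into a (num, rows, cols) array."""
    data = gzip.decompress(blob)
    magic, num, rows, cols = struct.unpack('>iiii', data[:16])
    # Read all pixels at once, then reshape; no per-image loop is needed
    pixels = np.frombuffer(data, dtype=np.uint8, count=num * rows * cols, offset=16)
    return magic, pixels.reshape(num, rows, cols)


# Synthetic stand-in for real data: two 3x4 "images"
fake = np.arange(2 * 3 * 4, dtype=np.uint8).reshape(2, 3, 4)
magic, decoded = parse_idx_images(make_idx_images(fake))
print(magic, decoded.shape)
```

The round trip returns the same array that was encoded, which is a quick way to check that the header offsets (16 bytes for images, 8 for labels) are handled correctly.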

Model file: build the model:

# -*- coding: utf-8 -*-
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

"""
Created on Mon Fri 17 10:58:36 2021
@author: iflysse
"""

from tensorflow.keras.models import Model
from tensorflow.keras.layers import Input  # builds the input layer
from tensorflow.keras.layers import Dense  # builds a fully connected layer
from tensorflow.keras.layers import Flatten  # flattens feature maps into a vector
from tensorflow.keras.layers import Conv2D  # 2D convolution layer
from tensorflow.keras.layers import MaxPooling2D  # max pooling

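# Build the convolutional neural network
def LeNet(input_shape=(28, 28, 1), classes=10):  # image size and channels, number of classes
    # Input
    inputs = Input(shape=input_shape)
    # C1: convolution with 6 kernels of size 5x5, ReLU activation
    x = Conv2D(6, kernel_size=(5, 5), activation='relu')(inputs)
    # S2: 2x2 max pooling
    x = MaxPooling2D(pool_size=(2, 2))(x)
    # C3: convolution with 16 kernels of size 5x5, ReLU activation
    x = Conv2D(16, kernel_size=(5, 5), activation='relu')(x)
    # S4: 2x2 max pooling
    x = MaxPooling2D(pool_size=(2, 2))(x)
    # C5: flatten to a vector, then a fully connected layer with 120 neurons
    x = Flatten()(x)
    x = Dense(120, activation='relu')(x)
    # F6: fully connected layer with 84 neurons
    x = Dense(84, activation='relu')(x)
    # Output layer: one neuron per class; softmax yields class probabilities
    x = Dense(classes, activation='softmax')(x)
    model = Model(inputs=inputs, outputs=x, name='LeNet')  # assemble the model
    model.summary()  # print the model architecture to the console
    return model


if __name__ == '__main__':
    LeNet(input_shape=(28, 28, 1), classes=10)  # build the model and print its summary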
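To make the dimensionality reduction in C1 and S2 concrete, here is a minimal NumPy sketch of a single-channel convolution without padding followed by 2x2 max pooling. It is an illustration under simplifying assumptions (one input channel, one kernel, no bias or activation, random data) and not how Keras implements these layers; the function names `conv2d_valid` and `max_pool_2x2` are made up for this example.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with stride 1 and no padding (no kernel flip)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool_2x2(feature_map):
    """Keep the maximum of each non-overlapping 2x2 window."""
    h, w = feature_map.shape
    trimmed = feature_map[:h - h % 2, :w - w % 2]  # drop an odd trailing row/column
    return trimmed.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


rng = np.random.default_rng(0)
image = rng.random((28, 28))   # stand-in for one MNIST digit
kernel = rng.random((5, 5))    # stand-in for one learned 5x5 kernel

c1 = conv2d_valid(image, kernel)  # 28 - 5 + 1 = 24 per side
s2 = max_pool_2x2(c1)             # 24 / 2 = 12 per side
print(c1.shape, s2.shape)
```

Each 5x5 convolution without padding shrinks the side length by 4, and each 2x2 pooling halves it, which is why the feature maps go 28 → 24 → 12 → 8 → 4 in the network above.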

Output:

Model: "LeNet"
_________________________________________________________________
 Layer (type)                    Output Shape          Param #
=================================================================
 input_1 (InputLayer)            [(None, 28, 28, 1)]   0
 conv2d (Conv2D)                 (None, 24, 24, 6)     156
 max_pooling2d (MaxPooling2D)    (None, 12, 12, 6)     0
 conv2d_1 (Conv2D)               (None, 8, 8, 16)      2416
 max_pooling2d_1 (MaxPooling2D)  (None, 4, 4, 16)      0
 flatten (Flatten)               (None, 256)           0
 dense (Dense)                   (None, 120)           30840    <- many parameters
 dense_1 (Dense)                 (None, 84)            10164
 dense_2 (Dense)                 (None, 10)            850      <- parameter count gradually decreases
=================================================================
Total params: 44,426
Trainable params: 44,426
Non-trainable params: 0
_________________________________________________________________
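The shapes and parameter counts in the summary can be checked by hand. A convolution without padding maps a side length n to n − k + 1 for a k×k kernel, a 2x2 pooling halves it, a convolution layer has (k·k·in_channels + 1)·filters parameters, and a dense layer has (inputs + 1)·units, where the +1 is the bias. The short sketch below redoes that arithmetic; the helper names are assumptions introduced for this example.

```python
def conv_output_size(size, kernel, stride=1):
    """Side length after a convolution without padding."""
    return (size - kernel) // stride + 1


def conv_params(kernel, in_channels, filters):
    """Weights plus one bias per filter."""
    return (kernel * kernel * in_channels + 1) * filters


def dense_params(inputs, units):
    """Weights plus one bias per unit."""
    return (inputs + 1) * units


size = 28
size = conv_output_size(size, 5)  # C1: 24
size = size // 2                  # S2: 12
size = conv_output_size(size, 5)  # C3: 8
size = size // 2                  # S4: 4
flat = size * size * 16           # Flatten: 4 * 4 * 16 = 256

params = [
    conv_params(5, 1, 6),     # conv2d:   156
    conv_params(5, 6, 16),    # conv2d_1: 2416
    dense_params(flat, 120),  # dense:    30840
    dense_params(120, 84),    # dense_1:  10164
    dense_params(84, 10),     # dense_2:  850
]
print(flat, params, sum(params))  # total: 44426
```

The first dense layer dominates because it connects all 256 flattened values to 120 neurons; the convolution layers stay small because each kernel is shared across the whole image.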

After building the model, we train it:

import os

import tensorflow.keras
from tensorflow.keras.optimizers import SGD

from data_manager import load_data
from Lenet import LeNet

learning_rate = 0.01

# 1. Load the raw dataset
x_train, y_train, x_test, y_test = load_data("./data/MNIST")

# The input is the MNIST dataset: add a channel axis and scale pixels to [0, 1]
x_train = x_train.reshape(x_train.shape[0], 28, 28, 1)
x_test = x_test.reshape(x_test.shape[0], 28, 28, 1)
x_train = x_train / 255
x_test = x_test / 255

# One-hot encode the labels
y_train = tensorflow.keras.utils.to_categorical(y_train, 10)
y_test = tensorflow.keras.utils.to_categorical(y_test, 10)

# Build the LeNet model
model = LeNet(input_shape=(28, 28, 1), classes=10)

# Compile to configure the learning process.
# An SGD optimizer is constructed here, but compile() below trains with Adam.
sgd = SGD(learning_rate=learning_rate, momentum=0.9, nesterov=True)
model.compile(loss=tensorflow.keras.losses.categorical_crossentropy,
              optimizer=tensorflow.keras.optimizers.Adam(),
              metrics=['accuracy'])

model.fit(x_train, y_train, batch_size=64, epochs=30, verbose=1,
          validation_data=(x_test, y_test))

score = model.evaluate(x_test, y_test)
print('Test Loss:', score[0])
print('Test accuracy:', score[1])

# Save the model
save_dir = 'weights/LeNet/'
os.makedirs(save_dir, exist_ok=True)
model.save(save_dir + 'LeNet.h5')
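The preprocessing in the training script does two things: it turns integer labels into one-hot vectors and it scales pixel values from 0..255 to 0..1 while adding a channel axis. The NumPy-only sketch below reproduces both steps on made-up data so the resulting shapes can be inspected without TensorFlow. The functions `one_hot` and `to_model_input` are illustrative names, not part of the original scripts.

```python
import numpy as np


def one_hot(labels, num_classes=10):
    """One-hot encode integer class labels by indexing rows of an identity matrix."""
    return np.eye(num_classes, dtype=np.float32)[labels]


def to_model_input(images):
    """Add a channel axis and scale uint8 pixels to the range [0, 1]."""
    return images.reshape(images.shape[0], 28, 28, 1).astype(np.float32) / 255.0


# Made-up stand-ins for three MNIST samples
labels = np.array([5, 0, 4], dtype=np.uint8)
images = np.full((3, 28, 28), 255, dtype=np.uint8)

y = one_hot(labels)
x = to_model_input(images)
print(y.shape, x.shape, x.max())
```

Each row of `y` contains a single 1 at the index of its class, which is the target format that categorical cross-entropy expects.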

Training output: the script first prints the same LeNet model summary as shown above (44,426 parameters in total).

Prediction and application: image classification:

import os

import numpy as np
import tensorflow as tf
from Lenet import LeNet
from PIL import Image
import matplotlib.pyplot as plt


def handel_image(img_dir):
    '''
    Convert an image into the input format this experiment expects.
    Output shape: [1, 28, 28, 1]
    1. Open and display the image at the given path
    2. Convert the image to black and white
    3. Resize the image to 28x28
    4. Convert the image to an array
    5. Reshape the array to [1, 28, 28, 1]
    :param img_dir: image path
    :return: the image as an array
    '''
    # 1. Open the image with Image.open
    image = Image.open(img_dir)
    # 2. Display the image with plt
    plt.imshow(image)
    plt.show()
    # 3. Convert to a 1-bit black-and-white image with image.convert('1')
    image = image.convert('1')
    # 4. Resize to 28x28 with image.resize (this scales the image, it does not crop it)
    image = image.resize((28, 28))
    # 5. Convert to a NumPy array of floats
    image = np.array(image).astype(np.float32)
    # 6. Set the channel count to 1 (grayscale), then add a batch axis
    image = image.reshape([28, 28, 1])
    image = np.expand_dims(image, 0)
    return image

if __name__ == "__main__":
    # 1. Load the model
    # Build the LeNet network
    model = LeNet(input_shape=(28, 28, 1), classes=10)
    # Load the trained weights
    model.load_weights('weights/LeNet/LeNet.h5')

    # 2. Preprocess the image
    imag_data = handel_image("./data/test_image_300x300.png")

    # 3. Run the prediction
    out = model(imag_data)
    print("out:", out)
    print("argmax(out, 1):", tf.argmax(out, 1))
    cls = int(tf.argmax(out, 1)[0])  # index of the most probable class
    confidence = float(out[0][cls])  # its softmax probability
    print("Class: {}, confidence: {}".format(cls, confidence))

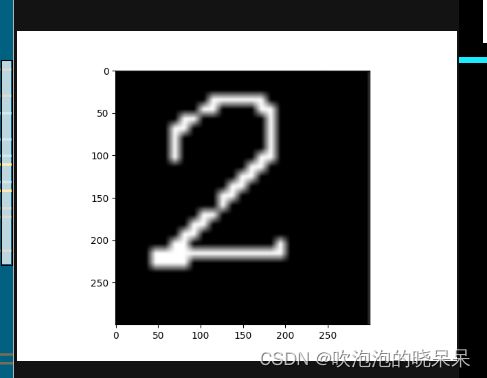

Prediction output:


out: tf.Tensor(
[[4.70090377e-14 1.70552225e-10 1.00000000e+00 7.36586764e-11
  4.08429611e-13 7.50180222e-17 7.19841686e-16 4.18770487e-13
  4.17118759e-13 1.03071224e-16]], shape=(1, 10), dtype=float32)
argmax(out, 1): tf.Tensor([2], shape=(1,), dtype=int64)
Class: 2, confidence: 1.0

As the output shows, the softmax vector has ten entries, one per digit. The entry at index 2 is approximately 1.0 while every other entry is below 1e-9, so argmax returns 2 and the image is classified as the digit 2 with a confidence of about 1.0.
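Reading the prediction comes down to two operations on the softmax vector: take the index of the largest entry as the class and the value at that index as the confidence. The sketch below repeats this with NumPy on the probabilities printed above, and additionally ranks the three most likely classes, which is an extra step not present in the original script.

```python
import numpy as np

# Softmax output copied from the printed prediction result
probs = np.array([[4.70090377e-14, 1.70552225e-10, 1.00000000e+00, 7.36586764e-11,
                   4.08429611e-13, 7.50180222e-17, 7.19841686e-16, 4.18770487e-13,
                   4.17118759e-13, 1.03071224e-16]], dtype=np.float32)

cls = int(np.argmax(probs, axis=1)[0])   # most probable class
confidence = float(probs[0, cls])        # its probability
top3 = np.argsort(probs[0])[::-1][:3]    # classes ranked by probability, best first

print(cls, confidence, top3.tolist())
```

A confidence this close to 1.0 means the network is very certain about this particular image; it says nothing about accuracy on other inputs.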