Deploying ELK with docker-compose

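- Base environment installation
  - Installing docker-compose
  - Installing git
  - Installing command completion
    - System command completion
    - docker-compose command completion
- Deploying ELK with docker-compose
  - Cloning the GitHub project
  - docker-compose setup
  - Starting with docker-compose (creates the containers and the docker network)
  - Stopping with docker-compose (removes the containers and the docker network)
  - Stopping with docker-compose (keeps the containers and the docker network)
  - Starting with docker-compose (restart from the stopped state)
  - Possible problems after startup
- Logstash port test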

Base environment installation

docker-compose

git

Command completion

Installing docker-compose

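curl -L https://github.com/docker/compose/releases/download/1.28.2/docker-compose-`uname -s`-`uname -m` -o /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose

It is recommended to place the binary in /usr/local/bin/, the directory for locally installed third-party software, to keep it separate from /usr/bin/, where the system installs its own packages by default.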
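To make clear what the backtick substitutions in the curl command expand to, here is a minimal Python sketch that builds the same download URL. The function name `compose_download_url` is hypothetical; it assumes that `platform.system()` and `platform.machine()` report the same values as `uname -s` and `uname -m`, which is generally the case on Linux.

```python
import platform


def compose_download_url(version, system=None, machine=None):
    """Build the release URL that the curl command downloads from."""
    # Assumption: platform.system() / platform.machine() match
    # `uname -s` / `uname -m` (for example "Linux" and "x86_64").
    system = system or platform.system()
    machine = machine or platform.machine()
    return (
        "https://github.com/docker/compose/releases/download/"
        f"{version}/docker-compose-{system}-{machine}"
    )


# Print the URL for the machine this script runs on.
print(compose_download_url("1.28.2"))
```

Printing the URL before downloading is a quick way to confirm that a release asset exists for the current platform.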

Installing git

yum install git -y

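Installing command completion

System command completion

Auto-completion depends on the bash-completion tool. If it is not present, install it manually with the following command: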

yum -y install bash-completion

After a successful installation, the file /usr/share/bash-completion/bash_completion exists. If this file is missing, the tool is not installed on the system.
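The file check described above can also be scripted. This is a small sketch; the helper name `has_bash_completion` is hypothetical, and the default path is the one quoted in the text.

```python
from pathlib import Path


def has_bash_completion(path="/usr/share/bash-completion/bash_completion"):
    """Return True if the bash-completion entry script exists at the given path."""
    return Path(path).is_file()


print("bash-completion installed:", has_bash_completion())
```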

docker-compose command completion

The command is:

curl -L https://raw.githubusercontent.com/docker/compose/$(docker-compose version --short)/contrib/completion/bash/docker-compose -o /etc/bash_completion.d/docker-compose

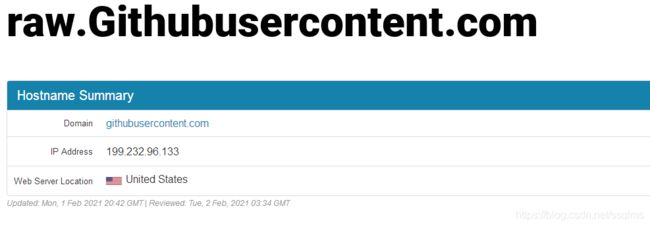

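If the following error appears: curl: (7) Failed connect to raw.githubusercontent.com:443; Connection refused

it indicates that DNS resolution for this domain is polluted in your region. The fix is to add the real address of raw.githubusercontent.com to the hosts file so that it resolves locally.

1. Look up the real address at https://www.ipaddress.com/

2. Edit the local hosts file to resolve the name locally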

vim /etc/hosts

Add the local resolution entry

199.232.28.133 raw.githubusercontent.com
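To confirm that the entry is in place, the hosts file can be parsed with a few lines of Python. This is only a sketch: `hosts_entry` is a hypothetical helper, and the sample text reuses the address shown above, which may have changed since this was written.

```python
def hosts_entry(hosts_text, hostname):
    """Return the IP mapped to hostname in hosts-file text, or None if absent."""
    for line in hosts_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop trailing comments
        if not line:
            continue
        ip, *names = line.split()
        if hostname in names:
            return ip
    return None


sample = "127.0.0.1 localhost\n199.232.28.133 raw.githubusercontent.com\n"
print(hosts_entry(sample, "raw.githubusercontent.com"))
```

On a real machine, pass the contents of /etc/hosts instead of the sample string.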

Deploying ELK with docker-compose

Cloning the GitHub project

git clone https://github.com/deviantony/docker-elk.git

docker-compose setup

Modify the following files:

elasticsearch/config/elasticsearch.yml

## Default Elasticsearch configuration from Elasticsearch base image.

## https://github.com/elastic/elasticsearch/blob/master/distribution/docker/src/docker/config/elasticsearch.yml

#

cluster.name: "docker-cluster"

network.host: 0.0.0.0

node.name: node-1

node.master: true

http.cors.enabled: true

http.cors.allow-origin: "*"

## X-Pack settings

## see https://www.elastic.co/guide/en/elasticsearch/reference/current/setup-xpack.html

#

xpack.license.self_generated.type: trial

xpack.security.enabled: true

xpack.monitoring.collection.enabled: true

kibana/config/kibana.yml

## Default Kibana configuration from Kibana base image.

## https://github.com/elastic/kibana/blob/master/src/dev/build/tasks/os_packages/docker_generator/templates/kibana_yml.template.ts

#

server.name: kibana

server.host: 0.0.0.0

elasticsearch.hosts: [ "http://elasticsearch_IP:9200" ]

xpack.monitoring.ui.container.elasticsearch.enabled: true

## X-Pack security credentials

#

elasticsearch.username: elastic

elasticsearch.password: changeme

logstash/config/logstash.yml

## Default Logstash configuration from Logstash base image.

## https://github.com/elastic/logstash/blob/master/docker/data/logstash/config/logstash-full.yml

#

http.host: "0.0.0.0"

xpack.monitoring.elasticsearch.hosts: [ "http://elasticsearch_IP:9200" ]

## X-Pack security credentials

#

xpack.monitoring.enabled: true

xpack.monitoring.elasticsearch.username: elastic

xpack.monitoring.elasticsearch.password: changeme

logstash/pipeline/logstash.conf

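- Pitfall: in testing, when logstash.conf declares both the tcp and udp inputs, each marked with its own type, the tcp branch of the output also receives logs that arrived over UDP. It is unclear whether this is a bug in ELK or a mistake in my setup.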

input {
    beats {
        port => 5044
    }
    tcp {
        port => 5000
        type => "tcp"
    }
    udp {
        port => 5140
        type => "udp"
    }
}

## Add your filters / logstash plugins configuration here

output {
    if [type] == "tcp" {
        elasticsearch {
            hosts => "IP:9200"
            user => "xxx"
            password => "xxx"
            ecs_compatibility => disabled
            index => "syslog-%{+YYYY.MM.dd}"
        }
    }
    if [type] == "udp" {
        elasticsearch {
            hosts => "IP:9200"
            user => "xxx"
            password => "xxx"
            ecs_compatibility => disabled
            index => "udp_syslog-%{+YYYY.MM.dd}"
        }
    }
}

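With the configuration above, TCP data arriving on port 5000 appears in the syslog index but not in udp_syslog, whereas UDP data arriving on port 5140 appears in both syslog and udp_syslog.

Workaround:

In the logstash/pipeline/ directory, create two separate conf files, one for the TCP port and one for the UDP port.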
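For reference, the routing that the output conditionals are meant to implement can be modelled in a few lines of Python. This is a sketch of the intended behaviour only, not of Logstash internals, and it does not reproduce the cross-delivery described above. The function `target_index` and its `now` parameter are hypothetical; `now` stands in for the event timestamp that Logstash uses when expanding `%{+YYYY.MM.dd}`.

```python
from datetime import datetime, timezone


def target_index(event, now=None):
    """Return the index an event is expected to land in, or None if no branch matches."""
    now = now or datetime.now(timezone.utc)
    day = now.strftime("%Y.%m.%d")  # same shape as %{+YYYY.MM.dd}
    if event.get("type") == "tcp":
        return f"syslog-{day}"
    if event.get("type") == "udp":
        return f"udp_syslog-{day}"
    return None  # e.g. beats events, which carry neither type


print(target_index({"type": "tcp"}), target_index({"type": "udp"}))
```

Each event should map to exactly one index. Any event that shows up in both indices, as observed with UDP traffic, deviates from this model.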

Starting with docker-compose (creates the containers and the docker network)

[root@localhost docker-elk]# docker-compose up -d

Building with native build. Learn about native build in Compose here: https://docs.docker.com/go/compose-native-build/

Creating docker-elk_elasticsearch_1 ... done

Creating docker-elk_logstash_1 ... done

Creating docker-elk_kibana_1 ... done

Stopping with docker-compose (removes the containers and the docker network)

[root@localhost docker-elk]# docker-compose down

Stopping docker-elk_kibana_1 ... done

Stopping docker-elk_logstash_1 ... done

Stopping docker-elk_elasticsearch_1 ... done

Removing docker-elk_kibana_1 ... done

Removing docker-elk_logstash_1 ... done

Removing docker-elk_elasticsearch_1 ... done

Removing network docker-elk_elk

Stopping with docker-compose (keeps the containers and the docker network)

[root@localhost docker-elk]# docker-compose stop

Stopping docker-elk_logstash_1 ... done

Stopping docker-elk_kibana_1 ... done

Stopping docker-elk_elasticsearch_1 ... done

Starting with docker-compose (restart from the stopped state)

[root@localhost docker-elk]# docker-compose start

Starting elasticsearch ... done

Starting logstash ... done

Starting kibana ... done

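up, stop, and start can each be followed by a service name (for example logstash) to act on that service alone; in docker-compose 1.x, down always applies to the whole project.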
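The four lifecycle commands can be wrapped in a small helper when they need to be scripted. This is a sketch under assumptions: the names `compose_command` and `run_compose` are hypothetical, the project directory is assumed to be docker-elk, and the restriction on `down` reflects docker-compose 1.x, where that subcommand takes no service arguments.

```python
import subprocess

ACTIONS = {"up", "down", "stop", "start"}


def compose_command(action, service=None):
    """Build the docker-compose argument list for one lifecycle action."""
    if action not in ACTIONS:
        raise ValueError(f"unsupported action: {action}")
    cmd = ["docker-compose", action]
    if action == "up":
        cmd.append("-d")  # detached mode, as in the transcript above
    if service is not None:
        if action == "down":
            # Assumption: docker-compose 1.x `down` acts on the whole project.
            raise ValueError("down does not take a service name")
        cmd.append(service)
    return cmd


def run_compose(action, service=None, cwd="docker-elk"):
    """Run the command in the project directory (requires docker-compose)."""
    return subprocess.run(compose_command(action, service), cwd=cwd, check=True)


print(compose_command("stop", "logstash"))
```

For example, `run_compose("stop", "logstash")` followed by `run_compose("start", "logstash")` restarts only the Logstash container.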

Possible problems after startup

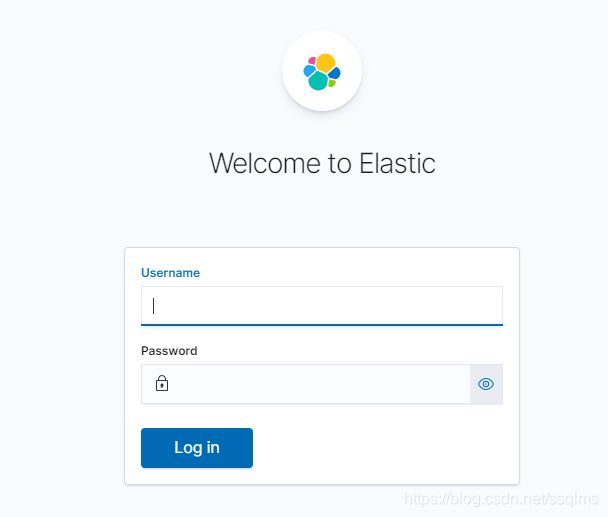

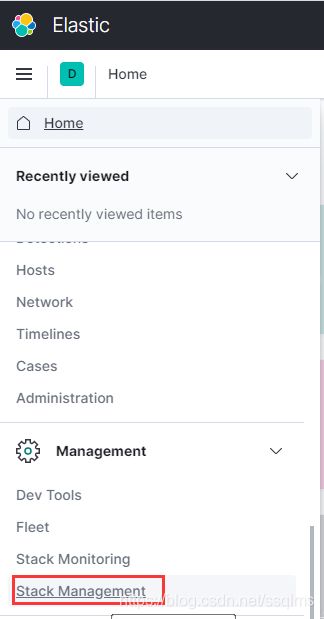

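The "Kibana server is not ready yet" error

This "not ready" error in Kibana is usually caused by a problem with the Elasticsearch indices.

Solutions:

- Looking at docker-compose.yml shows that a volume is created at startup and that the binds of E, L, and K are all mounted into it, so stale cached data seemed a likely cause. After removing the volume with docker volume rm and running up again, the problem was resolved.

- During testing the problem happened to recur. Searching online for other solutions, most people attribute it to the Elasticsearch indices. The following method also solves the problem and is better than the rather crude approach of deleting volumes: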

# With X-Pack security enabled, requests must include a username and password
curl -u elastic:changeme 'localhost:9200/_cat/indices?v'

curl -u elastic:changeme -XDELETE 'localhost:9200/.kibana*'
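The same two requests can be prepared from Python using only the standard library. This is a sketch: the helper `es_request` is hypothetical, it uses the default docker-elk credentials shown above, and it only builds the requests. Sending them requires a running Elasticsearch on localhost:9200.

```python
import base64
import urllib.request


def es_request(path, method="GET", user="elastic", password="changeme",
               base_url="http://localhost:9200"):
    """Build (but do not send) an Elasticsearch request with HTTP Basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(f"{base_url}{path}", method=method)
    req.add_header("Authorization", f"Basic {token}")
    return req


list_indices = es_request("/_cat/indices?v")              # list all indices
delete_kibana = es_request("/.kibana*", method="DELETE")  # drop Kibana's indices

# To send a request against a running cluster:
#     with urllib.request.urlopen(list_indices) as resp:
#         print(resp.read().decode())
```

Listing the indices first makes it possible to check which ones match .kibana* before deleting them.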

Logstash port test

Logstash conf

logstash/pipeline/logstash_tcp.conf (TCP port 5000)

input {
    tcp {
        port => 5000
        type => "tcp"
    }
}

## Add your filters / logstash plugins configuration here

output {
    if [type] == "tcp" {
        elasticsearch {
            hosts => "192.168.6.151:9200"
            user => "elastic"
            password => "changeme"
            ecs_compatibility => disabled
            index => "syslog-%{+YYYY.MM.dd}"
        }
    }
}

logstash/pipeline/logstash_udp.conf (UDP port 5140)

input {
    udp {
        port => 5140
        type => "udp"
    }
}

## Add your filters / logstash plugins configuration here

output {
    if [type] == "udp" {
        elasticsearch {
            hosts => "192.168.6.151:9200"
            user => "elastic"
            password => "changeme"
            ecs_compatibility => disabled
            index => "udp_syslog-%{+YYYY.MM.dd}"
        }
    }
}

docker-compose.yml (note: add the UDP port 5140 mapping to the logstash service)

logstash:
  build:
    context: logstash/
    args:
      ELK_VERSION: $ELK_VERSION
  volumes:
    - type: bind
      source: ./logstash/config/logstash.yml
      target: /usr/share/logstash/config/logstash.yml
      read_only: true
    - type: bind
      source: ./logstash/pipeline
      target: /usr/share/logstash/pipeline
      read_only: true
  ports:
    - "5044:5044"
    - "5000:5000/tcp"
    - "5000:5000/udp"
    - "5140:5140/udp"
    - "9600:9600"
  environment:
    LS_JAVA_OPTS: "-Xmx256m -Xms256m"
  networks:
    - elk
  #network_mode: bridge  # use the default docker0 bridge
  depends_on:
    - elasticsearch
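The port entries follow the HOST:CONTAINER[/PROTOCOL] short syntax, with tcp as the protocol when none is given. As a quick sanity check that the UDP mapping for 5140 is present, the strings can be parsed as below. This is a sketch; `parse_port` is a hypothetical helper that handles only this simple form, not port ranges or bind addresses.

```python
def parse_port(mapping):
    """Split 'HOST:CONTAINER[/PROTO]' into (host_port, container_port, proto)."""
    ports, _, proto = mapping.partition("/")
    host, container = ports.split(":")
    return int(host), int(container), proto or "tcp"


mappings = ["5044:5044", "5000:5000/tcp", "5000:5000/udp", "5140:5140/udp", "9600:9600"]

# Host ports that are published over UDP.
udp_ports = [host for host, _, proto in map(parse_port, mappings) if proto == "udp"]
print(udp_ports)  # [5000, 5140]
```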

Once the configuration above is correct and the containers are running:

- Test UDP port 5140 (simulating a syslog message)

A Python test script:

import logging

import logging.handlers  # handlers must be imported separately

logger = logging.getLogger()

fh = logging.handlers.SysLogHandler(('192.168.6.151', 5140), logging.handlers.SysLogHandler.LOG_AUTH)

formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')

fh.setFormatter(formatter)

logger.addHandler(fh)

logger.warning("msg4")

logger.error("msg4")
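TCP port 5000 can be exercised in a similar way. The sketch below sends one newline-terminated line over a plain TCP socket, on the assumption that the tcp input is using its default line-oriented codec. The helper name `send_tcp_line` is hypothetical, and the address in the usage comment is the test host used elsewhere in this document.

```python
import socket


def send_tcp_line(host, port, message, timeout=5.0):
    """Open a TCP connection, send one newline-terminated message, and close."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(message.encode("utf-8") + b"\n")


# Usage against the Logstash tcp input (requires the containers to be running):
#     send_tcp_line("192.168.6.151", 5000, "tcp test message")
```

After sending, the message should appear in the syslog index for the current day, while udp_syslog should stay unchanged.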

filter grok parsing and dissect splitting in logstash.conf (collecting and analyzing logs from Huawei switches)