yolo增加RFEM

论文地址:https://arxiv.org/pdf/2208.02019.pdf

代码地址:GitHub - Krasjet-Yu/YOLO-FaceV2: YOLO-FaceV2: A Scale and Occlusion Aware Face Detector

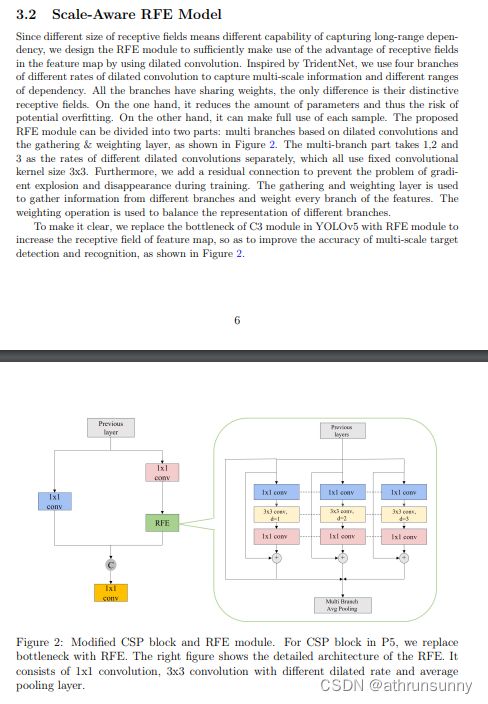

总的来说就是RFEM利用了感受野在特征图中的优势,通过使用不同膨胀卷积率的分支来捕捉多尺度信息和不同范围的依赖关系。这种设计有助于减少参数数量,降低过拟合风险,并充分利用每个样本。

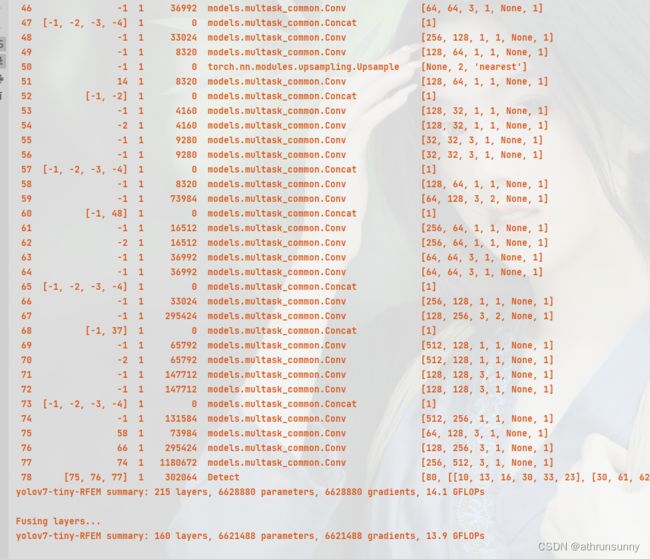

1、yolov7-tiny

创建配置文件yolov7-tiny-RFEM.yaml

# parameters

nc: 80 # number of classes

depth_multiple: 1.0 # model depth multiple

width_multiple: 1.0 # layer channel multiple

activation: nn.LeakyReLU(0.1)

# anchors

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# yolov7-tiny backbone

backbone:

# [from, number, module, args] c2, k=1, s=1, p=None, g=1, act=True

[[-1, 1, Conv, [32, 3, 2, None, 1]], # 0-P1/2

[-1, 1, Conv, [64, 3, 2, None, 1]], # 1-P2/4

[-1, 1, Conv, [32, 1, 1, None, 1]],

[-2, 1, Conv, [32, 1, 1, None, 1]],

[-1, 1, Conv, [32, 3, 1, None, 1]],

[-1, 1, Conv, [32, 3, 1, None, 1]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [64, 1, 1, None, 1]], # 7

[-1, 1, MP, []], # 8-P3/8

[-1, 1, Conv, [64, 1, 1, None, 1]],

[-2, 1, Conv, [64, 1, 1, None, 1]],

[-1, 1, Conv, [64, 3, 1, None, 1]],

[-1, 1, Conv, [64, 3, 1, None, 1]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [128, 1, 1, None, 1]], # 14

[-1, 1, MP, []], # 15-P4/16

[-1, 1, Conv, [128, 1, 1, None, 1]],

[-2, 1, Conv, [128, 1, 1, None, 1]],

[-1, 1, Conv, [128, 3, 1, None, 1]],

[-1, 1, Conv, [128, 3, 1, None, 1]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [256, 1, 1, None, 1]], # 21

[-1, 1, MP, []], # 22-P5/32

[-1, 1, Conv, [256, 1, 1, None, 1]],

[-2, 1, Conv, [256, 1, 1, None, 1]],

[-1, 1, Conv, [256, 3, 1, None, 1]],

[-1, 1, Conv, [256, 3, 1, None, 1]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [512, 1, 1, None, 1]], # 28

]

# yolov7-tiny head

head:

[[-1, 1, Conv, [256, 1, 1, None, 1]],

[-2, 1, Conv, [256, 1, 1, None, 1]],

[-1, 1, SP, [5]],

[-2, 1, SP, [9]],

[-3, 1, SP, [13]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [256, 1, 1, None, 1]],

[[-1, -7], 1, Concat, [1]],

[-1, 1, Conv, [256, 1, 1, None, 1]], # 37

[-1, 1, RFEM, [256]],

[-1, 1, Conv, [128, 1, 1, None, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[21, 1, Conv, [128, 1, 1, None, 1]], # route backbone P4

[[-1, -2], 1, Concat, [1]],

[-1, 1, Conv, [64, 1, 1, None, 1]],

[-2, 1, Conv, [64, 1, 1, None, 1]],

[-1, 1, Conv, [64, 3, 1, None, 1]],

[-1, 1, Conv, [64, 3, 1, None, 1]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [128, 1, 1, None, 1]], # 48

[-1, 1, Conv, [64, 1, 1, None, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[14, 1, Conv, [64, 1, 1, None, 1]], # route backbone P3

[[-1, -2], 1, Concat, [1]],

[-1, 1, Conv, [32, 1, 1, None, 1]],

[-2, 1, Conv, [32, 1, 1, None, 1]],

[-1, 1, Conv, [32, 3, 1, None, 1]],

[-1, 1, Conv, [32, 3, 1, None, 1]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [64, 1, 1, None, 1]], # 58

[-1, 1, Conv, [128, 3, 2, None, 1]],

[[-1, 48], 1, Concat, [1]],

[-1, 1, Conv, [64, 1, 1, None, 1]],

[-2, 1, Conv, [64, 1, 1, None, 1]],

[-1, 1, Conv, [64, 3, 1, None, 1]],

[-1, 1, Conv, [64, 3, 1, None, 1]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [128, 1, 1, None, 1]], # 66

[-1, 1, Conv, [256, 3, 2, None, 1]],

[[-1, 37], 1, Concat, [1]],

[-1, 1, Conv, [128, 1, 1, None, 1]],

[-2, 1, Conv, [128, 1, 1, None, 1]],

[-1, 1, Conv, [128, 3, 1, None, 1]],

[-1, 1, Conv, [128, 3, 1, None, 1]],

[[-1, -2, -3, -4], 1, Concat, [1]],

[-1, 1, Conv, [256, 1, 1, None, 1]], # 74

[58, 1, Conv, [128, 3, 1, None, 1]],

[66, 1, Conv, [256, 3, 1, None, 1]],

[74, 1, Conv, [512, 3, 1, None, 1]],

[[75,76,77], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

]

在common.py中增加

# RFEM

class TridentBlock(nn.Module):

def __init__(self, c1, c2, stride=1, c=False, e=0.5, padding=[1, 2, 3], dilate=[1, 2, 3], bias=False):

super(TridentBlock, self).__init__()

self.stride = stride

self.c = c

c_ = int(c2 * e)

self.padding = padding

self.dilate = dilate

self.share_weightconv1 = nn.Parameter(torch.Tensor(c_, c1, 1, 1))

self.share_weightconv2 = nn.Parameter(torch.Tensor(c2, c_, 3, 3))

self.bn1 = nn.BatchNorm2d(c_)

self.bn2 = nn.BatchNorm2d(c2)

# self.act = nn.SiLU()

self.act = Conv.default_act

nn.init.kaiming_uniform_(self.share_weightconv1, nonlinearity="relu")

nn.init.kaiming_uniform_(self.share_weightconv2, nonlinearity="relu")

if bias:

self.bias = nn.Parameter(torch.Tensor(c2))

else:

self.bias = None

if self.bias is not None:

nn.init.constant_(self.bias, 0)

def forward_for_small(self, x):

residual = x

out = nn.functional.conv2d(x, self.share_weightconv1, bias=self.bias)

out = self.bn1(out)

out = self.act(out)

out = nn.functional.conv2d(out, self.share_weightconv2, bias=self.bias, stride=self.stride,

padding=self.padding[0],

dilation=self.dilate[0])

out = self.bn2(out)

out += residual

out = self.act(out)

return out

def forward_for_middle(self, x):

residual = x

out = nn.functional.conv2d(x, self.share_weightconv1, bias=self.bias)

out = self.bn1(out)

out = self.act(out)

out = nn.functional.conv2d(out, self.share_weightconv2, bias=self.bias, stride=self.stride,

padding=self.padding[1],

dilation=self.dilate[1])

out = self.bn2(out)

out += residual

out = self.act(out)

return out

def forward_for_big(self, x):

residual = x

out = nn.functional.conv2d(x, self.share_weightconv1, bias=self.bias)

out = self.bn1(out)

out = self.act(out)

out = nn.functional.conv2d(out, self.share_weightconv2, bias=self.bias, stride=self.stride,

padding=self.padding[2],

dilation=self.dilate[2])

out = self.bn2(out)

out += residual

out = self.act(out)

return out

def forward(self, x):

xm = x

base_feat = []

if self.c is not False:

x1 = self.forward_for_small(x)

x2 = self.forward_for_middle(x)

x3 = self.forward_for_big(x)

else:

x1 = self.forward_for_small(xm[0])

x2 = self.forward_for_middle(xm[1])

x3 = self.forward_for_big(xm[2])

base_feat.append(x1)

base_feat.append(x2)

base_feat.append(x3)

return base_feat

class RFEM(nn.Module):

def __init__(self, c1, c2, n=1, e=0.5, stride=1):

super(RFEM, self).__init__()

c = True

layers = []

layers.append(TridentBlock(c1, c2, stride=stride, c=c, e=e))

c1 = c2

for i in range(1, n):

layers.append(TridentBlock(c1, c2))

self.layer = nn.Sequential(*layers)

# self.cv = Conv(c2, c2)

self.bn = nn.BatchNorm2d(c2)

# self.act = nn.SiLU()

self.act = Conv.default_act

def forward(self, x):

out = self.layer(x)

out = out[0] + out[1] + out[2] + x

out = self.act(self.bn(out))

return out

class C3RFEM(nn.Module):

def __init__(self, c1, c2, n=1, shortcut=True, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansion

super().__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c1, c_, 1, 1)

self.cv3 = Conv(2 * c_, c2, 1) # act=FReLU(c2)

# self.m = nn.Sequential(*(Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)))

# self.rfem = RFEM(c_, c_, n)

self.m = nn.Sequential(*[RFEM(c_, c_, n=1, e=e) for _ in range(n)])

# self.m = nn.Sequential(*[CrossConv(c_, c_, 3, 1, g, 1.0, shortcut) for _ in range(n)])

def forward(self, x):

return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), dim=1))其中C3RFEM对应原作者的实现

在yolo.py中修改:

n = n_ = max(round(n * gd), 1) if n > 1 else n # depth gain

if m in {

Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,

BottleneckCSP, C3, C3TR, C3SPP, C3Ghost, nn.ConvTranspose2d, DWConvTranspose2d, C3x, StemBlock,

BlazeBlock, DoubleBlazeBlock, ShuffleV2Block, MobileBottleneck, InvertedResidual, ConvBNReLU,

RepVGGBlock, SEBlock, RepBlock, SimCSPSPPF, C3_P, SPPCSPC, RepConv, RFEM, C3RFEM}:

c1, c2 = ch[f], args[0]

if c2 != no: # if not output

c2 = make_divisible(c2 * gw, 8)

if m == InvertedResidual:

c2 = make_divisible(c2 * gw, 4 if gw == 0.1 else 8)

args = [c1, c2, *args[1:]]

if m in {BottleneckCSP, C3, C3TR, C3Ghost, C3x, C3_P, C3RFEM}:

args.insert(2, n) # number of repeats

n = 1运行yolo.py

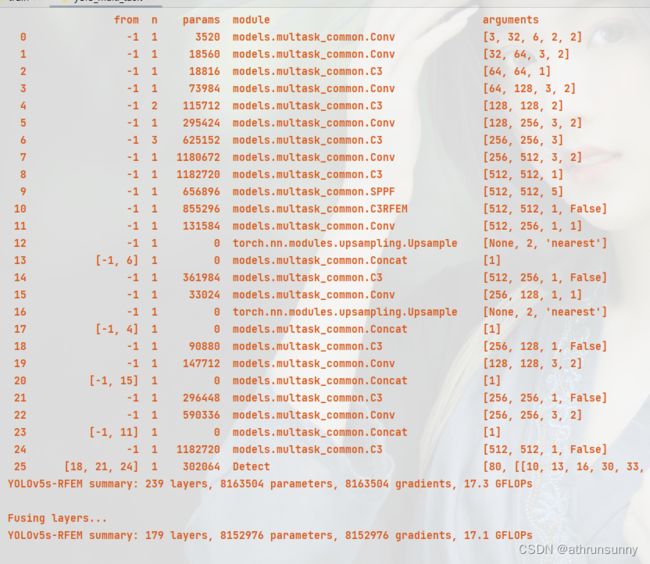

2、yolov5

yolov5s-RFEM.yaml

# YOLOv5 by Ultralytics, GPL-3.0 license

# Parameters

nc: 80 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 v6.0 backbone

backbone:

# [from, number, module, args]

[[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4

[-1, 3, C3, [128]],

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

[-1, 6, C3, [256]],

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

[-1, 9, C3, [512]],

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

[-1, 3, C3, [1024]],

[-1, 1, SPPF, [1024, 5]], # 9

[-1, 3, C3RFEM, [1024, False]], # 10

]

# YOLOv5 v6.0 head

head:

[[-1, 1, Conv, [512, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 6], 1, Concat, [1]], # cat backbone P4

[-1, 3, C3, [512, False]], # 13

[-1, 1, Conv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 4], 1, Concat, [1]], # cat backbone P3

[-1, 3, C3, [256, False]], # 17 (P3/8-small)

[-1, 1, Conv, [256, 3, 2]],

[[-1, 15], 1, Concat, [1]], # cat head P4

[-1, 3, C3, [512, False]], # 20 (P4/16-medium)

[-1, 1, Conv, [512, 3, 2]],

[[-1, 11], 1, Concat, [1]], # cat head P5

[-1, 3, C3, [1024, False]], # 23 (P5/32-large)

[[18, 21, 24], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

]

改法和上面一样

运行yolo.py