OAK Cameras: Hardware-Synchronized Capture Across Multiple Cameras

- Sensor synchronization
- Hardware synchronization signals
  - FSYNC signal
  - STROBE signal
- Hardware wiring
  - Hardware
  - Wiring steps
- Software driver
- References

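Sensor synchronization

There are currently two main ways to synchronize messages from different sensors (frames, IMU packets, ToF, etc.):

- Hardware sync (triggered by a hardware signal; higher accuracy; requires hardware support)
- Software sync (based on timestamps or sequence numbers; lower accuracy; no hardware support needed)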
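To make the software option concrete, here is a minimal sketch of timestamp-based matching on the host. It is not taken from the DepthAI API: the function name `match_by_timestamp`, the `(timestamp, payload)` message layout, and the 5 ms tolerance are all assumptions chosen for illustration.

```python
from datetime import timedelta


def match_by_timestamp(left, right, tolerance=timedelta(milliseconds=5)):
    """Pair messages from two streams whose timestamps differ by at most `tolerance`.

    `left` and `right` are lists of (timestamp, payload) tuples sorted by
    timestamp (hypothetical layout, for illustration only).
    """
    pairs = []
    i = j = 0
    while i < len(left) and j < len(right):
        dt = left[i][0] - right[j][0]
        if abs(dt) <= tolerance:
            pairs.append((left[i][1], right[j][1]))
            i += 1
            j += 1
        elif dt < timedelta(0):
            i += 1  # left message is too old to match anything, skip it
        else:
            j += 1  # right message is too old to match anything, skip it
    return pairs


ms = lambda v: timedelta(milliseconds=v)
left = [(ms(0), "L0"), (ms(33), "L1"), (ms(66), "L2")]
right = [(ms(2), "R0"), (ms(50), "R1"), (ms(67), "R2")]
matched = match_by_timestamp(left, right)
print(matched)
```

Matching like this only pairs frames that happen to be close in time; it does not make the sensors expose at the same instant, which is why its accuracy is lower than hardware sync.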

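This post focuses on hardware sync, which allows precise synchronization across multiple camera sensors, and potentially with other hardware such as flash LEDs, an external IMU, or other cameras.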

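Hardware synchronization signals

FSYNC signal

The FSYNC/FSIN (frame sync) signal is a pulse that is driven high at the start of each frame capture. Its length is not proportional to the exposure time. It can be either an input or an output, and it operates at 1.8 V.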

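On stereo cameras (OAK-D*), we want the two mono cameras to be perfectly in sync, so FSYNC is set to INPUT on one sensor (e.g. the left camera) and to OUTPUT on the other (e.g. the right camera). In this configuration, the right camera drives the left camera.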

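Note: currently only the OV9282/OV9782 can output the FSYNC signal. The IMX378/477/577 and similar sensors should also be capable of it, but this is not supported yet, so these sensors cannot drive FSYNC and can only be driven by it. The AR0234 only supports FSYNC input.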
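The note above can be turned into a small planning helper that decides which socket should drive FSYNC. This is a sketch only: `plan_fsync_roles` and the socket-to-model dictionary are hypothetical names, and the capability set simply restates the note rather than querying the device.

```python
# Sensors that can drive FSYNC according to the note above.
# Everything else is treated as input-only (assumption for this sketch).
FSYNC_OUTPUT_CAPABLE = {"OV9282", "OV9782"}


def plan_fsync_roles(sensors):
    """Map each socket to "OUTPUT" or "INPUT".

    `sensors` is a dict of socket name -> sensor model (hypothetical layout).
    The first output-capable sensor becomes the single FSYNC driver.
    """
    master = next(
        (sock for sock, model in sensors.items()
         if model.upper() in FSYNC_OUTPUT_CAPABLE),
        None,
    )
    if master is None:
        raise ValueError("no sensor can drive FSYNC; an external trigger is needed")
    return {sock: ("OUTPUT" if sock == master else "INPUT") for sock in sensors}


roles = plan_fsync_roles({"CAM_A": "IMX378", "CAM_B": "OV9282", "CAM_C": "OV9282"})
print(roles)
```

Only one sensor is ever assigned OUTPUT, since two drivers on the same FSYNC line would conflict.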

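If we want to drive the cameras with an external signal, we need to set FSIN to INPUT on every camera sensor and connect a signal generator to all of the FSIN pins. The cameras then capture each frame on the trigger signal from the generator.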
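When setting up the signal generator, it helps to work out the pulse period for the target frame rate and to sanity-check it against the sensor. The sketch below is a rough helper under two assumptions of ours: that the exposure should be shorter than the trigger period, and that the trigger rate should not exceed the sensor's maximum frame rate. The function names are hypothetical.

```python
def trigger_period_us(fps):
    """Period of the trigger pulse train, in microseconds, for a target FPS."""
    return 1_000_000 / fps


def check_trigger(fps, exposure_us, sensor_max_fps):
    """Return a list of human-readable problems (empty if the settings look sane)."""
    problems = []
    if fps > sensor_max_fps:
        problems.append(f"{fps} FPS exceeds the sensor maximum of {sensor_max_fps} FPS")
    if exposure_us >= trigger_period_us(fps):
        problems.append("exposure is not shorter than the trigger period")
    return problems


print(trigger_period_us(30))            # period for a 30 FPS trigger
print(check_trigger(30, 10_000, 120))   # 10 ms exposure at 30 FPS
print(check_trigger(200, 10_000, 120))  # too fast for a 120 FPS sensor
```

Remember that the signal itself must respect the 1.8 V level of the FSIN pins mentioned earlier.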

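STROBE signal

The STROBE signal is an output of the image sensor that is active (high) during the sensor's exposure. It can be used to drive external LED lighting so that the lighting is on only during the exposure time rather than continuously, which reduces power consumption and heat.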
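A quick back-of-the-envelope calculation shows how large the saving can be. The sketch below assumes the LED is on exactly while STROBE is high and that its power while on is constant; both function names and the example numbers are ours, not from the OAK documentation.

```python
def strobe_duty_cycle(exposure_us, fps):
    """Fraction of time STROBE is high, assuming one exposure per frame."""
    duty = exposure_us * fps / 1_000_000
    return min(duty, 1.0)  # the LED cannot be on more than 100% of the time


def average_led_power_w(peak_power_w, exposure_us, fps):
    """Average LED power when it is gated by STROBE instead of always on."""
    return peak_power_w * strobe_duty_cycle(exposure_us, fps)


duty = strobe_duty_cycle(exposure_us=2_000, fps=30)
avg = average_led_power_w(peak_power_w=1.0, exposure_us=2_000, fps=30)
print(f"duty cycle: {duty:.2%}, average power: {avg:.3f} W")
```

With a 2 ms exposure at 30 FPS the light is on for only a few percent of the time, so the average power drops by the same factor compared with continuous lighting.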

On the OAK-D-Pro series, which has an on-board IR illumination LED and an IR laser dot projector, the STROBE signal is used to drive the laser/LED.

Hardware wiring

Hardware

We use the following hardware:

- OV9782 wide-angle camera × 4
- OAK-FFC-4P camera module × 1

OV9782 wide-angle camera features:

- CMOS sensor
- Global shutter
- Maximum frame rate: 120 FPS
- Maximum resolution: 1 MP (1280×800)
- DFOV: 89.5°
- HFOV: 80°
- VFOV: 55°
- Focus range: fixed focus, 19.6 cm – ∞

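The OAK-FFC-4P camera module is a modular (split-type) OAK that connects to up to 4 independent MIPI camera modules over flexible flat cables. Its features include:

- 4 TOPS of compute
- 4K H.265 streaming
- Centimeter-level measurement accuracy
- Supported platforms and languages: Windows 10, Ubuntu, Raspberry Pi, Linux, macOS, Jetson, Python, C++, ROS, Android (requires depthai ≥ 2.16.0)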

Wiring steps

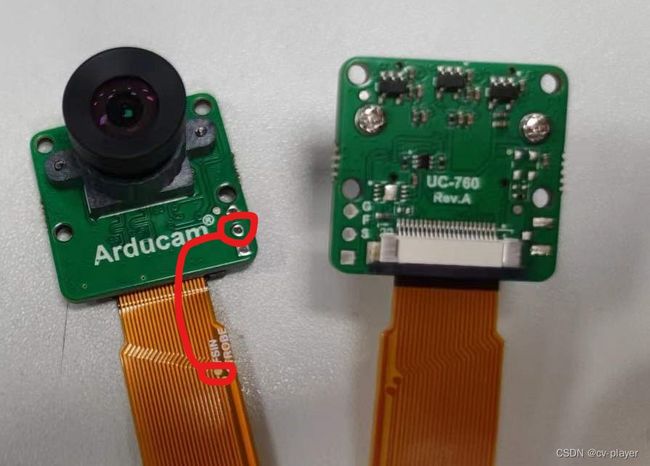

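1. First, use jumper wires to connect the FSIN test point on each cable to the FSIN pin on the corresponding camera board (alternatively, connect the FSIN pins of all camera boards directly to each other):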

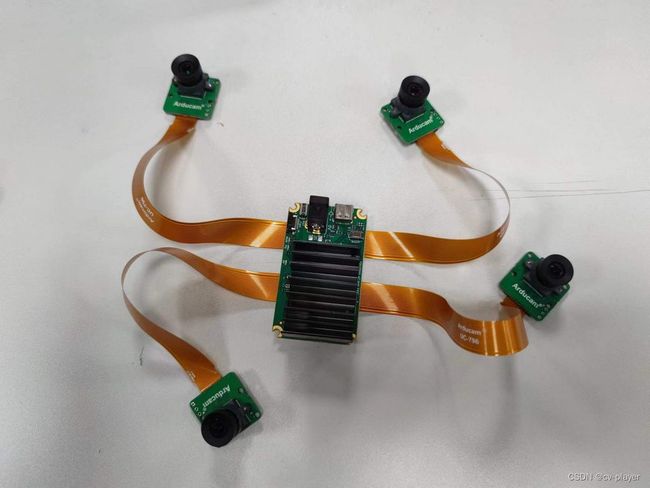

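2. Then, connect the 4 OV9782 wide-angle cameras to the OAK-FFC-4P camera module with the flat cables.

3. Finally, power the camera module and connect it to the PC over USB.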

Software driver

We write a test script that displays the frames and prints the device timestamps. The camera_driver.py file is as follows:

```python
import collections
import time

import cv2
import depthai as dai

set_fps = 30  # target frame rate for every sensor


class FPS:
    """Sliding-window FPS estimator."""

    def __init__(self, window_size=30):
        self.dq = collections.deque(maxlen=window_size)
        self.fps = 0

    def update(self, timestamp=None):
        if timestamp is None:
            timestamp = time.monotonic()
        count = len(self.dq)
        # Guard against a zero time span between the oldest and newest sample
        if count > 0 and timestamp > self.dq[0]:
            self.fps = count / (timestamp - self.dq[0])
        self.dq.append(timestamp)

    def get(self):
        return self.fps


cam_list = ['rgb', 'left', 'right', 'camd']
cam_socket_opts = {
    'rgb':   dai.CameraBoardSocket.RGB,    # Or CAM_A
    'left':  dai.CameraBoardSocket.LEFT,   # Or CAM_B
    'right': dai.CameraBoardSocket.RIGHT,  # Or CAM_C
    'camd':  dai.CameraBoardSocket.CAM_D,
}

pipeline = dai.Pipeline()
cam = {}
xout = {}
for c in cam_list:
    cam[c] = pipeline.create(dai.node.MonoCamera)
    cam[c].setResolution(dai.MonoCameraProperties.SensorResolution.THE_800_P)
    cam[c].setFps(set_fps)
    # The 'rgb' socket drives FSYNC; every other sensor is driven by it
    if c == 'rgb':
        cam[c].initialControl.setFrameSyncMode(dai.CameraControl.FrameSyncMode.OUTPUT)
    else:
        cam[c].initialControl.setFrameSyncMode(dai.CameraControl.FrameSyncMode.INPUT)
    cam[c].setBoardSocket(cam_socket_opts[c])
    xout[c] = pipeline.create(dai.node.XLinkOut)
    xout[c].setStreamName(c)
    cam[c].out.link(xout[c].input)

# Drive GPIO6 high on the OAK-FFC-4P. As we understand the official
# hardware-sync docs, this ties the FSIN lines of the camera ports together.
config = dai.Device.Config()
config.board.gpio[6] = dai.BoardConfig.GPIO(dai.BoardConfig.GPIO.OUTPUT,
                                            dai.BoardConfig.GPIO.Level.HIGH)

with dai.Device(config) as device:
    device.startPipeline(pipeline)
    q = {}
    fps_host = {}  # FPS computed based on the time we receive frames in app
    fps_capt = {}  # FPS computed based on capture timestamps from device
    for c in cam_list:
        q[c] = device.getOutputQueue(name=c, maxSize=1, blocking=False)
        cv2.namedWindow(c, cv2.WINDOW_NORMAL)
        cv2.resizeWindow(c, 640, 480)
        fps_host[c] = FPS()
        fps_capt[c] = FPS()

    while True:
        for c in cam_list:
            pkt = q[c].tryGet()
            if pkt is not None:
                fps_host[c].update()
                fps_capt[c].update(pkt.getTimestamp().total_seconds())
                # Device-side capture timestamp; synced sensors should print near-identical values
                print(c + ":", pkt.getTimestampDevice())
                frame = pkt.getCvFrame()
                cv2.imshow(c, frame)
        print("-------------------------------")
        # print("\rFPS:",
        #       *["{:6.2f}|{:6.2f}".format(fps_host[c].get(), fps_capt[c].get()) for c in cam_list],
        #       end='', flush=True)

        key = cv2.waitKey(1)
        if key == ord('q'):
            break
```

Run

python camera_driver.py
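To judge the printed timestamps, it helps to reduce them to one number: the spread between the earliest and latest capture time in one set of frames. The helper below is a sketch of that check. It assumes the timestamps are `datetime.timedelta` values (as printed by the script) collected for the same frame from each camera; the names `sync_spread` and `is_synced` and the 500 µs tolerance are our own choices.

```python
from datetime import timedelta


def sync_spread(timestamps):
    """Difference between the latest and earliest timestamp in one frame set.

    `timestamps` maps a camera name to its device timestamp (a timedelta).
    """
    values = list(timestamps.values())
    return max(values) - min(values)


def is_synced(timestamps, tolerance=timedelta(microseconds=500)):
    """True if all cameras captured within `tolerance` of each other (assumed threshold)."""
    return sync_spread(timestamps) <= tolerance


# Made-up example values, not measured output
sample = {
    "rgb":   timedelta(seconds=12, microseconds=345_010),
    "left":  timedelta(seconds=12, microseconds=345_000),
    "right": timedelta(seconds=12, microseconds=345_030),
    "camd":  timedelta(seconds=12, microseconds=345_020),
}
spread = sync_spread(sample)
print(spread, is_synced(sample))
```

Because the script reads each queue without blocking, one loop iteration can mix frames from different capture instants, so compare only timestamps that belong to the same frame.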

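References

1. Synchronized capture across multiple OAK cameras using a hardware trigger signal

2. Official documentation: hardware synchronization

3. Official documentation: oak-ffc-4p

4. Schematic

5. oak_deptahi_external_trigger_fsync.py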

```python
#!/usr/bin/env python3
import cv2
import depthai as dai

pipeline = dai.Pipeline()

# Color camera, triggered externally through FSIN
camRgb = pipeline.create(dai.node.ColorCamera)
camRgb.setColorOrder(dai.ColorCameraProperties.ColorOrder.RGB)
camRgb.setIspScale(2, 3)
camRgb.initialControl.setFrameSyncMode(dai.CameraControl.FrameSyncMode.INPUT)
# setExternalTrigger(numFramesBurst, numFramesDiscard)
camRgb.initialControl.setExternalTrigger(4, 3)

xoutRgb = pipeline.create(dai.node.XLinkOut)
xoutRgb.setStreamName("color")
camRgb.isp.link(xoutRgb.input)

# Left mono camera
monoLeft = pipeline.create(dai.node.MonoCamera)
monoLeft.setResolution(dai.MonoCameraProperties.SensorResolution.THE_720_P)
monoLeft.setBoardSocket(dai.CameraBoardSocket.LEFT)
monoLeft.initialControl.setFrameSyncMode(dai.CameraControl.FrameSyncMode.INPUT)
monoLeft.initialControl.setExternalTrigger(4, 3)

xoutLeft = pipeline.create(dai.node.XLinkOut)
xoutLeft.setStreamName("left")
monoLeft.out.link(xoutLeft.input)

# Right mono camera
monoRight = pipeline.create(dai.node.MonoCamera)
monoRight.setResolution(dai.MonoCameraProperties.SensorResolution.THE_720_P)
monoRight.setBoardSocket(dai.CameraBoardSocket.RIGHT)
monoRight.initialControl.setFrameSyncMode(dai.CameraControl.FrameSyncMode.INPUT)
monoRight.initialControl.setExternalTrigger(4, 3)

xoutRight = pipeline.create(dai.node.XLinkOut)
xoutRight.setStreamName("right")
monoRight.out.link(xoutRight.input)

# Connect to device with pipeline
with dai.Device(pipeline) as device:
    arr = ['left', 'right', 'color']
    queues = {}
    frames = {}

    for name in arr:
        queues[name] = device.getOutputQueue(name)

    print("Starting...")

    while True:
        # Keep the latest frame of each stream and redraw all windows
        for name in arr:
            if queues[name].has():
                frames[name] = queues[name].get().getCvFrame()

        for name, frame in frames.items():
            cv2.imshow(name, frame)

        key = cv2.waitKey(1)
        if key == ord('q'):
            break
```
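The two arguments of `setExternalTrigger(4, 3)` are `numFramesBurst` and `numFramesDiscard`. As we understand it, each trigger edge makes the sensor capture a burst of frames, and the first few are skipped because their quality may be degraded. Under that assumption, the small model below (plain Python, with a hypothetical function name) shows how many frames reach the host.

```python
def frames_delivered(num_triggers, num_frames_burst=4, num_frames_discard=3):
    """Frames that reach the host, assuming each trigger captures
    `num_frames_burst` frames and the first `num_frames_discard` are dropped."""
    if num_frames_discard > num_frames_burst:
        raise ValueError("cannot discard more frames than the burst contains")
    return num_triggers * (num_frames_burst - num_frames_discard)


per_trigger = frames_delivered(1)          # settings used in the script above
no_discard = frames_delivered(10, 4, 0)    # keep every frame of each burst
print(per_trigger, no_discard)
```

With the values used in the script, each trigger pulse therefore yields a single frame per camera under this model.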