Keras搭建经典CNN LeNet5网络进行手写体识别

1 简介

LeNet-5 模型是Yann LeCun 教授于 1998 年在论文 Gradient-based learning applied to document recognition中提出的,它是第一个成功应用于数字识别问题的卷积神经网络。在 MNIST 数据集上, LeNet-5 模型可以达到大约 **99.2%**的正确率。

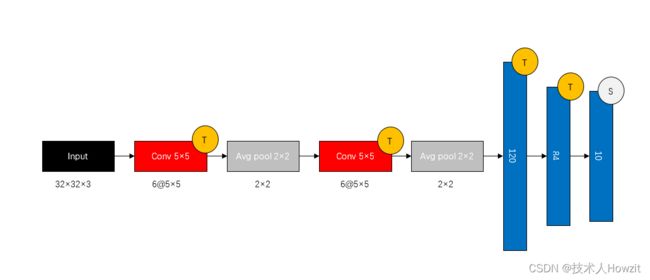

在minst手写体数据集基础上构建LeNet-5网络,其结构如下:

其中,T表示tanh激活函数,S代表softmax。

2 数据处理和展示

原始mnist数据格式为**[6000,28,28],但是卷积层所需要的格式为[None,28,28,1],None 代表任何个[28,28,1]数据,其中28为宽高**,1代表颜色通道(一般颜色通道为3,rgb,这里采用灰色).

def load_data(self):

print('loaddata')

(X_train, y_train), (X_test, y_test) = mnist.load_data()

X_train = X_train.reshape(-1, 28, 28, 1) # normalize

X_test = X_test.reshape(-1, 28, 28, 1) # normalize

X_train = X_train / 255

X_test = X_test / 255

y_train = to_categorical(y_train, num_classes=10)

y_test = to_categorical(y_test, num_classes=10)

return (X_train, y_train), (X_test, y_test)

3 模型搭建

其中LeNet5中的5代表5层,我已经在下面用彩色数字标注出来了。

4 代码实现

import tensorflow as tf

from tensorflow.keras.datasets import mnist

from tensorflow.keras.layers import Dense, Conv2D, AveragePooling2D, Flatten

from tensorflow.keras.models import Sequential

from tensorflow.keras.utils import to_categorical

class LeNet5:

def __init__(self):

self.model = Sequential()

def load_data(self):

print('loaddata')

(X_train, y_train), (X_test, y_test) = mnist.load_data()

X_train = X_train.reshape(-1, 28, 28, 1) # normalize

X_test = X_test.reshape(-1, 28, 28, 1) # normalize

X_train = X_train / 255

X_test = X_test / 255

y_train = to_categorical(y_train, num_classes=10)

y_test = to_categorical(y_test, num_classes=10)

return (X_train, y_train), (X_test, y_test)

def train(self, x, y):

print("build")

self.model.add(Conv2D(input_shape=(28, 28, 1),

filters=6,

kernel_size=(5, 5),

strides=1,

activation=tf.keras.activations.tanh))

self.model.add(AveragePooling2D(pool_size=(2, 2)))

self.model.add(Conv2D(filters=6,

kernel_size=(5, 5),

strides=1,

activation=tf.keras.activations.tanh))

self.model.add(AveragePooling2D(pool_size=(2, 2)))

self.model.add(Flatten())

self.model.add(Dense(120, activation=tf.keras.activations.tanh))

self.model.add(Dense(84, activation=tf.keras.activations.tanh))

self.model.add(Dense(10, activation=tf.keras.activations.softmax))

# Compile model

self.model.compile(loss=tf.keras.losses.CategoricalCrossentropy(),

optimizer="adam",

metrics=['accuracy'])

print("train")

self.model.fit(x=x, y=y, batch_size=1, epochs=100, verbose=1)

return self.model

def predict(self, x):

print("predict")

for result in self.model.predict(x):

print(tf.argmax(result))

if __name__ == "__main__":

lenet = LeNet5()

(X_train, y_train), (X_test, y_test) = lenet.load_data()

lenet.train(X_train, y_train)

lenet.predict(X_test)

5 评估

模型结构:

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d (Conv2D) (None, 24, 24, 6) 156

_________________________________________________________________

average_pooling2d (AveragePo (None, 12, 12, 6) 0

_________________________________________________________________

conv2d_1 (Conv2D) (None, 8, 8, 6) 906

_________________________________________________________________

average_pooling2d_1 (Average (None, 4, 4, 6) 0

_________________________________________________________________

flatten (Flatten) (None, 96) 0

_________________________________________________________________

dense (Dense) (None, 120) 11640

_________________________________________________________________

dense_1 (Dense) (None, 84) 10164

_________________________________________________________________

dense_2 (Dense) (None, 10) 850

=================================================================

Total params: 23,716

Trainable params: 23,716

Non-trainable params: 0

_________________________________________________________________

为了快速得到结果,设置epoch=1得到训练结果:

loss: 0.1207 - accuracy: 0.9625