- [Paper Reading] Persistent Homology Captures the Generalization of Neural Networks Without A Validation Set

开心星人

Paper reading

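The paper represents a neural network as a weighted acyclic graph and builds persistence diagrams (PDs) directly from the model's weight matrices. It then computes the distance between the PDs of the weight matrices at adjacent batches and compares this homological convergence with the trend in the network's validation accuracy. Abstract: Machine learning practitioners usually estimate a model's generalization error by monitoring certain metrics, and stop training before the training metrics converge to prevent overfitting. Typically, this error measure or task-specific metric is computed on a validation set (holdout set). Because this data is not used directly to update the model parameters, it is usually assumed that the model's performance on the validation set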

- [Paper Reading] A Real-Time All-Purpose Segmentation Model

万里守约

Paper reading, image segmentation, image processing, computer vision

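Contents: Introduction; 1. Paper overview; 2. Main method; 3. Problems addressed; 4. Innovations; Summary. Introduction: In computer vision, demand for real-time multi-purpose segmentation has been growing, especially across applications such as interactive segmentation, panoptic segmentation, and video instance segmentation. To address these challenges, this article introduces a new method, RMP-SAM (Real-Time Multi-Purpose Segment Anything), which aims at real-time multi-purpose segmentation. RMP-SAM combines dynamic convolution with an efficient model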

- Paper Reading: 2023 arXiv Multiscale Positive-Unlabeled Detection of AI-Generated Texts

CSPhD-winston-杨帆

Paper reading, artificial intelligence

Master index of large-model security research: https://blog.csdn.net/WhiffeYF/article/details/142132328

Multiscale Positive-Unlabeled Detection of AI-Generated Texts: https://arxiv.org/abs/2305.18149

https://www.doubao.com/chat/211427064915225

- Paper Reading Notes: MAGICDRIVE: STREET VIEW GENERATION WITH DIVERSE 3D GEOMETRY CONTROL

寻丶幽风

Paper reading notes, 3D, artificial intelligence, autonomous driving

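MagicDrive paper: MagicDrive achieves high-quality, multi-view-consistent 3D scene generation through multimodal fusion of 3D and text conditions and implicit view transformation. Geometric condition encoding: Cross-attention: for sequential data, suited to variable-length inputs such as text tokens and bounding boxes. Additive encoder branch: for grid-like regular data such as maps, it effectively preserves spatial structure. Text is built from a template: “A driving scene at {locatio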

- [Paper Reading] Availability Attacks Create Shortcuts

开心星人

Paper reading

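I need to reread this paper; there are parts I have not fully understood. Availability attacks add imperceptible perturbations to training data so that machine learning algorithms cannot exploit it, which prevents the data from being used without authorization. For example, a private company collected more than 3 billion face images without users' consent to build a commercial face recognition model. To address these concerns, many data poisoning attacks have been proposed to keep data from being learned by unauthorized deep models. They add imperceptible perturbations to the training data so that the model cannot learn much from it, which leads the model, on unseen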

- Description of a Poisson Imagery Super Resolution Algorithm: Paper Reading

青铜锁00

Paper reading, radar

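Description of a Poisson Imagery Super Resolution Algorithm. 1. Research goals and significance (1.1 Research goals; 1.2 Practical significance). 2. Innovative method and model (2.1 Core idea; 2.2 Key formulas and derivation: 2.2.1 Bayesian framework and probability model, 2.2.2 Optimization objective of MAP estimation, 2.2.3 Super-resolution parameter α; 2.3 Advantages over traditional methods). 3. Experimental validation and results (3.1 Experimental design; 3.2 Key results). 4. Future research directions in real-beam radar (4.1 Challenges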

- How Does DeepSeek Handle Multimodal Data (Text, Images, Video)?

借雨醉东风

Artificial intelligence

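Follow me for ongoing posts on logical thinking, management thinking, and interview questions. I offer interview coaching for major tech companies as well as customized coaching for job hunting, on-the-job growth, management, and architecture. Recommended column: “Learn to Program AI Large Models with ASP.NET in 10 Days”, now complete. For less than the cost of a barbecue dinner, you can keep up with the times: from ordinary websites to WeChat official accounts and mini programs, and on to AI large-model websites. It is packed with practical content, and once you finish you can take on side projects for extra income; well worth it. You will not only learn to program but also learn to apply AI to real problems, adding a valuable asset to your career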

- Common Questions About Multimodal Large Models

cv2016_DL

Multimodal large models, artificial intelligence, language models, natural language processing, machine learning, transformer

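1. When connecting a vision encoder to an LLM, is a complex adaptor like the Q-Former in BLIP-2 better, or a simple MLP like the one in LLaVA? Discuss the pros and cons of each. Q-Former (BLIP-2): Pros: its query mechanism effectively fuses visual and language features, so the model handles vision-language tasks better, and it performs especially well on multimodal reasoning tasks. Cons: the Q-Former structure is relatively complex and computationally expensive. MLP (LLaVA): Pros: the MLP is simple, and computation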

- An End-to-End NLP Framework (Haystack)

deepdata_cn

NLP, natural language processing, artificial intelligence

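Haystack is an end-to-end NLP framework built specifically for document-based question-answering systems, which makes it well suited to implementing RAG. It provides components for data preprocessing, document storage, retrieval, and generation, and supports a variety of language models and retrievers. It offers a visual interface for convenient configuration and debugging, supports multimodal data such as text and images, and is extensible, so custom components can be added as needed. In 2020, amid rapid progress in natural language processing and growing demand for efficient, easy-to-use, and flexible end-to-end NLP frameworks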

- CBNetV2: A Composite Backbone Network Architecture for Object Detection: Paper Reading

Laughing-q

Paper reading, deep learning, artificial intelligence, object detection, instance segmentation, transformer

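CBNetV2: A Composite Backbone Network Architecture for Object Detection, paper reading. Contents: Introduction; Method (CBNetV2; Fusion style; Supervision of the Assistant); Experiments (Comparison with SOTA; Generality across mainstream backbone architectures; Comparison with wider and deeper networks; Compatibility with deformable convolution; Applicability to mainstream detectors; Applicability to Swin Transformer; Ablation study). paper: https://arxi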

- A Comprehensive Guide to Improving YOLO (Part 2)

niuTaylor

YOLO improvements, YOLO algorithm

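Below is a systematic improvement framework for the YOLO family of algorithms. It combines cutting-edge techniques with cross-domain innovations and offers detailed improvement ideas intended to support high-level paper publications. The plan integrates innovations such as lightweight design, multimodal fusion, and dynamic feature optimization, and lays out verifiable experimental directions. I. An open-scene detection system driven by multimodal prompts. 1. Core innovation: a tri-modal prompt mechanism. Text prompt encoder: a lightweight text encoding network built on RepRTA (re-parameterizable region-text alignment) that maps natural-language descriptions to 128-dimensional semantic vectors. Visual prompt encoder: uses S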

- Tinyflow AI Workflow Orchestration Framework v0.0.7 Released

自不量力的A同学

Artificial intelligence

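There is currently no specific information about the release of the Tinyflow AI workflow orchestration framework v0.0.7. Tinyflow is a lightweight orchestration solution for AI agent workflows, designed around being “simple, flexible, and non-intrusive.” It is built on Web Components: the frontend can be integrated with any framework such as React or Vue, and the backend supports languages such as Java, Node.js, and Python, helping traditional applications move to AI quickly. The codebase is small and easy to learn, and it handles both simple task orchestration and complex multimodal reasoning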

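- Vector Retrieval, Retrieval-Augmented Generation (RAG), Large Language Models, and Related System Architecture: Typical Interview Questions with Brief Answers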

快撑死的鱼

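Algorithm Engineer's Handbook (essential for interviews and for learning the latest techniques), language models, system architecture, interviews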

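1. What is vector retrieval, and how does it differ from traditional keyword-based retrieval? Key points: vector retrieval maps data such as text, images, and audio to vectors and searches the high-dimensional vector space by similarity or distance. Compared with traditional keyword-based retrieval (such as inverted indexes), vector retrieval focuses on “semantics” or “features,” so it can find content that is semantically similar without sharing the same keywords. It is well suited to multimodal scenarios (e.g., searching images by image) and natural-language question answering (synonyms, contextual associations, etc.). 2. What is retrieval-augmented generation (RAG)? Core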
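The similarity search described in answer 1 can be sketched as an exact, brute-force scan. This is a minimal sketch, assuming toy 3-dimensional vectors that stand in for real embeddings (the answer names no encoder) and cosine similarity as the measure; `cosine_similarity`, `top_k`, and the corpus entries are hypothetical names chosen for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, corpus, k=2):
    """Exact nearest-neighbour search: score every stored vector, keep the k best."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in corpus.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical "embeddings"; a real system would produce them with an encoder model.
corpus = {
    "cat photo": [0.9, 0.1, 0.0],
    "kitten picture": [0.85, 0.15, 0.05],
    "stock report": [0.0, 0.2, 0.95],
}

if __name__ == "__main__":
    # "kitten picture" ranks high even though it shares no keyword with "cat photo".
    print(top_k([1.0, 0.1, 0.0], corpus, k=2))
```

The linear scan costs O(N) per query; production vector databases usually swap it for an approximate nearest-neighbour index, trading a little recall for speed.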

- [Paper Reading] PERSONALIZE SEGMENT ANYTHING MODEL WITH ONE SHOT

s1ckrain

Computer vision, paper reading, artificial intelligence

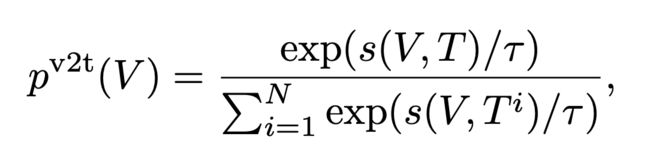

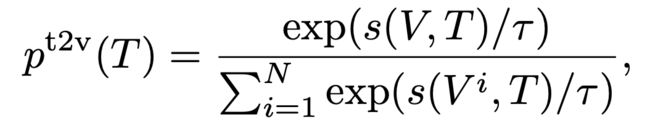

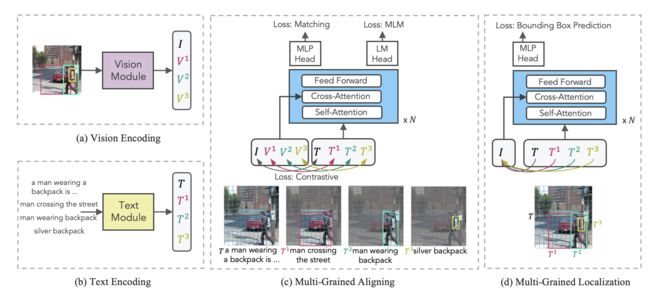

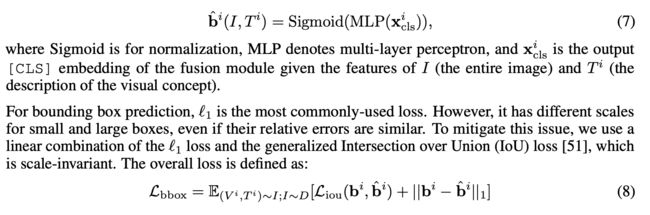

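PERSONALIZE SEGMENT ANYTHING MODEL WITH ONE SHOT. Summary of the original abstract. Background and problem: SAM is a powerful promptable framework pre-trained on large-scale data that has advanced the field of segmentation. Despite SAM's generality, customizing it for specific visual concepts without manual prompting (e.g., automatically segmenting a user's pet dog) remains underexplored. Proposed method: a training-free personalization approach for SAM, called PerSAM. With only one-shot data (a single image with a reference mask), it can, in new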

- 60-Day Advanced Study Plan for the PyTorch Deep Learning Framework - Day 28: Multimodal Models in Practice (Part 2)

凡人的AI工具箱

Deep learning, pytorch, learning, AI programming, artificial intelligence, python

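60-Day Advanced Study Plan for the PyTorch Deep Learning Framework - Day 28: Multimodal Models in Practice (Part 2). 5. Application scenarios for cross-modal retrieval systems. 5.1 Practical applications of image-text matching systems:

| Application domain | Specific scenarios | Advantages |
|---|---|---|
| E-commerce | Product image search, visual shopping | Users can upload a picture to find similar products, or describe a product in text to find it |
| Smart media | Content recommendation, image library search | Semantic understanding of content enables more precise recommendation and search |
| Social networks | Content-based post recommendation | Understands user interests and recommends more relevant content |
| Educational technology | Multimodal teaching resource retrieval | Teachers and students can more |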

- 60-Day Advanced Study Plan for the PyTorch Deep Learning Framework - Day 28: Multimodal Models in Practice (Part 1)

凡人的AI工具箱

Deep learning, pytorch, learning, AI programming, artificial intelligence, python

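60-Day Advanced Study Plan for the PyTorch Deep Learning Framework - Day 28: Multimodal Models in Practice (Part 1). Introduction: Crossing the boundaries of perception. Welcome to day 28 of our PyTorch learning journey! Today we step into one of the most exciting areas of AI: multimodal learning. Imagine a model that can both “see” and “read” and understand how images relate to text; what possibilities would that open up? Today we focus on building an image-text matching system and learn how to use CLIP (Contrastive Language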

- GS-SLAM Paper Reading Notes - MGSO

zenpluck

GS paper reading, paper reading notes

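Preface: The acronym MGSO blends Direct Sparse Odometry (DSO), the photometric SLAM system the authors build on, with Gaussian Splatting (GS). This should be the first Gaussian SLAM system to use DSO as its front end. I wonder whether this combination can beat ORB-SLAM3, and how DSO is modified to fit a Gaussian map; let's take a look! Contents: Preface; 1. Background; 2. Key content (2.1 SLAM module; 2.2 Dense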

- Hands-On Test of Gemini 2.0 Flash Image Generation: The Creative Limits of Multimodal AI

python

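Google recently released the experimental image generation feature of Gemini 2.0 Flash (Gemini 2.0 Flash (Image Generation) Experimental). I tried it right away and once again felt how disruptive AI is for traditional image-processing tools. This article covers its main features, installation, and application scenarios, and demonstrates its capabilities through real tests, in the hope of helping readers understand and use the tool. Introduction: Gemini 2.0 Flash's experimental image generation feature, in 20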

- Converged Computing-Power Innovation and Building a Multi-Scenario Application Ecosystem

智能计算研究中心

Other

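Overview: As the core driver of the digital economy, computing power is shifting from a single computing paradigm to converged architectures. With breakthroughs in underlying technologies such as heterogeneous computing and photonic computing, computing resources are gradually forming an intelligent supply system with cross-architecture collaboration and multimodal linkage, enabling efficiency gains in scenarios such as the industrial internet, medical imaging, and intelligent security. Meanwhile, frontier work in quantum and neuromorphic computing is reshaping the technical boundaries of scientific computing and real-time decision-making. The industry is advised to design computing scalability and security standards together and, through dynamic scheduling algorithms and distributed architecture optimization, build elastic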

- DeepSeek's Mixture-of-Experts Architecture Powers Intelligent Content Creation

智能计算研究中心

Other

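Overview: As AI technology iterates rapidly, DeepSeek's Mixture of Experts (MoE) architecture achieves a paradigm breakthrough in multimodal processing through a dynamic routing mechanism with 67 billion parameters. The architecture deeply integrates vision-language understanding, multilingual semantic parsing, and deep learning algorithms, building a three-dimensional capability matrix covering scenarios such as text generation, code writing, and academic research. Its core advantages lie in three dimensions: precise content production, where features such as intelligent topic selection and automatic literature review generation raise academic paper writing efficiency by more than 40%;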

- Hands-On Python Scraping of AI Training Data for the Financial Domain (Complete Technical Walkthrough)

海拥✘

python, finance, artificial intelligence

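Project background and requirements. Scenario: To train a multimodal large language model (LLM) covering global financial markets, the following data must be collected in real time: listed-company announcements from 30+ major stock exchanges worldwide (NYSE, NASDAQ, LSE, TSE, etc.); corporate financial-report PDFs and structured data; social-media sentiment data (Twitter, StockTwits); and news media analysis (Reuters, Bloomberg). Technical challenges: Geo-blocking: some exchanges (such as Japan's TSE) allow only domestic IPs to access historical data. Dynamic anti-scraping: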

- A Detailed Introduction to MiniCPM-V-2_6, a Multimodal Large Language Model Rivaling GPT-4V

我就是全世界

Language models, artificial intelligence, natural language processing

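MiniCPM-V-2.6 overview. 1.1 Model background: MiniCPM-V-2.6 is a GPT-4V-level multimodal large language model (MLLM) developed by OpenBMB. It is designed for single-image, multi-image, and video processing on phones, aiming to deliver efficient and accurate multimodal understanding and generation. As mobile devices spread and their computing power grows, users increasingly want to perform complex image and video processing on them. MiniCPM-V-2.6 was released to meet this need, offering a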

- Putting Open-Source Models into Practice - Trying Out Qwen - Calling Qwen2-7B-Instruct - Advanced (Part 12)

开源技术探险家

Open-source models - real-world deployment, #deep learning, natural language processing, language models

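I. Preface: After the previous five “Trying Out Qwen” articles, we are comfortable using the Qwen large model. Just a few days ago, however, Alibaba Cloud released Qwen2. Both its language models and its multimodal models were pre-trained on large-scale multilingual and multimodal data and then fine-tuned on high-quality data to align with human preferences. This article explains how to run model inference with the Transformers library (usage differs considerably from the Qwen1 series). Let's keep up and try the new model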

- The Development and Outlook of Image-to-Video Technology: From Technical Breakthroughs to Future Prospects

Liudef06

StableDiffusion, audio and video, artificial intelligence, deep learning, stablediffusion

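I. Current state of the technology: Image-to-video generation is an important branch of generative AI (AIGC); its core task is generating dynamic video sequences from one or more still images. In recent years, with advances in deep learning, multimodal fusion, and computing hardware, image-to-video technology has moved rapidly from basic research to commercial deployment. Early exploration and the GAN foundations: early image-to-video techniques were mainly based on generative adversarial networks (GANs), producing low-resolution video clips through adversarial training. For example, DeepMind's DVD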

- [Paper Reading] MMedPO: Aligning Medical Vision-Language Models with Clinical-Aware Multimodal Preference Optimization

勤奋的小笼包

Paper reading, language models, artificial intelligence, natural language processing, chatgpt

Contents: 1. Background; 2. Core problem; 3. Method; 3. Experimental results and advantages; 4. Technical contributions and significance; 5. Conclusion. MMedPO: Aligning Medical Vision-Language Models with Clinical-Aware Multimodal Preference Optimization. GitHub: link. 1.

- Fudan: Improving Multimodal Reasoning with Process Rewards

大模型任我行

Large models - model training, artificial intelligence, natural language processing, language models, paper notes

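Title: VisualPRM: An Effective Process Reward Model for Multimodal Reasoning. Source: arXiv, 2503.10291. Abstract: We introduce VisualPRM, an advanced multimodal process reward model (PRM) with 8B parameters that improves the reasoning ability of existing multimodal large language models (MLLMs) across different model scales and families through the Best-of-N (BoN) evaluation strategy. Specifically, our model improves three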
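Best-of-N selection with a process reward model can be sketched as: generate N candidate answers, have the PRM score each reasoning step, collapse the step scores into one number per answer, and keep the highest. This is a minimal sketch, assuming the step scores are already available as floats in [0, 1] (no real PRM is called here); how VisualPRM aggregates its step scores is not stated in this excerpt, so the `aggregate` parameter and all names below are hypothetical.

```python
from statistics import mean


def score_response(step_scores, aggregate=mean):
    """Collapse per-step PRM scores into a single response-level score."""
    return aggregate(step_scores)


def best_of_n(candidates, aggregate=mean):
    """candidates: list of (answer, [per-step scores]); return the best-scoring answer."""
    return max(candidates, key=lambda c: score_response(c[1], aggregate))[0]


# Hypothetical step scores for three sampled answers.
candidates = [
    ("answer A", [0.95, 0.95, 0.3]),  # strong early steps, weak final step
    ("answer B", [0.7, 0.7, 0.7]),    # consistently decent steps
    ("answer C", [0.4, 0.5, 0.6]),
]

if __name__ == "__main__":
    print(best_of_n(candidates))                 # averaging favours A
    print(best_of_n(candidates, aggregate=min))  # the weakest step decides: B
```

The aggregation choice matters: averaging lets a strong start hide one bad step, while taking the minimum penalizes any flawed step.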

- 21.11 “ChatGLM3-6B + Gradio Industrial-Grade Deployment: Multimodal Interaction + 60% Performance Optimization, Step-by-Step Production Deployment”

少林码僧

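AI large-model application practice column, artificial intelligence, gpt, language models, performance optimization, interaction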

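“ChatGLM3-6B + Gradio Industrial-Grade Deployment: Multimodal Interaction + 60% Performance Optimization, Step-by-Step Production Deployment.” Keywords: ChatGLM3-6B application development, Gradio interface integration, model interaction optimization, web service containerization, multimodal input support. Building a graphical interface for ChatGLM3-6B with Gradio: providing visual interaction with a large-model service through Gradio is a key step in deploying production-grade AI applications. This section explains in depth how to build an industrial-grade interaction interface for ChatGLM3-6B.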

- Dazhong Wenyi (Popular Literature and Art) Magazine, 2025 Issue 3: Table of Contents

QQ296078736

Artificial intelligence

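Public cultural services research: Strategies for improving grassroots audiences' music appreciation, Luo Wanlin; 1-3. Literary criticism: *Zengguang Xianwen*: an in-depth analysis of its wisdom about humanity, Yao Zhiqing; 4-6. The evolution of the military-culture outlook in military drama over the past 20 years from the perspective of contemporary warfare (2000-2023), Qiu Yuanwang; 7-9. The divided self and identity in Orwell's “Shooting an Elephant”, He Yuwei; 10-12. Lin'an gardens in Southern Song urban notes and their aesthetic significance, Zhang Kaige; 13-15. Museums and digitization research: Multimodal library systems and building a new-quality reading society from the perspectives of the digital age and media history, Lu Qin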

- 3DMAX Point Cloud Algorithm: Millimeter-Level BIM Model Deviation Detection (with Complete Code)

夏末之花

Artificial intelligence

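Abstract: Building on high-precision alignment of LiDAR point cloud data with BIM models, this article proposes a deviation detection method that combines dynamic voxelization with multimodal feature matching. Through point cloud preprocessing, semantic segmentation, model registration, and difference analysis, it achieves visual detection of millimeter-level deviations in building components. Key code is provided, covering point cloud processing, feature extraction, and deep learning model construction. I. Core algorithm pipeline. Point cloud preprocessing and feature enhancement: Denoising and downsampling: statistical filtering and voxel-grid downsampling remove outliers and reduce data volume. Semantic segmentation: based on PointNet++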
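Voxel-grid downsampling, the second half of the preprocessing step above, buckets points into cubes of side `voxel_size` and replaces each occupied cube with the centroid of its points. This is a minimal pure-Python sketch of that standard technique, assuming points arrive as `(x, y, z)` tuples; it is not the article's own code, and `voxel_downsample` is a hypothetical name. Real pipelines typically use a point cloud library for this.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Replace all points that fall into the same cubic voxel by their centroid."""
    buckets = defaultdict(list)
    for x, y, z in points:
        # Integer voxel index along each axis identifies the cube a point belongs to.
        key = (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        buckets[key].append((x, y, z))
    result = []
    for pts in buckets.values():
        n = len(pts)
        # zip(*pts) groups all x's, all y's, all z's; average each axis.
        result.append(tuple(sum(axis) / n for axis in zip(*pts)))
    return result


if __name__ == "__main__":
    cloud = [(0.0, 0.0, 0.0), (0.2, 0.0, 0.0), (1.5, 0.0, 0.0)]
    print(voxel_downsample(cloud, 1.0))  # the two points near the origin merge into one
```

A larger `voxel_size` removes more points but also blurs fine geometry, which matters when the goal is millimeter-level deviation detection.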

- The MMScan Dataset: The First and Largest Multimodal 3D Scene Dataset with Hierarchical Language Annotations

Datasets

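On 2024-10-24, Shanghai AI Laboratory, together with several universities, released MMScan, the largest multimodal 3D scene dataset to date, with hierarchical language annotations. The dataset not only advances research on 3D scene understanding but also provides a valuable resource for training and evaluating multimodal 3D perception models. I. Research background: With the rise of large language models (LLMs) and their integration with other data modalities, multimodal 3D perception has attracted growing attention because of its connection to the physical world and has progressed rapidly. However, existing datasets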

- When a Rust Pointer Is Returned from a Function, Is It Passed Directly, or Destroyed and Then Recreated?

wudixiaotie

Return values

I came up with this question myself and asked it on Zhihu, but before anyone answered, I thought of a way to test it myself...

fn main() {

let mut a = 34;

println!("a's addr:{:p}", &a);

let p = &mut a;

println!("p's addr:{:p}", &a

- Thinking in Java -- Data Initialization

百合不是茶

java, data initialization

1. Guaranteeing initialization with constructors

/*

* Create a Reck object in the ReckInitDemo class

*/

public class ReckInitDemo {

public static void main(String[] args) {

// Create a Reck object

new Reck();

}

}

- [Spaceflight and the Universe] Why Spacecraft Launches and Recoveries Have Scheduled Windows

comsci

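Earth's atmosphere contains a spacetime shielding layer that appears at irregular times. When this layer is present, any object entering from outer space is destroyed, and spacecraft launched from the ground into space are destroyed as well...

Therefore, spacecraft launches and recoveries must wait until this spacetime shielding layer has disappeared before they proceed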


- Batch-Replacing File Contents on Linux

商人shang

linux, replace

1. Ready-made material found online

Format: sed -i "s/search_string/replacement/g" `grep search_string -rl path`

Batch-replacing a string across multiple files with linux sed

sed -i "s/oldstring/newstring/g" `grep oldstring -rl yourdir`

For example, in all files under /home, replace www.admi
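The `grep -rl` + `sed -i` pipeline above first lists the files that contain the search string and then rewrites only those files in place. A rough Python equivalent is sketched below for cases where sed is unavailable; it is an assumption-laden sketch, not a drop-in replacement: it does literal (not regex) replacement, assumes UTF-8 text files, and silently skips files it cannot decode. `replace_in_tree` is a hypothetical name.

```python
import os
import tempfile


def replace_in_tree(root, old, new):
    """Rough equivalent of: sed -i "s/old/new/g" `grep old -rl root` (literal match only).
    Returns the paths of the files that were rewritten."""
    changed = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                # newline="" keeps the file's original line endings untouched.
                with open(path, encoding="utf-8", newline="") as f:
                    text = f.read()
            except (UnicodeDecodeError, OSError):
                continue  # skip binary or unreadable files, as grep -I would
            if old in text:  # like grep -l: only touch files that actually match
                with open(path, "w", encoding="utf-8", newline="") as f:
                    f.write(text.replace(old, new))
                changed.append(path)
    return changed


if __name__ == "__main__":
    # Small demonstration in a throwaway directory.
    with tempfile.TemporaryDirectory() as tmp:
        with open(os.path.join(tmp, "a.txt"), "w", encoding="utf-8") as f:
            f.write("visit www.old.example today")
        print(replace_in_tree(tmp, "www.old.example", "www.new.example"))
```

Unlike the shell version, this does not break when file names contain spaces, because paths are never split by the shell.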

- Online Weather Forecast on a Web Page

oloz

Weather forecast

Calling an online weather forecast from a web page

<%@ page language="java" contentType="text/html; charset=utf-8"

pageEncoding="utf-8"%>

<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 4.01 Transit

- SpringMVC vs. Struts2

杨白白

springMVC

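1. Entry point

The entry point of Spring MVC is a servlet, while that of Struts2 is a filter (note that filters and servlets are different things; I used to think a filter was a special kind of servlet). This gives the two frameworks different mechanisms, which comes down to the difference between servlets and filters.

See: http://blog.csdn.net/zs15932616453/article/details/8832343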

2

- refuse copy, lazy girl!

小桔子

copy

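“Little sister sits at the bow of the boat,” ahhh! I had planned to work through it bit by bit, and I had already written the basic features of the text editor... While looking things up today, I found that someone had already written a complete version. It made me realize clearly:

1. That is a level I feel I could not reach myself

2. If I used it directly, I could solve the problem very quickly

3. And then I would just be copying, right~~

4. How could I do that? I don't even feel like writing today, so I'll keep it as a reference! No wholesale copying; I'll write it slowly, bit by bit!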

- Integrating Apache and PHP

aichenglong

php apache web

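I. The Apache web server

1 Installing the Apache web server

1) Download the Apache web server

2) Configure a domain name (it must be registered in DNS if you want to use it)

3) Test the installation by visiting http://localhost/ to verify that it succeeded

2 Managing Apache

1) Graphical management via services.msc

2) Command-line management, config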

- Common Maven Built-in Variables

AILIKES

maven

Built-in properties

${basedir} represents the directory containing pom.xml

${version} equivalent to ${project.version} (deprecated: ${pom.version})

Pom/Project properties

Al

- Classes and Objects in Java

百合不是茶

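JAVA, object-oriented, class, object

Classes in Java:

Java is an object-oriented language: the core of solving a problem is to model it as a class and then use that class to solve it

Java creates a class with class ClassName. In Java, a constructor must have the same name as its class, and a public class must have the same name as its source file

Create a class A: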

class A{

}

Classes in Java: related attributes of a thing are wrapped together in one class, and then through

- Making Page Input Fields Read-Only with JS

bijian1013

JavaScript

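In web application development, create, delete, update, and query features are indispensable. To reduce future maintenance, we usually build just one page and use an incoming parameter to decide whether it is in add, edit, or view mode. Editing requires fetching the record from the backend and displaying it, which is essentially the viewing process; the only difference is that in edit mode every field on the page can be changed, while in view mode none can. The two can therefore be merged, but making every field on the view page read-only through front-end JS becomes tedious when there is a lot of information.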

- Interacting with the Server in AngularJS

bijian1013

JavaScriptAngularJS$http

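For AJAX applications (using XMLHttpRequest), the traditional way to send a request to the server is: get a reference to an XMLHttpRequest object, send the request, read the response, check the status code, and finally process the server's response. The whole process looks like this: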

var xmlhttp = new XMLHttpRequest();

xmlhttp.onreadystatechange

- [Maven Study Notes 8] Using Common Maven Plugins

bit1129

maven

Common plugins and their usage are documented at: http://maven.apache.org/plugins/

1. Jetty server plugin

2. Dependency copy plugin

3. Surefire Test plugin

4. Uber jar plugin

1. Jetty Pl

- [Hive 6] Hive User-Defined Functions (UDFs)

bit1129

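User-defined functions

1. What is a Hive UDF

Hive is a data warehouse built on Hadoop MapReduce that provides HQL queries. Hive is a very open system, and many parts of it can be customized by users, including:

File formats: Text File, Sequence File

In-memory data formats: Java Integer/String, Hadoop IntWritable/Text

User-supplied map/reduce scripts: no matter what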

- nginx.pid Lost After Killing the nginx Process: How to Restart nginx

ronin47

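nginx, restart, lost pid

The nginx process was shut down unexpectedly, and restarting it with nginx -s reload reports the following error: nginx: [error] open() “/var/run/nginx.pid” failed (2: No such file or directory). This happens because the pid file was lost when the nginx process was killed, so the next nginx -s reload cannot start it. Solution: nginx -s reload only tells the running ng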

- Why We Need Motion Design in UI Design

brotherlamp

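UI, ui tutorials, ui videos, ui resources, ui self-study

Led by major international brands such as Apple and Google, more and more Chinese companies have recently started paying attention to motion design. More teams now recognize how much motion matters to a product's user experience, and more UI designers are moving into the field.

But in the end, why do we need motion design? Or rather, what kind of motion design do we actually need? I have been doing motion design for a while, so I will use some cases to share my thinking on it, starting from the product itself.

I. Making the experience more comfortable

Simply put, it makes users enjoy using your product more.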

- DataSource Injection Issues with JdbcDaoSupport in Spring

bylijinnan

javaspring

See the following two articles:

http://www.mkyong.com/spring/spring-jdbctemplate-jdbcdaosupport-examples/

http://stackoverflow.com/questions/4762229/spring-ldap-invoking-setter-methods-in-beans-configuration

Sprin

- How Database Connection Pools Work

chicony

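Database connection pool

With the rapid development and wide application of information technology, databases play an increasingly important role in IT. In particular, the rapid growth of web applications and e-commerce relies on database technology to run dynamic websites. The traditional development model is: first open a database connection in the main program (such as a Servlet or Bean); then run SQL to query, modify, and delete objects in the database; and finally close the connection. With this development model,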
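The idea a connection pool replaces the open/query/close cycle with can be sketched as a fixed set of connections created once and then borrowed and returned. This is a minimal sketch, assuming a caller-supplied `factory` function that opens one connection (the demo uses an in-memory `sqlite3` database purely for illustration); `ConnectionPool`, `connection`, and `idle_count` are hypothetical names, and real pools add validation, resizing, and idle timeouts.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """Fixed-size pool: connections are opened once up front and then reused,
    instead of open -> query -> close on every request."""

    def __init__(self, factory, size=2):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())

    @contextmanager
    def connection(self, timeout=None):
        # Blocks while every connection is in use; raises queue.Empty after `timeout` seconds.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # hand it back to the pool instead of closing it

    def idle_count(self):
        return self._idle.qsize()


if __name__ == "__main__":
    pool = ConnectionPool(lambda: sqlite3.connect(":memory:"), size=2)
    with pool.connection() as conn:
        print(conn.execute("SELECT 1").fetchone())
    print(pool.idle_count())  # both connections are idle again
```

Because `queue.Queue` is thread-safe, several worker threads can share one pool; the `timeout` argument keeps a request from waiting forever when the pool is exhausted.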

- Java Keywords

CrazyMizzz

java

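Keywords are predefined identifiers with a special meaning, sometimes also called reserved words. Reserved words can only be used in the way the language prescribes; users cannot define them for their own purposes.

By function, Java keywords fall mainly into the following categories:

(1) Access modifiers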

public,private,protected

p

- Sorting Syntax in Hive

daizj

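Sorting, hive, order by, DISTRIBUTE BY, sort by

Sorting syntax in Hive, 2014.06.22. ORDER BY

Hive's ORDER BY statement is similar to SQL in relational databases. It sorts the entire query result globally, which means all the data is sent to a single Reduce task; with large data volumes, this takes a long time.

Unlike ORDER BY in databases, when hive.mapred.mode = strict, a LIMIT must be specified; otherwise the query fails with an error.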
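The difference between a global ORDER BY and the DISTRIBUTE BY / SORT BY pair named in the tags can be modeled in plain Python. This is a sketch of the semantics only, assuming rows of `(user_id, amount)` and modeling reducers as lists; Hive's real partitioner uses its own hash function, so which partition a key lands in here (Python's `hash`, which for small ints is the int itself) is an assumption, and the function names are hypothetical.

```python
def order_by(rows, key):
    """ORDER BY: every row goes to a single reducer, producing one globally sorted output."""
    return sorted(rows, key=key)


def distribute_sort_by(rows, dist_key, sort_key, num_reducers):
    """DISTRIBUTE BY dist_key SORT BY sort_key: rows are hash-partitioned across reducers,
    and each reducer sorts only its own partition (no global order across reducers)."""
    partitions = [[] for _ in range(num_reducers)]
    for row in rows:
        # Stand-in for Hive's partitioner: same distribute key -> same reducer.
        partitions[hash(dist_key(row)) % num_reducers].append(row)
    return [sorted(p, key=sort_key) for p in partitions]


rows = [(3, 10), (1, 5), (2, 7), (1, 2), (3, 1)]  # hypothetical (user_id, amount) rows

if __name__ == "__main__":
    print(order_by(rows, key=lambda r: r[1]))
    print(distribute_sort_by(rows, lambda r: r[0], lambda r: r[1], num_reducers=2))
```

The second result is sorted within each reducer only; concatenating the partitions does not give a globally sorted list, which is exactly why it scales better than a single-reducer ORDER BY.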

- The Singleton Design Pattern

dcj3sjt126com

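Design patterns

The Singleton pattern ensures that a class produces exactly one object. Its most common use is for database connections. Once the singleton object has been created, many other objects can use it.

<?php
class Example {
    // Holds the class instance in this property
    private static&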
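The same idea, one shared instance held in a static slot of the class, can be sketched in Python by overriding `__new__`. This is a minimal sketch, not a translation of the truncated PHP above; `Database`, `connect`, and the `connected` flag are hypothetical stand-ins for a real connection object. The lock covers the case where two threads call `Database()` at the same moment.

```python
import threading


class Database:
    """Singleton via __new__: every call to Database() returns the same object."""

    _instance = None               # plays the role of the private static property
    _lock = threading.Lock()

    def __new__(cls):
        if cls._instance is None:
            with cls._lock:                  # only one thread may create the instance
                if cls._instance is None:    # re-check after acquiring the lock
                    instance = super().__new__(cls)
                    instance.connected = False  # hypothetical state for a real connection
                    cls._instance = instance
        return cls._instance

    def connect(self):
        self.connected = True


if __name__ == "__main__":
    a, b = Database(), Database()
    a.connect()
    print(a is b, b.connected)  # both names refer to one shared object
```

State is set inside `__new__` rather than `__init__` on purpose: `__init__` would run again on every `Database()` call and reset the shared object.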

- svn locked

dcj3sjt126com

Lock

post-commit hook failed (exit code 1) with output:

svn: E155004: Working copy 'D:\xx\xxx' locked

svn: E200031: sqlite: attempt to write a readonly database

svn: E200031: sqlite: attempt to write a

- Learning ARM Registers

e200702084

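Data structures, C++, c, C#, F#

Whichever processor you study, the first thing to understand is its registers and operating modes.

ARM has 37 registers: 31 general-purpose registers and 6 status registers.

1. Unbanked registers (R0-R7)

Unbanked means that in every processor mode the name refers to the same physical register. When an exception or interrupt switches the processor mode, because the different modes share the same physical register under the same name, this means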

- Commonly Used Code Snippets

gengzg

Coding

List<UserInfo> list=GetUserS.GetUserList(11);

String json=JSON.toJSONString(list);

HashMap<Object,Object> hs=new HashMap<Object, Object>();

for(int i=0;i<10;i++)

{

- Processes vs. Threads

hongtoushizi

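Threads, linux, processes

We have introduced multiprocessing and multithreading, the two most common ways to implement multitasking. Now let's discuss the pros and cons of each.

First, multitasking is usually designed as a Master-Worker pattern: the Master assigns tasks and the Workers execute them. So in a multitasking setup there is typically one Master and several Workers.

If Master-Worker is implemented with multiple processes, the main process is the Master and the other processes are the Workers.

If it is implemented with multiple threads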
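The Master-Worker structure described above can be sketched with threads and two queues: the Master puts tasks on one queue, Workers pull from it and push results onto another. This is a minimal sketch, assuming a toy task (squaring numbers) and a `None` sentinel as the stop signal; `master` and `worker` are hypothetical names. A multiprocessing version keeps the same shape with `multiprocessing.Process` and `multiprocessing.Queue`, which is usually preferable for CPU-bound work in CPython because threads share the GIL.

```python
import queue
import threading


def worker(tasks, results):
    """Worker: keep taking tasks until the Master sends the None stop signal."""
    while True:
        n = tasks.get()
        if n is None:
            break
        results.put((n, n * n))  # the "work": square the number


def master(numbers, num_workers=3):
    """Master: start the Workers, hand out tasks, then collect the results."""
    tasks, results = queue.Queue(), queue.Queue()
    threads = [threading.Thread(target=worker, args=(tasks, results)) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for n in numbers:
        tasks.put(n)
    for _ in threads:
        tasks.put(None)  # one stop signal per Worker
    for t in threads:
        t.join()         # after this, every result is already in the queue
    collected = []
    while not results.empty():
        collected.append(results.get())
    return dict(collected)


if __name__ == "__main__":
    print(master([1, 2, 3, 4]))
```

Results may arrive in any order, so they are keyed by input; that ordering freedom is inherent to Master-Worker, whichever of processes or threads does the work.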

- Linux Scheduled Jobs: The Difference Between crontab -e and /etc/crontab

Josh_Persistence

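linux, crontab

I. In Linux, the time specification in a crontab has only 5 fields: * * * * *

They are minute, hour, day of month, month, and day of week. Specifically:

Field 1: minute, 0-59

Field 2: hour, 0-23

Field 3: day of month, 1-31

Field 4: month, 1-12

Field 5: day of week, 0-6, where 0 means Sunday

For example:

*/1 * * * * runs once every minute.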

*
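How the five fields combine can be made concrete with a small matcher that checks whether a given time satisfies an expression. This is a simplified sketch, assuming only the `*`, `*/n`, `a-b`, `a-b/n`, number, and comma-list forms; it does not handle month or weekday names, and it ANDs day-of-month with day-of-week, whereas real cron ORs them when both fields are restricted. `field_matches` and `cron_matches` are hypothetical names.

```python
from datetime import datetime


def field_matches(field, value, low, high):
    """Does one cron field (e.g. '*', '*/15', '1-5', '0,30') match value?"""
    for part in field.split(","):
        step = 1
        if "/" in part:
            part, step_text = part.split("/")
            step = int(step_text)
        if part == "*":
            start, end = low, high
        elif "-" in part:
            start, end = (int(x) for x in part.split("-"))
        else:
            start = end = int(part)
        if start <= value <= end and (value - start) % step == 0:
            return True
    return False


def cron_matches(expr, when):
    """Check a 5-field expression: minute hour day-of-month month day-of-week."""
    minute, hour, dom, month, dow = expr.split()
    cron_dow = (when.weekday() + 1) % 7  # Python: Monday=0; cron: Sunday=0
    return (field_matches(minute, when.minute, 0, 59)
            and field_matches(hour, when.hour, 0, 23)
            and field_matches(dom, when.day, 1, 31)
            and field_matches(month, when.month, 1, 12)
            and field_matches(dow, cron_dow, 0, 6))


if __name__ == "__main__":
    # 2024-01-07 was a Sunday.
    print(cron_matches("0 12 * * 0", datetime(2024, 1, 7, 12, 0)))
```

Note the weekday conversion: Python's `weekday()` counts from Monday, while the fifth cron field counts from Sunday as 0, as the list above states.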

- The KMP Algorithm Explained

hm4123660

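Data structures, C++, algorithms, strings, KMP

Most of us have run into string pattern matching, and we usually reach for naive matching (the Brute-Force algorithm), whose basic idea is to compare characters one by one. It is the method everyone knows and it is easy to understand, but it is not very efficient.

Suppose the main string is s="ababcabcacbab" and the pattern string is t="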
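Where brute force backs up in the main string after every mismatch, KMP precomputes, for each prefix of the pattern, the length of its longest proper prefix that is also a suffix, and uses it to shift the pattern without ever moving backwards in the main string. Below is a minimal sketch of the standard algorithm; since the pattern in the original example is cut off, the demo uses `abcac` purely as an assumed pattern, and `prefix_function` and `kmp_search` are hypothetical names.

```python
def prefix_function(pattern):
    """pi[i] = length of the longest proper prefix of pattern[:i+1] that is also its suffix."""
    pi = [0] * len(pattern)
    k = 0
    for i in range(1, len(pattern)):
        while k > 0 and pattern[i] != pattern[k]:
            k = pi[k - 1]          # fall back to the next shorter border
        if pattern[i] == pattern[k]:
            k += 1
        pi[i] = k
    return pi


def kmp_search(text, pattern):
    """Start indices of all occurrences of pattern in text, in O(len(text) + len(pattern))."""
    if not pattern:
        return list(range(len(text) + 1))
    pi = prefix_function(pattern)
    matches, k = [], 0               # k = number of pattern characters currently matched
    for i, ch in enumerate(text):
        while k > 0 and ch != pattern[k]:
            k = pi[k - 1]            # reuse the matched prefix; i never moves backwards
        if ch == pattern[k]:
            k += 1
        if k == len(pattern):
            matches.append(i - k + 1)
            k = pi[k - 1]            # keep going to find overlapping matches
    return matches


if __name__ == "__main__":
    print(prefix_function("abcac"))
    print(kmp_search("ababcabcacbab", "abcac"))  # "abcac" is an assumed pattern
```

The total work is linear because `k` can only decrease as many times as it has increased, so the inner `while` loops add at most O(n) steps overall.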

- The Singleton Pattern with an Enum Type

zhb8015

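Singleton pattern

E. Write an enum type with a single element [highly recommended]. The code is as follows:

public enum MaYun {
    himself; // Defines one enum element, which represents the single instance of MaYun
    private String anotherField;
    MaYun() {
        // What MaYun does when it is created
        // This method can also be removed; put the construction-time work where the instance is assigned:
        /** himself = MaYun() {*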
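Python's `enum.Enum` offers an analogous guarantee: each member is created exactly once when the class is defined, and lookups always return that same object. This is a minimal sketch of the analogue, not a port of the truncated Java above; the member name `HIMSELF` and the `greet` method are hypothetical. Java's enum singleton additionally relies on JVM-specific guarantees around serialization and reflection, which do not carry over one-to-one.

```python
from enum import Enum


class MaYun(Enum):
    """Single-member enum: Python creates each member exactly once, so HIMSELF is a singleton."""

    HIMSELF = "himself"

    def greet(self):
        # Members can carry behaviour like ordinary objects.
        return f"{self.value} says hello"


if __name__ == "__main__":
    # Every way of reaching the member yields the same object.
    print(MaYun.HIMSELF is MaYun("himself") is MaYun["HIMSELF"])
    print(MaYun.HIMSELF.greet())
```

Attempting `MaYun("someone else")` raises `ValueError`, so no second instance can be produced by value lookup.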

- Kafka+Storm+HDFS

ssydxa219

storm

cd /myhome/usr/storm

bin/storm nimbus &

bin/storm supervisor &

bin/storm ui &

Kafka+Storm+HDFS integration in practice

kafka_2.9.2-0.8.1.1.tgz

apache-storm-0.9.2-incubating.tar.gz

Kafka installation and configuration: we use 3 machines to build the Kafk

- Getting the Local Server's IP in Java

中华好儿孙

java, Web, getting the server IP address

System.out.println("getRequestURL:"+request.getRequestURL());

System.out.println("getLocalAddr:"+request.getLocalAddr());

System.out.println("getLocalPort:&quo