Flink CDC 笔记

- 开源地址:https://github.com/ververica/flink-cdc-connectors

- CDC (change data capture:变更数据获取),检测并捕获数据库的变动(包括数据或数据表的插入、更新及删除等),直接读取全量数据和增量变更数据的 source 组件。

CDC的种类

MySQL配置

- 修改配置文件

sudo vim /etc/my.cnf

# 数据库id

server-id = 1

# 启动binlog

log-bin = mysql-bin

# binlog类型

binlog_format = row

# 启动binlog的数据库

binlog-do-db = test_binlog

-

登入MySQL查看binlog

mysql -uroot -p000000

show master status; -

重启MySQL

sudo systemctl restart mysqld -

登入MySQL查看binlog

mysql -uroot -p000000

show master status;

POM文件

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>test flink cdc</groupId>

<artifactId>test flink cdc</artifactId>

<version>1.0-SNAPSHOT</version>

<dependencies>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>1.13.0</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_2.12</artifactId>

<version>1.12.0</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_2.12</artifactId>

<version>1.12.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>3.1.3</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.49</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner-blink_2.12</artifactId>

<version>1.12.0</version>

</dependency>

<dependency>

<groupId>com.ververica</groupId>

<artifactId>flink-connector-mysql-cdc</artifactId>

<version>2.0.0</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.75</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<configuration>

<source>1.8</source>

<target>1.8</target>

</configuration>

</plugin>

</plugins>

</build>

</project>

Flink datastream方式

package com.fink_cdc.test;

import com.ververica.cdc.connectors.mysql.MySqlSource;

import com.ververica.cdc.connectors.mysql.table.StartupOptions;

import com.ververica.cdc.debezium.DebeziumSourceFunction;

import com.ververica.cdc.debezium.StringDebeziumDeserializationSchema;

import org.apache.flink.api.common.restartstrategy.RestartStrategies;

import org.apache.flink.runtime.state.filesystem.FsStateBackend;

import org.apache.flink.streaming.api.CheckpointingMode;

import org.apache.flink.streaming.api.environment.CheckpointConfig;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class flink_cdc_datastream {

public static void main(String[] args) throws Exception {

// 1 创建Flink 运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 设置并行

env.setParallelism(1);

// 2 开启检查点

// Flink-CDC将读取binlog的位置信息以状态的方式保存在CK,如果想要做到断点续传,需要从Checkpoint或Savepoint启动程序

// 2.1 开启Checkpoint,每隔5秒钟做一次CK

env.enableCheckpointing(3000L, CheckpointingMode.EXACTLY_ONCE);

// 2.2 并制定CK的一致性语义

env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);

// 2.3 设置超时时间为1分钟

env.getCheckpointConfig().setCheckpointTimeout(60 * 1000L);

// 2.4 设置两次重启的最小时间间隔

env.getCheckpointConfig().setMinPauseBetweenCheckpoints(3000L);

// 2.5 设置任务关闭的时候保留最后一次 CK 数据

env.getCheckpointConfig().enableExternalizedCheckpoints(

CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);

// 2.6 指定从 CK 自动重启策略

env.setRestartStrategy(RestartStrategies.fixedDelayRestart(3, 2000L));

// 2.7 设置状态后端

env.setStateBackend(new FsStateBackend("hdfs://hadoop102:8020/flinkCDC"));

// 2.8 设置访问HDFS的用户名

System.setProperty("HADOOP_USER_NAME", "link999");

// 3 创建MySQLsource

DebeziumSourceFunction<String> mysqlsource = MySqlSource.<String>builder()

.hostname("hadoop102")

.port(3306)

.databaseList("test_binlog")

.tableList("test_binlog.test_table_binlog")

.username("root")

.password("000000")

.deserializer(new StringDebeziumDeserializationSchema())

.startupOptions(StartupOptions.initial())

.build();

//4.使用 CDC Source 从 MySQL 读取数据

env.addSource(mysqlsource).print();

//5.执行任务

env.execute();

}

}

Flink sql方式

package com.fink_cdc.test;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

public class flink_cdc_sql {

public static void main(String[] args) throws Exception {

// 创建流处理环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 创建表执行环境

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 创建动态表

tableEnv.executeSql("CREATE TABLE TEST_TABLE_BINLOG1 (" +

"id INT," +

"name STRING," +

"age INT" +

") WITH (" +

"'connector' = 'mysql-cdc'," +

"'hostname' = 'hadoop102'," +

"'port' = '3306'," +

"'username' = 'root'," +

"'password' = '000000'," +

"'database-name' = 'test_binlog'," +

"'table-name' = 'test_table_binlog'" +

")");

// sql查询

tableEnv.executeSql("select * from TEST_TABLE_BINLOG1").print();

// 创建执行

env.execute();

}

}

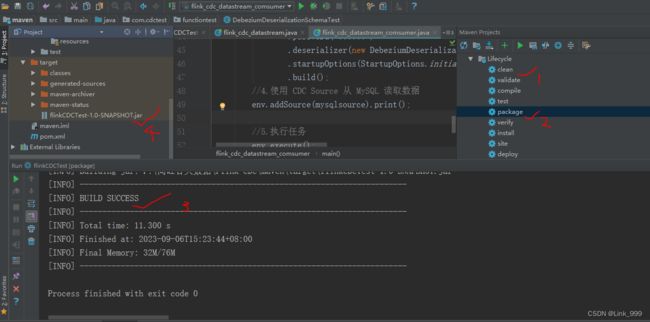

集群提交

# 启动hadoop集群

# 开启zk

启动 Flink 集群

[atguigu@hadoop102 flink-standalone]$ bin/start-cluster.sh

启动程序

bin/flink run -m hadoop102:8081 -c com.cdctest /opt/module/flink-1.13.6/testdatajar/flinkCDCTest-1.0-SNAPSHOT.jar

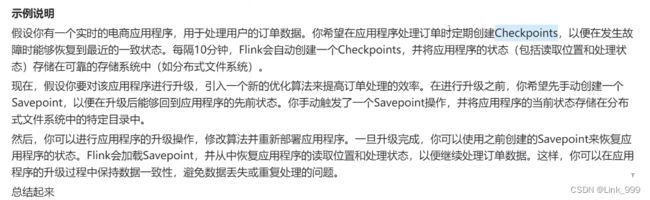

给当前的 Flink 程序创建 Savepoint

[atguigu@hadoop102 flink-standalone]$ bin/flink savepoint JobId hdfs://hadoop102:8020/flink/save

关闭程序以后从 Savepoint 重启程序

[atguigu@hadoop102 flink-standalone]$ bin/flink run -s hdfs://hadoop102:8020/flink/save/... -c

com.atguigu.FlinkCDC flink-1.0-SNAPSHOT-jar-with-dependencies.jar

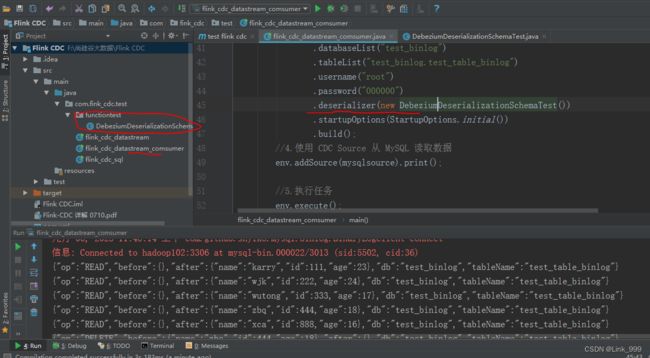

自定义反序列化器

package com.fink_cdc.test.functiontest;

import com.alibaba.fastjson.JSONObject;

import com.ververica.cdc.debezium.DebeziumDeserializationSchema;

import io.debezium.data.Envelope;

import org.apache.flink.api.common.typeinfo.BasicTypeInfo;

import org.apache.flink.api.common.typeinfo.TypeInformation;

import org.apache.flink.util.Collector;

import org.apache.kafka.connect.data.Field;

import org.apache.kafka.connect.data.Schema;

import org.apache.kafka.connect.data.Struct;

import org.apache.kafka.connect.source.SourceRecord;

import scala.annotation.meta.field;

import java.util.List;

public class DebeziumDeserializationSchemaTest implements DebeziumDeserializationSchema<String> {

/**

*{

* "db":"",

* "tablrName":"",

* "before":{"id":"111"},

* "after":{"id":"111"},

* "op":"",

*}

*/

@Override

public void deserialize(SourceRecord sourceRecord, Collector<String> collector) throws Exception {

// 创建JSON对象用于封装结果数据

JSONObject result = new JSONObject();

// 获取库名和表名

String topic = sourceRecord.topic();

String[] fields = topic.split("\\.");

result.put("db", fields[1]);

result.put("tableName", fields[2]);

// 获取before数据

Struct value = (Struct) sourceRecord.value();

Struct before = value.getStruct("before");

JSONObject beforejson = new JSONObject();

if (before != null) {

// 获取列信息

Schema schema = before.schema();

List<Field> fieldsList = schema.fields();

for (Field field : fieldsList) {

beforejson.put(field.name(), before.get(field));

}

}

result.put("before", beforejson);

// 获取after数据

Struct after = value.getStruct("after");

JSONObject afterjson = new JSONObject();

if (after != null) {

// 获取列信息

Schema schema = after.schema();

List<Field> fieldsList = schema.fields();

for (Field field : fieldsList) {

afterjson.put(field.name(), after.get(field));

}

}

result.put("after", afterjson);

// 获取op数据

Envelope.Operation operation = Envelope.operationFor(sourceRecord);

result.put("op", operation);

// 输出数据

collector.collect(result.toJSONString());

}

@Override

public TypeInformation<String> getProducedType() {

return BasicTypeInfo.STRING_TYPE_INFO;

}

}

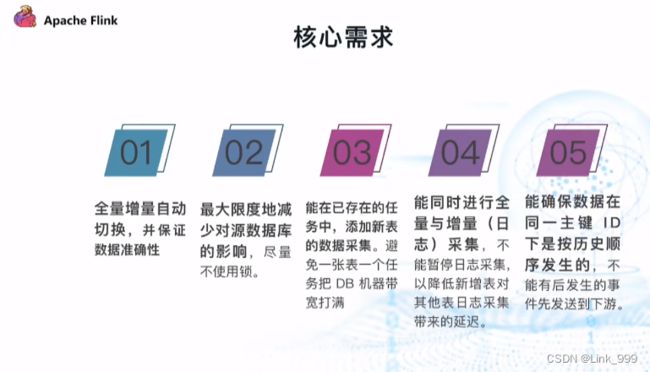

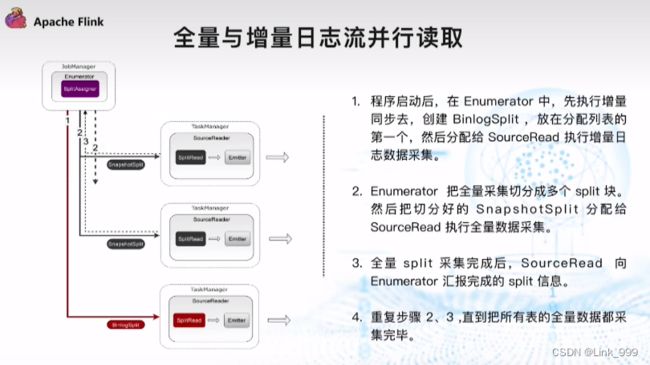

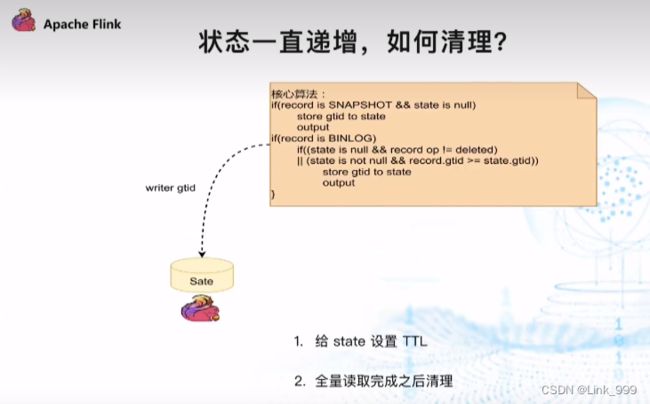

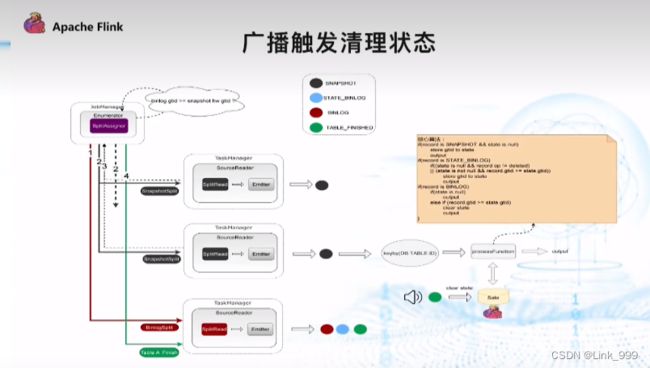

2.0版本新增特性

1.Chunk 切分;2.Chunk 分配;(实现并行读取数据&CheckPoint)

3.Chunk 读取;(实现无锁读取)

4.Chunk 汇报;

5.Chunk 分配。

总结

- Flink datastream可以监控多库多表,Flink sql 只能监控一张表

- Flink datastream适用于1.2和1.3,Flink sql只适用于1.3

- Flink datastream要自定义反序列化器得到想要的数据,Flink sql不用自定义

问题记录

1、JDK版本问题

- 主要是jdk版本问题,此处有两个原因,一个是编译版本不匹配,一个是当前项目jdk版本不支持。

- 问题解决:https://blog.csdn.net/weixin_41219529/article/details/120154838

2、Flink版本和CDC版本问题

- Flink 1.2升级到1.3(datastream适合用1.2,sql适合用1.3)

- Caused by: java.lang.NoSuchMethodError: org.apache.flink.table.factories.DynamicTableFactory$Context.getCatalogTable()Lorg/apache/flink/table/catalog/ResolvedCatalogTable;

3、Flink提交作业问题

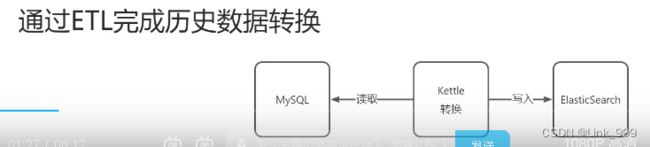

拓展

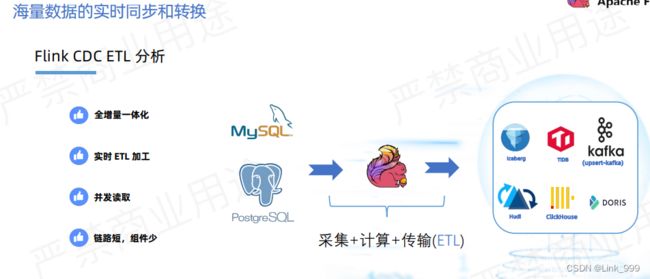

十亿级表Flink CDC如何同步

- 先全量后增量,binlog保留7天或2-3天都可以