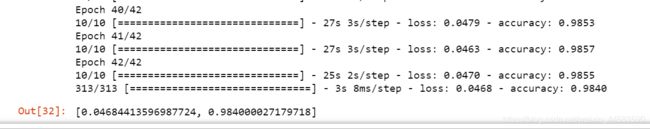

20210329 LeNet-5 Digit Recognition, TensorFlow 2.0, MNIST Dataset

LeNet-5 Digit Recognition with TensorFlow 2.0

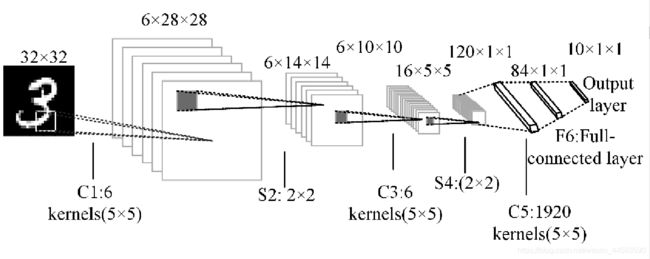

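LeNet-5 consists of two convolutional layers, two pooling layers, and two fully connected layers followed by an output layer. Each convolutional layer uses 5×5 filters: the first layer has 6 filters (one channel each, since the input is grayscale) and the second layer has 16. Each convolution is followed by a Sigmoid activation and 2×2 average pooling.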
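As a rough check of the architecture described above, the sketch below walks through the output shape and parameter count of each layer. It assumes a 32×32×1 input (the size this post pads MNIST images to), unpadded convolutions with stride 1, 2×2 pooling with stride 2, and dense layers of 120, 84 and 10 units as in the code that follows. The helper names `conv_out` and `lenet5_summary` are made up for illustration.

```python
# Shape and parameter bookkeeping for the LeNet-5 variant used in this post.
# Assumptions: 32x32x1 input, 'valid' convolutions (stride 1), 2x2 pooling (stride 2).

def conv_out(size, kernel, stride=1):
    """Spatial size after an unpadded convolution or pooling window."""
    return (size - kernel) // stride + 1


def lenet5_summary(input_size=32, in_channels=1):
    """Return a list of (layer name, output shape, parameter count)."""
    rows = []
    size, channels = input_size, in_channels
    for name, filters in (("conv1", 6), ("conv2", 16)):
        size = conv_out(size, 5)
        # Each filter has 5*5*channels weights plus one bias.
        params = 5 * 5 * channels * filters + filters
        channels = filters
        rows.append((name, (size, size, channels), params))
        size = conv_out(size, 2, stride=2)
        rows.append((name.replace("conv", "pool"), (size, size, channels), 0))
    features = size * size * channels  # length of the flattened vector
    for name, units in (("fc1", 120), ("fc2", 84), ("output", 10)):
        rows.append((name, (units,), features * units + units))
        features = units
    return rows


summary = lenet5_summary()
for name, shape, params in summary:
    print(f"{name:7s} {str(shape):14s} {params:6d}")
total_params = sum(p for _, _, p in summary)
print("total parameters:", total_params)
```

The total should agree with the figure that `model.summary()` reports for the model built later in this post.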

# Implementation of LeNet-5 in keras

# [LeCun et al., 1998. Gradient based learning applied to document recognition]

# Some minor changes are made to the architecture like using ReLU activation instead of

# sigmoid/tanh, max pooling instead of avg pooling and softmax output layer

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

from tensorflow import keras

# Load the data

(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()

# Inspect the data

print(x_train.shape)

plt.imshow(x_train[1000])

print(y_train[1000])

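# Preprocess the data; the dimensions must match what the model expects

x_train = x_train.reshape((x_train.shape[0],28,28,1)).astype('float32')

x_test = x_test.reshape((x_test.shape[0],28,28,1)).astype('float32') # reshape((-1,28,28,1)) also works; -1 means that dimension is inferred from the others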

x_train = x_train/255

x_test = x_test/255

x_train = np.pad(x_train, ((0,0),(2,2),(2,2),(0,0)), 'constant')

x_test = np.pad(x_test, ((0,0),(2,2),(2,2),(0,0)), 'constant')

print(x_train.shape)  # now (60000, 32, 32, 1)
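To make the `np.pad` call above easier to read, here is a small sketch on a stand-in batch. The pad-width tuple holds one (before, after) pair per axis, so only the height and width axes grow, from 28 to 32. The array `batch` is synthetic and only serves as an illustration.

```python
import numpy as np

# Stand-in batch: 2 images of 28x28x1, all ones so the zero padding is easy to spot.
batch = np.ones((2, 28, 28, 1), dtype="float32")

# One (before, after) pair per axis: batch, height, width, channel.
pad_widths = ((0, 0), (2, 2), (2, 2), (0, 0))
padded = np.pad(batch, pad_widths, mode="constant")  # constant_values defaults to 0

print(padded.shape)          # (2, 32, 32, 1)
print(padded[0, :3, :3, 0])  # top-left corner: two rows/columns of zeros, then the image
```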

# Sequential model: single input, single output

model = keras.Sequential()

from tensorflow.keras.layers import Conv2D

from tensorflow.keras.layers import MaxPooling2D

from tensorflow.keras.layers import Flatten

from tensorflow.keras.layers import Dense

#Layer 1

#Conv Layer 1

model.add(Conv2D(filters = 6, kernel_size = 5, strides = 1, activation = 'relu', input_shape = (32,32,1)))

#Pooling layer 1

model.add(MaxPooling2D(pool_size = 2, strides = 2))

#Layer 2

#Conv Layer 2

# input_shape is only needed on the first layer; later layers infer it

model.add(Conv2D(filters = 16, kernel_size = 5, strides = 1, activation = 'relu'))

#Pooling Layer 2

model.add(MaxPooling2D(pool_size = 2, strides = 2))

#Flatten

model.add(Flatten())

#Layer 3

#Fully connected layer 1

model.add(Dense(units = 120, activation = 'relu'))

#Layer 4

#Fully connected layer 2

model.add(Dense(units = 84, activation = 'relu'))

#Layer 5

#Output Layer

model.add(Dense(units = 10, activation = 'softmax'))

model.compile(optimizer = 'adam', loss=keras.losses.SparseCategoricalCrossentropy(), metrics = ['accuracy'])

model.summary()
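The model is compiled with `SparseCategoricalCrossentropy`, which takes integer class labels (as `y_train` provides) rather than one-hot vectors. The NumPy sketch below is not the Keras implementation. It only illustrates the quantity being averaged, the negative log of the probability assigned to the true class, and shows that it matches the one-hot form. The function name, the `eps` clipping value and the toy data are assumptions for illustration.

```python
import numpy as np


def sparse_categorical_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean of -log(probability assigned to the true class)."""
    y_prob = np.clip(y_prob, eps, 1.0)  # avoid log(0); eps is an assumed value
    picked = y_prob[np.arange(len(y_true)), y_true]
    return float(-np.log(picked).mean())


# Toy example: 2 samples, 3 classes, integer labels.
labels = np.array([2, 0])
probs = np.array([[0.1, 0.2, 0.7],
                  [0.5, 0.3, 0.2]])
loss = sparse_categorical_crossentropy(labels, probs)

# The same value computed from one-hot labels (categorical cross-entropy).
one_hot = np.eye(3)[labels]
loss_one_hot = float(-(one_hot * np.log(probs)).sum(axis=1).mean())

print(loss, loss_one_hot)
```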

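# Train the model

# Note: steps_per_epoch=10 limits each epoch to 10 batches; remove it to use the full training set every epoch

historyLeNet = model.fit(x_train, y_train, steps_per_epoch=10, epochs=42)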
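The `fit` call above sets `steps_per_epoch=10` and leaves `batch_size` at its Keras default of 32 for array inputs, so it helps to work out how much data one epoch actually covers. A minimal arithmetic sketch follows. The two helper functions are hypothetical, and 60000 is the size of the MNIST training set.

```python
import math


def samples_per_epoch(steps_per_epoch, batch_size=32):
    """Number of training samples consumed in one epoch."""
    return steps_per_epoch * batch_size


def steps_for_full_pass(num_samples, batch_size=32):
    """Steps needed for one epoch to cover every sample once."""
    return math.ceil(num_samples / batch_size)


seen = samples_per_epoch(10)       # with steps_per_epoch=10
full = steps_for_full_pass(60000)  # one full pass over the MNIST training set

print(seen, full)  # 320 1875
```

With these settings each epoch sees only a small slice of the training set, so leaving out `steps_per_epoch` or using a larger `batch_size` may be worth trying if accuracy is lower than expected.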

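# Save the model (this method is the more common one; the SavedModel format, which is better suited to deployment, is also worth considering)

model.save('LeNet_5.h5') # Any file name works, but it must have the .h5 suffix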

# Evaluate the model

model.evaluate(x_test, y_test)

# Import the required libraries

from tensorflow import keras

import tensorflow.keras.layers as layers

import tensorflow as tf

import matplotlib.pyplot as plt

import numpy as np

import cv2

# Load the model

model=tf.keras.models.load_model('LeNet_5.h5')

# Load your own data (grab an image from Baidu or take a screenshot somewhere, then read and preprocess it)

def output(y_pre, y):

    temp = np.argmax(y_pre)

    print('Predicted: ' + str(temp))

    print('Actual: ' + str(y))

    if str(temp) == str(y):

        print('Prediction correct')

    else:

        print('Prediction incorrect')
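The `output` helper above calls `np.argmax` on the whole prediction array, which works because `predict` is given a single image. For a batch of predictions the argmax has to be taken per row. The sketch below shows that variant and an accuracy computed from it. The function names and the toy probabilities are made up for illustration.

```python
import numpy as np


def predicted_labels(y_prob):
    """Index of the highest probability in each row (one row per sample)."""
    return np.argmax(y_prob, axis=1)


def accuracy(y_prob, y_true):
    """Fraction of samples whose predicted label equals the true label."""
    return float(np.mean(predicted_labels(y_prob) == np.asarray(y_true)))


# Toy softmax outputs for 3 samples and 3 classes.
fake_probs = np.array([[0.1, 0.8, 0.1],
                       [0.6, 0.3, 0.1],
                       [0.2, 0.2, 0.6]])
fake_true = [1, 0, 1]

print(predicted_labels(fake_probs))     # [1 0 2]
print(accuracy(fake_probs, fake_true))  # 2 of 3 correct
```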

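def readnum(path):  # Read your own digit image for prediction

    img = cv2.imread(path, 0)  # Read as grayscale

    if img is None:  # cv2.imread returns None when the file cannot be read

        raise FileNotFoundError(path)

    # plt.imshow(img)  # Inspect the image

    img = cv2.resize(img, (28, 28))

    # cv2.waitKey(0)

    # cv2.destroyAllWindows()

    img = np.array(img)

    img = img.reshape((-1, 28, 28, 1)).astype('float32')

    img = 1 - img / 255.0  # Invert, because my digit image has the opposite colors to MNIST

    return img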
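The inversion step in `readnum` matters because MNIST digits are light strokes on a dark background, while a screenshot of handwriting is often the opposite. The sketch below reproduces the scaling, inversion and reshaping on a synthetic image, without OpenCV, so the effect can be checked in isolation. The function `to_mnist_style` and the `canvas` array are hypothetical stand-ins, not part of the original script.

```python
import numpy as np


def to_mnist_style(gray_uint8, invert=True):
    """Scale a 28x28 uint8 image to [0, 1], optionally invert it, add batch and channel axes."""
    img = np.asarray(gray_uint8, dtype="float32") / 255.0
    if invert:
        img = 1.0 - img  # dark-on-light becomes light-on-dark
    return img.reshape((-1, 28, 28, 1))


# Synthetic input: white background (255) with a black vertical stroke (0).
canvas = np.full((28, 28), 255, dtype=np.uint8)
canvas[4:24, 13:15] = 0

x = to_mnist_style(canvas)
print(x.shape)          # (1, 28, 28, 1)
print(x[0, 10, 13, 0])  # stroke pixel after inversion
print(x[0, 0, 0, 0])    # background pixel after inversion
```

If your own image already has a light digit on a dark background, pass `invert=False` here, or drop the inversion line in `readnum`.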

# Predict and print the result

path = 'D:\\0.jpg'

xTemp=readnum(path)

x_pre = np.pad(xTemp, ((0,0),(2,2),(2,2),(0,0)), 'constant')

y_pre = model.predict(x_pre)

output(y_pre, 8)