Sample Augmentation in HALCON Deep Learning

1. Problem description

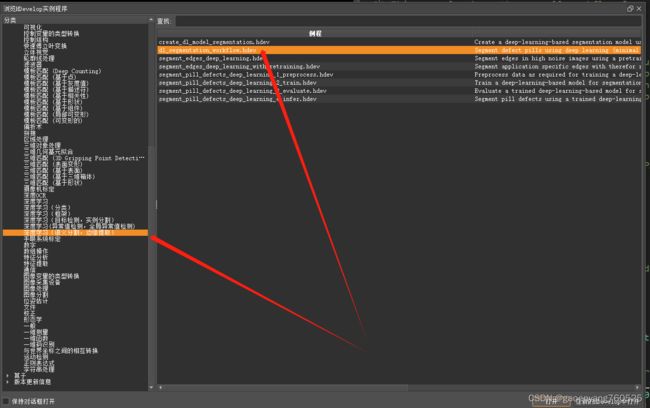

Among the example programs shipped with HALCON, the one shown below is the classic semantic segmentation example.

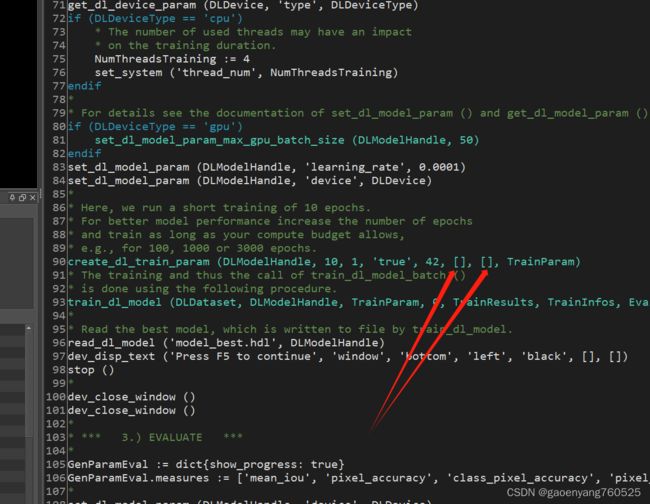

However, it performs no sample augmentation: in the code in the screenshot, the two generic parameters on line 90 are both [], i.e. empty.
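For readers without the screenshot, the unmodified call probably looks like the sketch below. It is reconstructed from the description above and from the "10 epochs" comment that survives in the full listing, so the exact arguments are an assumption rather than a copy of the shipped example.

```hdevelop
* Unmodified call, reconstructed from the author's description (assumption).
* Both generic parameters are empty, so no 'augment' dictionary is passed
* and the training samples are used as they are.
create_dl_train_param (DLModelHandle, 10, 1, 'true', 42, [], [], TrainParam)
```

The two `[]` arguments are GenParamName and GenParamValue, which is where an augmentation dictionary would be passed.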

2. Solution

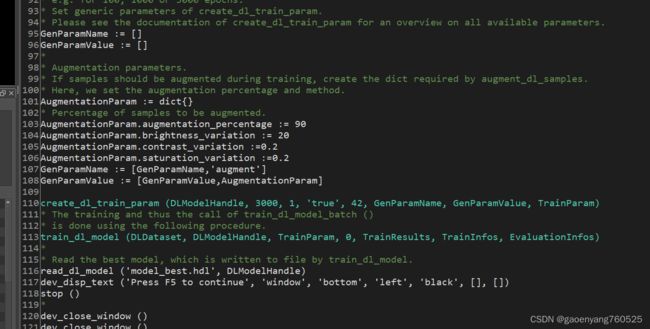

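As the screenshot shows, I added lines 95 to 108, and on line 110 the third-to-last and second-to-last parameters (GenParamName and GenParamValue in the call to create_dl_train_param) are no longer []: I changed them to pass the augmentation settings.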
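The full listing builds the augmentation dictionary with the `dict{}` syntax, which older HDevelop versions may not accept. The sketch below shows what should be an equivalent form using create_dict and set_dict_tuple. The keys and values are copied from the author's code; whether every key is supported depends on the HALCON version, so check them against the documentation of augment_dl_samples.

```hdevelop
* Sketch: build the same augmentation dictionary with create_dict / set_dict_tuple.
* Keys and values are taken from the author's listing; support for each key
* depends on the installed HALCON version (assumption, verify in the help).
create_dict (AugmentationParam)
* Percentage of training samples to be augmented.
set_dict_tuple (AugmentationParam, 'augmentation_percentage', 90)
set_dict_tuple (AugmentationParam, 'brightness_variation', 20)
set_dict_tuple (AugmentationParam, 'contrast_variation', 0.2)
set_dict_tuple (AugmentationParam, 'saturation_variation', 0.2)
* Hand the dictionary to create_dl_train_param via the generic parameter 'augment'.
GenParamName := ['augment']
GenParamValue := [AugmentationParam]
create_dl_train_param (DLModelHandle, 3000, 1, 'true', 42, GenParamName, GenParamValue, TrainParam)
```

Either form should produce the same TrainParam. The augmentation is then applied to the training samples inside train_dl_model, so the preprocessed dataset on disk is left unchanged.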

3. Complete code

*

* Deep learning segmentation workflow:

*

* This example shows the overall workflow for

* deep learning segmentation.

*

* PLEASE NOTE:

* - This is a bare-bones example.

* - For this, default parameters are used as much as possible.

* Hence, the results may differ from those obtained in other examples.

* - For more detailed steps, please refer to the respective examples from the series,

* e.g. segment_pill_defects_deep_learning_1_preprocess.hdev etc.

*

dev_close_window ()

dev_update_off ()

set_system ('seed_rand', 42)

*

* *** 0) SET INPUT/OUTPUT PATHS AND DATASET PARAMETERS ***

*

ImageDir := './somepics'

SegmentationDir := './jiang_labels'

*

OutputDir := 'segment_pill_defects_data'

*

ClassNames := ['0', '1', '2','3']

ClassIDs := [0, 1, 2, 3]

* Set to true, if the results should be deleted after running this program.

RemoveResults := false

*

* *** 1.) PREPARE ***

*

* Read and prepare the DLDataset.

read_dl_dataset_segmentation (ImageDir, SegmentationDir, ClassNames, ClassIDs, [], [], [], DLDataset)

split_dl_dataset (DLDataset, 60, 20, [])

create_dict (PreprocessSettings)

* Here, existing preprocessed data will be overwritten if necessary.

set_dict_tuple (PreprocessSettings, 'overwrite_files', 'auto')

create_dl_preprocess_param ('segmentation', 620, 100, 3, -127, 128, 'none', 'full_domain', [], [], [], [], DLPreprocessParam)

preprocess_dl_dataset (DLDataset, OutputDir, DLPreprocessParam, PreprocessSettings, DLDatasetFileName)

*

* Inspect 10 randomly selected preprocessed DLSamples visually.

create_dict (WindowDict)

get_dict_tuple (DLDataset, 'samples', DatasetSamples)

find_dl_samples (DatasetSamples, 'split', 'train', 'match', TrainSampleIndices)

for Index := 0 to 9 by 1

SampleIndex := TrainSampleIndices[round(rand(1) * (|TrainSampleIndices| - 1))]

read_dl_samples (DLDataset, SampleIndex, DLSample)

dev_display_dl_data (DLSample, [], DLDataset, ['segmentation_image_ground_truth', 'segmentation_weight_map'], [], WindowDict)

dev_disp_text ('Press F5 to continue', 'window', 'bottom', 'right', 'black', [], [])

*stop ()

endfor

dev_close_window_dict (WindowDict)

*

* *** 2.) TRAIN ***

*

* Read a pretrained model and adapt its parameters

* according to the dataset.

*read_dl_model ('pretrained_dl_segmentation_compact.hdl', DLModelHandle)

read_dl_model ('pretrained_dl_segmentation_enhanced.hdl', DLModelHandle)

set_dl_model_param_based_on_preprocessing (DLModelHandle, DLPreprocessParam, ClassIDs)

set_dl_model_param (DLModelHandle, 'class_names', ClassNames)

* Set training related model parameters.

* Training can be performed on a GPU or CPU.

* See the respective system requirements in the Installation Guide.

* If possible a GPU is used in this example.

* In case you explicitly wish to run this example on the CPU,

* choose the CPU device instead.

query_available_dl_devices (['runtime', 'runtime'], ['gpu', 'cpu'], DLDeviceHandles)

if (|DLDeviceHandles| == 0)

throw ('No supported device found to continue this example.')

endif

* Due to the filter used in query_available_dl_devices, the first device is a GPU, if available.

DLDevice := DLDeviceHandles[0]

get_dl_device_param (DLDevice, 'type', DLDeviceType)

if (DLDeviceType == 'cpu')

* The number of used threads may have an impact

* on the training duration.

NumThreadsTraining := 4

set_system ('thread_num', NumThreadsTraining)

endif

*

* For details see the documentation of set_dl_model_param () and get_dl_model_param ().

if (DLDeviceType == 'gpu')

set_dl_model_param_max_gpu_batch_size (DLModelHandle, 30)

endif

set_dl_model_param (DLModelHandle, 'learning_rate', 0.0001)

set_dl_model_param (DLModelHandle, 'device', DLDevice)

*

* Here, the training runs for 3000 epochs (the stock example uses a short training of 10 epochs).

* For better model performance increase the number of epochs

* and train as long as your compute budget allows,

* e.g. for 100, 1000 or 3000 epochs.

* Set generic parameters of create_dl_train_param.

* Please see the documentation of create_dl_train_param for an overview on all available parameters.

GenParamName := []

GenParamValue := []

*

* Augmentation parameters.

* If samples should be augmented during training, create the dict required by augment_dl_samples.

* Here, we set the augmentation percentage and method.

AugmentationParam := dict{}

* Percentage of samples to be augmented.

AugmentationParam.augmentation_percentage := 90

AugmentationParam.brightness_variation := 20

AugmentationParam.contrast_variation := 0.2

AugmentationParam.saturation_variation := 0.2

GenParamName := [GenParamName,'augment']

GenParamValue := [GenParamValue,AugmentationParam]

create_dl_train_param (DLModelHandle, 3000, 1, 'true', 42, GenParamName, GenParamValue, TrainParam)

* The training and thus the call of train_dl_model_batch ()

* is done using the following procedure.

train_dl_model (DLDataset, DLModelHandle, TrainParam, 0, TrainResults, TrainInfos, EvaluationInfos)

*

* Read the best model, which is written to file by train_dl_model.

read_dl_model ('model_best.hdl', DLModelHandle)

dev_disp_text ('Press F5 to continue', 'window', 'bottom', 'left', 'black', [], [])

stop ()

*

dev_close_window ()

dev_close_window ()

*

* *** 3.) EVALUATE ***

*

create_dict (GenParamEval)

set_dict_tuple (GenParamEval, 'show_progress', true)

set_dict_tuple (GenParamEval, 'measures', ['mean_iou', 'pixel_accuracy', 'class_pixel_accuracy', 'pixel_confusion_matrix'])

*

set_dl_model_param (DLModelHandle, 'device', DLDevice)

evaluate_dl_model (DLDataset, DLModelHandle, 'split', 'test', GenParamEval, EvaluationResult, EvalParams)

*

create_dict (GenParamEvalDisplay)

set_dict_tuple (GenParamEvalDisplay, 'display_mode', ['measures', 'absolute_confusion_matrix'])

dev_display_segmentation_evaluation (EvaluationResult, EvalParams, GenParamEvalDisplay, WindowDict)

dev_disp_text ('Press F5 to continue', 'window', 'bottom', 'right', 'black', [], [])

stop ()

dev_close_window_dict (WindowDict)

*

* Optimize the model for inference,

* meaning, reduce its memory consumption.

set_dl_model_param (DLModelHandle, 'optimize_for_inference', 'true')

set_dl_model_param (DLModelHandle, 'batch_size', 1)

* Save the model in this optimized state.

write_dl_model (DLModelHandle, 'model_best.hdl')

*

* *** 4.) INFER ***

*

* To demonstrate the inference steps, we apply the

* trained model to some randomly chosen example images.

list_image_files (ImageDir, 'default', 'recursive', ImageFiles)

tuple_shuffle (ImageFiles, ImageFilesShuffled)

*

* Create dictionaries used in visualization.

create_dict (WindowDict)

get_dl_model_param (DLModelHandle, 'class_ids', ClassIds)

get_dl_model_param (DLModelHandle, 'class_names', ClassNames)

create_dict (DLDatasetInfo)

set_dict_tuple (DLDatasetInfo, 'class_ids', ClassIds)

set_dict_tuple (DLDatasetInfo, 'class_names', ClassNames)

for IndexInference := 0 to min2(9, |ImageFilesShuffled| - 1) by 1

read_image (Image, ImageFilesShuffled[IndexInference])

gen_dl_samples_from_images (Image, DLSample)

preprocess_dl_samples (DLSample, DLPreprocessParam)

apply_dl_model (DLModelHandle, DLSample, [], DLResult)

*

dev_display_dl_data (DLSample, DLResult, DLDatasetInfo, ['segmentation_image_result', 'segmentation_confidence_map'], [], WindowDict)

dev_disp_text ('Press F5 to continue', 'window', 'bottom', 'right', 'black', [], [])

stop ()

endfor

dev_close_window_dict (WindowDict)

*

* *** 5.) REMOVE FILES ***

*

clean_up_output (OutputDir, RemoveResults)

4. Notes

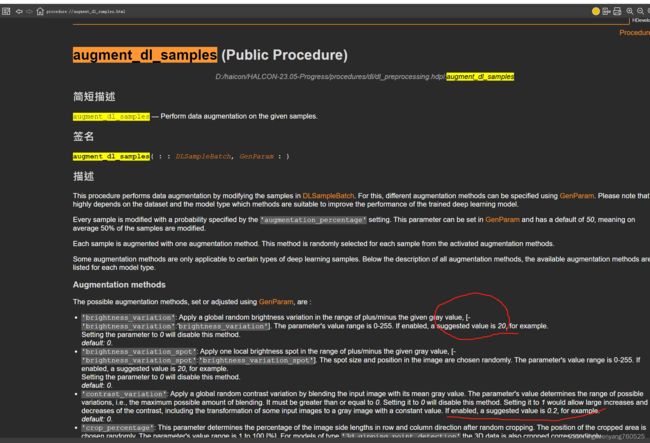

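The code I added is based on the HALCON help documentation, shown in the screenshot. I recommend reading it carefully before using these parameters.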