Linux Kernel Analysis: A Brief Look at How a New Process Is Created

SA16225055 冯金明. Original work; please credit the source when reposting.

Linux Kernel Analysis MOOC course: http://mooc.study.163.com/course/USTC-1000029000

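Lab content:

Lab requirements:

- Read and understand the task_struct data structure: http://codelab.shiyanlou.com/xref/linux-3.18.6/include/linux/sched.h#1235

- Analyze sys_clone, the kernel handler behind fork, to understand how creating a new process creates and modifies the task_struct data structure

- Use gdb to trace sys_clone, the kernel handler for the fork system call, and verify your understanding of how Linux creates a new process

- Pay special attention to where the new process starts executing and why it can carry on smoothly from there, i.e. how the execution entry point and the kernel stack are kept consistent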

关键实验截图:

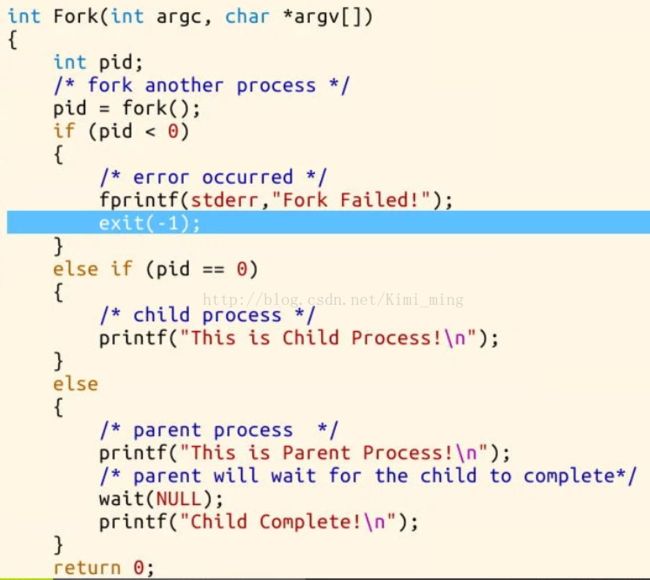

图一 Fork()

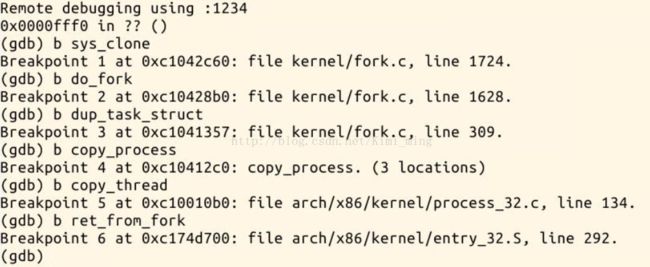

图二 设置断点

图三 dup_task_struct调试中的代码

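A brief analysis of how the Linux kernel creates a new process

The process control block (PCB) is a dedicated data structure that the system sets up to manage a process. The system uses it to record the process's external characteristics and to describe how the process changes over its lifetime. The system also controls and manages the process through the PCB, which is why the PCB is said to be the only token by which the system knows that a process exists. A few points deserve attention:

- The ready state and the running state are both TASK_RUNNING

- The process identifier, PID

- The process list, implemented as a circular doubly linked list

- The fields in the process descriptor that describe parent-child relationships between processes

- Each process has an 8 KB memory region that holds two things: thread_info and the process's kernel stack (kernel control paths use very little stack)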
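The last point, thread_info and the kernel stack sharing one 8 KB region, can be modeled in user space. The sketch below is only an illustration under stated assumptions: `thread_info_model`, `thread_union_model`, `alloc_thread_union`, `info_from_sp` and `thread_union_selfcheck` are hypothetical names, and the two-field `thread_info_model` is a drastic simplification of the real structure. It shows why a size-aligned region lets code recover thread_info from any stack address by masking the low bits.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define THREAD_SIZE 8192u   /* 8 KB region, as stated in the text */

/* Hypothetical, heavily reduced stand-in for the kernel's thread_info. */
struct thread_info_model {
    void *task;   /* back-pointer to the owning task descriptor */
    int   cpu;
};

/* thread_info sits at the lowest address; the stack grows down toward it. */
union thread_union_model {
    struct thread_info_model thread_info;
    unsigned long stack[THREAD_SIZE / sizeof(unsigned long)];
};

/* Allocate a region aligned to THREAD_SIZE; *raw must be passed to free(). */
static union thread_union_model *alloc_thread_union(void **raw)
{
    *raw = malloc(2 * THREAD_SIZE);
    if (!*raw)
        return NULL;
    uintptr_t base = ((uintptr_t)*raw + THREAD_SIZE - 1)
                     & ~(uintptr_t)(THREAD_SIZE - 1);
    return (union thread_union_model *)base;
}

/* Mask the low bits of any address inside the region to find its base. */
static struct thread_info_model *info_from_sp(uintptr_t sp)
{
    return (struct thread_info_model *)(sp & ~(uintptr_t)(THREAD_SIZE - 1));
}

/* Returns 1 if an address near the top of the stack maps back to thread_info. */
static int thread_union_selfcheck(void)
{
    void *raw;
    union thread_union_model *u = alloc_thread_union(&raw);
    if (!u)
        return -1;
    assert(sizeof(*u) == THREAD_SIZE);
    /* Pretend the stack pointer is somewhere near the top of the stack. */
    uintptr_t sp = (uintptr_t)&u->stack[THREAD_SIZE / sizeof(unsigned long) - 8];
    int ok = (info_from_sp(sp) == &u->thread_info);
    free(raw);
    return ok;
}
```

Calling `thread_union_selfcheck()` from a `main` function should return 1. The masking trick only works because the region is aligned to its own size, which is why the model over-allocates and rounds the base address up.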

The three major functions of an operating system:

- Process management

- Memory management

- File systems

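The process descriptor: the task_struct data structure (there are far too many fields, so only some are listed here; the references at the end of this post give a more detailed description). Note that the field names listed here appear to follow the task_struct of an early kernel version, so they will not all match the 3.18.6 source linked above.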

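- Scheduling data members

  - volatile long state; // the current state of the process

  - unsigned long flags; // process flags

  - long priority; // process priority

  - unsigned long rt_priority; // priority of a real-time process

  - long counter; // under round-robin scheduling, how much longer the process may still run

  - unsigned long policy; // the scheduling policy of the process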

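- Signal handling

  - unsigned long signal; // signals the process has received

  - unsigned long blocked; // bitmask of the signals the process currently blocks

  - struct signal_struct *sig; // because signal and blocked are both 32-bit variables, Linux can accept at most 32 kinds of signals. For each signal, a process can use the sig member of its PCB to choose either a custom handler or the system default handler.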

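- Process queue pointers

  - struct task_struct *next_task, *prev_task; // all processes (in the form of PCBs) form a doubly linked list

  - struct task_struct *next_run, *prev_run; // the circular doubly linked list of processes that are running or runnable, all in state TASK_RUNNING, i.e. the run_queue ready queue. Its forward and backward pointers are next_run and prev_run, and both the head and the tail of the list are init_task (process 0).

  - struct task_struct *p_opptr, *p_pptr; and struct task_struct *p_cptr, *p_ysptr, *p_osptr; // pointers to the original parent, the parent, the youngest child, and the younger and older siblings, respectively.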

- Process identity

  - unsigned short uid, gid; // the user ID and group ID under which the process runs

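- Time data members

  - unsigned long timeout; // used for software timing: how long until the process is woken up again, in ticks.

  - unsigned long it_real_value, it_real_incr; // used for the itimer (interval timer) software timer, in jiffies. it_real_value is decremented on each tick; when it reaches 0, SIGALRM is sent to the process and the initial value is reloaded. The initial value is stored in it_real_incr. See it_real_fn() in kernel/itimer.c.

  - unsigned long it_virt_value, it_virt_incr; // the itimer software timer for the process's user-mode execution time, in jiffies. While the process runs in user mode, each tick decrements it_virt_value by 1; when it reaches 0, SIGVTALRM is sent to the process and the initial value is reloaded. The initial value is stored in it_virt_incr. See do_it_virt() in kernel/sched.c.

  - long utime, stime, cutime, cstime, start_time; // respectively: the time the process has run in user mode, the time it has run in kernel mode, the total user-mode time of all its descendants, the total kernel-mode time of all its descendants, and the time the process was created.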

- Semaphore data members

  - struct sem_undo *semundo; // each time the process operates on a semaphore, an undo operation for it is generated, described by a sem_undo structure.

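- Process context

  - struct desc_struct *ldt; // pointer to the process's local descriptor table, used by the CPU's segmented memory management

  - unsigned long kernel_stack_page; // every process has a kernel stack for running in kernel mode; its base address is stored in kernel_stack_page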

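- File system data members

  - struct fs_struct *fs; // fs holds the relationship between the process and the VFS: root points to the root directory node, pwd points to the current directory node, umask gives the access mode for newly created files (it can be changed with the umask system call), and count is an attribute reserved by Linux. The structure is defined in include/linux/sched.h.

  - struct files_struct *files; // files holds the files the process currently has open (struct file *fd[NR_OPEN])

  - int link_count; // number of file links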

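- Memory data members

  - struct mm_struct *mm; // Linux meets a process's memory needs through demand paging. The mm member of task_struct points to the mm_struct structure used for memory management. It contains a virtual memory list, mmap, that points to the virtual memory areas described by vm_area_struct structures. To speed up lookups, mmap_avl in mm maintains an AVL tree in which each vm_area_struct's left pointer leads to the adjacent lower area and its right pointer to the adjacent higher area.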

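- Page management

  - int swappable:1; // whether the memory pages used by the process may be swapped out; 1 means they may. The flag is both cleared and set in do_fork() (see kernel/fork.c).

  - unsigned long min_flt, maj_flt; // cumulative counts of minor and major page faults for the process. maj_flt is much like min_flt but counts over a wider range (see fs/buffer.c and mm/page_alloc.c). min_flt is only incremented in do_no_page() and do_wp_page() (see mm/memory.c), for newly added writable pages.

  - unsigned long nswap; // cumulative number of pages the process has had swapped out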

- Data members used when symmetric multiprocessing (SMP) is supported

  - int processor; // the CPU the process is currently using

  - int last_processor; // the CPU the process last used

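- Other data members

  - char comm[16]; // file name of the executable the process is running

  - int errno; // error number of the last failed system call; 0 means no error. When the system call returns, the global variable holds the same error number

  - struct linux_binfmt *binfmt; // points to the global executable-format structure the process belongs to; there are four formats: a.out, script, elf and Java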

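- Global variables for the process queue

  - current; // pointer to the currently running process; on SMP it points to the current process of the CPU being scheduled

  - struct task_struct init_task; // the PCB of process 0, the "root" of all processes, which always keeps its initial value INIT_TASK

  - struct task_struct *task[NR_TASKS]; // the process array, which sets the maximum number of processes the system can run at once

  - int need_resched; // reschedule flag

  - unsigned long intr_count; // nesting depth of interrupt service routines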
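One item from the list above, the circular doubly linked process list whose head and tail are both init_task, is easy to sketch in plain C. This is a minimal user-space model, not kernel code: `task_model`, `init_task_model`, `link_task`, `unlink_task`, `count_tasks` and `task_list_selfcheck` are hypothetical names, and only the `next_task`/`prev_task` links mentioned in the text are modeled.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, reduced stand-in for task_struct: only the list links. */
struct task_model {
    int pid;
    struct task_model *next_task, *prev_task;
};

/* The head of the ring points at itself while the list is empty. */
static struct task_model init_task_model = {
    0, &init_task_model, &init_task_model
};

/* Insert p just before the head, i.e. at the tail of the ring. */
static void link_task(struct task_model *p)
{
    p->next_task = &init_task_model;
    p->prev_task = init_task_model.prev_task;
    init_task_model.prev_task->next_task = p;
    init_task_model.prev_task = p;
}

/* Remove p from the ring by joining its two neighbours. */
static void unlink_task(struct task_model *p)
{
    p->prev_task->next_task = p->next_task;
    p->next_task->prev_task = p->prev_task;
    p->next_task = p->prev_task = NULL;
}

/* Walk once around the ring and count every task except the head. */
static int count_tasks(void)
{
    int n = 0;
    for (struct task_model *t = init_task_model.next_task;
         t != &init_task_model; t = t->next_task)
        n++;
    return n;
}

/* Small self-check: link two tasks, inspect the ring, unlink them again. */
static void task_list_selfcheck(void)
{
    struct task_model a = { 1, NULL, NULL }, b = { 2, NULL, NULL };

    link_task(&a);
    link_task(&b);
    assert(count_tasks() == 2);
    assert(init_task_model.next_task == &a);
    assert(init_task_model.prev_task == &b);
    unlink_task(&a);
    unlink_task(&b);
    assert(count_tasks() == 0);
}
```

Because the head is part of the ring, insertion and removal need no special case for an empty list, which is the main attraction of this layout.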

fork, vfork and clone are three system calls available to user space. All three create a new process, and all three do so by calling do_fork.
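What distinguishes the three calls by the time they reach do_fork is the clone_flags argument. The sketch below is a user-space model under assumptions: the enum names and the functions `flags_for`, `classify` and `clone_flags_selfcheck` are hypothetical, the flag sets for fork and vfork reflect my reading of kernel/fork.c in this kernel generation and are worth checking against the source, and the classification mirrors the ptrace event selection at the top of do_fork.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <sched.h>    /* CLONE_VM, CLONE_VFORK on glibc with _GNU_SOURCE */
#include <signal.h>   /* SIGCHLD */

/* Fallbacks in case the C library headers did not expose these values. */
#ifndef CLONE_VM
#define CLONE_VM    0x00000100
#endif
#ifndef CLONE_VFORK
#define CLONE_VFORK 0x00004000
#endif
#ifndef CSIGNAL
#define CSIGNAL     0x000000ff  /* low byte of clone_flags: the exit signal */
#endif

enum fork_kind { KIND_FORK, KIND_VFORK };

/* Assumed flag sets that the fork and vfork entry points pass to do_fork. */
static unsigned long flags_for(enum fork_kind kind)
{
    switch (kind) {
    case KIND_VFORK:
        return CLONE_VFORK | CLONE_VM | SIGCHLD;
    case KIND_FORK:
    default:
        return SIGCHLD;
    }
}

enum trace_event_model { EV_FORK, EV_VFORK, EV_CLONE };

/* Same decision order as the ptrace event selection in do_fork. */
static enum trace_event_model classify(unsigned long clone_flags)
{
    if (clone_flags & CLONE_VFORK)
        return EV_VFORK;
    if ((clone_flags & CSIGNAL) != SIGCHLD)
        return EV_CLONE;
    return EV_FORK;
}

/* Self-check: each assumed flag set is classified as the matching event. */
static void clone_flags_selfcheck(void)
{
    assert(classify(flags_for(KIND_FORK)) == EV_FORK);
    assert(classify(flags_for(KIND_VFORK)) == EV_VFORK);
    /* A clone() call with no exit signal in the low byte counts as "clone". */
    assert(classify(CLONE_VM) == EV_CLONE);
}
```

clone has no fixed flag set because its caller chooses the flags, which is why it is not a case in `flags_for`.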

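Where Linux processes come from:

- The Tao begets one: start_kernel ... cpu_idle

- One begets two: kernel_init and kthreadd

- Two begets three: processes 0, 1 and 2, i.e. the idle process (PID = 0), the init process (PID = 1) and kthreadd (PID = 2)

- Three begets all things: process 1 is the ancestor of all user-space processes, process 0 is the ancestor of all kernel threads, and process 2 always runs in kernel space and is responsible for scheduling and managing all kernel threads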

A brief look at do_fork()

    long do_fork(unsigned long clone_flags,
                 unsigned long stack_start,
                 unsigned long stack_size,
                 int __user *parent_tidptr,
                 int __user *child_tidptr)
    {
        struct task_struct *p;  // pointer to the process descriptor
        int trace = 0;
        long nr;                // pid of the new child, as seen from the caller's pid namespace

        /*
         * Determine whether and which event to report to ptracer. When
         * called from kernel_thread or CLONE_UNTRACED is explicitly
         * requested, no event is reported; otherwise, report if the event
         * for the type of forking is enabled.
         */
        if (!(clone_flags & CLONE_UNTRACED)) {
            if (clone_flags & CLONE_VFORK)
                trace = PTRACE_EVENT_VFORK;
            else if ((clone_flags & CSIGNAL) != SIGCHLD)
                trace = PTRACE_EVENT_CLONE;
            else
                trace = PTRACE_EVENT_FORK;

            if (likely(!ptrace_event_enabled(current, trace)))
                trace = 0;
        }

        // copy the process descriptor; returns a pointer to the new task_struct
        p = copy_process(clone_flags, stack_start, stack_size,
                         child_tidptr, NULL, trace);
        /*
         * Do this prior waking up the new thread - the thread pointer
         * might get invalid after that point, if the thread exits quickly.
         */
        if (!IS_ERR(p)) {
            struct completion vfork;
            struct pid *pid;

            trace_sched_process_fork(current, p);

            // get the struct pid of the new task
            pid = get_task_pid(p, PIDTYPE_PID);
            nr = pid_vnr(pid);

            if (clone_flags & CLONE_PARENT_SETTID)
                put_user(nr, parent_tidptr);

            // vfork needs a completion so that the parent runs only after the child
            if (clone_flags & CLONE_VFORK) {
                p->vfork_done = &vfork;
                init_completion(&vfork);
                get_task_struct(p);
            }

            // put the child on the scheduler's run queue so that it gets a chance to run on a CPU
            wake_up_new_task(p);

            /* forking complete and child started to run, tell ptracer */
            if (unlikely(trace))
                ptrace_event_pid(trace, pid);

            // with CLONE_VFORK, put the parent on a wait queue and suspend it until the child
            // releases its address space; this guarantees that the child runs before the parent
            if (clone_flags & CLONE_VFORK) {
                if (!wait_for_vfork_done(p, &vfork))
                    ptrace_event_pid(PTRACE_EVENT_VFORK_DONE, pid);
            }

            put_pid(pid);
        } else {
            nr = PTR_ERR(p);
        }
        return nr;
    }

- copy_process copies the process descriptor and returns a pointer to the new child's task_struct (i.e. the PCB pointer)

- The new child is placed on the scheduler's run queue so that it gets a chance to run on a CPU; with vfork, the child is additionally guaranteed to run before the parent

- Why run the child first: Linux uses copy-on-write to reduce the cost of creating a process. If the child runs first and calls exec right away, the parent has had no chance to write to the shared pages in the meantime, so no page is copied needlessly. In the do_fork listing above, only the CLONE_VFORK path strictly enforces this order (the parent blocks in wait_for_vfork_done); for an ordinary fork the order is left to the scheduler
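The effect of copy-on-write can be observed from user space: after fork, a write in the child lands on a private copy of the page, so the parent never sees it. The following is a small sketch using only standard POSIX calls (`fork`, `waitpid`, `_exit`); the variable `shared_looking_value` and the function `child_writes_parent_reads` are hypothetical names chosen for this illustration. It demonstrates the isolation that copy-on-write provides, not the kernel mechanism itself.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Lives in a data page that parent and child initially share after fork(). */
static int shared_looking_value = 1;

/* Returns the value the child stored (via its exit status), or -1 on failure. */
static int child_writes_parent_reads(void)
{
    pid_t pid = fork();

    if (pid < 0)
        return -1;

    if (pid == 0) {
        /* Child: this write makes the kernel give the child its own page. */
        shared_looking_value = 42;
        _exit(shared_looking_value);
    }

    int status;
    if (waitpid(pid, &status, 0) != pid || !WIFEXITED(status))
        return -1;

    /* Parent: its copy of the variable is untouched by the child's write. */
    assert(shared_looking_value == 1);
    return WEXITSTATUS(status);
}
```

Calling `child_writes_parent_reads()` should return 42 while the parent's `shared_looking_value` stays 1. Until the child writes, both processes can share the same physical page, which is what keeps fork cheap.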

    /*
     * Create the process descriptor and all other data structures the child needs.
     * Prepare the execution environment for the child.
     */
    static struct task_struct *copy_process(unsigned long clone_flags,
                                            unsigned long stack_start,
                                            unsigned long stack_size,
                                            int __user *child_tidptr,
                                            struct pid *pid,
                                            int trace)
    {
        ...
        int retval;
        struct task_struct *p;
        ...
        // allocate a new task_struct; at this point p differs from the current task only in its stack address
        p = dup_task_struct(current);
        if (!p)
            goto fork_out;
        ...
        retval = -EAGAIN;
        // check whether this user's process count exceeds the limit
        if (atomic_read(&p->real_cred->user->processes) >=
                task_rlimit(p, RLIMIT_NPROC)) {
            // check whether the user has the required privileges; it need not be root
            if (p->real_cred->user != INIT_USER &&
                !capable(CAP_SYS_RESOURCE) && !capable(CAP_SYS_ADMIN))
                goto bad_fork_free;
        }
        current->flags &= ~PF_NPROC_EXCEEDED;

        retval = copy_creds(p, clone_flags);
        if (retval < 0)
            goto bad_fork_free;

        /*
         * If multiple threads are within copy_process(), then this check
         * triggers too late. This doesn't hurt, the check is only there
         * to stop root fork bombs.
         */
        retval = -EAGAIN;
        // check whether the number of threads exceeds max_threads, which depends on the amount of memory
        if (nr_threads >= max_threads)
            goto bad_fork_cleanup_count;

        if (!try_module_get(task_thread_info(p)->exec_domain->module))
            goto bad_fork_cleanup_count;

        delayacct_tsk_init(p);  /* Must remain after dup_task_struct() */
        p->flags &= ~(PF_SUPERPRIV | PF_WQ_WORKER);
        // mark that the child has not yet called exec
        p->flags |= PF_FORKNOEXEC;
        INIT_LIST_HEAD(&p->children);
        INIT_LIST_HEAD(&p->sibling);
        rcu_copy_process(p);
        p->vfork_done = NULL;
        // initialize the spinlock
        spin_lock_init(&p->alloc_lock);

        // initialize pending signals
        init_sigpending(&p->pending);

        // reset the CPU time accounting fields
        p->utime = p->stime = p->gtime = 0;
        p->utimescaled = p->stimescaled = 0;
    #ifndef CONFIG_VIRT_CPU_ACCOUNTING_NATIVE
        p->prev_cputime.utime = p->prev_cputime.stime = 0;
    #endif
    #ifdef CONFIG_VIRT_CPU_ACCOUNTING_GEN
        seqlock_init(&p->vtime_seqlock);
        p->vtime_snap = 0;
        p->vtime_snap_whence = VTIME_SLEEPING;
    #endif
        ...
    #ifdef CONFIG_DEBUG_MUTEXES
        p->blocked_on = NULL; /* not blocked yet */
    #endif
    #ifdef CONFIG_BCACHE
        p->sequential_io = 0;
        p->sequential_io_avg = 0;
    #endif

        /* Perform scheduler related setup. Assign this task to a CPU. */
        // finish initializing the scheduler data structures of the new process and set its state to TASK_RUNNING
        // also set preempt_count in thread_info to 1, which disables kernel preemption
        retval = sched_fork(clone_flags, p);
        if (retval)
            goto bad_fork_cleanup_policy;

        retval = perf_event_init_task(p);
        if (retval)
            goto bad_fork_cleanup_policy;
        retval = audit_alloc(p);
        if (retval)
            goto bad_fork_cleanup_perf;
        /* copy all the process information */
        // each copy_* call either copies or shares one kind of resource, depending on clone_flags
        shm_init_task(p);
        retval = copy_semundo(clone_flags, p);
        if (retval)
            goto bad_fork_cleanup_audit;
        retval = copy_files(clone_flags, p);
        if (retval)
            goto bad_fork_cleanup_semundo;
        ...
        // set up the child's kernel stack
        retval = copy_thread(clone_flags, stack_start, stack_size, p);
        if (retval)
            goto bad_fork_cleanup_io;

        if (pid != &init_struct_pid) {
            retval = -ENOMEM;
            // allocate a new pid for the child
            pid = alloc_pid(p->nsproxy->pid_ns_for_children);
            if (!pid)
                goto bad_fork_cleanup_io;
        }
        ...
        // clear TIF_SYSCALL_TRACE in the child's thread_info so that ret_from_fork
        // does not report the system call to the debugger
        clear_tsk_thread_flag(p, TIF_SYSCALL_TRACE);
    #ifdef TIF_SYSCALL_EMU
        clear_tsk_thread_flag(p, TIF_SYSCALL_EMU);
    #endif
        clear_all_latency_tracing(p);

        /* ok, now we should be set up.. */
        // set the child's pid
        p->pid = pid_nr(pid);
        // if a thread is being created
        if (clone_flags & CLONE_THREAD) {
            p->exit_signal = -1;
            // the thread group leader is the leader of the current thread
            p->group_leader = current->group_leader;
            // tgid is the id of the current thread group, i.e. the pid of the main thread
            p->tgid = current->tgid;
        } else {
            if (clone_flags & CLONE_PARENT)
                p->exit_signal = current->group_leader->exit_signal;
            else
                p->exit_signal = (clone_flags & CSIGNAL);
            // a process is being created; it forms a thread group of its own
            p->group_leader = p;
            // tgid equals pid
            p->tgid = p->pid;
        }
        ...
        if (likely(p->pid)) {
            ptrace_init_task(p, (clone_flags & CLONE_PTRACE) || trace);

            init_task_pid(p, PIDTYPE_PID, pid);
            if (thread_group_leader(p)) {
                ...
                // add the pid to the hash tables
                attach_pid(p, PIDTYPE_PGID);
                attach_pid(p, PIDTYPE_SID);
                __this_cpu_inc(process_counts);
            } else {
                ...
            }
            // add the pid to the PIDTYPE_PID hash table
            attach_pid(p, PIDTYPE_PID);
            // increment nr_threads
            nr_threads++;
        }

        total_forks++;
        spin_unlock(&current->sighand->siglock);
        syscall_tracepoint_update(p);
        write_unlock_irq(&tasklist_lock);
        ...
        // return the pointer to the newly created task struct
        return p;
        ...
    }

- Call dup_task_struct to duplicate the current task_struct

- Check whether the process count exceeds the limits

- Initialize the spinlock, pending signals, CPU time accounting and so on

- Call sched_fork to initialize the scheduler data structures and set the process state to TASK_RUNNING

- Copy all the process information, including the file system, signal handlers, signals, memory management and so on

- Call copy_thread to set up the child's kernel stack

- Allocate and set a new pid for the new process

A brief look at dup_task_struct()

    static struct task_struct *dup_task_struct(struct task_struct *orig)
    {
        struct task_struct *tsk;
        struct thread_info *ti;
        int node = tsk_fork_get_node(orig);
        int err;

        // allocate a task_struct node
        tsk = alloc_task_struct_node(node);
        if (!tsk)
            return NULL;

        // allocate a thread_info node, which also holds the process's kernel stack; ti is the bottom of the stack
        ti = alloc_thread_info_node(tsk, node);
        if (!ti)
            goto free_tsk;

        // store the stack bottom in the new task's stack pointer
        tsk->stack = ti;
        // ...
        return tsk;
    }

- alloc_task_struct_node allocates a task_struct node

- alloc_thread_info_node allocates a thread_info node; what is actually allocated is a thread_union, whose bottom address is returned as ti

- When dup_task_struct has finished, the child's descriptor is identical to the parent's in everything except the tsk->stack pointer
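The outcome described above, a child descriptor that matches the parent's field for field except for its stack pointer, can be reproduced with a small user-space model. This is only a sketch: `task_model`, `dup_task_model`, `free_task_model` and `dup_selfcheck` are hypothetical names, the struct keeps just four fields, and the part of the real function that is elided in the listing is not modeled.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define THREAD_SIZE 8192   /* size of the per-task stack region */

/* Hypothetical, reduced stand-in for task_struct. */
struct task_model {
    int   pid;
    long  state;
    char  comm[16];
    void *stack;   /* bottom of this task's own stack region */
};

/* Allocate a new descriptor and a new stack, then copy the parent's fields. */
static struct task_model *dup_task_model(const struct task_model *orig)
{
    struct task_model *tsk = malloc(sizeof(*tsk));
    if (!tsk)
        return NULL;

    void *stack = calloc(1, THREAD_SIZE);
    if (!stack) {
        free(tsk);
        return NULL;
    }

    *tsk = *orig;        /* field-for-field copy of the parent */
    tsk->stack = stack;  /* the one field that must differ */
    return tsk;
}

static void free_task_model(struct task_model *tsk)
{
    if (tsk) {
        free(tsk->stack);
        free(tsk);
    }
}

/* Returns 1 if the copy matches the parent except for the stack pointer. */
static int dup_selfcheck(void)
{
    struct task_model parent = { 100, 0, "parent", NULL };

    parent.stack = calloc(1, THREAD_SIZE);
    if (!parent.stack)
        return -1;

    struct task_model *child = dup_task_model(&parent);
    if (!child) {
        free(parent.stack);
        return -1;
    }
    assert(child->stack != NULL);

    int same_fields = child->pid == parent.pid &&
                      child->state == parent.state &&
                      strcmp(child->comm, parent.comm) == 0;
    int own_stack = child->stack != parent.stack;

    free_task_model(child);
    free(parent.stack);
    return same_fields && own_stack;
}
```

At this stage even the pid is still the parent's, which is why copy_process has to assign a new one later.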

A brief look at copy_thread()

    int copy_thread(unsigned long clone_flags, unsigned long sp,
                    unsigned long arg, struct task_struct *p)
    {
        // locate the saved register area at the top of the child's kernel stack
        struct pt_regs *childregs = task_pt_regs(p);
        struct task_struct *tsk;
        int err;

        p->thread.sp = (unsigned long) childregs;
        p->thread.sp0 = (unsigned long) (childregs+1);
        memset(p->thread.ptrace_bps, 0, sizeof(p->thread.ptrace_bps));

        if (unlikely(p->flags & PF_KTHREAD)) {
            // kernel thread
            memset(childregs, 0, sizeof(struct pt_regs));
            p->thread.ip = (unsigned long) ret_from_kernel_thread;
            task_user_gs(p) = __KERNEL_STACK_CANARY;
            childregs->ds = __USER_DS;
            childregs->es = __USER_DS;
            childregs->fs = __KERNEL_PERCPU;
            childregs->bx = sp; /* function */
            childregs->bp = arg;
            childregs->orig_ax = -1;
            childregs->cs = __KERNEL_CS | get_kernel_rpl();
            childregs->flags = X86_EFLAGS_IF | X86_EFLAGS_FIXED;
            p->thread.io_bitmap_ptr = NULL;
            return 0;
        }

        // copy the current (parent's) saved registers to the child
        *childregs = *current_pt_regs();
        // set the child's eax to 0, which is why fork returns 0 in the child
        childregs->ax = 0;
        if (sp)
            childregs->sp = sp;

        // set the child's ip to ret_from_fork, so the child starts executing at ret_from_fork
        p->thread.ip = (unsigned long) ret_from_fork;
        // ...
        return err;
    }

- Why fork returns 0 in the child: the statement childregs->ax = 0; sets the child's eax to 0

- p->thread.ip = (unsigned long) ret_from_fork; sets the child's ip to the address of ret_from_fork, so the child starts executing at ret_from_fork

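This week's study and lab tied in nicely with what was covered in the previous weeks, and things suddenly fell into place; I now really understand the Linux reading of "the Tao begets one, one begets two, two begets three, three begets all things". To sum up, the rough flow of creating and starting a new process is:

- fork, vfork and clone call do_fork to create a new process

- do_fork calls copy_process to make a copy of the process information for the child

- copy_process calls dup_task_struct to duplicate the current task_struct

- sched_fork initializes the scheduler data structures and sets the process state to TASK_RUNNING

- copy_thread copies the parent's register context into the register frame at the top of the child's kernel stack, so the child's kernel stack is consistent with the parent's

- The child's ip is set to the address of ret_from_fork, so the child starts executing at ret_from_fork; because the frame on its kernel stack is a copy of the parent's saved registers, ret_from_fork can return to user mode just as the parent's system call does

This may have come out a bit disorganized, and I probably need more time to digest and refine it. The post is fairly long and draws heavily on excellent earlier write-ups by others, to whom I am deeply grateful. I would also welcome corrections from readers wherever my understanding is wrong.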
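Seen from user space, the flow summarized above has one visible consequence: the child resumes at the same point as the parent, with the same local variables, and differs only in the value fork returns. The following is a minimal sketch using standard POSIX calls; the function name `child_resumes_with_parent_context` and the `marker` variable are hypothetical names chosen for this illustration.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/*
 * Returns the child's exit status (0 means the child found its locals
 * intact after fork() returned 0), or -1 if fork or waitpid failed.
 */
static int child_resumes_with_parent_context(void)
{
    int marker = 1234;   /* lives on the user stack before fork() */
    pid_t pid = fork();

    if (pid < 0)
        return -1;

    if (pid == 0) {
        /* Child: fork() returned 0 and the copied stack still holds marker. */
        _exit(marker == 1234 ? 0 : 1);
    }

    /* Parent: fork() returned the child's pid. */
    assert(pid > 0);

    int status;
    if (waitpid(pid, &status, 0) != pid || !WIFEXITED(status))
        return -1;
    return WEXITSTATUS(status);
}
```

Calling `child_resumes_with_parent_context()` should return 0. Both processes execute the line after `fork()`, and only the return value tells them apart, which matches the `childregs->ax = 0` assignment in copy_thread.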

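References:

task_struct field reference: the PCB in Linux

Analyzing how the Linux kernel creates a new process

The full process of creating a new process in the Linux kernel

Analyzing how the Linux kernel creates a new process

kthreadd, process 2 on Linux: Linux process management and scheduling (7)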