AdaBoost 详解

AdaBoost

Boosting

Boosting 是指,仅通过训练精度比随机猜想(50%)稍高的学习器,通过集成的方式过建出强学习器。

其中boosting中最有名的是AdaBoost算法。AdaBoost是英文"Adaptive Boosting"(自适应增强)的缩写,它的自适应在于:前一个基本分类器被错误分类的样本的权值会增大,而正确分类的样本的权值会减小,并再次用来训练下一个基本分类器。同时,在每一轮迭代中,加入一个新的弱分类器,直到达到某个预定的足够小的错误率或达到预先指定的最大迭代次数才确定最终的强分类器。

AdaBoost 推导

设存在数据集 T = { ( x 1 , y 1 ) , ( x 2 , y 2 ) , ⋯ , ( x N , y N ) } T=\left\{\left(x_1,y_1\right),\left(x_2,y_2\right),\cdots,\left(x_N,y_N\right)\right\} T={(x1,y1),(x2,y2),⋯,(xN,yN)} ,其中 x i ∈ X ⊆ R n , y i ∈ Y = { + 1 , − 1 } , i = 1 , 2 , ⋯ , N ; x_i\in\mathcal{X}\subseteq R^n,y_i\in\mathcal{Y}=\left\{+1,-1\right\},i=1,2,\cdots,N\text{;} xi∈X⊆Rn,yi∈Y={+1,−1},i=1,2,⋯,N;

考虑二分类问题

因为AdaBoost算法最终得到的学习器是由多个弱学习器集成而来的,由此设

F ( x ) = ∑ k = 1 K α k ϕ ( x ; θ k ) ϕ ( x ; θ k ) ∈ { − 1 , 1 } F(\boldsymbol{x})=\sum_{k=1}^{K}\alpha_{k}\phi(\boldsymbol{x};\boldsymbol{\theta}_{k})\\ \phi(\boldsymbol{x};\boldsymbol{\theta}_{k})\in\{-1,1\} F(x)=k=1∑Kαkϕ(x;θk)ϕ(x;θk)∈{−1,1}

其中 θ k \theta_k θk为参数向量,代表第k个学习器的参数, α k \alpha_k αk为第k个学习器的权重,表示在最终学习器中第k个学习器在其中的权重。

其中最终决策为

f ( x ) = s i g n { F ( x ) } f(\boldsymbol{x})=sign\{F(x)\} f(x)=sign{F(x)}

当正确分类时,存在 y i F ( x i ) > 0 , − y i F ( x i ) < 0 y_iF(x_i)>0,-y_iF(x_i)<0 yiF(xi)>0,−yiF(xi)<0

当错误分类时,存在 y i F ( x i ) < 0 , − y i F ( x i ) > 0 y_iF(x_i)<0,-y_iF(x_i)>0 yiF(xi)<0,−yiF(xi)>0

其中AdaBoost分类的目标就是尽可能的正确分类,即最大限度时,错分的样本数目最少,由此构建目标函数

arg min α k , θ k , k : 1... , K ∑ i = 1 N exp ( − y i F ( x i ) ) \arg\min_{\alpha_k,\theta_k,k:1...,K}\sum_{i=1}^N\exp(-y_iF(x_i)) argαk,θk,k:1...,Kmini=1∑Nexp(−yiF(xi))

其中在AdaBoost推导过程中,设 F m ( x ) F_m(x) Fm(x)为前m个分类器的集成结果,即

F m ( x ) = ∑ k = 1 m α k ϕ ( x ; θ k ) , m = 1 , 2 , . . . , K F_{m}(x)=\sum_{k=1}^{m}\alpha_{k}\phi(x;\theta_{k}),m=1,2,...,K Fm(x)=k=1∑mαkϕ(x;θk),m=1,2,...,K

若写为增量表示,则为

F m ( x ) = F m − 1 ( x ) + α m ϕ ( x ; θ m ) F_{m}(x)=F_{m-1}(x)+\alpha_{m}\phi(x;\theta_{m}) Fm(x)=Fm−1(x)+αmϕ(x;θm)

即前m个分类器集成的结果为前m-1个分类器集成的结果加上第m个分类器的结果 × \times × 权重

由此前m个分类器的目标函数为

J ( α , θ ) = ∑ i = 1 N exp ( − y i ( F m − 1 ( x ) + α ϕ ( x ; θ ) ) ) ( α m , θ m ) = arg min α , θ J ( α , θ ) \begin{aligned}&J(\alpha,\theta)=\sum_{i=1}^N\exp(-y_i(F_{m-1}(x)+\alpha\phi(x;\theta)))\\\\&(\alpha_m,\theta_m)=\arg\min_{\alpha,\theta}J(\alpha,\theta)\end{aligned} J(α,θ)=i=1∑Nexp(−yi(Fm−1(x)+αϕ(x;θ)))(αm,θm)=argα,θminJ(α,θ)

令 w i ( m ) w_i^{(m)} wi(m)为前 m − 1 m-1 m−1个分类器对 x i x_i xi对错分指数,易知这与第m个分类器的 α , θ \alpha,\theta α,θ无关。即

w i ( m ) ≡ exp ( − y i F m − 1 ( x i ) ) w_{i}^{(m)}\equiv\exp(-y_{i}F_{m-1}(x_{i})) wi(m)≡exp(−yiFm−1(xi))

所以前m个分类器的目标函数可以写为

J ( α , θ ) = ∑ i = 1 N w i ( m ) exp ( − y i α ϕ ( x ; θ ) ) J(\alpha,\boldsymbol{\theta})=\sum_{i=1}^Nw_i^{(m)}\exp(-y_i\alpha\phi(\boldsymbol{x};\boldsymbol{\theta})) J(α,θ)=i=1∑Nwi(m)exp(−yiαϕ(x;θ))

由此我们选择的参数 θ m \theta_m θm 应是使得目标函数最小的,即错分的样本最少

θ m = arg min θ ∑ i = 1 N w i ( m ) exp ( − y i α ϕ ( x ; θ ) ) θ m = arg min θ { P m = ∑ i = 1 N w i ( m ) I ( 1 − y i ϕ ( x ; θ ) ) } \begin{aligned} \boldsymbol\theta_{m}&=\arg\min_{\theta}\sum_{i=1}^{N}w_{i}^{(m)}\exp(-y_{i}\alpha\phi(x;\theta))\\ \boldsymbol{\theta}_{m}&=\arg\min_{\theta}\left\{P_{m}=\sum_{i=1}^{N}w_{i}^{(m)}{I(1-y_{i}\phi(\boldsymbol{x};\boldsymbol{\theta}))}\right\} \end{aligned} θmθm=argθmini=1∑Nwi(m)exp(−yiαϕ(x;θ))=argθmin{Pm=i=1∑Nwi(m)I(1−yiϕ(x;θ))}

其中:

I ( 1 − y i ϕ ( x ; θ ) ) I(1-y_{i}\phi(\boldsymbol{x};\boldsymbol{\theta})) I(1−yiϕ(x;θ))是一个非0即1的函数,当 1 − y i ϕ ( x ; θ ) = 0 1-y_{i}\phi(\boldsymbol{x};\boldsymbol{\theta})=0 1−yiϕ(x;θ)=0是函数 I ( 1 − y i ϕ ( x ; θ ) ) = 0 I(1-y_{i}\phi(\boldsymbol{x};\boldsymbol{\theta}))=0 I(1−yiϕ(x;θ))=0,即正确分类时,函数 I ( 1 − y i ϕ ( x ; θ ) ) I(1-y_{i}\phi(\boldsymbol{x};\boldsymbol{\theta})) I(1−yiϕ(x;θ))为0,当错误分类时,函数 I ( 1 − y i ϕ ( x ; θ ) = 1 I(1-y_{i}\phi(\boldsymbol{x};\boldsymbol{\theta})=1 I(1−yiϕ(x;θ)=1;

P m P_m Pm为第m个分类器错误识别样本的平均权重。求 θ \theta θ通常在选择第m个分类器时候,选择阈值 P m < 0.5 P_m<0.5 Pm<0.5

所以优化目标可以改为:优化第m个分类器,使得错分样本对应的平均权重最小,即在第m个分类器的正确分类样本,尽可能时前m-1个分类器中错分指数大的样本即 w i ( m ) w_i^{(m)} wi(m) 大,求 θ m \theta_m θm。即可认为在集成过程中使得对 x i x_i xi分类错误的学习器的 w i ( m ) w_i^{(m)} wi(m)小,使得最终的学习器受到错分的影响最小。

J ( α , θ ) = ∑ i = 1 N w i ( m ) exp ( − y i α ϕ ( x ; θ ) ) J(\alpha,\theta)=\sum_{i=1}^{N}w_{i}^{(m)}\exp(-y_{i}\alpha\phi(x;\theta)) J(α,θ)=i=1∑Nwi(m)exp(−yiαϕ(x;θ))

因为

∑ y i ϕ ( x i ; θ m ) < 0 w i ( m ) = P m , y i ϕ ( x i ; θ m ) = − 1 ∑ y i ϕ ( x i ; θ m ) > 0 w i ( m ) = 1 − P m , y i ϕ ( x i ; θ m ) = 1 \begin{aligned}\sum_{y_{i}\phi(x_{i};\theta_{m})<0}w_{i}^{(m)}=&P_{m},\quad y_{i}\phi(x_{i};\theta_{m})=-1\\\sum_{y_{i}\phi(x_{i};\theta_{m})>0}w_{i}^{(m)}=&1-P_{m},\quad y_{i}\phi(x_{i};\theta_{m})=1\end{aligned} yiϕ(xi;θm)<0∑wi(m)=yiϕ(xi;θm)>0∑wi(m)=Pm,yiϕ(xi;θm)=−11−Pm,yiϕ(xi;θm)=1

所以

α m = arg min α { exp ( − α ) ( 1 − P m ) + exp ( α ) P m } \alpha_{m}=\arg\min_{\alpha}\{\exp(-\alpha)(1-P_{m})+\exp(\alpha)P_{m}\} αm=argαmin{exp(−α)(1−Pm)+exp(α)Pm}

由此设存在函数:

f ( a ) = exp ( − α ) ( 1 − P m ) + exp ( α ) P m f(a)=\exp(-\alpha)(1-P_{m})+\exp(\alpha)P_{m} f(a)=exp(−α)(1−Pm)+exp(α)Pm

对其求导数得到

f ′ ( α ) = P m exp ( α ) − ( 1 − P m ) exp ( − α ) \begin{aligned}f'(\alpha)=P_m\exp(\alpha)-(1-P_m)\exp(-\alpha)\end{aligned} f′(α)=Pmexp(α)−(1−Pm)exp(−α)

令 f ′ ( α ) = 0 f'(\alpha)=0 f′(α)=0得到 f ( α ) f(\alpha) f(α)的取最小值时的 α \alpha α 即 α m \alpha_m αm

α m = 1 2 ln 1 − P m P m \alpha_{m}=\frac{1}{2}\ln\frac{1-P_{m}}{P_{m}} αm=21lnPm1−Pm

由此更新权重

w i ( m + 1 ) ≡ exp ( − y i F m ( x i ) ) = w i ( m ) exp ( − y i α m ϕ ( x i ; θ m ) ) w_i^{(m+1)}\equiv\exp(-y_iF_m(\boldsymbol{x}_i))=w_i^{(m)}\exp(-y_i\alpha_m\phi(\boldsymbol{x}_i;\boldsymbol{\theta}_m)) wi(m+1)≡exp(−yiFm(xi))=wi(m)exp(−yiαmϕ(xi;θm))

将 α m = 1 2 ln 1 − P m P m \alpha_{m}=\frac{1}{2}\ln\frac{1-P_{m}}{P_{m}} αm=21lnPm1−Pm代入可得:

被第m个分类器正确分类的样本权值为:

y i ϕ ( x i ; θ m ) = 1 , w i ( m + 1 ) = w i ( m ) exp ( − α m ) = w i ( m ) P m 1 − P m y_{i}\phi(x_{i};\theta_{m})=1,\quad w_{i}^{(m+1)}=w_{i}^{(m)}\exp\left(-\alpha_{m}\right)=w_{i}^{(m)}\sqrt{\frac{P_{m}}{1-P_{m}}} yiϕ(xi;θm)=1,wi(m+1)=wi(m)exp(−αm)=wi(m)1−PmPm

被第m个分类器错误分类的样本权值为:

y i ϕ ( x i ; θ m ) = − 1 , u i ( m + 1 ) = w i ( m ) exp ( α m ) = w i ( m ) 1 − P m P m \begin{aligned}y_{i}\phi(x_{i};\theta_{m})=&-1,\quad u_{i}^{(m+1)}=w_{i}^{(m)}\exp\left(\alpha_{m}\right)=w_{i}^{(m)}\sqrt{\frac{1-P_{m}}{P_{m}}}\end{aligned} yiϕ(xi;θm)=−1,ui(m+1)=wi(m)exp(αm)=wi(m)Pm1−Pm

归一化:

w i ( m + 1 ) = w i ( m + 1 ) / ∑ i = 1 N w i ( m + 1 ) w_{i}^{(m+1)}=\left.w_{i}^{(m+1)}\right/\sum_{i=1}^{N}w_{i}^{(m+1)} wi(m+1)=wi(m+1)/i=1∑Nwi(m+1)

示例

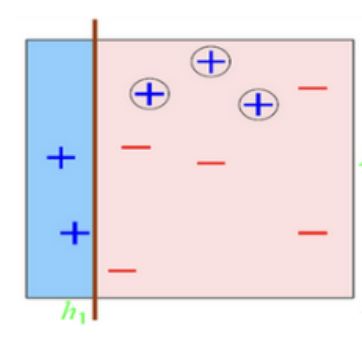

当存在10个样本如图所示

假设在正确分类的样本权值不变,错误分类的样本权值为: w i ( m + 1 ) = w i ( m ) 1 − P m P m w_{i}^{(m+1)}=w_{i}^{(m)}\frac{1-P_{m}}{P_{m}} wi(m+1)=wi(m)Pm1−Pm的情况下

首先初始化各分类器的权重

D 1 = ( w 11 , w 12 , ⋯ , w 1 N ) , w 1 i = 1 N , i = 1 , 2 , ⋯ , N D_1=\left(w_{11},w_{12},\cdots,w_{1N}\right),\quad w_{1i}=\frac1N,\quad i=1,2,\cdots,N D1=(w11,w12,⋯,w1N),w1i=N1,i=1,2,⋯,N

可得: аll x i , w i ( 0 ) = 0.1 \textit{аll }x_i\text{,}w^{(0)}_\mathrm{i}=0.1 аll xi,wi(0)=0.1

然后对其使用 h 1 h_1 h1分类器,分类结果如图所示

易知有三个样本被错误分类

其中正确分类的样本权值不变,错误分类的样本权值为:

w i ( m + 1 ) = w i ( m ) 1 − P m P m w_{i}^{(m+1)}=w_{i}^{(m)}\frac{1-P_{m}}{P_{m}} wi(m+1)=wi(m)Pm1−Pm

其中

P m = ∑ i = 1 N w i ( m ) I ( 1 − y i ϕ ( x ; θ ) ) P_{m}=\sum_{i=1}^{N}w_{i}^{(m)}{I(1-y_{i}\phi(\boldsymbol{x};\boldsymbol{\theta}))} Pm=i=1∑Nwi(m)I(1−yiϕ(x;θ))

即被错分的样本乘上平均错分权重

α m = 1 2 ln 1 − P m P m \alpha_{m}=\frac{1}{2}\ln\frac{1-P_{m}}{P_{m}} αm=21lnPm1−Pm

得到

P 1 = N F w ( 0 ) = 0.3 1 − P 1 = 0.7 α 1 = 1 2 ln ( 1 − P 1 P 1 ) = 1 2 ln ( 1 − 0.3 0.3 ) = 0.42 w i ( 1 ) = w ( 0 ) = 0.1 正确分类不变 w i ( 1 ) = w ( 0 ) 1 − P 1 P 1 = 0.233 错误分类 \begin{aligned} P_{1}&=N_Fw^{(0)}={0.3}\\ 1-P_{1}&={0.7}\\ \alpha_1&=\frac{1}{2}\ln(\frac{1-P_1}{P_1})=\frac{1}{2}\ln(\frac{1-0.3}{0.3})=0.42\\ w_{i}^{(1)}&=w^{(0)}=0.1 \quad \text{正确分类不变 }\\ w_i^{(1)}&=w^{(0)}\frac{1-P_{1}}{P_{1}}=0.233 \quad \text{错误分类} \end{aligned} P11−P1α1wi(1)wi(1)=NFw(0)=0.3=0.7=21ln(P11−P1)=21ln(0.31−0.3)=0.42=w(0)=0.1正确分类不变 =w(0)P11−P1=0.233错误分类

对其 w ( 1 ) w^{(1)} w(1)进行归一化处理

w i ( 1 ) = 0.1 0.1 ∗ 7 + 0.233 ∗ 3 1被正确分类 w i ( 1 ) = 0.233 0.1 ∗ 7 + 0.233 ∗ 3 1被错误分类 \begin{aligned} w_{i}^{(1)}=\frac{0.1}{0.1*7+0.233*3}\quad\text{1被正确分类}\\ w_{i}^{(1)}=\frac{0.233}{0.1*7+0.233*3}\quad\text{1被错误分类} \end{aligned} wi(1)=0.1∗7+0.233∗30.11被正确分类wi(1)=0.1∗7+0.233∗30.2331被错误分类

对其使用 h 2 h_2 h2分类器,分类结果如图所示

易知有三个样本被错误分类

P 2 = N F w i ( 1 ) = 3 ∗ 0.1 0.1 ∗ 7 + 0.233 ∗ 3 = 0.2144 α 2 = 1 2 ln ( 1 − P 2 P 2 ) = 1 2 ln ( 1 − 0.2144 0.2144 ) = 0.6493 w i ( 2 ) = w i ( 1 ) 正确分类不变 w i ( 2 ) = w i ( 1 ) 1 − P 2 P 2 = 0.3644 错误分类 \begin{aligned} P_2&=N_Fw^{(1)}_i=3*\frac{0.1}{0.1*7+0.233*3}=0.2144\\ \alpha_2&=\frac{1}{2}\ln(\frac{1-P_2}{P_2})=\frac12\ln\biggl(\frac{1-0.2144}{0.2144}\biggr)=0.6493\\ w_{i}^{(2)}&=w_i^{(1)}\quad \text{正确分类不变 }\\ w_i^{(2)}&=w_i^{(1)}\frac{1-P_{2}}{P_{2}}=0.3644 \quad \text{错误分类} \end{aligned} P2α2wi(2)wi(2)=NFwi(1)=3∗0.1∗7+0.233∗30.1=0.2144=21ln(P21−P2)=21ln(0.21441−0.2144)=0.6493=wi(1)正确分类不变 =wi(1)P21−P2=0.3644错误分类

对 w i ( 2 ) w^{(2)}_i wi(2)进行归一化

w i ( 1 ) = 0.1 0.1 ∗ 4 + 0.233 ∗ 3 + 0.3644 ∗ 3 1、2都被正确分类 w i ( 1 ) = 0.233 0.1 ∗ 4 + 0.233 ∗ 3 + 0.3644 ∗ 3 1被错误分类,2被正确分类 w i ( 1 ) = 0.3644 0.1 ∗ 4 + 0.233 ∗ 3 + 0.3644 ∗ 3 2被错误分类,1被正确分类 \begin{aligned} w_{i}^{(1)}&=\frac{0.1}{0.1*4+0.233*3+0.3644*3}\quad\text{1、2都被正确分类} \\ w_{i}^{(1)}&=\frac{0.233}{0.1*4+0.233*3+0.3644*3}\quad\text{1被错误分类,2被正确分类}\\ w_{i}^{(1)}&=\frac{0.3644}{0.1*4+0.233*3+0.3644*3}\quad\text{2被错误分类,1被正确分类}\\ \end{aligned} wi(1)wi(1)wi(1)=0.1∗4+0.233∗3+0.3644∗30.11、2都被正确分类=0.1∗4+0.233∗3+0.3644∗30.2331被错误分类,2被正确分类=0.1∗4+0.233∗3+0.3644∗30.36442被错误分类,1被正确分类

对其使用 h 3 h_3 h3分类器,分类结果如图所示

易知有一个样本被错误分类

P 2 = N F w i ( 1 ) = 1 ∗ 0.1 0.1 ∗ 4 + 0.233 ∗ 3 + 0.3644 ∗ 3 = 0.1356 α 2 = 1 2 ln ( 1 − P 3 P 3 ) = 1 2 ln ( 1 − 0.1356 0.1356 ) = 0.9223 w i ( 2 ) = w i ( 1 ) 正确分类不变 w i ( 2 ) = w i ( 1 ) 1 − P 3 P 3 = 0.6326 错误分类 \begin{aligned} P_2&=N_Fw^{(1)}_i=1*\frac{0.1}{0.1*4+0.233*3+0.3644*3}=0.1356\\ \alpha_2&=\frac{1}{2}\ln(\frac{1-P_3}{P_3})=\frac12\ln\biggl(\frac{1-0.1356}{0.1356}\biggr)=0.9223\\ w_{i}^{(2)}&=w_i^{(1)}\quad \text{正确分类不变 }\\ w_i^{(2)}&=w_i^{(1)}\frac{1-P_{3}}{P_{3}}=0.6326 \quad \text{错误分类} \end{aligned} P2α2wi(2)wi(2)=NFwi(1)=1∗0.1∗4+0.233∗3+0.3644∗30.1=0.1356=21ln(P31−P3)=21ln(0.13561−0.1356)=0.9223=wi(1)正确分类不变 =wi(1)P31−P3=0.6326错误分类

综上所述