Classic Convolutional Neural Networks - AlexNet

1. Background

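AlexNet was introduced in 2012 in the paper "ImageNet Classification with Deep Convolutional Neural Networks". It is widely regarded as the first deep convolutional neural network in the modern sense. The model is named after the paper's first author, Alex Krizhevsky. AlexNet has 8 learned layers. It won the ImageNet 2012 image recognition challenge (ILSVRC-2012) by a large margin, with a top-5 test error of 15.3% versus 26.2% for the runner-up.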

2. AlexNet Architecture

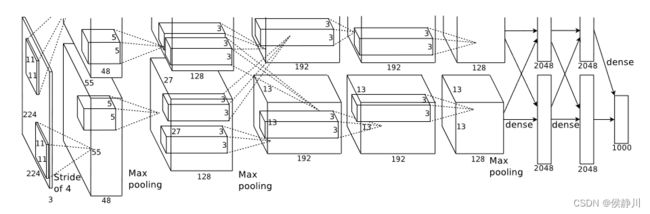

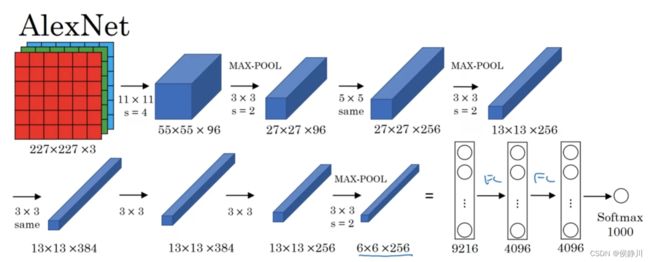

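The figures above show two views of the architecture. The first is the one the authors give in the paper: eight layers in total, five convolutional and three fully connected. The second is the version shown in Andrew Ng's deep learning course.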

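AlexNet has a lot in common with LeNet, but it is much larger. Because it was built for the ImageNet dataset, its final (softmax) output layer has 1000 neurons. LeNet has about 60 thousand parameters, while AlexNet has about 60 million. Some details from the AlexNet paper: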

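- The paper states an input size of 224 × 224: random 224 × 224 crops are taken from 256 × 256 images. However, the layer arithmetic only works out with 227 × 227, because (227 − 11) / 4 + 1 = 55 for the first convolution. Many implementations therefore use 227 × 227 inputs, or keep 224 × 224 and add padding to the first convolution.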

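- AlexNet uses the ReLU activation function, which trains much faster than the saturating nonlinearities used in traditional neural networks (such as sigmoid and tanh). The paper reports that a four-layer CNN with ReLUs reached 25% training error on CIFAR-10 six times faster than the same network with tanh.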

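- The paper trains the network on two GPUs using a fairly involved scheme. The kernels of each layer are split between the two GPUs, and the GPUs communicate only in certain layers.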

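- It uses local response normalization (LRN), which implements a form of lateral inhibition and improves the network's generalization. The paper reports that it reduces top-1 and top-5 error rates by 1.4% and 1.2%. LRN has since fallen out of use in deep learning, because other normalization techniques such as Batch Normalization usually work better and make training easier.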

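- Pooling is overlapping: the pooling window (3 × 3) is larger than the stride (2), so neighboring windows overlap. Compared with non-overlapping pooling, the paper reports that this reduces top-1 and top-5 error rates by 0.4% and 0.3%. It also observes that models with overlapping pooling are slightly harder to overfit.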

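- Dropout is applied in the first two fully connected layers. During training, the output of each hidden neuron is set to zero with probability 0.5. The dropped neurons take no part in the forward pass or in backpropagation, which reduces overfitting.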
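For reference, the LRN formula from the paper is written out below. The symbols are:

- $a^{i}_{x,y}$: the ReLU output of kernel $i$ at position $(x, y)$.
- $N$: the number of kernels in the layer.
- $n$: the number of adjacent kernel maps summed over.

The paper uses $k = 2$, $n = 5$, $\alpha = 10^{-4}$ and $\beta = 0.75$:

$$
b^{i}_{x,y} = \frac{a^{i}_{x,y}}{\left(k + \alpha \sum_{j=\max(0,\, i-n/2)}^{\min(N-1,\, i+n/2)} \left(a^{j}_{x,y}\right)^{2}\right)^{\beta}}
$$

One caveat applies when mapping this to PyTorch. According to its documentation, `nn.LocalResponseNorm` scales the sum by $\alpha/n$ rather than $\alpha$. So `alpha=0.0001, size=5` there corresponds to a paper-style $\alpha$ of $2 \times 10^{-5}$, and matching the paper exactly would need `alpha=5e-4`.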

3. PyTorch Implementation of AlexNet

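AlexNet will later be applied to a cat-vs-dog binary classification problem, so the network below is slightly modified:

- The output layer has 2 neurons instead of 1000.
- The two-GPU split is dropped, so the whole network runs as a single stack.

The implementation: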

```python
# model.py
import torch
from torch import nn
from torch.nn import Sequential


class AlexNet(nn.Module):
    def __init__(self, num_classes=2):
        super().__init__()
        # Five convolutional layers extract features
        self.features = Sequential(
            # Conv layer 1 + ReLU + LRN + pooling
            nn.Conv2d(in_channels=3, out_channels=96, kernel_size=11, stride=4, padding=2),
            nn.ReLU(inplace=True),
            nn.LocalResponseNorm(size=5, alpha=0.0001, beta=0.75, k=2),
            nn.MaxPool2d(kernel_size=3, stride=2),
            # Conv layer 2 + ReLU + LRN + pooling
            nn.Conv2d(in_channels=96, out_channels=256, kernel_size=5, padding=2),
            nn.ReLU(inplace=True),
            nn.LocalResponseNorm(size=5, alpha=0.0001, beta=0.75, k=2),
            nn.MaxPool2d(kernel_size=3, stride=2),
            # Conv layers 3-5, each followed by ReLU, then pooling
            nn.Conv2d(in_channels=256, out_channels=384, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=384, out_channels=384, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=384, out_channels=256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
        )
        # Three fully connected layers classify
        self.classifier = Sequential(
            # Dropout + linear layer 1
            nn.Dropout(p=0.5),
            nn.Linear(256 * 6 * 6, 4096),
            nn.ReLU(inplace=True),
            # Dropout + linear layer 2
            nn.Dropout(p=0.5),
            nn.Linear(4096, 4096),
            nn.ReLU(inplace=True),
            # Output layer: 2 classes (cat, dog) instead of ImageNet's 1000
            nn.Linear(4096, num_classes),
        )

    def forward(self, x):
        x = self.features(x)  # (N, 256, 6, 6) for a 224 x 224 input
        # Like Flatten: keep the batch dimension, flatten the rest to (N, 256 * 6 * 6)
        x = torch.flatten(x, 1)
        x = self.classifier(x)
        return x


if __name__ == '__main__':
    model = AlexNet()
    print(model)
    x = torch.ones((64, 3, 224, 224))
    output = model(x)
    print(output.shape)  # torch.Size([64, 2])
```
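The `256 * 6 * 6` in the first linear layer comes from tracing the spatial size through the feature extractor. Each convolution or pooling layer maps a side length W to floor((W − K + 2P) / S) + 1. The pure-Python sketch below applies this per layer; the helper names `conv_out`, `trace_sizes` and `count_params` are hypothetical, not part of PyTorch. It shows three cases:

- 224 × 224 with `padding=2` in conv1 (as in the code above) ends at 6 × 6.
- The paper-consistent 227 × 227 without padding also ends at 6 × 6.
- 224 × 224 without padding would end at 5 × 5.

It also tallies weights and biases, assuming the single-stack layout used here rather than the paper's two-GPU grouped layout. The 1000-class figure (about 62.4 million) is therefore slightly above the paper's "60 million".

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a conv/pool layer: floor((W - K + 2P) / S) + 1."""
    return (size - kernel + 2 * padding) // stride + 1


def trace_sizes(size, conv1_padding):
    """Follow the spatial size through the AlexNet feature extractor."""
    sizes = []
    size = conv_out(size, 11, stride=4, padding=conv1_padding)  # conv1
    sizes.append(size)
    size = conv_out(size, 3, stride=2)                           # overlapping max pool
    sizes.append(size)
    size = conv_out(size, 5, padding=2)                          # conv2
    sizes.append(size)
    size = conv_out(size, 3, stride=2)                           # max pool
    sizes.append(size)
    for _ in range(3):                                           # conv3-conv5 keep the size
        size = conv_out(size, 3, padding=1)
    sizes.append(size)
    size = conv_out(size, 3, stride=2)                           # max pool
    sizes.append(size)
    return sizes


def count_params(num_classes):
    """Weights + biases of the single-stack AlexNet (LRN and pooling have none)."""
    convs = [(3, 96, 11), (96, 256, 5), (256, 384, 3), (384, 384, 3), (384, 256, 3)]
    linears = [(256 * 6 * 6, 4096), (4096, 4096), (4096, num_classes)]
    total = sum(c_in * c_out * k * k + c_out for c_in, c_out, k in convs)
    total += sum(n_in * n_out + n_out for n_in, n_out in linears)
    return total


print(trace_sizes(224, conv1_padding=2))  # [55, 27, 27, 13, 13, 6]
print(trace_sizes(227, conv1_padding=0))  # [55, 27, 27, 13, 13, 6]
print(trace_sizes(224, conv1_padding=0))  # [54, 26, 26, 12, 12, 5]
print(count_params(2))                    # 58289538
print(count_params(1000))                 # 62378344
```

Almost all of the parameters sit in the first fully connected layer (9216 × 4096 weights). This is one reason later architectures moved away from large fully connected heads.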

4. Case Study: Cat vs. Dog Binary Classification

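The dataset's train folder contains 25,000 labeled images: 12,500 cats and 12,500 dogs. Download link: https://www.kaggle.com/c/dogs-vs-cats-redux-kernels-edition/data. The images in the test1 folder are unlabeled, so I used only the train folder. Because that folder holds so many images, I took only 2,000 of them (1,000 cats and 1,000 dogs) and split them 70/30 into a training set and a test set. The directory structure is: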

```
CatVSDog/
    train_cat/
    train_dog/
    test_cat/
    test_dog/
```
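The post does not say how the 2,000 images were chosen. The sketch below is one way to build this layout, under stated assumptions:

- Kaggle's train folder uses file names like `cat.0.jpg` and `dog.0.jpg`.
- A seeded random sample is an acceptable way to pick 1,000 images per class.

The function name `make_split` and the example paths are hypothetical.

```python
import os
import random
import shutil


def make_split(src_dir, dst_dir, per_class=1000, train_ratio=0.7, seed=0):
    """Build train_cat/train_dog/test_cat/test_dog folders from Kaggle's train folder.

    Assumes Kaggle-style file names such as "cat.0.jpg" and "dog.0.jpg".
    Randomly samples `per_class` images per class and splits them train/test.
    """
    rng = random.Random(seed)
    n_train = round(per_class * train_ratio)  # 700 training images per class by default
    for cls in ("cat", "dog"):
        # Sort first so the sample depends only on the seed, not on os.listdir order
        names = sorted(f for f in os.listdir(src_dir) if f.startswith(cls + "."))
        chosen = rng.sample(names, per_class)
        for split, chunk in (("train", chosen[:n_train]), ("test", chosen[n_train:])):
            out_dir = os.path.join(dst_dir, "{}_{}".format(split, cls))
            os.makedirs(out_dir, exist_ok=True)
            for name in chunk:
                shutil.copy(os.path.join(src_dir, name), os.path.join(out_dir, name))


# Example (hypothetical paths):
# make_split("../dataset/train", "../dataset/dataset_kaggledogvscat/CatVSDog")
```

Sampling from a fixed seed keeps the split reproducible across runs. The train and test folders never share a file, because each class's sample is sliced into disjoint parts.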

Custom Dataset:

```python
# dataset.py
import os

from PIL import Image
from torch.utils.data import Dataset


class CatvsDogDataSet(Dataset):
    def __init__(self, root_dir, label_dir, transform=None):
        # Root directory, i.e. the CatVSDog folder
        self.root_dir = root_dir
        # The label directory is the sub-folder name, e.g. "train_cat" or "test_dog"
        self.label_dir = label_dir
        # Data path = root directory + label directory, i.e. one cat or dog folder
        self.path = os.path.join(self.root_dir, self.label_dir)
        # All file names in that folder (a list)
        self.img_path = os.listdir(self.path)
        # Optional preprocessing
        self.transform = transform

    # Override __getitem__(): return one (image, label) pair
    def __getitem__(self, idx):
        img_name = self.img_path[idx]
        img_item_path = os.path.join(self.path, img_name)
        # Convert to RGB so every image has 3 channels, matching the model's input
        img = Image.open(img_item_path).convert("RGB")
        # Apply the transform if one was passed in
        if self.transform:
            img = self.transform(img)
        # Cat folders get label 0, dog folders get label 1
        if self.label_dir in ("train_cat", "test_cat"):
            label = 0
        else:
            label = 1
        return img, label

    # Override __len__(): number of samples in the dataset
    def __len__(self):
        return len(self.img_path)
```
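The training script below combines the cat and dog datasets with `+`. In PyTorch, `Dataset.__add__` returns a `torch.utils.data.ConcatDataset`. That class stores the cumulative lengths and uses a binary search to map a global index to a (dataset, local index) pair. `SimpleConcat` below is a hypothetical, torch-free re-creation of that idea for illustration, not the library's code.

```python
import bisect
from itertools import accumulate


class SimpleConcat:
    """Minimal stand-in for torch.utils.data.ConcatDataset (illustration only)."""

    def __init__(self, *datasets):
        self.datasets = list(datasets)
        # Running totals, e.g. lengths [700, 700] -> [700, 1400]
        self.cumulative_sizes = list(accumulate(len(d) for d in self.datasets))

    def __len__(self):
        return self.cumulative_sizes[-1]

    def __getitem__(self, idx):
        if idx < 0:
            idx += len(self)
        # Index of the first dataset whose running total exceeds idx
        ds = bisect.bisect_right(self.cumulative_sizes, idx)
        local = idx if ds == 0 else idx - self.cumulative_sizes[ds - 1]
        return self.datasets[ds][local]


cats = [("cat_img", 0)] * 3
dogs = [("dog_img", 1)] * 2
both = SimpleConcat(cats, dogs)
print(len(both))  # 5
print(both[2])    # ('cat_img', 0)
print(both[3])    # ('dog_img', 1)
```

The combined dataset lists all cats first and then all dogs. This is why `shuffle=True` in the training `DataLoader` matters: without it, early batches would contain only one class.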

Below is the code that trains the model:

```python
# train.py
import os

import torch
import torch.nn as nn
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

from dataset import *
from model import *

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# 1. Prepare the datasets
# Preprocessing: resize to 224x224, convert to a tensor, map each channel from [0, 1] to [-1, 1]
transform = torchvision.transforms.Compose([
    torchvision.transforms.Resize((224, 224)),
    torchvision.transforms.ToTensor(),
    torchvision.transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5]),
])

root_dir = "../dataset/dataset_kaggledogvscat/CatVSDog/"

# Load the training set; "+" concatenates the cat and dog datasets
train_cat_dataset = CatvsDogDataSet(root_dir, "train_cat", transform=transform)
train_dog_dataset = CatvsDogDataSet(root_dir, "train_dog", transform=transform)
train_dataset = train_cat_dataset + train_dog_dataset

# Load the test set
test_cat_dataset = CatvsDogDataSet(root_dir, "test_cat", transform=transform)
test_dog_dataset = CatvsDogDataSet(root_dir, "test_dog", transform=transform)
test_dataset = test_cat_dataset + test_dog_dataset

train_data_size = len(train_dataset)  # 1400
test_data_size = len(test_dataset)    # 600

# 2. Load the data with DataLoader
train_dataloader = DataLoader(train_dataset, batch_size=100, shuffle=True)
test_dataloader = DataLoader(test_dataset, batch_size=64, shuffle=False)

# 3. Create the model
model = AlexNet().to(device)

# 4. Loss function
loss_fn = nn.CrossEntropyLoss()

# 5. Optimizer
learning_rate = 1e-3
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

# 6. Training bookkeeping
total_train_step = 0  # number of training steps so far
total_test_step = 0   # number of evaluations so far
epoch = 10            # number of epochs

# Optional: log to TensorBoard
writer = SummaryWriter("./logs_AlexNet")
# The checkpoint folder must exist before torch.save writes to it
os.makedirs("train_model", exist_ok=True)

for i in range(epoch):
    print("----------- Epoch {} -----------".format(i + 1))

    # 7. Training
    model.train()  # Dropout active
    for imgs, targets in train_dataloader:
        imgs, targets = imgs.to(device), targets.to(device)
        outputs = model(imgs)
        loss = loss_fn(outputs, targets)

        # Backpropagate and update the weights
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step += 1
        if total_train_step % 5 == 0:
            print("Step: {}, Loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # 8. Evaluation
    model.eval()  # Dropout disabled
    total_test_loss = 0.0
    total_correct = 0
    # torch.no_grad() is a context manager that stops gradient tracking inside the with block
    with torch.no_grad():
        for imgs, targets in test_dataloader:
            imgs, targets = imgs.to(device), targets.to(device)
            outputs = model(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss += loss.item()  # sum of per-batch mean losses
            total_correct += (outputs.argmax(1) == targets).sum().item()

    test_accuracy = total_correct / test_data_size
    print("Total loss on the test set: {}".format(total_test_loss))
    print("Accuracy on the test set: {}".format(test_accuracy))
    total_test_step += 1
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", test_accuracy, total_test_step)

    # 9. Save the model after every epoch
    torch.save(model.state_dict(), "train_model/AlexNet_{}.pth".format(i + 1))

writer.close()
```
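The loop calls `model.train()` before training and `model.eval()` before testing because `nn.Dropout` behaves differently in the two modes. PyTorch uses "inverted" dropout. In training mode, each element is zeroed with probability p and the survivors are scaled by 1/(1 − p). In evaluation mode, the layer passes its input through unchanged. The AlexNet paper instead multiplied the outputs by 0.5 at test time, which is equivalent in expectation. The function below is a hypothetical pure-Python illustration of that behavior, not PyTorch's implementation.

```python
import random


def dropout(values, p=0.5, training=True, rng=random):
    """Inverted dropout on a list of activations (illustration only).

    Training: each element is zeroed with probability p and the survivors
    are scaled by 1 / (1 - p), so the expected value is unchanged.
    Evaluation: the input is returned unchanged.
    """
    if not training or p == 0:
        return list(values)
    if p == 1:
        return [0.0] * len(values)
    scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else v * scale for v in values]


acts = [1.0, 2.0, 3.0, 4.0]
print(dropout(acts, training=False))        # [1.0, 2.0, 3.0, 4.0]
print(dropout(acts, rng=random.Random(0)))  # each entry is either 0.0 or doubled
```

Forgetting `model.eval()` would leave this random masking on during testing, making the reported accuracy noisy and usually lower.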

After training finishes, view the train_loss curve in TensorBoard:

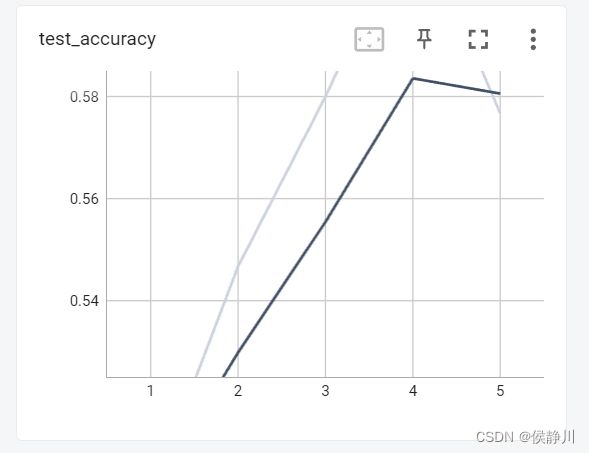

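On the test_accuracy curve, the accuracy peaks at only 61%. This is probably because the dataset (1,400 training images) is very small for a network with tens of millions of parameters.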
