Implementing a Fully Connected Neural Network in TensorFlow

Training goal

The training inputs train_x are random numbers generated with NumPy, and the labels train_y are produced by f(x) = sin x + cos x, that is, train_y = f(train_x).
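The data-generation step can be sketched in NumPy as follows. The batch size of 1000 and the fixed seed are assumptions made only for illustration (the listing in this post draws one sample per step). The check uses the standard identity sin x + cos x = √2 · sin(x + π/4), which shows that every label lies in [-√2, √2].

```python
import numpy as np

def f(x):
    """Target function from the post: f(x) = sin(x) + cos(x)."""
    return np.sin(x) + np.cos(x)

# Assumption: a fixed seed and a batch of 1000 samples, chosen only for illustration.
rng = np.random.default_rng(0)
train_x = rng.uniform(0, 2 * np.pi, size=(1000, 1))
train_y = f(train_x)

# Standard identity: sin(x) + cos(x) = sqrt(2) * sin(x + pi/4),
# so every label lies in [-sqrt(2), sqrt(2)].
assert np.allclose(train_y, np.sqrt(2) * np.sin(train_x + np.pi / 4))
print(train_x.shape, train_y.shape)
```

Because the labels stay within roughly ±1.41, a linear output layer (no activation) is a reasonable fit for this regression target.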

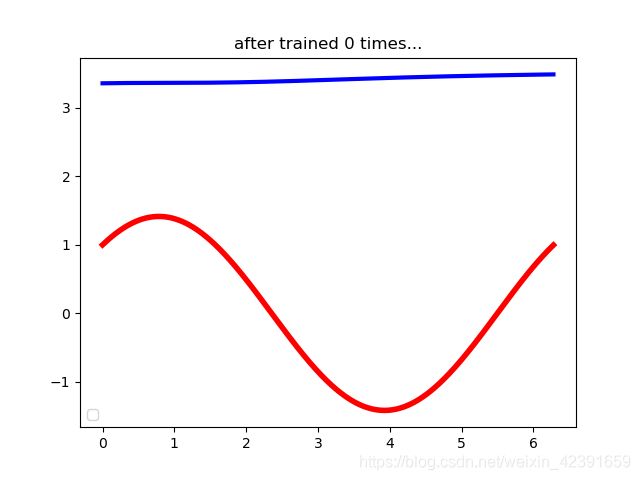

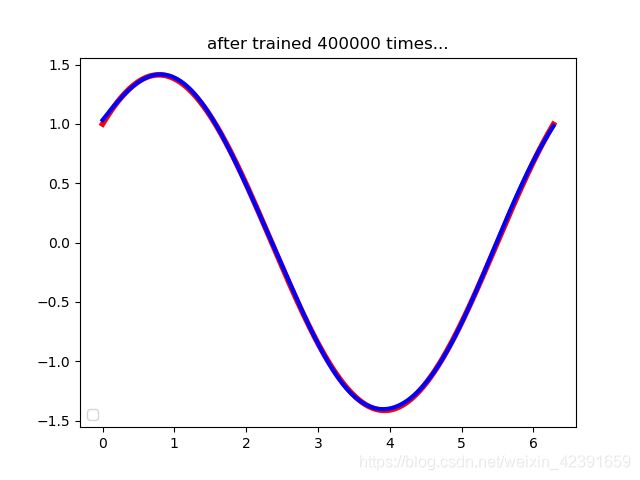

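Training results

The blue curve is the network's output after the given number of training iterations.

The red curve is the true graph of f(x), drawn directly with x = np.arange(0, 2 * np.pi, 0.01), y = f(x), pylab.plot(x, y).

As the number of training iterations increases, the two curves gradually overlap.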
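One way to put a number on how closely the two curves overlap is the mean squared error on the same grid used for plotting. The sketch below is not part of the original code: `curve_mse` is a hypothetical helper, and the "prediction" is a stand-in (the true curve shifted by 0.1), not real network output.

```python
import numpy as np

def curve_mse(predicted_y, x):
    """Mean squared error between predicted values and sin(x) + cos(x)."""
    true_y = np.sin(x) + np.cos(x)
    return float(np.mean((np.ravel(predicted_y) - np.ravel(true_y)) ** 2))

x = np.arange(0, 2 * np.pi, 0.01)
# Stand-in prediction (not real network output): the true curve with a constant offset of 0.1.
fake_prediction = np.sin(x) + np.cos(x) + 0.1
print(curve_mse(fake_prediction, x))  # approximately 0.01, the square of the offset
```

Calling such a helper on the network's predictions at each evaluation point would give a single value that should shrink as the blue curve approaches the red one.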


The code

(The approach borrows from the code in the book 《TensorFlow入门与实战》.)

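# Note: this listing uses the TensorFlow 1.x graph API
# (tf.placeholder, tf.Session, tf.variable_scope).
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt
import pylab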

'''
The purpose of this experiment is to practice using a fully connected network.
The training inputs are generated with NumPy; the labels are np.sin(x) + np.cos(x).
Each hidden layer computes a = sigmoid(a_prev * w + bias).

input layer:  1 node
hidden1:      20 nodes   w1 = [1, 20],   bias1 = [1, 20],  a1 = [1, 20]
hidden2:      15 nodes   w2 = [20, 15],  bias2 = [1, 15],  a2 = [1, 15]
hidden3:      10 nodes   w3 = [15, 10],  bias3 = [1, 10]
hidden4:      15 nodes   w4 = [10, 15],  bias4 = [1, 15]
hidden5:      10 nodes   w5 = [15, 10],  bias5 = [1, 10]
output layer: 1 output   w6 = [10, 1],   bias6 = [1]
'''

def func(x):
    # Target function to be learned
    y = np.sin(x) + np.cos(x)
    return y

def get_correct_graph():
    x = np.arange(0, 2 * np.pi, 0.01)  # 1-D array with n elements
    x = x.reshape((len(x), 1))  # reshape into an n-by-1 column
    y = func(x)
    # Plot the true curve in red
    pylab.plot(x, y, color='red', linewidth=4)
    # plt.axhline(y=0, color='red', linewidth=4)

def get_train_data():
    '''Return one random training sample (x, f(x)) with x drawn uniformly from [0, 2*pi).'''
    train_x = np.random.uniform(0, 2 * np.pi, size=1)
    train_y = func(train_x)
    return train_x, train_y

def inference(input_data):
    '''Define the forward pass. Every weight and bias is initialized from a
    normal distribution with mean 0 and standard deviation 1.'''
    with tf.variable_scope('hidden1'):
        weights = tf.get_variable('weight', [1, 20], tf.float32,
                                  initializer=tf.random_normal_initializer(0.0, 1))
        biases = tf.get_variable('bias', [1, 20], tf.float32,
                                 initializer=tf.random_normal_initializer(0.0, 1))
        # The input has a single feature, so an element-wise multiply with
        # broadcasting gives the same result as a [1, 1] x [1, 20] matmul.
        hidden1 = tf.sigmoid(tf.multiply(input_data, weights) + biases)
    with tf.variable_scope('hidden2'):
        weights = tf.get_variable('weight', [20, 15], tf.float32,
                                  initializer=tf.random_normal_initializer(0.0, 1))
        biases = tf.get_variable('bias', [1, 15], tf.float32,
                                 initializer=tf.random_normal_initializer(0.0, 1))
        hidden2 = tf.sigmoid(tf.matmul(hidden1, weights) + biases)
    with tf.variable_scope('hidden3'):
        weights = tf.get_variable('weight', [15, 10], tf.float32,
                                  initializer=tf.random_normal_initializer(0.0, 1))
        biases = tf.get_variable('bias', [1, 10], tf.float32,
                                 initializer=tf.random_normal_initializer(0.0, 1))
        hidden3 = tf.sigmoid(tf.matmul(hidden2, weights) + biases)
    with tf.variable_scope('hidden4'):
        weights = tf.get_variable('weight', [10, 15], tf.float32,
                                  initializer=tf.random_normal_initializer(0.0, 1))
        biases = tf.get_variable('bias', [1, 15], tf.float32,
                                 initializer=tf.random_normal_initializer(0.0, 1))
        hidden4 = tf.sigmoid(tf.matmul(hidden3, weights) + biases)
    with tf.variable_scope('hidden5'):
        weights = tf.get_variable('weight', [15, 10], tf.float32,
                                  initializer=tf.random_normal_initializer(0.0, 1))
        biases = tf.get_variable('bias', [1, 10], tf.float32,
                                 initializer=tf.random_normal_initializer(0.0, 1))
        hidden5 = tf.sigmoid(tf.matmul(hidden4, weights) + biases)
    with tf.variable_scope('output'):
        weights = tf.get_variable('weight', [10, 1], tf.float32,
                                  initializer=tf.random_normal_initializer(0.0, 1))
        biases = tf.get_variable('bias', [1], tf.float32,
                                 initializer=tf.random_normal_initializer(0.0, 1))
        # Linear output layer: no activation, since this is a regression task
        output = tf.matmul(hidden5, weights) + biases
    return output

def train():
    train_rate = 0.01
    # Placeholders for the input x and the label y
    x = tf.placeholder(tf.float32)
    y = tf.placeholder(tf.float32)
    y_hat = inference(x)
    loss = tf.square(y_hat - y)
    opt = tf.train.GradientDescentOptimizer(train_rate)
    train_opt = opt.minimize(loss)
    # Op that initializes all variables
    init = tf.global_variables_initializer()
    with tf.Session() as sess:
        sess.run(init)
        # Start feeding data
        print('start training....')
        for i in range(500000):  # 500,000 training steps
            train_x, train_y = get_train_data()
            sess.run(train_opt, feed_dict={x: train_x, y: train_y})
            # Evaluate the current model every 100,000 steps
            if i % 100000 == 0:
                test_x_ndarray = np.arange(0, 2 * np.pi, 0.01)
                test_y_ndarray = np.zeros([len(test_x_ndarray)])
                for ind, test_x in enumerate(test_x_ndarray):
                    # y_hat does not depend on y, so only x needs to be fed
                    test_y = sess.run(y_hat, feed_dict={x: test_x})
                    np.put(test_y_ndarray, ind, test_y)
                get_correct_graph()
                pylab.plot(test_x_ndarray, test_y_ndarray, color='blue', linewidth=3)
                # Labels are assigned in plotting order: the red true curve
                # first, then the blue prediction.
                plt.legend(['y', 'y_trained'], loc='lower left')
                plt.title('after training ' + str(i) + ' times...')
                plt.show()

if __name__ == '__main__':
    train()
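The layer shapes listed in the docstring can be checked without TensorFlow. The following is a minimal NumPy sketch of the same 1 → 20 → 15 → 10 → 15 → 10 → 1 forward pass, not the author's implementation: `LAYER_SIZES`, `sigmoid`, `init_params`, and `forward` are hypothetical names introduced here, the first layer uses a matrix product (equivalent to the listing's broadcast multiply for a single-feature input), and the output bias has shape [1, 1] rather than [1].

```python
import numpy as np

# Layer widths taken from the docstring in the listing above.
LAYER_SIZES = [1, 20, 15, 10, 15, 10, 1]

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_params(rng):
    """Draw every weight and bias from N(0, 1), mirroring the listing's initializers."""
    return [(rng.normal(0.0, 1.0, (n_in, n_out)), rng.normal(0.0, 1.0, (1, n_out)))
            for n_in, n_out in zip(LAYER_SIZES[:-1], LAYER_SIZES[1:])]

def forward(x, params):
    """Run the forward pass and record the activation shape after each layer."""
    a = x
    shapes = []
    for i, (w, b) in enumerate(params):
        z = a @ w + b
        # Sigmoid on hidden layers, linear output on the last layer.
        a = z if i == len(params) - 1 else sigmoid(z)
        shapes.append(a.shape)
    return a, shapes

params = init_params(np.random.default_rng(0))  # seed is an arbitrary choice
out, shapes = forward(np.array([[0.5]]), params)
print(shapes)
```

Each recorded shape should match the corresponding `a` entry in the docstring, which is a quick way to catch a mismatched weight dimension before building the TensorFlow graph.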