normalization in nn (batchnorm layernorm instancenorm groupnorm)

- 本文内容为笔者学习b站deep_thought老师视频的笔记。

- 本文将从源码角度深入学习剖析四种norm方式的区别。

- 本文只针对norm时计算mean 和 std 的方式进行解释,没有加入可学习的参数 γ \gamma γ和 β \beta β。

首先导入pytorch。

import torch

import torch.nn as nn

定义输入,本文以nlp或时间序列预测的数据结构为例。即[batch_size, time_step, embedding]

# 定义输入

batch_size = 2

time_step = 3

embedding = 4

inputx = torch.randn(batch_size, time_step, embedding)#使用torch.randn函数生成随机分布

下面为pytorch官方的解释。

https://pytorch.org/docs/stable/generated/torch.nn.BatchNorm1d.html

batch_norm

下面我们先调用官方的api实例化一个norm类。然后使用该类的call方法进行batchnorm的计算。

此处只讨论计算mean和std进行归一化的过程,不讨论可学习的参数。因此选择参数affine = False

#使用官方API实现batch norm

batch_norm_op = nn.BatchNorm1d(embedding, affine = False)

bn_y = batch_norm_op(inputx.transpose(-1, -2)).transpose(-1, -2)

print(bn_y)

可以看到运行结果:

tensor([[[ 0.4841, -0.2107, 1.2978, -1.4603],

[-0.3818, 1.9045, -0.7635, 1.6050],

[-1.5852, -0.0339, 0.7518, 0.0177]],

[[ 1.7162, -1.5197, -1.6548, -0.2374],

[-0.3759, -0.0073, 0.6093, 0.8224],

[ 0.1426, -0.1330, -0.2406, -0.7474]]])

下面我们来手写实现batchnorm的实现。

在此之前,读者应掌握torch.mean()的用法,需特别注意其中dim参数为要降低的维度(也即每次计算时贯穿的维度),即每次算mean时,综合dim维的所有元素算一次mean,而dim中不包括的维是计算dim时变化的维。所以算出的mean的维度与dim中不包含的那个维度一致。

以batchnorm为例,dim中包括(0,1),即每次算mean是把每个embedding的所有batchz-size和time_step的元素都求和再除以元素个数得到平均值。所以得到的mean维数为(1,1,embedding)。使用torch.squeeze()函数可把维度变为(embedding)。

ps:笔者在理解此处时耗费了大量时间,读者应画图思考一下,以便尽快理解。

#手写实现 batch_norm

#首先计算mean和std

bn_mean = inputx.mean(dim = (0, 1), keepdim = True)

bn_std = inputx.std(dim = (0, 1), keepdim = True, unbiased=False)

print(bn_mean)

print(bn_mean.shape)

print(bn_std)

#利用归一化公式进行计算

verify_bn_y = (inputx - bn_mean) / (bn_std + 1e-5)

print(verify_bn_y)

tensor([[[-0.0491, -0.1138, -0.2325, 0.0215]]])

torch.Size([1, 1, 4])

tensor([[[0.6325, 0.8457, 0.8910, 0.9523]]])

tensor([[[ 0.4841, -0.2107, 1.2978, -1.4603],

[-0.3818, 1.9045, -0.7635, 1.6050],

[-1.5852, -0.0339, 0.7518, 0.0177]],

[[ 1.7162, -1.5197, -1.6548, -0.2374],

[-0.3759, -0.0073, 0.6093, 0.8224],

[ 0.1426, -0.1330, -0.2406, -0.7474]]])

#验证官方api 与 手写 是否一致

print(torch.all(torch.isclose(bn_y, verify_bn_y)))

tensor(True)

可以看到结果一致。

layer_norm

https://pytorch.org/docs/stable/generated/torch.nn.LayerNorm.html

!(https://img-home.csdnimg.cn/images/202307

同样,我们首先调用官方api实例化layernorm对象并调用其方法实现layernorm的计算。

#使用官方API实现layer norm

layer_norm_op = nn.LayerNorm(embedding, elementwise_affine=False)

ln_y = layer_norm_op(inputx)

print(ln_y)

tensor([[[ 0.4491, -0.2048, 1.2429, -1.4871],

[-0.6924, 0.9547, -1.2657, 1.0035],

[-1.5988, 0.0681, 1.1311, 0.3995]],

[[ 1.4816, -0.7667, -1.0508, 0.3360],

[-1.0973, -0.7025, 0.3155, 1.4842],

[ 1.3758, 0.3859, -0.4303, -1.3314]]])

接下来手动实现与官方api比较结果是否一致。

# 手写实现layer_norm

ln_mean = inputx.mean(dim = 2, keepdim = True)

ln_std = inputx.std(dim = 2,keepdim = True, unbiased = False)

print(ln_mean.shape)

verify_ln_y = (inputx - ln_mean) / (ln_std + 1e-5)

print(verify_ln_y)

torch.Size([2, 3, 1])

tensor([[[ 0.4491, -0.2048, 1.2429, -1.4871],

[-0.6924, 0.9547, -1.2657, 1.0035],

[-1.5988, 0.0681, 1.1311, 0.3995]],

[[ 1.4816, -0.7667, -1.0508, 0.3360],

[-1.0973, -0.7025, 0.3155, 1.4842],

[ 1.3758, 0.3860, -0.4303, -1.3314]]])

可以看到是一致的。

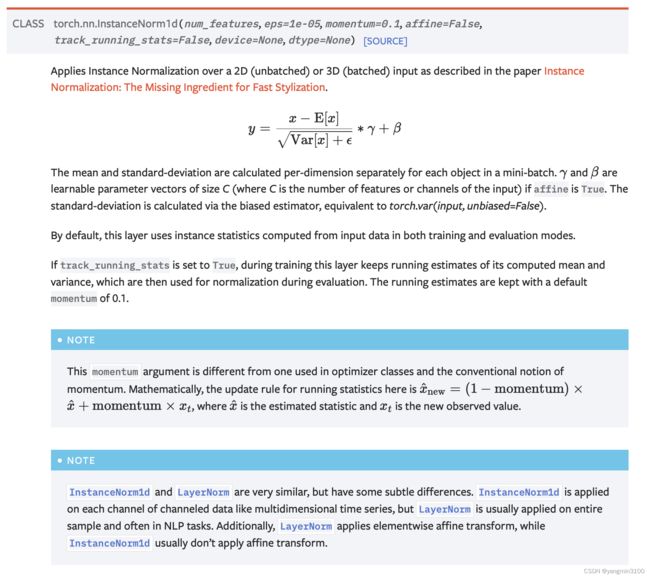

instance norm

https://pytorch.org/docs/stable/generated/torch.nn.InstanceNorm1d.html

#使用官方API实现instance norm

instance_norm_op = nn.InstanceNorm1d(embedding)

in_y = instance_norm_op(inputx.transpose(-1, -2)).transpose(-1 ,-2)

print(in_y)

tensor([[[ 1.1530, -0.7974, 0.9967, -1.2099],

[ 0.1326, 1.4102, -1.3672, 1.2390],

[-1.2856, -0.6128, 0.3706, -0.0291]],

[[ 1.3736, -1.4102, -1.3130, -0.2803],

[-0.9783, 0.7968, 1.1115, 1.3406],

[-0.3953, 0.6134, 0.2014, -1.0603]]])

#手动实现instance norm

in_mean = inputx.mean(dim = 1, keepdim = True)

in_std = inputx.std(dim = 1, keepdim = True, unbiased = False)

verify_in_y = (inputx - in_mean) / (in_std + 1e-5)

print(verify_in_y)

print(torch.all(torch.isclose(in_y, verify_in_y)))

tensor([[[ 1.1530, -0.7974, 0.9966, -1.2099],

[ 0.1326, 1.4102, -1.3672, 1.2390],

[-1.2856, -0.6128, 0.3706, -0.0291]],

[[ 1.3736, -1.4102, -1.3130, -0.2803],

[-0.9782, 0.7968, 1.1115, 1.3406],

[-0.3953, 0.6134, 0.2014, -1.0603]]])

tensor(True)

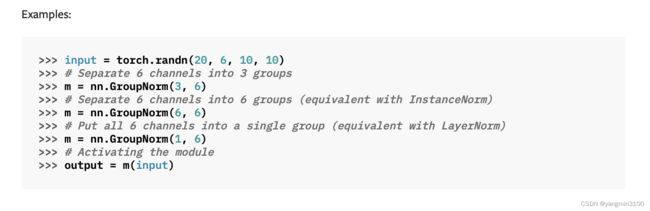

group norm

https://pytorch.org/docs/stable/generated/torch.nn.GroupNorm.html

# 使用官方API实现group_norm

num_groups = 2

group_norm_op = nn.GroupNorm(num_groups = 2, num_channels = embedding)

gn_y = group_norm_op(inputx.transpose(-1, -2)).transpose(-1 ,-2)

print(gn_y)

tensor([[[ 0.3367, -0.3720, 0.8071, -1.4704],

[-0.3701, 1.9366, -1.0171, 1.4289],

[-1.3523, -0.1789, 0.3240, -0.0724]],

[[ 1.6825, -1.7451, -1.7509, 0.1487],

[-0.1798, 0.0551, 0.8001, 1.4250],

[ 0.2818, -0.0945, -0.1575, -0.4653]]], grad_fn=)

#手写实现group_norm

#首先将inpux分组

group_inputx = torch.split(inputx, split_size_or_sections=embedding // num_groups, dim = -1)

results = []

for g_inputx in group_inputx:

gn_mean = g_inputx.mean(dim = (1, 2), keepdim = True)

gn_std = g_inputx.std(dim = (1, 2), keepdim = True, unbiased = False)

gn_result = (g_inputx - gn_mean) / (gn_std + 1e-5)

results.append(gn_result)

verify_gn_y = torch.cat(results, dim = -1)

print(verify_gn_y)

print(torch.all(torch.isclose(gn_y, verify_gn_y)))

tensor([[[ 0.3367, -0.3720, 0.8071, -1.4704],

[-0.3701, 1.9366, -1.0171, 1.4289],

[-1.3523, -0.1789, 0.3240, -0.0724]],

[[ 1.6825, -1.7451, -1.7509, 0.1487],

[-0.1798, 0.0551, 0.8001, 1.4250],

[ 0.2818, -0.0945, -0.1575, -0.4653]]])

tensor(True)

总结:可以看到,在不考虑可学习的参数时,这四种norm方式的本质不同在于计算mean和std时的方法不同,不同就是在mean函数的dim参数上。

读者在搞懂torch.mean()函数后,自己画一下立方体结合一下数据空间形态能很好理解。

作为记忆,读者可这样记录:

下面这篇文章对于batchnorm和layernorm的区别以及layernorm的机理介绍的很详细。

关于更多这四种norm方法中可学习参数维度的区别和使用方法,可参考这篇博客。

https://blog.csdn.net/qq_43827595/article/details/121877901