DIS Optical Flow Explained

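DIS optical flow comes from the paper "Fast Optical Flow using Dense Inverse Search", published by Till Kroeger et al. in 2016.

Goal: a fast dense optical flow algorithm.

Measured in my own tests (CPU version, fastest preset): about 20 ms for a 2000*1000 color image and about 10 ms for a 1000*1000 color image.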

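The calling code is as follows (calc requires single-channel 8-bit input, so the images are loaded as grayscale):

#include <opencv2/opencv.hpp>

cv::Ptr<cv::DISOpticalFlow> algorithm = cv::DISOpticalFlow::create(cv::DISOpticalFlow::PRESET_ULTRAFAST); // or PRESET_MEDIUM

cv::Mat im1 = cv::imread("1.jpg", cv::IMREAD_GRAYSCALE); // first frame

cv::Mat im2 = cv::imread("2.jpg", cv::IMREAD_GRAYSCALE); // second frame

cv::Mat map; // dense flow field

algorithm->calc(im1, im2, map);

Steps: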

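(1) Initialization: build the image pyramids for both images and initialize the other buffers, such as the image gradients.

(2) Loop from the coarsest (top) level down to the finest (bottom) level:

compute the patch-wise integrals from the gradient images of im1 at the current level;

run the inverse search to solve for the sparse flow field;

compute the dense flow from the sparse flow field;

(3) Resize the flow of the finest level to the size of the original image and multiply it by the corresponding scale factor to obtain the final flow map.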
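Both step (3) and the hand-off between pyramid levels rely on the same fact: flow vectors are measured in pixels, so enlarging a flow field by a factor s also requires multiplying the vectors by s. A minimal sketch of this, assuming nearest-neighbor upsampling by 2 (OpenCV uses resize instead); the Flow struct and upsampleFlow2x are illustrative names, not OpenCV API:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// A dense flow field stored row-major (illustrative type, not OpenCV API).
struct Flow {
    int w = 0, h = 0;
    std::vector<float> u, v;  // horizontal / vertical displacement per pixel
};

// Upsample a flow field to twice the resolution (nearest neighbor) and
// double the vectors so they are expressed in pixels of the finer level.
Flow upsampleFlow2x(const Flow& coarse) {
    assert(coarse.u.size() == static_cast<std::size_t>(coarse.w) * coarse.h);
    assert(coarse.v.size() == coarse.u.size());
    Flow fine;
    fine.w = coarse.w * 2;
    fine.h = coarse.h * 2;
    fine.u.resize(static_cast<std::size_t>(fine.w) * fine.h);
    fine.v.resize(fine.u.size());
    for (int y = 0; y < fine.h; ++y) {
        for (int x = 0; x < fine.w; ++x) {
            const std::size_t src = static_cast<std::size_t>(y / 2) * coarse.w + x / 2;
            const std::size_t dst = static_cast<std::size_t>(y) * fine.w + x;
            fine.u[dst] = 2.0f * coarse.u[src];
            fine.v[dst] = 2.0f * coarse.v[src];
        }
    }
    return fine;
}
```

A displacement of 1.5 pixels at the coarse level becomes 3 pixels one level finer, which is why the vectors are scaled together with the grid.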

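Principle

The algorithm consists of three main parts:

1) Patch-wise integrals, used by the inverse search. The inputs are the image gradients Ix and Iy in the x and y directions (computed with the Sobel operator).

For each sparse flow location (x,y), the following sums are taken over the patch covering x<=i<x+patchSize, y<=j<y+patchSize:

Ixx = sum( Ix(i,j)*Ix(i,j) )

Ixy = sum( Ix(i,j)*Iy(i,j) )

Iyy = sum( Iy(i,j)*Iy(i,j) )

IxSum = sum( Ix(i,j) )

IySum = sum( Iy(i,j) )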
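The five sums can be sketched directly for a single patch. This is a plain, non-optimized version for illustration only; OpenCV's precomputeStructureTensor produces them for all patch positions at once. PatchSums and patchSums are hypothetical names, and the gradients are assumed to be stored row-major in float arrays:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sums of gradient products over one patch (illustrative struct, not OpenCV API).
struct PatchSums {
    double Ixx = 0, Ixy = 0, Iyy = 0, IxSum = 0, IySum = 0;
};

// Accumulate the sums over the patchSize x patchSize patch whose top-left
// corner is (x, y). Ix and Iy are row-major gradient images of width `width`.
PatchSums patchSums(const std::vector<float>& Ix, const std::vector<float>& Iy,
                    int width, int x, int y, int patchSize) {
    assert(Ix.size() == Iy.size() && width > 0 && patchSize > 0);
    assert(x >= 0 && y >= 0 && x + patchSize <= width);
    assert(static_cast<std::size_t>(y + patchSize) * width <= Ix.size());
    PatchSums s;
    for (int j = y; j < y + patchSize; ++j) {
        for (int i = x; i < x + patchSize; ++i) {
            const std::size_t idx = static_cast<std::size_t>(j) * width + i;
            const double gx = Ix[idx];
            const double gy = Iy[idx];
            s.Ixx += gx * gx;
            s.Ixy += gx * gy;
            s.Iyy += gy * gy;
            s.IxSum += gx;
            s.IySum += gy;
        }
    }
    return s;
}
```

Ixx, Ixy and Iyy form the 2x2 matrix that the inverse search in part 2) inverts, so a patch whose gradients all point the same way (or vanish) gives a singular matrix and no reliable flow.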

2) Inverse search to obtain the sparse flow field. Inputs: the outputs of 1) and the current-level images I0 and I1_ext (I1 padded with a small replicated border).

Goal: find the flow (u,v) such that I0(x,y) = I1_ext(x+u,y+v) (1)

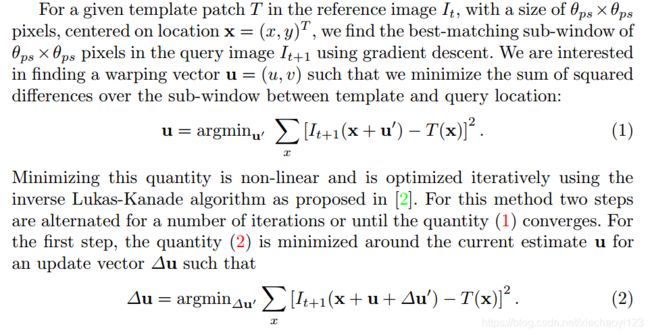

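Traditional approach: iteratively solve I0(x,y) = I1_ext(x+u+delU,y+v+delV) for (delU,delV) and update u += delU, v += delV. The gradient of I1_ext has to be re-evaluated at the new position in every iteration (see the corresponding formula in the paper).

Inverse search: solve I0(x+delU,y+delV) = I1_ext(x+u,y+v) instead, so that the increment is applied to I0. To first order this is equivalent to I0(x,y) = I1_ext(x+u-delU,y+v-delV), so the update is u -= delU, v -= delV (see the corresponding formula in the paper).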

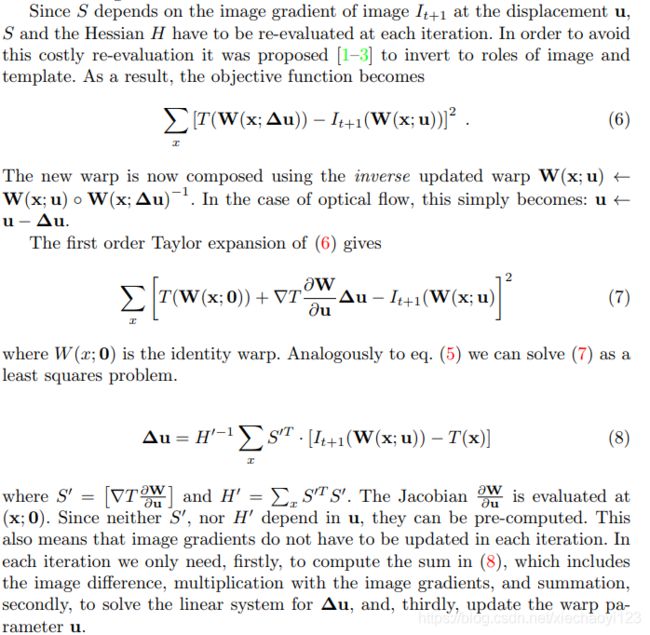

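Advantage: S (the gradient of I0 over the patch) and H (the 2x2 matrix built from it) only need to be computed once, before the iterations start, instead of in every iteration. This speeds up the computation considerably, with little difference in accuracy.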
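To make the "compute S and H once" point concrete, here is a minimal sketch of the inverse search for one patch. It solves H * (delU, delV) = sum( S^T * (I1(x+u,y+v) - I0(x,y)) ) in every iteration and applies u -= delU, v -= delV. Several things are assumptions made for brevity: the images are modeled as continuous functions instead of pixel arrays with bilinear interpolation, gradients use central differences instead of Sobel, and the names Image and inverseSearchPatch are hypothetical. It is not the OpenCV implementation.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

// Continuous image model used only for this sketch: I(x, y) -> intensity.
using Image = std::function<double(double, double)>;

// Estimate the flow (u, v) of the patch with top-left corner (px, py),
// starting from the initial guess (u, v).
std::pair<double, double> inverseSearchPatch(const Image& I0, const Image& I1,
                                             int px, int py, int patchSize,
                                             double u, double v, int iterations) {
    assert(patchSize > 0 && iterations >= 0);
    // Gradients S of I0 and the 2x2 matrix H are computed once, because
    // they do not depend on the current flow estimate.
    std::vector<double> gx, gy;
    double Ixx = 0, Ixy = 0, Iyy = 0;
    for (int j = py; j < py + patchSize; ++j) {
        for (int i = px; i < px + patchSize; ++i) {
            const double dx = 0.5 * (I0(i + 1, j) - I0(i - 1, j));  // central difference
            const double dy = 0.5 * (I0(i, j + 1) - I0(i, j - 1));
            gx.push_back(dx);
            gy.push_back(dy);
            Ixx += dx * dx;
            Ixy += dx * dy;
            Iyy += dy * dy;
        }
    }
    const double det = Ixx * Iyy - Ixy * Ixy;
    if (std::abs(det) < 1e-12) return {u, v};  // untextured patch: keep the guess

    for (int it = 0; it < iterations; ++it) {
        // Only the residual I1(x + u) - I0(x) changes between iterations.
        double bx = 0, by = 0;
        std::size_t k = 0;
        for (int j = py; j < py + patchSize; ++j) {
            for (int i = px; i < px + patchSize; ++i, ++k) {
                const double diff = I1(i + u, j + v) - I0(i, j);
                bx += gx[k] * diff;
                by += gy[k] * diff;
            }
        }
        // Solve H * (du, dv) = (bx, by) with the closed-form 2x2 inverse,
        // then apply the inverse update.
        const double du = (Iyy * bx - Ixy * by) / det;
        const double dv = (Ixx * by - Ixy * bx) / det;
        u -= du;
        v -= dv;
    }
    return {u, v};
}
```

In the traditional scheme the gradient loop would sit inside the iteration loop, because the gradients would be taken from I1 at the shifted position; here each iteration only re-evaluates the intensity differences.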

3) Dense flow computation. Inputs: the sparse flow field and the two images I0 and I1.

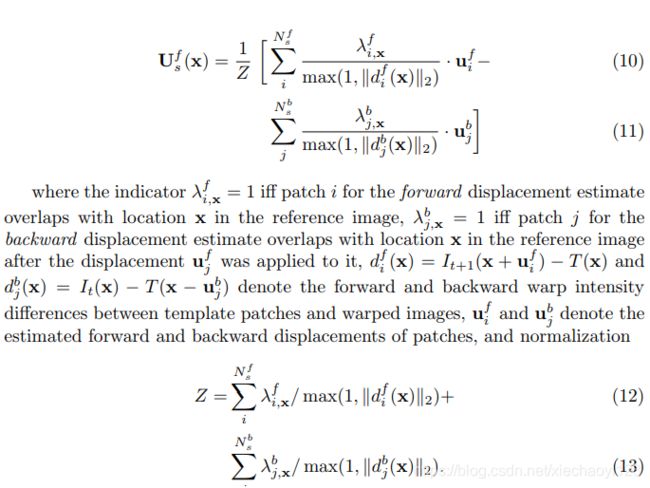

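At the current level, the flow of each pixel is the weighted average of the sparse flows of all patches that contain it: UV = sum( wi*sparse_UV(i) ) / sum( wi ), with weights wi = 1.0f/max(1.0f, |I0(x) - I1(x+sparse_UV(i))|) (see the corresponding formula in the paper).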
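The weighting can be sketched for a single pixel as follows. The function assumes that the caller has already collected, for this pixel, the flow of every covering patch and the intensity difference I0(x) - I1(x+sparse_UV(i)); densifyPixel is a hypothetical name, not an OpenCV function:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Blend the flows of all patches covering one pixel.
// flows[i]     : sparse flow (u, v) of the i-th covering patch
// residuals[i] : I0(x) - I1(x + flows[i]) evaluated at this pixel
std::pair<double, double> densifyPixel(const std::vector<std::pair<double, double>>& flows,
                                       const std::vector<double>& residuals) {
    assert(!flows.empty() && flows.size() == residuals.size());
    double sumU = 0, sumV = 0, sumW = 0;
    for (std::size_t i = 0; i < flows.size(); ++i) {
        // Patches whose flow explains this pixel poorly get a smaller weight;
        // the max() caps the weight at 1 for residuals below one gray level.
        const double w = 1.0 / std::max(1.0, std::abs(residuals[i]));
        sumU += w * flows[i].first;
        sumV += w * flows[i].second;
        sumW += w;
    }
    return {sumU / sumW, sumV / sumW};  // normalize by the total weight
}
```

Without the division by the total weight, a pixel covered by several patches would end up with a flow several times too large, so the normalization is part of the formula rather than an optional step.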

The corresponding OpenCV source code:

void DISOpticalFlowImpl::calc(InputArray I0, InputArray I1, InputOutputArray flow)

{

CV_Assert(!I0.empty() && I0.depth() == CV_8U && I0.channels() == 1);

CV_Assert(!I1.empty() && I1.depth() == CV_8U && I1.channels() == 1);

CV_Assert(I0.sameSize(I1));

CV_Assert(I0.isContinuous());

CV_Assert(I1.isContinuous());

Mat I0Mat = I0.getMat();

Mat I1Mat = I1.getMat();

bool use_input_flow = false;

if (flow.sameSize(I0) && flow.depth() == CV_32F && flow.channels() == 2)

use_input_flow = true;

else

flow.create(I1Mat.size(), CV_32FC2);

Mat &flowMat = flow.getMatRef();

coarsest_scale = (int)(log((2 * I0Mat.cols) / (4.0 * patch_size)) / log(2.0) + 0.5) - 1;

int num_stripes = getNumThreads();

prepareBuffers(I0Mat, I1Mat, flowMat, use_input_flow); // initialization

Ux[coarsest_scale].setTo(0.0f);

Uy[coarsest_scale].setTo(0.0f);

for (int i = coarsest_scale; i >= finest_scale; i--)

{

w = I0s[i].cols;

h = I0s[i].rows;

ws = 1 + (w - patch_size) / patch_stride;

hs = 1 + (h - patch_size) / patch_stride;

precomputeStructureTensor(I0xx_buf, I0yy_buf, I0xy_buf, I0x_buf, I0y_buf, I0xs[i], I0ys[i]); // compute the patch-wise integrals

// Inverse search

if (use_spatial_propagation)

{

/* Use a fixed number of stripes regardless the number of threads to make inverse search

* with spatial propagation reproducible

*/

parallel_for_(Range(0, 8), PatchInverseSearch_ParBody(*this, 8, hs, Sx, Sy, Ux[i], Uy[i], I0s[i],

I1s_ext[i], I0xs[i], I0ys[i], 2, i));

}

else

{

parallel_for_(Range(0, num_stripes),

PatchInverseSearch_ParBody(*this, num_stripes, hs, Sx, Sy, Ux[i], Uy[i], I0s[i], I1s_ext[i],

I0xs[i], I0ys[i], 1, i));

}

// Densification

parallel_for_(Range(0, num_stripes),

Densification_ParBody(*this, num_stripes, I0s[i].rows, Ux[i], Uy[i], Sx, Sy, I0s[i], I1s[i]));

// Variational refinement of the result (the fastest preset skips this step)

if (variational_refinement_iter > 0)

variational_refinement_processors[i]->calcUV(I0s[i], I1s[i], Ux[i], Uy[i]);

// Upsample the flow of the current level to initialize the next finer level

if (i > finest_scale)

{

resize(Ux[i], Ux[i - 1], Ux[i - 1].size());

resize(Uy[i], Uy[i - 1], Uy[i - 1].size());

Ux[i - 1] *= 2;

Uy[i - 1] *= 2;

}

}

// Resize the finest-level flow to the original image size to obtain the final result

Mat uxy[] = { Ux[finest_scale], Uy[finest_scale] };

merge(uxy, 2, U);

resize(U, flowMat, flowMat.size());

flowMat *= 1 << finest_scale;

}
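Two expressions in the listing are easier to follow with numbers plugged in: the coarsest pyramid level (coarsest_scale) and the size of the patch grid (ws, hs). The sketch below mirrors those two expressions as free functions. The function names are illustrative, and the patch size of 8 and stride of 4 used in the note afterwards are example values, not taken from the source:

```cpp
#include <cassert>
#include <cmath>

// Coarsest pyramid level, mirroring the coarsest_scale expression in calc().
int coarsestScale(int cols, int patchSize) {
    assert(cols > 0 && patchSize > 0);
    return static_cast<int>(std::log((2.0 * cols) / (4.0 * patchSize)) / std::log(2.0) + 0.5) - 1;
}

// Number of patches along one axis of a level that is `length` pixels long,
// mirroring the ws / hs expressions in calc().
int patchCount(int length, int patchSize, int patchStride) {
    assert(length >= patchSize && patchStride > 0);
    return 1 + (length - patchSize) / patchStride;
}
```

For a 1000-pixel-wide image and a patch size of 8, coarsestScale returns 5, so the coarsest level is roughly 1000 / 32 = 31 pixels wide. A level that is 250 pixels wide with patch size 8 and stride 4 holds 61 patches per row.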