Ming-Ming Cheng¹, Ziming Zhang², Wen-Yan Lin³, Philip Torr¹

¹The University of Oxford, ²Boston University, ³Brookes Vision Group

Fig. 1. Although object (red) and non-object (green) windows vary enormously in image space (a), at proper scales and aspect ratios where they correspond to a small fixed size (b), their normed gradients, i.e. NG features (c), are strongly correlated. We learn a single 64D linear model (d) for selecting object proposals based on their NG features.
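As a rough illustration of panels (c) and (d), the C++ sketch below computes a 64D normed-gradient (NG) feature for a window that has already been resized to 8 × 8 and scores it with a linear model. It assumes a single grayscale channel and a simple [-1, 0, 1] difference operator with one-sided differences at the border. The names `Patch8x8`, `normedGradients`, and `linearScore` are hypothetical and do not come from the released code. The weights `w` would come from training, e.g. a linear SVM.

```cpp
#include <algorithm>
#include <array>
#include <cstdint>
#include <cstdlib>

// A window already resized to 8x8, grayscale 0..255 (assumption: resizing is done elsewhere).
using Patch8x8 = std::array<std::array<std::uint8_t, 8>, 8>;
using NGFeature = std::array<std::uint8_t, 64>;

// Normed gradient per pixel: min(|gx| + |gy|, 255), using a [-1, 0, 1] difference
// in the interior and a one-sided difference at the patch border.
NGFeature normedGradients(const Patch8x8& p) {
    NGFeature g{};
    for (int y = 0; y < 8; ++y) {
        for (int x = 0; x < 8; ++x) {
            const int xl = std::max(x - 1, 0), xr = std::min(x + 1, 7);
            const int yu = std::max(y - 1, 0), yd = std::min(y + 1, 7);
            const int gx = std::abs(int(p[y][xr]) - int(p[y][xl]));
            const int gy = std::abs(int(p[yd][x]) - int(p[yu][x]));
            g[y * 8 + x] = static_cast<std::uint8_t>(std::min(gx + gy, 255));
        }
    }
    return g;
}

// Objectness score of a window: inner product of the 64D NG feature with a learned linear model w.
float linearScore(const std::array<float, 64>& w, const NGFeature& g) {
    float s = 0.0f;
    for (int i = 0; i < 64; ++i) s += w[i] * g[i];
    return s;
}
```

A flat patch has zero gradients everywhere, so any model scores it 0. A patch with a sharp vertical edge produces large values only in the two columns adjacent to the edge.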


Abstract

Training a generic objectness measure to produce a small set of candidate object windows has been shown to speed up the classical sliding window object detection paradigm. We observe that generic objects with a well-defined closed boundary share a surprisingly strong correlation in normed gradients space when their corresponding image windows are resized to a small fixed size. Based on this observation, and for computational reasons, we propose to resize an image window to 8 × 8 and use the normed gradients as a simple 64D feature to describe it, for explicitly training a generic objectness measure. We further show how the binarized version of this feature, namely binarized normed gradients (BING), can be used for efficient objectness estimation, which requires only a few atomic operations (e.g. ADD, BITWISE SHIFT, etc.). Experiments on the challenging PASCAL VOC 2007 dataset show that our method efficiently (300fps on a single laptop CPU) generates a small set of category-independent, high-quality object windows, yielding a 96.2% object detection rate (DR) with 1,000 proposals. By increasing the number of proposals and the number of color spaces used for computing BING features, our performance can be further improved to 99.5% DR.
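The binarized scoring idea can be sketched as follows. This sketch makes a few assumptions. The 64D model `w` is approximated greedily by a few binary basis vectors, w ≈ Σ_j β_j a_j with a_j ∈ {-1, +1}^64. An NG feature is approximated by its top few bit planes. The inner product of a ±1 basis with a 0/1 bit plane b then equals 2·popcount(a⁺ & b) - popcount(b), where a⁺ marks the +1 entries. Each window therefore needs only bitwise ANDs, bit counts, power-of-two weights, integer adds, and one float multiply per basis. All names here are hypothetical. This illustrates the idea and is not the released implementation.

```cpp
#include <array>
#include <bitset>
#include <cstdint>
#include <vector>

// One binary basis a_j in {-1,+1}^64, stored as a mask of its +1 entries, with coefficient beta_j.
struct BinaryBasis { std::uint64_t plus; float beta; };

int popcount64(std::uint64_t v) { return static_cast<int>(std::bitset<64>(v).count()); }

// Greedy approximation w ~ sum_j beta_j * a_j (assumed scheme): take the sign of the
// residual, project the residual onto it, subtract, and repeat.
std::vector<BinaryBasis> binarizeModel(const std::array<float, 64>& w, int numBases) {
    std::array<float, 64> r = w;
    std::vector<BinaryBasis> bases;
    for (int j = 0; j < numBases; ++j) {
        std::uint64_t plus = 0;
        float dot = 0.0f;  // <a, r> where a = sign(r)
        for (int i = 0; i < 64; ++i) {
            if (r[i] >= 0.0f) { plus |= (std::uint64_t{1} << i); dot += r[i]; }
            else { dot -= r[i]; }
        }
        const float beta = dot / 64.0f;  // projection coefficient, since ||a||^2 = 64
        for (int i = 0; i < 64; ++i) r[i] -= ((plus >> i) & 1) ? beta : -beta;
        bases.push_back({plus, beta});
    }
    return bases;
}

// Bit plane k holds bit (7 - k) of every NG byte, so g ~ sum_k 2^(7-k) * plane_k.
std::vector<std::uint64_t> bitPlanes(const std::array<std::uint8_t, 64>& g, int numPlanes) {
    std::vector<std::uint64_t> planes(numPlanes, 0);
    for (int k = 0; k < numPlanes; ++k)
        for (int i = 0; i < 64; ++i)
            if ((g[i] >> (7 - k)) & 1) planes[k] |= (std::uint64_t{1} << i);
    return planes;
}

// Approximate score using <a, b> = 2 * popcount(a+ & b) - popcount(b) for each bit plane b.
float bingScore(const std::vector<BinaryBasis>& bases, const std::vector<std::uint64_t>& planes) {
    float score = 0.0f;
    const int numPlanes = static_cast<int>(planes.size());
    for (const auto& a : bases) {
        int acc = 0;
        for (int k = 0; k < numPlanes; ++k) {
            const int ab = 2 * popcount64(a.plus & planes[k]) - popcount64(planes[k]);
            acc += ab * (1 << (7 - k));  // plane weight 2^(7-k)
        }
        score += a.beta * static_cast<float>(acc);  // one float multiply per basis
    }
    return score;
}
```

Using all 8 bit planes represents the NG feature exactly, so the only remaining error then comes from the binary approximation of `w`. Using fewer planes or bases trades accuracy for speed.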

Papers

- BING: Binarized Normed Gradients for Objectness Estimation at 300fps. Ming-Ming Cheng, Ziming Zhang, Wen-Yan Lin, Philip Torr, IEEE CVPR, 2014. [Project page][pdf][bib]

Results

Figure. Tradeoff between the number of proposal windows (#WIN) and the detection rate (DR) (see [3] for more comparisons with other methods [6, 12, 16, 20, 25, 28, 30, 42] on the same benchmark). Our method achieves 96.2% DR using 1,000 proposals and 99.5% DR using 5,000 proposals.
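For reference, DR at a given #WIN can be computed as the fraction of ground-truth objects covered by at least one of the top-ranked proposals. The sketch below assumes the standard PASCAL overlap criterion (intersection-over-union ≥ 0.5). It also assumes that each image's proposals are already sorted by decreasing objectness. The `Box` type and the function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Box { float x1, y1, x2, y2; };  // corner coordinates, with x2 > x1 and y2 > y1

// Intersection-over-union of two boxes.
float iou(const Box& a, const Box& b) {
    const float iw = std::min(a.x2, b.x2) - std::max(a.x1, b.x1);
    const float ih = std::min(a.y2, b.y2) - std::max(a.y1, b.y1);
    if (iw <= 0.0f || ih <= 0.0f) return 0.0f;  // no overlap
    const float inter = iw * ih;
    const float areaA = (a.x2 - a.x1) * (a.y2 - a.y1);
    const float areaB = (b.x2 - b.x1) * (b.y2 - b.y1);
    return inter / (areaA + areaB - inter);
}

// DR at #WIN = numWindows: fraction of ground-truth boxes matched (IoU >= minOverlap)
// by at least one of the first numWindows proposals of the same image.
double detectionRate(const std::vector<std::vector<Box>>& groundTruth,
                     const std::vector<std::vector<Box>>& proposals,
                     std::size_t numWindows, float minOverlap = 0.5f) {
    assert(proposals.size() == groundTruth.size());  // one proposal list per image
    std::size_t covered = 0, total = 0;
    for (std::size_t img = 0; img < groundTruth.size(); ++img) {
        const auto& props = proposals[img];
        const std::size_t n = std::min(numWindows, props.size());
        for (const Box& gt : groundTruth[img]) {
            ++total;
            for (std::size_t i = 0; i < n; ++i) {
                if (iou(gt, props[i]) >= minOverlap) { ++covered; break; }
            }
        }
    }
    return total ? static_cast<double>(covered) / total : 0.0;
}
```

Sweeping `numWindows` and plotting the result gives a #WIN vs. DR curve of the kind shown above.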

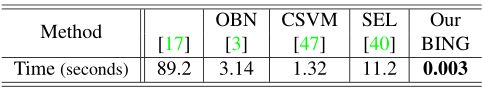

Table 1. Average computational time on VOC2007.

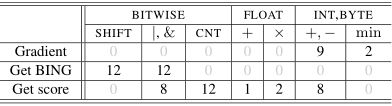

Table 2. Average number of atomic operations for computing the objectness of each image window at different stages: calculating normed gradients, extracting BING features, and computing the objectness score.
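The feature-extraction stage does not need to re-read every 8 × 8 window from scratch. Start from a binary map, for example one bit plane of the NG map. With only shifts and ORs, you can keep an 8-bit code per pixel that holds the last 8 pixels of its row. You can also keep a 64-bit code per pixel that holds the last 8 row codes above it. The sketch below uses this approach, and its bit layout is our own assumption. `windowCodes` is a hypothetical name, not a function from the released code.

```cpp
#include <cstdint>
#include <vector>

// binMap: row-major width x height map of 0/1 values.
// Returns one 64-bit code per pixel, packing the 8x8 window whose bottom-right pixel is
// (x, y). Only positions with x >= 7 and y >= 7 describe windows fully inside the map.
std::vector<std::uint64_t> windowCodes(const std::vector<std::uint8_t>& binMap, int width, int height) {
    std::vector<std::uint8_t> rowCode(binMap.size(), 0);   // last 8 pixels of the row
    std::vector<std::uint64_t> code(binMap.size(), 0);     // last 8 row codes of the column
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const int i = y * width + x;
            const std::uint8_t left = (x > 0) ? rowCode[i - 1] : 0;
            // Shift the row code left by one; the oldest pixel falls off the 8-bit value.
            rowCode[i] = static_cast<std::uint8_t>((left << 1) | (binMap[i] & 1));
            const std::uint64_t up = (y > 0) ? code[i - width] : 0;
            // Shift the window code up by one row (8 bits) and append the current row code.
            code[i] = (up << 8) | rowCode[i];
        }
    }
    return code;
}
```

In this layout, the pixel at offset (dx, dy) up and to the left of the bottom-right corner lands in bit 8·dy + dx. Each code can therefore be passed directly to a popcount-based scorer.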

Figure. Illustration of the true positive object proposals for VOC2007 test images.

Downloads

The C++ source code of our method is publicly available for download. OpenCV-compatible VOC 2007 annotations can be found here. A Matlab file for making the figure plots in the paper is also provided.

Links to the most closely related works:

- Measuring the objectness of image windows. Alexe, B., Deselaers, T. and Ferrari, V. PAMI 2012.

- Selective Search for Object Recognition, Jasper R. R. Uijlings, Koen E. A. van de Sande, Theo Gevers, Arnold W. M. Smeulders, International Journal of Computer Vision, Volume 104 (2), pages 154-171, 2013.

- Category-Independent Object Proposals With Diverse Ranking, Ian Endres, and Derek Hoiem, PAMI February 2014.

- Proposal Generation for Object Detection using Cascaded Ranking SVMs. Ziming Zhang, Jonathan Warrell and Philip H.S. Torr, IEEE CVPR, 2011: 1497-1504. (PDF)

- Efficient Salient Region Detection with Soft Image Abstraction. Ming-Ming Cheng, Jonathan Warrell, Wen-Yan Lin, Shuai Zheng, Vibhav Vineet, Nigel Crook. IEEE ICCV, 2013.

- Global Contrast based Salient Region Detection. Ming-Ming Cheng, Guo-Xin Zhang, Niloy J. Mitra, Xiaolei Huang, Shi-Min Hu. IEEE CVPR, 2011, p. 409-416. (2nd most cited paper in CVPR 2011)

Our method directly addresses the major speed limitation of:

- Fast, Accurate Detection of 100,000 Object Classes on a Single Machine, CVPR 2013 (best paper).

- Regionlets for Generic Object Detection, ICCV 2013 oral. (Runner-up in the ImageNet large scale object detection challenge; achieves the best reported performance on PASCAL VOC.)

Applications

If you have developed exciting new extensions, applications, etc., please email me a link and I will add it here:

Third-party resources

If you have made a version that runs on other platforms (e.g. Mac, Linux, VS2010, or makefile projects) and want to share it with others, please email me the URL and I will add a link here. If you are interested in translating the paper into other languages, e.g. Chinese, I'm very happy to supply the original LaTeX source and add a pointer here to your translation.

FAQs

Since the source code was released 2 days ago, 500+ students and researchers have downloaded it (according to email records). Here are some frequently asked questions from users. Please read the FAQs before sending me new emails. Questions already answered in the FAQs will not receive a reply.

1. I downloaded your code but can't compile it in Visual Studio 2008 or 2010. Why?

I use Visual Studio 2012 for development, and the shared source code is guaranteed to work under Visual Studio 2012. The algorithm itself doesn't rely on any Visual Studio 2012-specific features. Some users have already reported that they successfully got a Linux version running, achieving 1000fps on a desktop machine (my 300fps figure was measured on a laptop). If you get my code running on other platforms and want to share it with others, I'm very happy to add links from this page. Please contact me via email to do this.

2. I run the code but the results are empty. Why?

Please check whether you have downloaded the PASCAL VOC data. The original VOC annotations cannot be read directly by OpenCV. I have shared a version that is compatible with OpenCV (http://mmcheng.net/code-data/).

3. What's the password for unzipping your source code?

Please read the notice on the download page. You can get it automatically by supplying your name and institution.

4. My testing speed differs from 300fps. Why?

If you are using 64-bit Windows and Visual Studio 2012, the default settings should be fine. Otherwise, please make sure to enable OpenMP and native SSE instructions. In any case, speed should be measured in release mode rather than debug mode. Don't uncomment the commands for showing progress, e.g. printf(“Processing image: %s”, imageName). When the algorithm runs at hundreds of fps, printf, image reading (an SSD helps in this case), etc. can become the speed bottleneck. Running speed also varies with hardware. To eliminate the influence of hard-disk reading speed, I preload all test images before starting the timer and running prediction. Only 64-bit machines support this much memory for a single program. If your RAM is small, such preloading might cause hard-disk paging, which also slows things down. Reported speeds typically range from 100fps (typical laptop) to 1000fps (powerful desktop).
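The measurement protocol described above can be sketched as a small harness. First preload every image into memory, and only then time the processing loop. `Image` and the `process` callback are hypothetical stand-ins for your own decoded image type and proposal routine. The point is simply that no disk I/O or console output happens between starting and stopping the clock.

```cpp
#include <chrono>
#include <cstdint>
#include <functional>
#include <vector>

using Image = std::vector<std::uint8_t>;  // hypothetical stand-in for a decoded image

// Returns throughput in frames per second over images that were loaded before timing starts.
double measureFps(const std::vector<Image>& preloaded,
                  const std::function<void(const Image&)>& process) {
    if (preloaded.empty()) return 0.0;
    const auto start = std::chrono::steady_clock::now();
    for (const Image& img : preloaded) process(img);  // no printf or disk reads inside the timed loop
    const auto stop = std::chrono::steady_clock::now();
    const double seconds = std::chrono::duration<double>(stop - start).count();
    return seconds > 0.0 ? static_cast<double>(preloaded.size()) / seconds : 0.0;
}
```

`steady_clock` is used because, unlike the system clock, it is not affected by wall-clock adjustments during the run.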

5. After increasing the number of proposals to 5,000, I got only a 96.5% detection rate. Why?

Please read through the paper before using the source code. As explained in the abstract, 99.5% DR is reached only by increasing both the number of proposals and the number of color spaces used for computing BING features. Using three different color spaces can be enabled by calling “getObjBndBoxesForTests” instead of “getObjBndBoxesForTestsFast”, which is the default in the demo code.

6. I got compilation or linking errors like: can't find “opencv2/opencv.hpp”, or error C1083: can't find “atlstr.h”.

These are all standard libraries. Please copy the error message and search Google for answers.

http://www.cvchina.info/2014/02/25/14cvprbing/

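Lots of highlights:

- Achieves a state-of-the-art detection rate on the PASCAL VOC dataset.

- 1000 times faster than the methods from PAMI 2012, PAMI 2013, and IJCV 2013, with a test speed of 300 images per second!

- Computing the objectness score of a window takes only 2 float multiplications, 1 float addition, and about a dozen bitwise operations.

- No complicated computation; the algorithm code is under 100 lines.

- Training on the entire PASCAL VOC 2007 dataset takes not weeks, not days, but just 20 seconds!

- Promises to speed up almost all object detection methods for free. Last year's CVPR best paper and the ICCV 2013 oral paper with the best results on VOC both complained about the speed bottleneck of generic object proposals, and that bottleneck is now completely solved. Within a year at most, all kinds of real-time, high-performance multi-object detection methods should rapidly emerge.

- I have been at Oxford for more than a year, and this is the first time I have heard Prof. Andrew Zisserman (the only professor in the world to have won the Marr Prize three times, with more than 60,000 citations) give such a positive assessment of a paper in our group's reading group (http://www.robots.ox.ac.uk/~vgg/rg/). On the day I presented it at the reading group, he assigned his own students to start follow-up work.

- Since this work uses only the simplest feature (absolute gradient values) and the simplest learning method (linear SVM), it should be very easy to extend and improve.

- When I released my salient region detection code in 2011, I expected a fair amount of follow-up work (my own paper alone has since been cited more than 400 times), but that topic was not as exciting as this one! I believe many fields will change profoundly in the coming period. To help drive this change, the algorithm was shared an hour ago: C++ code at http://mmcheng.net/bing/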

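When people recognize things in a photo, nobody carefully examines windows one by one in a sliding-window fashion. Objectness is therefore closely related to saliency, and I feel that objectness should be the right mechanism for detection.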

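Regarding salient object detection: if only one saliency map is generated per image, there is not much room left for progress in computing saliency maps from single images. My 2011 PAMI submission reached an F-measure of about 93% on MSRA1000, and since then I have not seen accuracy higher than the segmentation results (F = 90%) in my CVPR 2011 paper. There should still be a lot to do in using multiple images, especially images randomly downloaded from the Internet, to extract useful salient objects and automatically remove errors produced by single-image analysis. For details, see: http://mmcheng.net/gsal/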

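Regarding objectness, this CVPR 2014 paper is at best just a beginning. It uses only the weakest feature (gradients: the absolute difference between the colors of neighboring pixels) and the weakest learning method (linear SVM) to capture my observation about this problem. Much work remains in further analyzing the preliminary results to reduce 1,000 proposals to a few hundred, or even a few dozen, while maintaining high recall. Going from a thousand down to a few dozen will be a long process, probably requiring the sustained efforts of hundreds of papers.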

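If the number of proposals can be reduced to single digits in the next few years, it will profoundly affect the field of image editing. We might even be able to issue voice commands directly, without any classifier, e.g. “make this object bigger…”. If you are interested in semantic parsing for voice control and image editing, see: http://mmcheng.net/imagespirit/. The code for that paper will also be released once it is accepted.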