PLDA Text Clustering

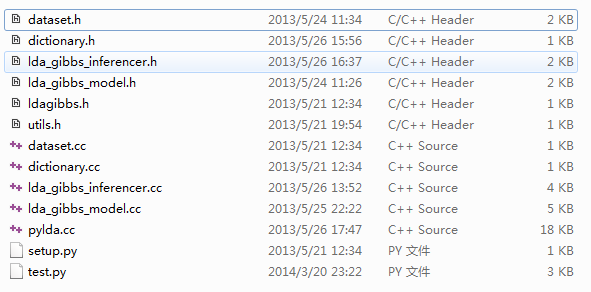

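Xiaoyang, a true expert, wrote a PLDA implementation for text clustering, and I'd like to share it here. It consists mainly of the files below, pasted in the order shown in the image above: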

// dataset.h
#ifndef DATASET_H
#define DATASET_H

#include <vector>
#include <string>

#include "ldagibbs.h"
#include "dictionary.h"

// The training corpus: every document is a title plus a sequence of term ids.
class DataSet {
public:
    DataSet();
    ~DataSet();

    // Adds an empty document and returns its id.
    DocumentId AppendDocument(const char *title) {
        document_titles_.push_back(std::string(title));
        document_term_ids_.push_back(std::vector<TermId>());
        return document_term_ids_.size() - 1;
    }

    // Appends a term to a document, registering it in the dictionary if it is new.
    void AppendTerm(DocumentId document_id, const char *term) {
        TermId term_id = dictionary_->GetTermId(term);
        document_term_ids_[document_id].push_back(term_id);
    }

    TermId GetTermId(DocumentId document_id, size_t term_index) const {
        return document_term_ids_[document_id][term_index];
    }

    Dictionary *dictionary() const { return dictionary_; }

    DocumentId DocumentNumber() const { return document_term_ids_.size(); }

    TermId TermNumber() const { return dictionary_->term_count(); }

    size_t DocumentLength(DocumentId document_id) const {
        return document_term_ids_[document_id].size();
    }

    const char *DocumentTitle(DocumentId document_id) const {
        return document_titles_[document_id].c_str();
    }

private:
    // dictionary_ is owned through a raw pointer, so copying is disabled.
    DataSet(const DataSet &);
    DataSet &operator=(const DataSet &);

    std::vector<std::vector<TermId> > document_term_ids_;
    std::vector<std::string> document_titles_;
    Dictionary *dictionary_;
};

#endif

// dictionary.h
#ifndef DICT_H
#define DICT_H

#include <string>
#include <map>

#include "ldagibbs.h"

// Two-way mapping between term strings and dense integer ids (0, 1, 2, ...).
class Dictionary {
public:
    static const TermId kNotExist = -1;

    Dictionary(): term_count_(0) {}

    // Returns the id of term_str, assigning the next free id if the term is new.
    TermId GetTermId(const char *term_str);

    // Returns the id of term_str without inserting it; unseen terms give kNotExist.
    TermId FindIdByTerm(const char *term_str) const {
        TermId term_id;
        std::map<std::string, TermId>::const_iterator it =
            map_term_id_.find(std::string(term_str));
        if (it != map_term_id_.end()) {
            term_id = it->second;
        } else {
            term_id = kNotExist;
        }
        return term_id;
    }

    const char *GetTermStr(TermId term_id) const {
        return map_id_term_.at(term_id).c_str();
    }

    TermId term_count() const { return term_count_; }

private:
    std::map<std::string, TermId> map_term_id_;
    std::map<TermId, std::string> map_id_term_;
    TermId term_count_;
};

#endif

// lda_gibbs_inferencer.h
#ifndef LDA_GIBBS_INFERENCER
#define LDA_GIBBS_INFERENCER

#include <vector>

#include "ldagibbs.h"
#include "dictionary.h"
#include "utils.h"

// Infers the topic distribution of an unseen document while the topic-term
// matrix phi stays fixed; phi is filled in entry by entry with UpdatePhi().
class LdaGibbsInferencer {
public:
    LdaGibbsInferencer(TopicId topic_number, Dictionary *dictionary, double alpha, double beta);

    ~LdaGibbsInferencer() {
        FreeMatrix(phi_, static_cast<size_t>(topic_number_));
    }

    void UpdatePhi(TopicId topic_id, TermId term_id, double value);

    // Runs `iteration` sampling sweeps over document_terms and writes
    // topic_number() probabilities into topic_distribution.
    void Inference(int iteration, size_t document_length, const TermId *document_terms,
                   double *topic_distribution);

    TopicId topic_number() const { return topic_number_; }

private:
    class Instance;

    void InitializeInstance(Instance *instance);
    void GibbsSamplingOne(Instance *instance, size_t term_index);
    void TermTopicDistribution(Instance *instance, size_t term_index, double *distribution);
    TopicId SamplingTopicFromDistribution(double *distribution) const;

    // Terms that are not in the dictionary get the uniform probability 1 / V.
    double PhiGet(TopicId topic_id, TermId term_id) const {
        double phi_value;
        if (term_id == Dictionary::kNotExist) {
            phi_value = 1.0 / static_cast<double>(term_number_);
        } else {
            phi_value = phi_[topic_id][term_id];
        }
        return phi_value;
    }

    // The destructor frees phi_, so copying is disabled.
    LdaGibbsInferencer(const LdaGibbsInferencer &);
    LdaGibbsInferencer &operator=(const LdaGibbsInferencer &);

    Dictionary *dictionary_;
    TopicId topic_number_;
    TermId term_number_;
    double alpha_;
    double beta_;
    double **phi_;
};

// Per-document sampling state used during a single Inference() call.
class LdaGibbsInferencer::Instance {
public:
    size_t document_length;
    const TermId *document_content;
    int *document_topic_count;       // words of the document assigned to each topic
    TopicId *document_term_topic;    // topic currently assigned to each word
    double *temp_term_distribution;  // scratch buffer of length topic_number

    Instance(size_t document_length, const TermId *document_content, TopicId topic_number):
        document_length(document_length),
        document_content(document_content),
        document_topic_count(AllocArray<int>(topic_number)),
        document_term_topic(AllocArray<TopicId>(document_length)),
        temp_term_distribution(new double[topic_number]) {}

    ~Instance() {
        FreeArray(document_topic_count);
        document_topic_count = NULL;
        FreeArray(document_term_topic);
        document_term_topic = NULL;
        delete[] temp_term_distribution;
        temp_term_distribution = NULL;
    }
};

#endif

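// lda_gibbs_model.h
#ifndef LDAGM_H
#define LDAGM_H

#include "dataset.h"

// LDA trained with collapsed Gibbs sampling over a DataSet.
class LdaGibbsModel {
public:
    LdaGibbsModel(DataSet *data_set, TopicId topic_number, double alpha, double beta);
    ~LdaGibbsModel();

    // Assigns a random topic to every word; call once before GibbsSampling().
    void InitializeModel();

    // Runs `iteration` full sweeps over every word of every document.
    void GibbsSampling(int iteration);

    // phi[k][w] = (n_kw + beta) / (n_k + V * beta)
    double CalculatePhi(TopicId topic_id, TermId term_id) const {
        return (topic_term_count_[topic_id][term_id] + beta_) /
               (topic_term_sum_[topic_id] + beta_ * term_number_);
    }

    // theta[d][k] = (n_dk + alpha) / (n_d + K * alpha)
    double CalculateTheta(DocumentId document_id, TopicId topic_id) const {
        return (document_topic_count_[document_id][topic_id] + alpha_) /
               (document_topic_sum_[document_id] + alpha_ * topic_number_);
    }

    TopicId topic_number() const { return topic_number_; }
    TermId term_number() const { return term_number_; }
    DocumentId document_number() const { return document_number_; }

private:
    void TopicDistribution(DocumentId document_id, size_t term_index, double *distribution) const;
    TopicId SamplingTopicFromDistribution(double *distribution) const;
    void GibbsSamplingOne(DocumentId document_id, size_t term_index);

    // The destructor frees the count tables, so copying is disabled.
    LdaGibbsModel(const LdaGibbsModel &);
    LdaGibbsModel &operator=(const LdaGibbsModel &);

    int *document_topic_sum_;        // n_d: number of words in document d
    int **document_topic_count_;     // n_dk: words of document d assigned to topic k
    int *topic_term_sum_;            // n_k: words assigned to topic k
    int **topic_term_count_;         // n_kw: occurrences of term w assigned to topic k
    TopicId **document_term_topic_;  // topic of the i-th word of document d
    TermId term_number_;
    DocumentId document_number_;
    TopicId topic_number_;
    double alpha_;
    double beta_;
    DataSet *data_set_;
};

#endif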
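For reference, `CalculatePhi` and `CalculateTheta` above, together with the per-word conditional that `TopicDistribution` in lda_gibbs_model.cc evaluates, correspond to the standard collapsed Gibbs sampling formulas for LDA. Here K is `topic_number_`, V is `term_number_`, n_{k,w} is `topic_term_count_`, n_k is `topic_term_sum_`, n_{d,k} is `document_topic_count_`, n_d is `document_topic_sum_`, and the superscript ¬i means the counts exclude the word being resampled (the `exclude` variable in the code):

$$
\phi_{k,w} = \frac{n_{k,w} + \beta}{n_k + V\beta},
\qquad
\theta_{d,k} = \frac{n_{d,k} + \alpha}{n_d + K\alpha}
$$

$$
p(z_i = k \mid \mathbf{z}_{\neg i}, \mathbf{w})
\propto
\frac{n_{k,w_i}^{\neg i} + \beta}{n_k^{\neg i} + V\beta}
\left(n_{d,k}^{\neg i} + \alpha\right)
$$

The normalizer n_d^{¬i} + Kα of the document factor is the same for every k, so the code leaves it out, and `SamplingTopicFromDistribution` only needs these unnormalized weights.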

// ldagibbs.h
#ifndef LDAGIBBS_H
#define LDAGIBBS_H

typedef short TopicId;
typedef long TermId;
typedef long DocumentId;

#endif

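// utils.h
#ifndef UTILS_H
#define UTILS_H

#include <stddef.h>
#include <string.h>

// Pseudo-random double in [0, 1] built from two rand() calls;
// defined in lda_gibbs_model.cc and also used by the inferencer.
double Random0to1();

// Allocates a rows x columns 2-D array of type T filled with zeros.
template<class T>
T **AllocMatrix(size_t rows, size_t columns) {
    T **matrix = new T *[rows];
    for (size_t row = 0; row < rows; ++row) {
        matrix[row] = new T[columns];
        memset(matrix[row], 0, columns * sizeof(T));
    }
    return matrix;
}

// Deletes a 2-D array allocated by AllocMatrix.
template<class T>
void FreeMatrix(T **matrix, size_t rows) {
    for (size_t row = 0; row < rows; ++row) {
        delete[] matrix[row];
    }
    delete[] matrix;
}

// Allocates a 1-D array of type T filled with zeros.
template<class T>
T *AllocArray(size_t length) {
    T *array = new T[length];
    memset(array, 0, length * sizeof(T));
    return array;
}

// Deletes an array allocated by AllocArray.
template<class T>
void FreeArray(T *array) {
    delete[] array;
}

#endif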

// dataset.cc
#include "dataset.h"

DataSet::DataSet(): dictionary_(new Dictionary()) {}

DataSet::~DataSet() {
    delete dictionary_;
}

// dictionary.cc
#include <string>
#include <map>

#include "ldagibbs.h"
#include "dictionary.h"

TermId Dictionary::GetTermId(const char *term_str) {
    std::string term_string(term_str);
    TermId term_id;
    std::map<std::string, TermId>::const_iterator it = map_term_id_.find(term_string);
    if (it != map_term_id_.end()) {
        term_id = it->second;
    } else {
        // New term: take the next free id and record both directions.
        term_id = term_count_;
        term_count_++;
        map_term_id_[term_string] = term_id;
        map_id_term_[term_id] = term_string;
    }
    return term_id;
}
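The bookkeeping in `GetTermId` and `FindIdByTerm` can be mirrored in a few lines of Python, which is handy for checking term ids from the Python side. This is a sketch, not part of the original code: the class and method names are hypothetical, and because ids are assigned densely from 0, a list stands in for `std::map<TermId, std::string>` in the reverse direction.

```python
class Dictionary:
    """Hypothetical Python mirror of the C++ Dictionary: dense ids in first-seen order."""

    NOT_EXIST = -1  # plays the role of Dictionary::kNotExist

    def __init__(self):
        self.term_to_id = {}
        self.id_to_term = []  # ids are dense, so a list can serve as the reverse map

    def get_term_id(self, term):
        """Like GetTermId: return the term's id, assigning the next free id if it is new."""
        term_id = self.term_to_id.get(term)
        if term_id is None:
            term_id = len(self.id_to_term)
            self.term_to_id[term] = term_id
            self.id_to_term.append(term)
        return term_id

    def find_id_by_term(self, term):
        """Like FindIdByTerm: look the term up without inserting it."""
        return self.term_to_id.get(term, self.NOT_EXIST)

    def get_term_str(self, term_id):
        return self.id_to_term[term_id]


d = Dictionary()
doc = [d.get_term_id(t) for t in ["apple", "banana", "apple", "cherry"]]
print(doc)  # [0, 1, 0, 2]
```

The two lookups serve different phases: training uses the inserting lookup (`GetTermId`), while `LdaInferencer_inference` uses the non-inserting one (`FindIdByTerm`), so unseen words come back as `kNotExist` and fall through to the uniform `1 / V` in `PhiGet`.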

// lda_gibbs_inferencer.cc
#include <stdlib.h>
#include <time.h>

#include "ldagibbs.h"
#include "lda_gibbs_inferencer.h"
#include "utils.h"

LdaGibbsInferencer::LdaGibbsInferencer(TopicId topic_number, Dictionary *dictionary,
                                       double alpha, double beta):
    dictionary_(dictionary),
    topic_number_(topic_number),
    term_number_(dictionary->term_count()),
    alpha_(alpha),
    beta_(beta),
    phi_(AllocMatrix<double>(static_cast<size_t>(topic_number),
                             static_cast<size_t>(term_number_))) {}

void LdaGibbsInferencer::UpdatePhi(TopicId topic_id, TermId term_id, double value) {
    phi_[topic_id][term_id] = value;
}

void LdaGibbsInferencer::Inference(int iteration,
                                   size_t document_length,
                                   const TermId *document_terms,
                                   double *topic_distribution) {
    Instance *instance = new Instance(document_length, document_terms, topic_number_);
    InitializeInstance(instance);
    for (int i = 0; i < iteration; ++i) {
        for (size_t term_index = 0; term_index < document_length; ++term_index) {
            GibbsSamplingOne(instance, term_index);
        }
    }
    // theta_k = (n_k + alpha) / (N + K * alpha) for this document
    for (TopicId topic_id = 0; topic_id < topic_number_; ++topic_id) {
        topic_distribution[topic_id] =
            (instance->document_topic_count[topic_id] + alpha_) /
            (instance->document_length + alpha_ * topic_number_);
    }
    delete instance;
}

// Gives every word a uniformly random topic. Note that rand() is reseeded
// with the current time on every call.
void LdaGibbsInferencer::InitializeInstance(Instance *instance) {
    srand(static_cast<unsigned int>(time(NULL)));
    for (size_t term_index = 0; term_index < instance->document_length; ++term_index) {
        TopicId topic_id = rand() % topic_number_;
        instance->document_topic_count[topic_id]++;
        instance->document_term_topic[term_index] = topic_id;
    }
}

void LdaGibbsInferencer::GibbsSamplingOne(Instance *instance, size_t term_index) {
    TopicId topic_id = instance->document_term_topic[term_index];
    TermTopicDistribution(instance, term_index, instance->temp_term_distribution);
    TopicId new_topic_id = SamplingTopicFromDistribution(instance->temp_term_distribution);
    if (new_topic_id != topic_id) {
        instance->document_term_topic[term_index] = new_topic_id;
        instance->document_topic_count[topic_id]--;
        instance->document_topic_count[new_topic_id]++;
    }
}

// Unnormalized p(z_i = k | rest) = (n_dk excluding word i + alpha) * phi[k][w_i];
// phi is fixed, so only the document's own counts change.
void LdaGibbsInferencer::TermTopicDistribution(Instance *instance, size_t term_index,
                                               double *distribution) {
    TopicId term_topic_id = instance->document_term_topic[term_index];
    TermId term_id = instance->document_content[term_index];
    for (TopicId estimate_topic_id = 0; estimate_topic_id < topic_number_; ++estimate_topic_id) {
        // Remove the word's current assignment from its own topic's count.
        int exclude = (estimate_topic_id == term_topic_id) ? 1 : 0;
        distribution[estimate_topic_id] =
            (instance->document_topic_count[estimate_topic_id] + alpha_ - exclude) *
            PhiGet(estimate_topic_id, term_id);
    }
}

// Draws k with probability distribution[k] / sum(distribution).
TopicId LdaGibbsInferencer::SamplingTopicFromDistribution(double *distribution) const {
    double sum = 0;
    for (TopicId topic_id = 0; topic_id < topic_number_; ++topic_id) {
        sum += distribution[topic_id];
    }
    double random_value = Random0to1() * sum;
    sum = 0;
    TopicId topic_id = 0;
    for (topic_id = 0; topic_id < topic_number_; ++topic_id) {
        sum += distribution[topic_id];
        if (sum > random_value) {
            break;
        }
    }
    // Guard against round-off leaving random_value at or above the final sum.
    if (topic_id == topic_number_) {
        topic_id = topic_number_ - 1;
    }
    return topic_id;
}
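The fold-in procedure in `LdaGibbsInferencer` can be sketched in Python as follows, assuming `phi` is a K x V nested list copied from a trained model (in the C++ code it is filled with `UpdatePhi`). The function name `fold_in` is hypothetical, and Python's `random.choices` stands in for `SamplingTopicFromDistribution`. Instead of an `exclude` flag, the sketch takes the word out of the counts before computing the weights, which yields the same conditional.

```python
import random


def fold_in(doc, phi, alpha, iterations, rng=random):
    """Estimate theta for an unseen document while phi stays fixed.

    doc: list of term ids, with -1 for terms missing from the dictionary
    phi: K x V nested list, phi[k][w] = p(term w | topic k)
    """
    K, V = len(phi), len(phi[0])

    def phi_get(k, w):
        # Same fallback as PhiGet: an unknown term is equally likely under every topic.
        return 1.0 / V if w == -1 else phi[k][w]

    # Random initial topics, as in InitializeInstance.
    z = [rng.randrange(K) for _ in doc]
    n_dk = [0] * K
    for k in z:
        n_dk[k] += 1

    for _ in range(iterations):
        for i, w in enumerate(doc):
            n_dk[z[i]] -= 1  # exclude word i from the document's counts
            weights = [(n_dk[k] + alpha) * phi_get(k, w) for k in range(K)]
            z[i] = rng.choices(range(K), weights=weights)[0]
            n_dk[z[i]] += 1

    # theta_k = (n_dk + alpha) / (N + K * alpha), as at the end of Inference()
    return [(n_dk[k] + alpha) / (len(doc) + K * alpha) for k in range(K)]


phi = [[0.7, 0.2, 0.1],
       [0.1, 0.2, 0.7]]
theta = fold_in([0, 0, 1, -1], phi, alpha=0.1, iterations=50, rng=random.Random(0))
print(theta)
```

Unlike `InitializeInstance`, which reseeds `rand()` with `time(NULL)` on every call, the sketch takes the generator as a parameter, so runs are reproducible and two inferences started within the same second do not share a random sequence.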

// lda_gibbs_model.cc
#include <time.h>
#include <string.h>
#include <stdlib.h>
#include <stdio.h>

#include "lda_gibbs_model.h"
#include "ldagibbs.h"
#include "utils.h"

LdaGibbsModel::LdaGibbsModel(DataSet *data_set,
                             TopicId topic_number,
                             double alpha,
                             double beta) {
    data_set_ = data_set;
    document_number_ = data_set_->DocumentNumber();
    term_number_ = data_set_->TermNumber();
    topic_number_ = topic_number;
    alpha_ = alpha;
    beta_ = beta;

    document_topic_sum_ = AllocArray<int>(static_cast<size_t>(document_number_));
    topic_term_sum_ = AllocArray<int>(static_cast<size_t>(topic_number_));
    topic_term_count_ = AllocMatrix<int>(static_cast<size_t>(topic_number_),
                                         static_cast<size_t>(term_number_));
    document_topic_count_ = AllocMatrix<int>(static_cast<size_t>(document_number_),
                                             static_cast<size_t>(topic_number_));
    // One topic slot per word occurrence, per document.
    document_term_topic_ = new TopicId *[document_number_];
    for (DocumentId document_id = 0; document_id < document_number_; ++document_id) {
        size_t document_length = data_set_->DocumentLength(document_id);
        document_term_topic_[document_id] = AllocArray<TopicId>(document_length);
    }
}

LdaGibbsModel::~LdaGibbsModel() {
    FreeArray(document_topic_sum_);
    FreeArray(topic_term_sum_);
    FreeMatrix(document_topic_count_, static_cast<size_t>(document_number_));
    FreeMatrix(topic_term_count_, static_cast<size_t>(topic_number_));
    // Frees every per-document row and the outer pointer array.
    FreeMatrix(document_term_topic_, static_cast<size_t>(document_number_));
}

// Gives every word a uniformly random topic and fills in the count tables.
void LdaGibbsModel::InitializeModel() {
    srand(static_cast<unsigned int>(time(NULL)));
    for (DocumentId document_id = 0; document_id < document_number_; ++document_id) {
        size_t document_length = data_set_->DocumentLength(document_id);
        for (size_t term_index = 0; term_index < document_length; ++term_index) {
            TopicId topic_id = rand() % topic_number_;
            TermId term_id = data_set_->GetTermId(document_id, term_index);
            document_topic_count_[document_id][topic_id]++;
            document_topic_sum_[document_id]++;
            topic_term_count_[topic_id][term_id]++;
            topic_term_sum_[topic_id]++;
            document_term_topic_[document_id][term_index] = topic_id;
        }
    }
}

// Unnormalized p(z_i = k | rest) for the term_index-th word of document_id;
// `exclude` removes the word's current assignment from the counts.
void LdaGibbsModel::TopicDistribution(DocumentId document_id,
                                      size_t term_index,
                                      double *distribution) const {
    TopicId term_topic_id = document_term_topic_[document_id][term_index];
    TermId term_id = data_set_->GetTermId(document_id, term_index);
    for (TopicId estimate_topic_id = 0; estimate_topic_id < topic_number_; ++estimate_topic_id) {
        int exclude = (estimate_topic_id == term_topic_id) ? 1 : 0;
        distribution[estimate_topic_id] =
            (topic_term_count_[estimate_topic_id][term_id] + beta_ - exclude) *
            (document_topic_count_[document_id][estimate_topic_id] + alpha_ - exclude) /
            (topic_term_sum_[estimate_topic_id] - exclude + term_number_ * beta_);
    }
}

// Combines two rand() draws, read as two digits in base RAND_MAX + 1, into a
// double in [0, 1] with finer resolution than a single rand() call.
double Random0to1() {
    const double kRandomMaxPlus1 = static_cast<double>(RAND_MAX) + 1;
    const double kBigRandomMaxPlus1 = kRandomMaxPlus1 * kRandomMaxPlus1;
    double big_rand = rand() * kRandomMaxPlus1 + rand();
    return big_rand / kBigRandomMaxPlus1;
}

// Draws k with probability distribution[k] / sum(distribution).
TopicId LdaGibbsModel::SamplingTopicFromDistribution(double *distribution) const {
    double sum = 0;
    for (TopicId topic_id = 0; topic_id < topic_number_; ++topic_id) {
        sum += distribution[topic_id];
    }
    double random_value = Random0to1() * sum;
    sum = 0;
    TopicId topic_id = 0;
    for (topic_id = 0; topic_id < topic_number_; ++topic_id) {
        sum += distribution[topic_id];
        if (sum > random_value) {
            break;
        }
    }
    // Guard against round-off leaving random_value at or above the final sum.
    if (topic_id == topic_number_) {
        topic_id = topic_number_ - 1;
    }
    return topic_id;
}

// Resamples the topic of one word and moves it between the count tables.
void LdaGibbsModel::GibbsSamplingOne(DocumentId document_id, size_t term_index) {
    TermId term_id = data_set_->GetTermId(document_id, term_index);
    TopicId topic_id = document_term_topic_[document_id][term_index];
    double *distribution = new double[topic_number_];
    TopicDistribution(document_id, term_index, distribution);
    TopicId new_topic_id = SamplingTopicFromDistribution(distribution);
    delete[] distribution;
    if (new_topic_id != topic_id) {
        document_term_topic_[document_id][term_index] = new_topic_id;
        document_topic_count_[document_id][topic_id]--;
        topic_term_count_[topic_id][term_id]--;
        topic_term_sum_[topic_id]--;
        document_topic_count_[document_id][new_topic_id]++;
        topic_term_count_[new_topic_id][term_id]++;
        topic_term_sum_[new_topic_id]++;
    }
}

void LdaGibbsModel::GibbsSampling(int iteration) {
    for (int i = 0; i < iteration; ++i) {
        for (DocumentId document_id = 0; document_id < document_number_; ++document_id) {
            size_t document_length = data_set_->DocumentLength(document_id);
            for (size_t term_index = 0; term_index < document_length; ++term_index) {
                GibbsSamplingOne(document_id, term_index);
            }
        }
    }
}
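What `InitializeModel`, `TopicDistribution`, `SamplingTopicFromDistribution` and `GibbsSamplingOne` do together can be condensed into a short Python sketch, assuming a tiny in-memory corpus given as lists of term ids. The function names are hypothetical and `rng.random()` replaces `Random0to1()`; `n_dk`, `n_kw` and `n_k` mirror `document_topic_count_`, `topic_term_count_` and `topic_term_sum_`.

```python
import random


def sample_index(weights, u):
    """Inverse-CDF draw from unnormalized weights (cf. SamplingTopicFromDistribution).

    u is a uniform number in [0, 1]; if round-off leaves the running sum at or
    below the target, the last index is returned instead of running off the end.
    """
    target = u * sum(weights)
    running = 0.0
    for k, weight in enumerate(weights):
        running += weight
        if running > target:
            return k
    return len(weights) - 1


def init_counts(docs, K, V, rng):
    """Random initial topics plus count tables (cf. InitializeModel)."""
    z = [[rng.randrange(K) for _ in doc] for doc in docs]
    n_dk = [[0] * K for _ in docs]
    n_kw = [[0] * V for _ in range(K)]
    n_k = [0] * K
    for d, doc in enumerate(docs):
        for i, w in enumerate(doc):
            k = z[d][i]
            n_dk[d][k] += 1
            n_kw[k][w] += 1
            n_k[k] += 1
    return z, n_dk, n_kw, n_k


def gibbs_sweep(docs, z, n_dk, n_kw, n_k, alpha, beta, V, rng):
    """One collapsed Gibbs pass over every word (cf. GibbsSampling(1))."""
    K = len(n_k)
    for d, doc in enumerate(docs):
        for i, w in enumerate(doc):
            old = z[d][i]
            # Take word i out of the counts (the role of `exclude`).
            n_dk[d][old] -= 1
            n_kw[old][w] -= 1
            n_k[old] -= 1
            weights = [(n_kw[k][w] + beta) * (n_dk[d][k] + alpha) / (n_k[k] + V * beta)
                       for k in range(K)]
            new = sample_index(weights, rng.random())
            # Put it back under the newly sampled topic.
            z[d][i] = new
            n_dk[d][new] += 1
            n_kw[new][w] += 1
            n_k[new] += 1


rng = random.Random(42)
docs = [[0, 1, 0, 2], [3, 4, 3], [0, 2, 1]]
K, V = 2, 5
z, n_dk, n_kw, n_k = init_counts(docs, K, V, rng)
for _ in range(20):
    gibbs_sweep(docs, z, n_dk, n_kw, n_k, alpha=0.5, beta=0.01, V=V, rng=rng)
```

Because every move decrements one topic and increments another, the totals never change; checking that `sum(n_k)` still equals the number of words is a cheap sanity test when porting or modifying the sampler.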

// pylda.cc: Python 3 bindings for DataSet and LdaGibbsModel (module "lda")
#include <Python.h>

#include <vector>
#include <stdio.h>

#include "dataset.h"
#include "lda_gibbs_model.h"
#include "lda_gibbs_inferencer.h"
#include "utils.h"

// ----------------------------------- DataSet ---------------------------------------
typedef struct {
    PyObject_HEAD
    DataSet *data_set_;
} DataSetObject;

static int DataSet_init(DataSetObject *self, PyObject *args, PyObject *kwds)
{
    self->data_set_ = new DataSet();
    return 0;
}

static PyObject *DataSet_new(PyTypeObject *type, PyObject *args, PyObject *kwds)
{
    // tp_alloc zero-fills the object, so data_set_ starts out as NULL.
    return type->tp_alloc(type, 0);
}

static void DataSet_dealloc(DataSetObject *self)
{
    delete self->data_set_;
    self->data_set_ = NULL;
    Py_TYPE(self)->tp_free((PyObject *)self);
}

// Arguments: a document title and a list of term strings.
static PyObject *DataSet_append_document(DataSetObject *self, PyObject *args)
{
    PyObject *term_list_object;
    const char *document_title;
    if (!PyArg_ParseTuple(args, "sO", &document_title, &term_list_object))
        return NULL;
    if (!PyList_Check(term_list_object)) {
        PyErr_SetString(PyExc_TypeError, "appendDocument() expects a list of str");
        return NULL;
    }
    DocumentId document_id = self->data_set_->AppendDocument(document_title);
    Py_ssize_t document_size = PyList_Size(term_list_object);
    for (Py_ssize_t i = 0; i < document_size; ++i) {
        PyObject *term_unicode = PyList_GetItem(term_list_object, i);  // borrowed reference
        if (term_unicode == NULL)
            return NULL;
        PyObject *term_utf8_bytes = PyUnicode_AsUTF8String(term_unicode);
        if (term_utf8_bytes == NULL)
            return NULL;
        const char *term = PyBytes_AsString(term_utf8_bytes);
        if (term == NULL) {
            Py_DECREF(term_utf8_bytes);
            return NULL;
        }
        self->data_set_->AppendTerm(document_id, term);
        Py_DECREF(term_utf8_bytes);
    }
    Py_RETURN_NONE;
}

static PyObject *DataSet_document_number(DataSetObject *self, PyObject *args)
{
    if (!PyArg_ParseTuple(args, ""))
        return NULL;
    DocumentId document_number = self->data_set_->DocumentNumber();
    return PyLong_FromLong(static_cast<long>(document_number));
}

static PyObject *DataSet_term_number(DataSetObject *self, PyObject *args)
{
    if (!PyArg_ParseTuple(args, ""))
        return NULL;
    TermId term_number = self->data_set_->TermNumber();
    return PyLong_FromLong(static_cast<long>(term_number));
}

static PyObject *DataSet_document_length(DataSetObject *self, PyObject *args)
{
    long document_id_long = 0;
    if (!PyArg_ParseTuple(args, "l", &document_id_long))
        return NULL;
    if (document_id_long < 0 || document_id_long >= self->data_set_->DocumentNumber()) {
        PyErr_SetString(PyExc_IndexError, "document id out of range");
        return NULL;
    }
    size_t document_length = self->data_set_->DocumentLength(
        static_cast<DocumentId>(document_id_long));
    return PyLong_FromLong(static_cast<long>(document_length));
}

static PyObject *DataSet_get_term_by_id(DataSetObject *self, PyObject *args)
{
    long term_id_long = 0;
    if (!PyArg_ParseTuple(args, "l", &term_id_long))
        return NULL;
    if (term_id_long < 0 || term_id_long >= self->data_set_->TermNumber()) {
        PyErr_SetString(PyExc_IndexError, "term id out of range");
        return NULL;
    }
    return PyUnicode_FromString(
        self->data_set_->dictionary()->GetTermStr(static_cast<TermId>(term_id_long)));
}

static PyObject *DataSet_get_document_title(DataSetObject *self, PyObject *args)
{
    long document_id_long = 0;
    if (!PyArg_ParseTuple(args, "l", &document_id_long))
        return NULL;
    if (document_id_long < 0 || document_id_long >= self->data_set_->DocumentNumber()) {
        PyErr_SetString(PyExc_IndexError, "document id out of range");
        return NULL;
    }
    return PyUnicode_FromString(
        self->data_set_->DocumentTitle(static_cast<DocumentId>(document_id_long)));
}

static PyMethodDef DataSetType_methods[] = {
    {"appendDocument", (PyCFunction)DataSet_append_document, METH_VARARGS,
     "Append a document (a title and a list of terms) to the data set."},
    {"documentNumber", (PyCFunction)DataSet_document_number, METH_VARARGS,
     "Get the number of documents in the data set."},
    {"documentTitle", (PyCFunction)DataSet_get_document_title, METH_VARARGS,
     "Get the title of a document."},
    {"termNumber", (PyCFunction)DataSet_term_number, METH_VARARGS,
     "Get the number of distinct terms in the data set."},
    {"documentLength", (PyCFunction)DataSet_document_length, METH_VARARGS,
     "Get the length of a document."},
    {"getTermById", (PyCFunction)DataSet_get_term_by_id, METH_VARARGS,
     "Get a term string by its id."},
    {NULL}  /* Sentinel */
};

static PyTypeObject DataSetType = {
    PyVarObject_HEAD_INIT(NULL, 0)
    "lda.DataSet",                /* tp_name */
    sizeof(DataSetObject),        /* tp_basicsize */
    0,                            /* tp_itemsize */
    (destructor)DataSet_dealloc,  /* tp_dealloc */
    0,                            /* tp_print */
    0,                            /* tp_getattr */
    0,                            /* tp_setattr */
    0,                            /* tp_reserved */
    0,                            /* tp_repr */
    0,                            /* tp_as_number */
    0,                            /* tp_as_sequence */
    0,                            /* tp_as_mapping */
    0,                            /* tp_hash */
    0,                            /* tp_call */
    0,                            /* tp_str */
    0,                            /* tp_getattro */
    0,                            /* tp_setattro */
    0,                            /* tp_as_buffer */
    Py_TPFLAGS_DEFAULT,           /* tp_flags */
    "DataSet object",             /* tp_doc */
    0,                            /* tp_traverse */
    0,                            /* tp_clear */
    0,                            /* tp_richcompare */
    0,                            /* tp_weaklistoffset */
    0,                            /* tp_iter */
    0,                            /* tp_iternext */
    DataSetType_methods,          /* tp_methods */
    0,                            /* tp_members */
    0,                            /* tp_getset */
    0,                            /* tp_base */
    0,                            /* tp_dict */
    0,                            /* tp_descr_get */
    0,                            /* tp_descr_set */
    0,                            /* tp_dictoffset */
    (initproc)DataSet_init,       /* tp_init */
    0,                            /* tp_alloc */
    DataSet_new,                  /* tp_new */
};

// ----------------------------------- LdaGibbsModel --------------------------------------
typedef struct {
    PyObject_HEAD
    LdaGibbsModel *lda_model_;
    PyObject *data_set_object_;  // keeps the DataSet alive while the model uses it
} LdaObject;

// Arguments: a DataSet, the topic number, alpha and beta.
static int Lda_init(LdaObject *self, PyObject *args, PyObject *kwds)
{
    PyObject *data_set_object;
    double alpha = 0;
    double beta = 0;
    long topic_number_long = 0;
    if (!PyArg_ParseTuple(args, "Oldd", &data_set_object, &topic_number_long, &alpha, &beta))
        return -1;
    if (!PyObject_TypeCheck(data_set_object, &DataSetType)) {
        PyErr_SetString(PyExc_TypeError, "the first argument must be an lda.DataSet");
        return -1;
    }
    if (topic_number_long <= 0) {
        PyErr_SetString(PyExc_ValueError, "the topic number must be positive");
        return -1;
    }
    Py_INCREF(data_set_object);
    self->data_set_object_ = data_set_object;
    DataSet *data_set = ((DataSetObject *)data_set_object)->data_set_;
    self->lda_model_ = new LdaGibbsModel(data_set,
                                         static_cast<TopicId>(topic_number_long),
                                         alpha,
                                         beta);
    self->lda_model_->InitializeModel();
    return 0;
}

static PyObject *Lda_new(PyTypeObject *type, PyObject *args, PyObject *kwds)
{
    return type->tp_alloc(type, 0);
}

static void Lda_dealloc(LdaObject *self)
{
    delete self->lda_model_;
    Py_XDECREF(self->data_set_object_);  // NULL if __init__ failed
    Py_TYPE(self)->tp_free((PyObject *)self);
}

static PyObject *Lda_gibbs_sampling(LdaObject *self, PyObject *args)
{
    long iteration_long = 0;
    if (!PyArg_ParseTuple(args, "l", &iteration_long))
        return NULL;
    self->lda_model_->GibbsSampling(static_cast<int>(iteration_long));
    Py_RETURN_NONE;
}

static PyObject *Lda_calculate_phi(LdaObject *self, PyObject *args)
{
    long topic_id_long = 0;
    long term_id_long = 0;
    if (!PyArg_ParseTuple(args, "ll", &topic_id_long, &term_id_long))
        return NULL;
    TopicId topic_id = static_cast<TopicId>(topic_id_long);
    TermId term_id = static_cast<TermId>(term_id_long);
    double phi = self->lda_model_->CalculatePhi(topic_id, term_id);
    return PyFloat_FromDouble(phi);
}

static PyObject *Lda_calculate_theta(LdaObject *self, PyObject *args)
{
    long document_id_long = 0;
    long topic_id_long = 0;
    if (!PyArg_ParseTuple(args, "ll", &document_id_long, &topic_id_long))
        return NULL;
    DocumentId document_id = static_cast<DocumentId>(document_id_long);
    TopicId topic_id = static_cast<TopicId>(topic_id_long);
    double theta = self->lda_model_->CalculateTheta(document_id, topic_id);
    return PyFloat_FromDouble(theta);
}

static PyMethodDef LdaType_methods[] = {
    {"gibbsSampling", (PyCFunction)Lda_gibbs_sampling, METH_VARARGS,
     "Start gibbs sampling."},
    {"calculatePhi", (PyCFunction)Lda_calculate_phi, METH_VARARGS,
     "Get the value of phi."},
    {"calculateTheta", (PyCFunction)Lda_calculate_theta, METH_VARARGS,
     "Get the value of theta."},
    {NULL}  /* Sentinel */
};

static PyTypeObject LdaType = {
    PyVarObject_HEAD_INIT(NULL, 0)
    "lda.Lda",                /* tp_name */
    sizeof(LdaObject),        /* tp_basicsize */
    0,                        /* tp_itemsize */
    (destructor)Lda_dealloc,  /* tp_dealloc */
    0,                        /* tp_print */
    0,                        /* tp_getattr */
    0,                        /* tp_setattr */
    0,                        /* tp_reserved */
    0,                        /* tp_repr */
    0,                        /* tp_as_number */
    0,                        /* tp_as_sequence */
    0,                        /* tp_as_mapping */
    0,                        /* tp_hash */
    0,                        /* tp_call */
    0,                        /* tp_str */
    0,                        /* tp_getattro */
    0,                        /* tp_setattro */
    0,                        /* tp_as_buffer */
    Py_TPFLAGS_DEFAULT,       /* tp_flags */
    "Lda object",             /* tp_doc */
    0,                        /* tp_traverse */
    0,                        /* tp_clear */
    0,                        /* tp_richcompare */
    0,                        /* tp_weaklistoffset */
    0,                        /* tp_iter */
    0,                        /* tp_iternext */
    LdaType_methods,          /* tp_methods */
    0,                        /* tp_members */
    0,                        /* tp_getset */
    0,                        /* tp_base */
    0,                        /* tp_dict */
    0,                        /* tp_descr_get */
    0,                        /* tp_descr_set */
    0,                        /* tp_dictoffset */
    (initproc)Lda_init,       /* tp_init */
    0,                        /* tp_alloc */
    Lda_new,                  /* tp_new */
};

// ------------------------------------- LdaGibbsInferencer ---------------------------------
typedef struct {
    PyObject_HEAD
    LdaGibbsInferencer *lda_inferencer_;
    Dictionary *dictionary_;
} LdaInferencerObject;

// Arguments: an iterable of term strings, the topic number, alpha and beta.
// Term ids are assigned in iteration order, so the iterable should list the
// training vocabulary by term id for phi to line up with the trained model.
static int LdaInferencer_init(LdaInferencerObject *self, PyObject *args, PyObject *kwds)
{
    PyObject *dictionary_object = NULL;
    PyObject *iterator = NULL;
    PyObject *item = NULL;
    double alpha = 0;
    double beta = 0;
    long topic_number_long = 0;

    self->dictionary_ = NULL;
    self->lda_inferencer_ = NULL;
    if (!PyArg_ParseTuple(args, "Oldd", &dictionary_object, &topic_number_long, &alpha, &beta))
        return -1;

    self->dictionary_ = new Dictionary();
    iterator = PyObject_GetIter(dictionary_object);
    if (iterator == NULL)
        goto failed_cleanup;
    while ((item = PyIter_Next(iterator)) != NULL) {
        PyObject *term_utf8_bytes = PyUnicode_AsUTF8String(item);
        Py_DECREF(item);
        if (term_utf8_bytes == NULL)
            goto failed_cleanup;
        // Insert the term into the dictionary.
        self->dictionary_->GetTermId(PyBytes_AS_STRING(term_utf8_bytes));
        Py_DECREF(term_utf8_bytes);
    }
    if (PyErr_Occurred())  // PyIter_Next returns NULL on error as well as on exhaustion
        goto failed_cleanup;
    Py_DECREF(iterator);

    self->lda_inferencer_ = new LdaGibbsInferencer(static_cast<TopicId>(topic_number_long),
                                                   self->dictionary_,
                                                   alpha,
                                                   beta);
    return 0;

failed_cleanup:
    Py_XDECREF(iterator);
    delete self->dictionary_;
    self->dictionary_ = NULL;
    return -1;
}

static PyObject *LdaInferencer_new(PyTypeObject *type, PyObject *args, PyObject *kwds)
{
    return type->tp_alloc(type, 0);
}

static void LdaInferencer_dealloc(LdaInferencerObject *self)
{
    // The inferencer only borrows the dictionary, so delete it first.
    delete self->lda_inferencer_;
    delete self->dictionary_;
    Py_TYPE(self)->tp_free((PyObject *)self);
}

// Arguments: a list of term strings and the number of sampling iterations.
// Returns the document's topic distribution as a list of floats.
static PyObject *LdaInferencer_inference(LdaInferencerObject *self, PyObject *args)
{
    long iteration_long = 0;
    PyObject *term_list_object = NULL;
    PyObject *term_utf8_bytes = NULL;
    PyObject *result = NULL;
    TermId *document_content = NULL;
    double *topic_distribution = NULL;
    Py_ssize_t document_length = 0;
    TopicId topic_number = 0;

    if (!PyArg_ParseTuple(args, "Ol", &term_list_object, &iteration_long))
        return NULL;
    if (!PyList_Check(term_list_object)) {
        PyErr_SetString(PyExc_TypeError, "expected a list of str");
        return NULL;
    }
    if (self->lda_inferencer_ == NULL) {
        PyErr_SetString(PyExc_RuntimeError, "inferencer is not initialized");
        return NULL;
    }

    // Map the terms to ids; unseen terms become Dictionary::kNotExist.
    document_length = PyList_Size(term_list_object);
    document_content = new TermId[document_length];
    for (Py_ssize_t term_index = 0; term_index < document_length; ++term_index) {
        PyObject *term_str_object = PyList_GetItem(term_list_object, term_index);  // borrowed
        term_utf8_bytes = PyUnicode_AsUTF8String(term_str_object);
        if (term_utf8_bytes == NULL)
            goto cleanup;
        document_content[term_index] =
            self->dictionary_->FindIdByTerm(PyBytes_AS_STRING(term_utf8_bytes));
        Py_DECREF(term_utf8_bytes);
        term_utf8_bytes = NULL;
    }

    // Run the sampler, then copy the topic distribution into a Python list.
    topic_number = self->lda_inferencer_->topic_number();
    topic_distribution = new double[topic_number];
    self->lda_inferencer_->Inference(static_cast<int>(iteration_long),
                                     static_cast<size_t>(document_length),
                                     document_content,
                                     topic_distribution);
    result = PyList_New(topic_number);
    if (result == NULL)
        goto cleanup;
    for (TopicId topic_id = 0; topic_id < topic_number; ++topic_id) {
        PyObject *value = PyFloat_FromDouble(topic_distribution[topic_id]);
        if (value == NULL) {
            Py_CLEAR(result);
            goto cleanup;
        }
        PyList_SET_ITEM(result, topic_id, value);  // steals the reference to value
    }

cleanup:
    Py_XDECREF(term_utf8_bytes);
    delete[] document_content;
    delete[] topic_distribution;
    return result;
}


// ------------------------------------- Python Module --------------------------------------
static PyModuleDef PyLda_module = {
    PyModuleDef_HEAD_INIT,
    "lda",  /* name of module */
    NULL,   /* module documentation, may be NULL */
    -1,     /* size of per-interpreter state of the module,
               or -1 if the module keeps state in global variables. */
    NULL, NULL, NULL, NULL, NULL
};

PyMODINIT_FUNC
PyInit_lda(void)
{
    PyObject *m;
    if (PyType_Ready(&DataSetType) < 0)
        return NULL;
    if (PyType_Ready(&LdaType) < 0)
        return NULL;
    m = PyModule_Create(&PyLda_module);
    if (m == NULL)
        return NULL;
    Py_INCREF(&DataSetType);
    PyModule_AddObject(m, "DataSet", (PyObject *)&DataSetType);
    Py_INCREF(&LdaType);
    PyModule_AddObject(m, "Lda", (PyObject *)&LdaType);
    return m;
}

# setup.py (distutils was removed in Python 3.12; setuptools provides the same setup/Extension)
from setuptools import setup, Extension

module1 = Extension('lda',
                    sources=['pylda.cc', 'dataset.cc', 'dictionary.cc',
                             'lda_gibbs_model.cc', 'lda_gibbs_inferencer.cc'])

setup(name='PackageName',
      version='1.0',
      description='This is a demo package',
      ext_modules=[module1])

# Training script: fit the model on f_sp.txt and write phi.matrix / theta.matrix
import lda

# Stopwords: one word per line.
with open('stopwords.txt', encoding='utf-8') as fp:
    stopwords = set(line.strip() for line in fp)

print("Loading data ...")
dataset = lda.DataSet()
with open('f_sp.txt', encoding='utf-8') as fp:
    for line in fp:
        # Each line is one document with whitespace-separated terms; drop
        # single-character terms and stopwords.
        terms = [x for x in line.split() if len(x) > 1 and x not in stopwords]
        if not terms:
            continue
        # The first remaining term doubles as the document title.
        dataset.appendDocument(terms[0], terms)

print("Total documents: {0}\nTotal terms: {1}".format(dataset.documentNumber(), dataset.termNumber()))

topic_number = 100
term_number = dataset.termNumber()
document_number = dataset.documentNumber()

# alpha = 50 / K, beta = 0.01
model = lda.Lda(dataset, topic_number, 50 / topic_number, 0.01)

print("Start sampling")
iteration = 0
for i in range(10):
    for j in range(10):
        iteration += 1
        print("Iteration {0}".format(iteration))
        model.gibbsSampling(1)
    # After every 10 iterations, print the 20 most probable terms of each topic.
    for topic_id in range(topic_number):
        phi_topic = [model.calculatePhi(topic_id, term_id) for term_id in range(term_number)]
        top_term_ids = sorted(range(term_number), key=lambda term_id: phi_topic[term_id], reverse=True)[:20]
        print("Topic {0}: {1}".format(topic_id, ', '.join(dataset.getTermById(term_id) for term_id in top_term_ids)))

# phi.matrix: one line per term, the term followed by its probability under each topic
with open('phi.matrix', 'w', encoding='utf-8') as fp:
    for term_id in range(term_number):
        phi_term = ['{:.15f}'.format(model.calculatePhi(topic_id, term_id)) for topic_id in range(topic_number)]
        fp.write('{0} {1}\n'.format(dataset.getTermById(term_id), ' '.join(phi_term)))

# theta.matrix: one line per document, the title followed by its topic proportions
with open('theta.matrix', 'w', encoding='utf-8') as fp:
    for document_id in range(document_number):
        theta_document = ['{:.15f}'.format(model.calculateTheta(document_id, topic_id)) for topic_id in range(topic_number)]
        fp.write('{0} {1}\n'.format(dataset.documentTitle(document_id), ' '.join(theta_document)))

print('OK')
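Since the goal is text clustering, one simple way to turn theta.matrix into clusters is to put each document into its most probable topic. The following is a minimal sketch under that assumption (a hard argmax assignment is a choice made here, not something the original script does); `cluster_lines` and `cluster_documents` are hypothetical helpers that parse the `title theta_0 ... theta_{K-1}` lines written above.

```python
from collections import defaultdict


def cluster_lines(lines):
    """Group document titles by the argmax of their theta row."""
    clusters = defaultdict(list)
    for line in lines:
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        title = fields[0]
        theta = [float(x) for x in fields[1:]]
        best_topic = max(range(len(theta)), key=theta.__getitem__)
        clusters[best_topic].append(title)
    return dict(clusters)


def cluster_documents(theta_path='theta.matrix'):
    """Read a theta.matrix file and return {topic_id: [document titles]}."""
    with open(theta_path, encoding='utf-8') as fp:
        return cluster_lines(fp)
```

For example, `cluster_documents('theta.matrix')` returns a dict mapping each topic id to the titles of the documents whose largest θ entry is that topic. Splitting each line on whitespace is safe here because the titles come from `line.split()` in the training script and therefore contain no spaces.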