Machine Learning Experiment Series: SoftMax Regression

Original post: http://blog.sina.com.cn/s/blog_6982136301015asd.html

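SoftMax regression can classify inputs into more than two classes, which is quite remarkable! Getting the implementation right was a bumpy ride, mainly because I did not fully understand the method when I started coding and my approach was not clearly thought out. A lesson learned!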

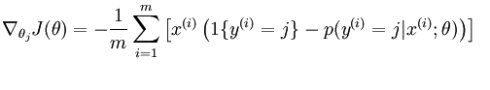

SoftMax regression belongs to the exponential family; for the derivation see http://cs229.stanford.edu/notes/cs229-notes1.pdf and http://ufldl.stanford.edu/wiki/index.php/Softmax_Regression. The final result is:
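
As a sketch consistent with the `h` function in the code further down, and assuming (as that code does) that the parameters of the last class are fixed at zero, the hypothesis for the first $K$ of the $K+1$ classes can be written as follows. The symbols $h_j$ and $l$ are notation chosen here for illustration.

$$
h_j(x) = P(y = j \mid x; \theta) = \frac{e^{\theta_j^T x}}{1 + \sum_{l=1}^{K} e^{\theta_l^T x}}, \qquad j = 1, \dots, K
$$

$$
P(y = K+1 \mid x; \theta) = 1 - \sum_{j=1}^{K} h_j(x)
$$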

The parameters theta are updated as follows:
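
Read off from the stochastic update in the code further down, the rule for a single training sample $(x^{(i)}, y^{(i)})$ appears to be the following, where $\alpha$ is the learning rate (0.001 in the code) and $1\{\cdot\}$ is the indicator function. The batch variant sums the same term over all samples before applying one update.

$$
\theta_j := \theta_j + \alpha \left( 1\{y^{(i)} = j\} - h_j(x^{(i)}) \right) x^{(i)}, \qquad j = 1, \dots, K
$$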

Note that theta[j] is a vector.

The experiment again follows the work of pennyliang (http://blog.csdn.net/pennyliang/article/details/7048291). The code is as follows:

```cpp
#include <cassert>
#include <cmath>
#include <iostream>

using namespace std;

const int K = 2; // there are K+1 classes; theta of the last class is fixed at 0
const int M = 9; // training set size
const int N = 4; // number of features, including the bias term x0
const double ALPHA = 0.001; // learning rate

double x[M][N] = {
    {1, 47, 76, 24}, // x0 = 1 is included as the bias term
    {1, 46, 77, 23},
    {1, 48, 74, 22},
    {1, 34, 76, 21},
    {1, 35, 75, 24},
    {1, 34, 77, 25},
    {1, 55, 76, 21},
    {1, 56, 74, 22},
    {1, 55, 72, 22},
};

int y[M] = {1, 1, 1, 2, 2, 2, 3, 3, 3}; // class labels, numbered from 1

double theta[K][N] = {
    {0.3, 0.3, 0.01, 0.01},
    {0.5, 0.5, 0.01, 0.01}}; // includes theta0

double h_value[K]; // h(x): probabilities of the first K classes

// Computes exp(q^T * x).
double fun_eqx(double* x, double* q)
{
    double sum = 0;
    for (int i = 0; i < N; i++)
    {
        sum += x[i] * q[i];
    }
    return exp(sum);
}

// Fills h_value with the hypothesis vector for input x.
void h(double* x)
{
    int i;
    double sum = 1; // theta[K+1] is assumed to be 0, so exp(theta[K+1]^T * x) = 1
    for (i = 0; i < K; i++)
    {
        h_value[i] = fun_eqx(x, theta[i]);
        sum += h_value[i];
    }
    assert(sum != 0);
    for (i = 0; i < K; i++)
    {
        h_value[i] /= sum;
    }
}

// One pass of stochastic gradient ascent: theta is updated after every sample.
void modify_stochostic()
{
    int i, j, k;
    for (j = 0; j < M; j++)
    {
        h(x[j]);
        for (i = 0; i < K; i++)
        {
            for (k = 0; k < N; k++)
            {
                theta[i][k] += ALPHA * x[j][k] * ((y[j] == i + 1 ? 1 : 0) - h_value[i]);
            }
        }
    }
}

// One pass of batch gradient ascent: the gradient is summed over all samples.
void modify_batch()
{
    int i, j, k;
    for (i = 0; i < K; i++)
    {
        double sum[N] = {0.0};
        for (j = 0; j < M; j++)
        {
            h(x[j]);
            for (k = 0; k < N; k++)
            {
                sum[k] += x[j][k] * ((y[j] == i + 1 ? 1 : 0) - h_value[i]);
            }
        }
        for (k = 0; k < N; k++)
        {
            // Divides by N (feature count) rather than M (sample count);
            // this only rescales the effective step size.
            theta[i][k] += ALPHA * sum[k] / N;
        }
    }
}

// Runs 10000 training passes with the selected update method.
void train(void)
{
    int i;
    for (i = 0; i < 10000; i++)
    {
        // modify_stochostic();
        modify_batch();
    }
}

// Trains, then prints the predicted probability vector for pre.
void predict(double* pre)
{
    int i;
    for (i = 0; i < K; i++)
        h_value[i] = 0;
    train(); // called on every prediction, so training accumulates across calls
    h(pre);
    for (i = 0; i < K; i++)
        cout << h_value[i] << " ";
    // Probability of the last class (written out for K = 2).
    cout << 1 - h_value[0] - h_value[1] << endl;
}

int main(void)
{
    for (int i = 0; i < M; i++)
    {
        predict(x[i]);
    }
    cout << endl;
    double pre[] = {1, 20, 80, 50};
    predict(pre);
    return 0;
}
```

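The code implements both batch and stochastic gradient descent. At the end of the experiment, each training sample is fed back in for prediction, with 10000 iterations per call to train(). Note that predict() calls train() on every invocation, so each successive row is produced after a further 10000 iterations. The results are: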

stochastic:

0.999504 0.000350044 0.000145502

0.997555 0.00242731 1.72341e-005

0.994635 1.24138e-005 0.00535281

2.59353e-005 0.999974 6.07695e-017

0.00105664 0.998943 -1.09071e-016

4.98481e-005 0.99995 3.45318e-017

0.0018048 1.56509e-012 0.998195

0.000176388 1.90889e-015 0.999824

0.000169041 8.42073e-016 0.999831

batch:

0.993387 0.00371185 0.00290158

0.991547 0.0081696 0.000283336

0.979246 0.000132495 0.0206216

0.000630111 0.99937 4.9303e-014

0.00378715 0.996213 9.37462e-014

0.000299602 0.9997 3.50739e-017

0.00759726 2.60939e-010 0.992403

0.0006897 1.09856e-012 0.99931

0.000545117 5.19157e-013 0.999455

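As the results show, stochastic gradient descent converges faster here: in every row, its probability for the correct class is closer to 1 than the batch result. This is plausible, since the stochastic version updates theta after every sample, whereas the batch version applies a single scaled-down update per pass.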
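
One way to put a number on this comparison is the average negative log-likelihood of the correct class, where lower means a better fit. The sketch below is not part of the original experiment: `mean_nll`, `kStochastic`, and `kBatch` are hypothetical names, and the arrays simply copy the correct-class probability from each row of the results above.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// Hypothetical helper: average negative log-likelihood over n samples,
// given the probability the model assigned to each sample's true class.
double mean_nll(const double* p_true, std::size_t n)
{
    assert(n > 0);
    double total = 0.0;
    for (std::size_t i = 0; i < n; i++)
    {
        assert(p_true[i] > 0.0); // log is undefined at 0
        total -= std::log(p_true[i]);
    }
    return total / static_cast<double>(n);
}

// Correct-class probabilities copied from the reported output:
// rows 1-3 are class 1, rows 4-6 class 2, rows 7-9 class 3.
const double kStochastic[9] = {0.999504, 0.997555, 0.994635,
                               0.999974, 0.998943, 0.99995,
                               0.998195, 0.999824, 0.999831};
const double kBatch[9] = {0.993387, 0.991547, 0.979246,
                          0.99937, 0.996213, 0.9997,
                          0.992403, 0.99931, 0.999455};
```

Calling `mean_nll(kStochastic, 9)` and `mean_nll(kBatch, 9)` gives one loss value per method; because every stochastic entry is larger than its batch counterpart, the stochastic loss comes out smaller.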

For prediction, the three numbers in each output row are the probabilities that the input belongs to class 1, 2, and 3 respectively.
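
To turn such a row into a single predicted label, one would typically pick the class with the largest probability. A minimal sketch, assuming the probabilities are stored in a plain array; `most_likely_class` is a hypothetical helper that does not appear in the original code.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper: returns the 1-based label of the class with the
// largest probability in probs[0..n-1]. Ties go to the lower label.
int most_likely_class(const double* probs, std::size_t n)
{
    assert(n > 0);
    std::size_t best = 0;
    for (std::size_t i = 1; i < n; i++)
    {
        if (probs[i] > probs[best])
            best = i;
    }
    return static_cast<int>(best) + 1; // classes are numbered 1, 2, 3
}
```

For example, passing the first stochastic row `{0.999504, 0.000350044, 0.000145502}` with `n = 3` selects class 1, which matches that sample's training label.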