Spark Installation and Configuration Lab

For installing Hadoop 2.7.2, see:

http://blog.csdn.net/wzy0623/article/details/50681554

For installing Hive 2.0.0, see:

http://blog.csdn.net/wzy0623/article/details/50685966

Note: Hive 2.0.0 requires the metastore schema to be initialized first, using the following command:

$HIVE_HOME/bin/schematool -initSchema -dbType mysql -userName root -passWord new_password

Otherwise, running hive fails with:

Exception in thread "main" java.lang.RuntimeException: Hive metastore database is not initialized. Please use schematool (e.g. ./schematool -initSchema -dbType ...) to create the schema. If needed, don't forget to include the option to auto-create the underlying database in your JDBC connection string (e.g. ?createDatabaseIfNotExist=true for mysql)

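Installing Spark

1. Download the Spark package from http://spark.apache.org/downloads.html. The download page is shown in Figure 1.

Figure 1

Note: If you plan to query Hive data with Spark SQL, pay close attention to version compatibility between Spark and Hive. The matching Spark version can be found in the pom.xml file of the Hive source package.

2. Extract the archive

tar -zxvf spark-1.6.0-bin-hadoop2.6.tgz

3. Create a symbolic link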

ln -s spark-1.6.0-bin-hadoop2.6 spark
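The version-neutral link is what makes a later upgrade cheap: only the link has to be repointed. A minimal sketch of that idea follows. The helper name link_release is hypothetical, and the demo runs in a throwaway directory instead of /home/grid.

```shell
#!/usr/bin/env bash
# Sketch: repoint a version-neutral "spark" symlink at a given release directory.
# link_release is a hypothetical helper, not something Spark ships.
link_release() {
    local base_dir="$1" release="$2"
    # -s: symbolic link, -f: replace an existing link,
    # -n: treat an existing link to a directory as a file instead of following it
    ( cd "$base_dir" && ln -sfn "$release" spark )
}

# Demo in a temporary directory so nothing under /home/grid is touched.
demo_dir="$(mktemp -d)"
mkdir "$demo_dir/spark-1.6.0-bin-hadoop2.6"
link_release "$demo_dir" spark-1.6.0-bin-hadoop2.6
readlink "$demo_dir/spark"
```

On the real host the call would be link_release /home/grid spark-1.6.0-bin-hadoop2.6.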

4. Configure environment variables

vi /etc/profile.d/spark.sh

# Add the following two lines

export SPARK_HOME=/home/grid/spark-1.6.0-bin-hadoop2.6

export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin
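After editing the profile script, it may help to confirm that a new login shell actually picked up the change. The sketch below assumes the paths from this step. path_contains is a hypothetical helper that only inspects the PATH string.

```shell
#!/usr/bin/env bash
# Sketch: report whether a directory is listed in a PATH-style string.
# path_contains is a hypothetical helper; the second argument defaults to $PATH.
path_contains() {
    local dir="$1" path_value="${2:-$PATH}"
    case ":$path_value:" in
        *":$dir:"*) return 0 ;;
        *)          return 1 ;;
    esac
}

# Fall back to the path used in this article if SPARK_HOME is not set yet.
spark_home="${SPARK_HOME:-/home/grid/spark-1.6.0-bin-hadoop2.6}"
for sub in bin sbin; do
    if path_contains "$spark_home/$sub"; then
        echo "$spark_home/$sub is on PATH"
    else
        echo "$spark_home/$sub is missing; try: source /etc/profile.d/spark.sh"
    fi
done
```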

5. Create spark-env.sh

cd /home/grid/spark/conf/

cp spark-env.sh.template spark-env.sh

vi spark-env.sh

# Add the following settings

export JAVA_HOME=/home/grid/jdk1.7.0_75

export HADOOP_HOME=/home/grid/hadoop-2.7.2

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export SPARK_HOME=/home/grid/spark-1.6.0-bin-hadoop2.6

SPARK_MASTER_IP=master

SPARK_LOCAL_DIRS=/home/grid/spark

SPARK_DRIVER_MEMORY=1G
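A typo in spark-env.sh tends to surface only when the daemons start, so a quick check that every expected variable is assigned can save a restart. The sketch below is one way to do that. missing_vars is a hypothetical helper, and the variable list simply mirrors the settings above.

```shell
#!/usr/bin/env bash
# Sketch: print every variable name that a shell config file never assigns.
# missing_vars is a hypothetical helper: missing_vars FILE VAR...
missing_vars() {
    local file="$1" var
    shift
    for var in "$@"; do
        # Accept "VAR=" or "export VAR=" at the start of a line, ignoring leading blanks.
        grep -Eq "^[[:space:]]*(export[[:space:]]+)?${var}=" "$file" || echo "$var"
    done
}

# Path assumed from this article; the check is skipped when the file is absent.
env_file=/home/grid/spark/conf/spark-env.sh
if [ -f "$env_file" ]; then
    missing_vars "$env_file" JAVA_HOME HADOOP_HOME HADOOP_CONF_DIR SPARK_HOME \
        SPARK_MASTER_IP SPARK_LOCAL_DIRS SPARK_DRIVER_MEMORY
fi
```

Empty output means every listed variable is assigned somewhere in the file; it does not validate the values themselves.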

6. Configure slaves

cd /home/grid/spark/conf/

vi slaves

# Add the following two lines

slave1

slave2

7. Copy the configured spark-1.6.0-bin-hadoop2.6 directory to each slave:

scp -r spark-1.6.0-bin-hadoop2.6 slave1:/home/grid/

scp -r spark-1.6.0-bin-hadoop2.6 slave2:/home/grid/
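With more than a couple of slaves, the copy can be driven from the slaves file written in step 6 instead of being typed once per host. The following is a sketch under that assumption. copy_to_slaves and the DRY_RUN switch are hypothetical names, and by default the commands are only printed.

```shell
#!/usr/bin/env bash
# Sketch: copy a directory to every host listed in a slaves file.
# copy_to_slaves SLAVES_FILE SRC DEST  (hypothetical helper)
# With DRY_RUN=1, the default here, the scp commands are printed instead of run.
copy_to_slaves() {
    local slaves_file="$1" src="$2" dest="$3" host
    # Skip blank lines and comment lines in the slaves file.
    grep -Ev '^[[:space:]]*(#|$)' "$slaves_file" | while read -r host; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "scp -r $src $host:$dest"
        else
            # </dev/null keeps scp from consuming the remaining host names on stdin.
            scp -r "$src" "$host:$dest" </dev/null
        fi
    done
}

# Path assumed from this article; skipped when the file does not exist.
if [ -f /home/grid/spark/conf/slaves ]; then
    copy_to_slaves /home/grid/spark/conf/slaves spark-1.6.0-bin-hadoop2.6 /home/grid/
fi
```

Running it with DRY_RUN=0 performs the real copy, which assumes passwordless SSH to each slave is already in place.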

8. Configure YARN

vi /home/grid/hadoop-2.7.2/etc/hadoop/yarn-site.xml

# Change the following property

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>2048</value>

</property>
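To confirm that the edit landed in the file YARN actually reads, the value can be pulled back out of the XML. The sketch below is deliberately simple: get_property is a hypothetical helper, and it assumes each <name> and <value> element sits on its own line, as in the snippet above. It is not a general XML parser.

```shell
#!/usr/bin/env bash
# Sketch: print the <value> of a named property in a Hadoop-style XML file.
# get_property FILE NAME  (hypothetical helper)
# Assumes <name>...</name> and <value>...</value> are each on a single line.
get_property() {
    local file="$1" name="$2"
    awk -v name="$name" '
        /<name>/  { gsub(/.*<name>|<\/name>.*/, ""); current = $0; next }
        /<value>/ && current == name { gsub(/.*<value>|<\/value>.*/, ""); print; exit }
    ' "$file"
}

# Path assumed from this article; skipped when the file does not exist.
yarn_site=/home/grid/hadoop-2.7.2/etc/hadoop/yarn-site.xml
if [ -f "$yarn_site" ]; then
    get_property "$yarn_site" yarn.nodemanager.resource.memory-mb
fi
```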

9. Start the services

# Start HDFS

start-dfs.sh

# Start YARN

start-yarn.sh

# Start Spark

$SPARK_HOME/sbin/start-all.sh

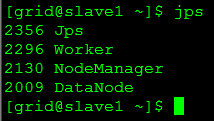

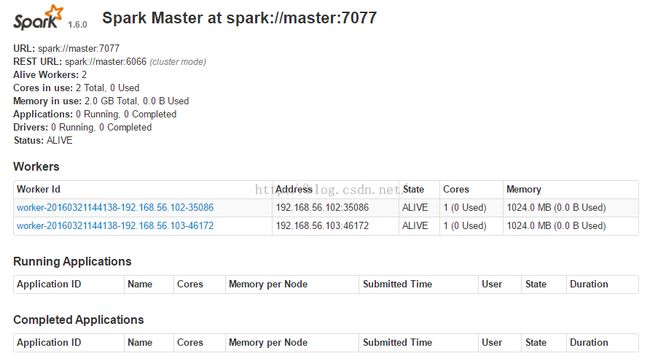
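The three start commands depend on each other, so it can be convenient to run them from one wrapper that stops at the first failure. The sketch below uses a hypothetical helper, run_steps. Note that the start scripts may not return a non-zero status for every kind of daemon failure, so this does not replace checking jps afterwards.

```shell
#!/usr/bin/env bash
# Sketch: run commands in order and stop at the first one that fails.
# run_steps CMD...  (hypothetical helper; each argument is one whole command line)
run_steps() {
    local step
    for step in "$@"; do
        echo "==> $step"
        # Intentionally unquoted so that "cmd arg" is split into command and argument.
        $step || { echo "step failed: $step" >&2; return 1; }
    done
}

# Only attempt the real start sequence when the Hadoop scripts are on PATH.
if command -v start-dfs.sh >/dev/null 2>&1 && [ -n "${SPARK_HOME:-}" ]; then
    run_steps start-dfs.sh start-yarn.sh "$SPARK_HOME/sbin/start-all.sh"
fi
```

Spark's start-all.sh is called by its full path because Hadoop's sbin directory also contains a script named start-all.sh, and both directories may be on PATH.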

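10. Once startup completes, check the processes on the master and slave nodes and open the Spark web UI, as shown in Figures 2, 3, and 4.

Check the master's processes with jps

Figure 2

Check the slaves' processes with jps

Figure 3

http://192.168.17.210:8080/

Figure 4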
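The jps check can also be scripted so that a missing daemon is reported by name. The sketch below reads jps output on standard input. missing_daemons is a hypothetical helper, and the daemon list in the usage line is an assumption about a typical layout (HDFS NameNode, YARN ResourceManager, and Spark standalone Master on the master node), so adjust it to the actual cluster.

```shell
#!/usr/bin/env bash
# Sketch: list expected daemons that are absent from jps output.
# missing_daemons "NAME ..."  (hypothetical helper; reads jps output on stdin)
missing_daemons() {
    local expected="$1" jps_output name
    jps_output="$(cat)"
    for name in $expected; do
        # jps prints "<pid> <main class name>", so compare against the second field.
        echo "$jps_output" \
            | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' \
            || echo "$name"
    done
}

# Assumed daemon list for the master node; skipped when jps is not installed.
if command -v jps >/dev/null 2>&1; then
    jps | missing_daemons "NameNode ResourceManager Master"
fi
```

On a slave the corresponding list would typically be "DataNode NodeManager Worker". Empty output means every listed daemon was found.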

11. Test

# Put a local text file into HDFS under the name input

hadoop fs -put /home/grid/hadoop-2.7.2/README.txt input

# Log in to the Spark master node and start spark-shell

cd $SPARK_HOME/bin

./spark-shell

# Run the word count

val file=sc.textFile("hdfs://master:9000/user/grid/input")

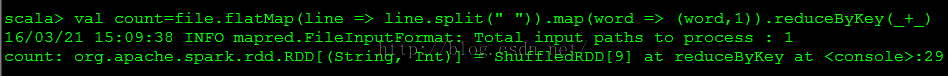

val count=file.flatMap(line => line.split(" ")).map(word => (word,1)).reduceByKey(_+_)

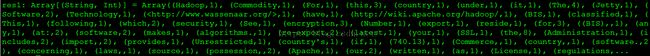

count.collect()

The execution of the three commands above is shown in Figures 5, 6, and 7.

Figure 5

Figure 6

Figure 7
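Since the input is a small local file, the Spark result can be cross-checked against a plain shell pipeline. The sketch below is an approximation, not an exact equivalent: word_count is a hypothetical helper, and it drops empty tokens, whereas line.split(" ") in Scala can yield empty strings for consecutive spaces, so counts for the empty word will differ.

```shell
#!/usr/bin/env bash
# Sketch: count words in a local file by splitting on single spaces.
# word_count FILE  (hypothetical helper; prints "count word", most frequent first)
word_count() {
    # One token per line, drop empty tokens, then count identical lines.
    tr ' ' '\n' < "$1" | grep -v '^$' | sort | uniq -c | sort -rn
}

# Path assumed from this article; skipped when the file does not exist.
readme=/home/grid/hadoop-2.7.2/README.txt
if [ -f "$readme" ]; then
    word_count "$readme"
fi
```

Comparing a few of the most frequent words with the output of count.collect() is usually enough to confirm that the job read the whole file.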

Testing SparkSQL:

Create a hive-site.xml file in the $SPARK_HOME/conf directory and add the hive.metastore.uris property to it, as follows:

<configuration>

<property>

<name>hive.metastore.uris</name>

<value>thrift://master:9083</value>

<description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>

</property>

</configuration>

# Start the Hive metastore service

hive --service metastore > /tmp/grid/hive_metastore.log 2>&1 &
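The metastore runs in the background and needs a few seconds before it accepts connections on the Thrift port configured above (9083). A small wait loop avoids starting the SparkSQL CLI too early. The sketch below uses a hypothetical helper, wait_for_port, and relies on bash's /dev/tcp redirection, so it needs bash rather than plain sh.

```shell
#!/usr/bin/env bash
# Sketch: wait until a TCP port accepts connections, or give up after a timeout.
# wait_for_port HOST PORT [TIMEOUT_SECONDS]  (hypothetical helper, bash only)
wait_for_port() {
    local host="$1" port="$2" timeout="${3:-30}" waited=0
    # The subshell opens and immediately closes a connection via bash's /dev/tcp.
    until (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; do
        [ "$waited" -ge "$timeout" ] && return 1
        sleep 1
        waited=$((waited + 1))
    done
}
```

A possible use, with the host name and port taken from hive-site.xml above, is wait_for_port master 9083 60 followed by the spark-sql command only if the call succeeds.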

# Start the SparkSQL CLI

spark-sql --master spark://master:7077 --executor-memory 1g

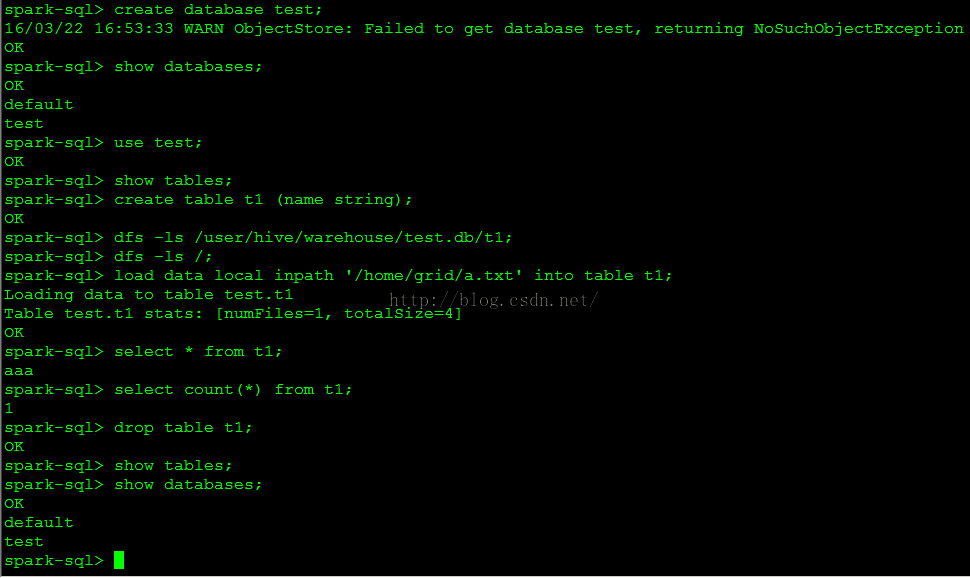

# HQL statements can now be used to query Hive data

show databases;

create database test;

use test;

create table t1 (name string);

load data local inpath '/home/grid/a.txt' into table t1;

select * from t1;

select count(*) from t1;

drop table t1;

The SQL execution is shown in Figure 8.

Figure 8
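The same statements can be run non-interactively by putting them in a script file and passing it to spark-sql with -f. The sketch below writes a small data file and a SQL script to a temporary directory. The sample rows are made up for illustration, and the "if not exists" clauses are an addition so the script can be rerun.

```shell
#!/usr/bin/env bash
# Sketch: run the test statements as a batch instead of typing them in the CLI.
work_dir="$(mktemp -d)"

# Made-up sample rows standing in for /home/grid/a.txt.
printf '%s\n' alice bob carol > "$work_dir/a.txt"

# The heredoc is unquoted on purpose so $work_dir expands inside the SQL text.
cat > "$work_dir/test.sql" <<EOF
create database if not exists test;
use test;
create table if not exists t1 (name string);
load data local inpath '$work_dir/a.txt' into table t1;
select count(*) from t1;
drop table t1;
EOF

# Only call spark-sql when it is installed; master URL and memory as in this article.
if command -v spark-sql >/dev/null 2>&1; then
    spark-sql --master spark://master:7077 --executor-memory 1g -f "$work_dir/test.sql"
else
    echo "spark-sql not found; script left in $work_dir/test.sql"
fi
```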

In a simple comparison test, SparkSQL was nearly three times faster than Hive on 300 GB of data and 7.5 times faster on 3 TB of data.

References:

http://spark.apache.org/docs/latest/running-on-yarn.html

http://blog.csdn.net/u014039577/article/details/50829910

http://www.cnblogs.com/shishanyuan/p/4723604.html

http://www.cnblogs.com/shishanyuan/p/4723713.html