Facial Keypoint Detection

Results first, then the code. The main dependencies are OpenCV, Lasagne and nolearn.

This image shows pictures from the training dataset. Dataset: https://www.kaggle.com/c/facial-keypoints-detection. The images are stored in CSV files.
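In the CSV files each image is a single `Image` field: the 96×96 grayscale pixels written out as one space-separated string. A minimal sketch of turning one such field into a 2-D array, assuming row-major pixel order (the same order the code below relies on when it calls `reshape(96, 96)`). `parse_image` is a hypothetical helper, and the input row is synthetic, not taken from the dataset:

```python
import numpy as np

IMAGE_SIZE = 96  # the dataset's images are 96x96 grayscale


def parse_image(pixel_str, size=IMAGE_SIZE):
    """Turn one space-separated pixel string into a (size, size) float32 array in [0, 1]."""
    pixels = np.array(pixel_str.split(), dtype=np.float32)
    if pixels.size != size * size:
        raise ValueError("expected %d pixels, got %d" % (size * size, pixels.size))
    return pixels.reshape(size, size) / 255.0


# self-check with a synthetic row (values 0..255 repeated), not real dataset data
row = " ".join(str(i % 256) for i in range(IMAGE_SIZE * IMAGE_SIZE))
img = parse_image(row)
print(img.shape, img.min(), img.max())
```

The code below does the same job with `np.fromstring(im, sep=' ')` inside a pandas `apply`; the explicit size check here just makes a truncated row fail loudly instead of breaking a later reshape.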

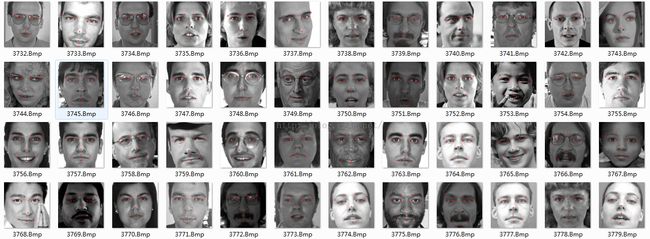

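This image shows the detection results after training. They are fairly good, although detection on profile (side-view) faces is noticeably worse, and a small number of images show large deviations. That said, the image quality in this dataset really isn't great.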

```python
# coding:utf-8
import os

import cv2
import numpy as np
from pandas import read_csv
from sklearn.utils import shuffle
from lasagne import layers
from lasagne.updates import nesterov_momentum
from nolearn.lasagne import NeuralNet
import theano.tensor as T
```

```python
def load():
    # load the training CSV into a pandas DataFrame
    df = read_csv(os.path.expanduser('training.csv'))
    # the Image column holds 96*96 space-separated pixel values per row
    df['Image'] = df['Image'].apply(lambda im: np.fromstring(im, sep=' '))
    print(df.count())  # prints the number of values for each column
    df = df.dropna()  # drop all rows that have missing values in them
    X = np.vstack(df['Image'].values) / 255.  # scale pixel values to [0, 1]
    X = X.astype(np.float32)
    y = df[df.columns[:-1]].values  # all columns except Image: the 30 keypoint coordinates
    y = (y - 48) / 48.  # scale target coordinates to [-1, 1]
    X, y = shuffle(X, y, random_state=42)  # shuffle train data
    y = y.astype(np.float32)
    return X, y
```
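The target scaling in `load()` maps pixel coordinates in [0, 96] onto [-1, 1], and the test functions further down undo it with `y * 48 + 48` before drawing. A small sketch of the two mappings as a round trip; the helper names are hypothetical:

```python
import numpy as np

HALF = 48.0  # half of the 96-pixel image width, as used in load() and the test functions


def normalize_keypoints(y):
    """Pixel coordinates in [0, 96] -> [-1, 1], the same mapping as load()."""
    return (np.asarray(y, dtype=np.float64) - HALF) / HALF


def denormalize_keypoints(y_scaled):
    """Inverse mapping applied to predictions before drawing: [-1, 1] -> [0, 96]."""
    return np.asarray(y_scaled, dtype=np.float64) * HALF + HALF


coords = np.array([0.0, 48.0, 96.0, 30.5])
scaled = normalize_keypoints(coords)
print(scaled)
print(denormalize_keypoints(scaled))
```

Keeping the targets centred on zero with unit range suits both the identity outputs of `net1`/`net2` and the `tanh` output of `net3`, whose values can never leave (-1, 1).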

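```python
def load_testdata():
    df = read_csv(os.path.expanduser('test.csv'))  # load pandas dataframe
    df['Image'] = df['Image'].apply(lambda im: np.fromstring(im, sep=' '))
    X = np.vstack(df['Image'].values)
    X = X.astype(np.float32)
    X = X / 255.  # same [0, 1] pixel scaling as the training data
    return X

# Disabled helper: dump the test images to ./test_data/ for inspection
# X = load_testdata()
# for i in range(len(X)):
#     img = (X[i] * 255).reshape(96, 96).astype(np.uint8)  # back to 8-bit pixels
#     add = './test_data/' + str(i) + '.Bmp'
#     cv2.imwrite(add, img)
```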

```python
net1 = NeuralNet(
    layers=[  # three layers: one hidden layer
        ('input', layers.InputLayer),
        ('hidden', layers.DenseLayer),
        ('output', layers.DenseLayer),
    ],
    # layer parameters:
    input_shape=(None, 9216),  # 96x96 input pixels per batch
    hidden_num_units=100,  # number of units in hidden layer
    output_nonlinearity=None,  # output layer uses identity function
    output_num_units=30,  # 30 target values
    # optimization method:
    update=nesterov_momentum,
    update_learning_rate=0.01,
    update_momentum=0.9,
    regression=True,  # flag to indicate we're dealing with a regression problem, not classification
    max_epochs=400,  # we want to train this many epochs
    verbose=1,
)
```
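To see what `net1` computes, here is a NumPy sketch of its forward pass with random weights (shape checking only; real weights come from training). It assumes Lasagne's `DenseLayer` default nonlinearity, rectify (ReLU), for the hidden layer, since `net1` does not set `hidden_nonlinearity`, and uses the identity on the output as `output_nonlinearity=None` requests:

```python
import numpy as np

rng = np.random.RandomState(0)
n_in, n_hidden, n_out = 9216, 100, 30  # layer sizes taken from net1

# random weights, only to check shapes
W1 = rng.normal(scale=0.01, size=(n_in, n_hidden)).astype(np.float32)
b1 = np.zeros(n_hidden, dtype=np.float32)
W2 = rng.normal(scale=0.01, size=(n_hidden, n_out)).astype(np.float32)
b2 = np.zeros(n_out, dtype=np.float32)


def forward(X):
    hidden = np.maximum(0.0, np.dot(X, W1) + b1)  # rectify (ReLU) hidden layer
    return np.dot(hidden, W2) + b2  # identity output: unbounded real values


X = rng.rand(5, n_in).astype(np.float32)  # a fake batch of 5 flattened 96x96 images
out = forward(X)
n_params = W1.size + b1.size + W2.size + b2.size
print(out.shape, n_params)
```

Almost all of the 924,730 parameters sit in the first weight matrix (9216 × 100), which is one reason a convolutional network such as `net2` is worth trying on raw pixels.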

```python
net2 = NeuralNet(
    layers=[
        ('input', layers.InputLayer),
        ('conv1', layers.Conv2DLayer),
        ('pool1', layers.MaxPool2DLayer),
        ('dropout1', layers.DropoutLayer),
        ('conv2', layers.Conv2DLayer),
        ('pool2', layers.MaxPool2DLayer),
        ('dropout2', layers.DropoutLayer),
        ('conv3', layers.Conv2DLayer),
        ('pool3', layers.MaxPool2DLayer),
        ('dropout3', layers.DropoutLayer),
        ('hidden4', layers.DenseLayer),
        ('dropout4', layers.DropoutLayer),
        ('hidden5', layers.DenseLayer),
        ('output', layers.DenseLayer),
    ],
    input_shape=(None, 1, 96, 96),  # (batch, channels, height, width)
    conv1_num_filters=32, conv1_filter_size=(3, 3), pool1_pool_size=(2, 2),
    conv2_num_filters=64, conv2_filter_size=(3, 3), pool2_pool_size=(2, 2),
    conv3_num_filters=128, conv3_filter_size=(3, 3), pool3_pool_size=(2, 2),
    hidden4_num_units=500, hidden5_num_units=500,
    dropout1_p=0.1, dropout2_p=0.2, dropout3_p=0.2, dropout4_p=0.5,
    output_num_units=30, output_nonlinearity=None,
    update_learning_rate=0.01,
    update_momentum=0.9,
    regression=True,
    max_epochs=200,
    verbose=1,
)
```
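It helps to know how large the feature maps are before they reach `hidden4`. The sketch below works this out assuming Lasagne's defaults as I understand them: `Conv2DLayer` with stride 1 and no padding ("valid" convolution), and `MaxPool2DLayer` with stride equal to the pool size and `ignore_border=True` (the odd leftover row/column is dropped). Dropout layers do not change shapes. The helper names are hypothetical:

```python
def conv_out(size, filter_size):
    """'valid' convolution with stride 1: the map shrinks by filter_size - 1."""
    return size - filter_size + 1


def pool_out(size, pool_size):
    """Non-overlapping max pooling that drops the leftover border."""
    return size // pool_size


def net2_feature_shapes(size=96, filters=(32, 64, 128), filter_size=3, pool_size=2):
    shapes = []
    for n_filters in filters:
        size = pool_out(conv_out(size, filter_size), pool_size)
        shapes.append((n_filters, size, size))
    return shapes


shapes = net2_feature_shapes()
for i, s in enumerate(shapes, 1):
    print("after conv%d + pool%d:" % (i, i), s)
c, h, w = shapes[-1]
print("units flattened into hidden4:", c * h * w)
```

So the spatial size goes 96 → 94 → 47, 47 → 45 → 22, 22 → 20 → 10, and `hidden4` receives 128 × 10 × 10 = 12,800 inputs, i.e. 12,800 × 500 = 6,400,000 weights in that layer alone.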

```python
net3 = NeuralNet(
    # four layers: two hidden layers
    layers=[
        ('input', layers.InputLayer),
        ('h1', layers.DenseLayer),
        ('h2', layers.DenseLayer),
        ('output', layers.DenseLayer),
    ],
    # layer parameters:
    input_shape=(None, 9216),  # 96x96 input pixels per batch; None = variable batch size
    h1_num_units=300,  # number of units in the first hidden layer
    h1_nonlinearity=T.tanh,
    h2_num_units=100,
    h2_nonlinearity=None,  # linear (identity); rectify is an alternative
    output_nonlinearity=T.tanh,  # None if the output layer should use the identity function
    output_num_units=30,  # 30 target values
    # optimization method:
    update=nesterov_momentum,
    update_learning_rate=0.01,
    update_momentum=0.9,
    regression=True,  # flag to indicate we're dealing with a regression problem
    max_epochs=1000,  # we want to train this many epochs
    eval_size=0.1,  # fraction of the training data held out for validation
    verbose=1,
)
```
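`net3` keeps 10% of the training data aside for validation via `eval_size=0.1`. A minimal sketch of such a hold-out split, assuming a shuffled random split; nolearn's own splitting logic may differ in details (for example whether it shuffles), so treat `holdout_split` as a hypothetical illustration rather than the library's behaviour:

```python
import numpy as np


def holdout_split(X, y, eval_size=0.1, seed=42):
    """Hold out a fraction eval_size of the samples for validation, keeping X and y aligned."""
    n = len(X)
    n_valid = int(round(n * eval_size))
    order = np.random.RandomState(seed).permutation(n)
    valid_idx, train_idx = order[:n_valid], order[n_valid:]
    return X[train_idx], X[valid_idx], y[train_idx], y[valid_idx]


# synthetic data: row i of X is [2i, 2i + 1] and y[i] = i
X = np.arange(200, dtype=np.float32).reshape(100, 2)
y = np.arange(100, dtype=np.float32)
X_tr, X_va, y_tr, y_va = holdout_split(X, y)
print(len(X_tr), len(X_va))
```

The validation loss on the held-out part is the number to watch for overfitting, especially with `max_epochs=1000`.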

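```python
def train_mlp():
    x, y = load()
    # resume from earlier weights only if a previous run saved them
    if os.path.exists('face_weight_mlp'):
        net1.load_params_from('face_weight_mlp')
    net1.fit(x, y)
    net1.save_params_to('face_weight_mlp')


def test_mlp():
    net1.load_params_from('face_weight_mlp')
    x = load_testdata()
    y = net1.predict(x)
    # save the images with the predicted keypoints drawn on them
    x = x * 255  # back to [0, 255] pixel values
    y = y * 48 + 48  # back to pixel coordinates
    if not os.path.isdir('./predict'):
        os.makedirs('./predict')
    for i in range(len(x)):
        tmp = x[i].reshape(96, 96).astype(np.uint8)
        img = cv2.cvtColor(tmp, cv2.COLOR_GRAY2BGR)
        add = './predict/' + str(i) + '.Bmp'
        for j in range(15):
            # cv2.circle needs integer pixel coordinates
            point = (int(round(y[i][2 * j])), int(round(y[i][2 * j + 1])))
            cv2.circle(img, point, 1, (0, 255, 0))
        cv2.imwrite(add, img)
```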
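Each prediction row is 30 numbers that alternate x and y (the loop reads `y[i][2*j]` and `y[i][2*j+1]`), on the [-1, 1] scale. Recent OpenCV versions reject float centres in `cv2.circle`, so the values have to become integer pixels first. A sketch of that conversion, which also clips points that land outside the 96×96 image; `to_pixel_points` is a hypothetical helper:

```python
import numpy as np


def to_pixel_points(pred_row, size=96):
    """Turn one row of 30 scaled predictions into 15 integer (x, y) points inside the image."""
    half = size / 2.0
    pts = np.asarray(pred_row, dtype=np.float64).reshape(15, 2) * half + half
    pts = np.clip(np.rint(pts), 0, size - 1).astype(int)
    return [tuple(int(v) for v in p) for p in pts]


row = np.zeros(30)
row[0], row[1] = -1.0, 1.2  # an out-of-range y prediction, clipped to the last row
points = to_pixel_points(row)
print(points[:2])
```

The resulting tuples can be passed straight to `cv2.circle(img, point, 1, (0, 255, 0))`.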

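```python
def train_ann():
    x, y = load()
    # resume from earlier weights only if a previous run saved them
    # (the original loaded them into net1 by mistake; net3 is the net trained here)
    if os.path.exists('face_weight_ann'):
        net3.load_params_from('face_weight_ann')
    net3.fit(x, y)
    net3.save_params_to('face_weight_ann')


def test_ann():
    net3.load_params_from('face_weight_ann')
    x = load_testdata()
    y = net3.predict(x)
    # save the images with the predicted keypoints drawn on them
    x = x * 255  # back to [0, 255] pixel values
    y = y * 48 + 48  # back to pixel coordinates
    if not os.path.isdir('./predict'):
        os.makedirs('./predict')
    for i in range(len(x)):
        tmp = x[i].reshape(96, 96).astype(np.uint8)
        img = cv2.cvtColor(tmp, cv2.COLOR_GRAY2BGR)
        add = './predict/' + str(i) + '.Bmp'
        for j in range(15):
            # cv2.circle needs integer pixel coordinates
            point = (int(round(y[i][2 * j])), int(round(y[i][2 * j + 1])))
            cv2.circle(img, point, 1, (0, 255, 0))
        cv2.imwrite(add, img)
```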

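```python
def train_cnn():
    x, y = load()
    x = x.reshape(-1, 1, 96, 96)  # (N, 9216) -> (N, channels, height, width)
    # resume from earlier weights only if a previous run saved them
    if os.path.exists('face_weight_cnn'):
        net2.load_params_from('face_weight_cnn')
    net2.fit(x, y)
    net2.save_params_to('face_weight_cnn')


def test_cnn():
    net2.load_params_from('face_weight_cnn')
    x = load_testdata()
    x = x.reshape(-1, 1, 96, 96)
    y = net2.predict(x)
    # save the images with the predicted keypoints drawn on them
    x = x * 255  # back to [0, 255] pixel values
    y = y * 48 + 48  # back to pixel coordinates
    if not os.path.isdir('./predict'):
        os.makedirs('./predict')
    for i in range(len(x)):
        tmp = x[i].reshape(96, 96).astype(np.uint8)
        img = cv2.cvtColor(tmp, cv2.COLOR_GRAY2BGR)
        add = './predict/' + str(i) + '.Bmp'
        for j in range(15):
            # cv2.circle needs integer pixel coordinates
            point = (int(round(y[i][2 * j])), int(round(y[i][2 * j + 1])))
            cv2.circle(img, point, 1, (0, 255, 0))
        cv2.imwrite(add, img)
```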
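The only data difference between the dense networks and the CNN is the input layout: `net1`/`net3` take flattened rows of shape (N, 9216), while `net2` expects (N, 1, 96, 96), which is what `x.reshape(-1, 1, 96, 96)` produces. A quick check, on random stand-in data, that this reshape only regroups the values and keeps every pixel where the row-major layout puts it:

```python
import numpy as np

flat = np.random.RandomState(0).rand(4, 96 * 96).astype(np.float32)  # stands in for load_testdata()
batch = flat.reshape(-1, 1, 96, 96)  # (N, channels=1, height=96, width=96)
print(batch.shape)

# pixel k of image n lands at row k // 96, column k % 96
n, k = 2, 1234
assert batch[n, 0, k // 96, k % 96] == flat[n, k]
```

Because the reshape is just a different view of the same memory, it costs nothing, and `-1` lets NumPy infer the batch size.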

```python
if __name__ == '__main__':
    # uncomment the step you want to run
    # train_ann()
    # test_ann()
    # train_mlp()
    # test_mlp()
    # train_cnn()
    test_cnn()
    print('OK')
```
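With `verbose=1`, nolearn prints training and validation loss each epoch. Assuming that loss is the mean squared error on the scaled targets, it can be read in pixels: a scaled error e corresponds to 48·e pixels, so RMSE in pixels = 48 · sqrt(MSE). A small sketch; the loss value 0.001 is purely illustrative, not a result from the runs above:

```python
import math


def loss_to_pixel_rmse(mse_scaled, half_width=48.0):
    """Convert an MSE measured on the [-1, 1] target scale into an RMSE in pixels."""
    return math.sqrt(mse_scaled) * half_width


print(loss_to_pixel_rmse(0.001))  # illustrative loss value only
```

This makes it easier to compare `net1`, `net2` and `net3`, and to judge how far off the worse profile-face predictions are.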

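The code is fairly simple, but I ran into quite a few problems while debugging it. After reading about the algorithm I thought it looked simple and wanted to write it myself in Keras, but the model wouldn't converge at all. The cause turned out to be simple: the task here is a regression problem, not a classification problem, and the Keras functions I had used before only offered interfaces for classification. (If anyone knows how to solve regression problems with Keras, please let me know; I'd be very grateful.)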
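The difference between the two settings can be written out. A classification head ends in a softmax over K classes and is trained with cross-entropy against one-hot labels $t_{nk}$, so its outputs are forced to sum to 1. This task instead needs 30 independent real outputs $\hat{y}_{nk}$ (15 (x, y) pairs on the [-1, 1] scale) trained with a squared error against the targets $y_{nk}$, which is the setup `net1` and `net2` aim for with an identity output and `regression=True`:

```latex
\mathcal{L}_{\text{CE}} = -\frac{1}{N}\sum_{n=1}^{N}\sum_{k=1}^{K} t_{nk}\,
  \log\frac{e^{z_{nk}}}{\sum_{j=1}^{K} e^{z_{nj}}}
\qquad\text{vs.}\qquad
\mathcal{L}_{\text{MSE}} = \frac{1}{30N}\sum_{n=1}^{N}\sum_{k=1}^{30}
  \left(\hat{y}_{nk} - y_{nk}\right)^{2}
```

Here $z_{nk}$ are the pre-softmax outputs. Feeding keypoint coordinates into the left-hand loss makes no sense, which fits the non-convergence described above.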

With no other option I switched to Lasagne, only to have the program fail with the following error:

```
File "/usr/local/lib/python2.7/dist-packages/theano/gradient.py", line 432, in grad
    raise TypeError("cost must be a scalar.")
TypeError: cost must be a scalar.
```
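The message itself is about shapes: Theano's `grad` differentiates a single scalar cost, but a squared-error loss over a batch is naturally an (N, 30) array with one value per sample and coordinate. It has to be reduced, typically by averaging, before gradients are taken. As far as I know, newer Lasagne versions return such element-wise losses from `lasagne.objectives.squared_error` and reduce them with `lasagne.objectives.aggregate`, so code written for an older interface can end up passing the unreduced array. A NumPy sketch of the two steps, with hypothetical function names:

```python
import numpy as np


def squared_error(pred, target):
    """Element-wise squared error: one value per sample and per coordinate."""
    return (pred - target) ** 2


def aggregate_mean(loss):
    """Reduce the element-wise loss to the single scalar a gradient routine needs."""
    return float(np.mean(loss))


pred = np.array([[0.1, -0.2], [0.0, 0.5]])   # in the real model this would be (N, 30)
target = np.array([[0.0, 0.0], [0.0, 0.3]])
elementwise = squared_error(pred, target)
cost = aggregate_mean(elementwise)
print(elementwise.shape, cost)
```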

There is a fix here: https://github.com/dnouri/kfkd-tutorial/issues/16

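It mainly comes down to these three commands:

```shell
pip uninstall Lasagne
pip uninstall nolearn
pip install -r https://raw.githubusercontent.com/dnouri/kfkd-tutorial/master/requirements.txt
```

At the time I couldn't find this fix (Baidu wasn't much help), so I tried to solve it myself. This error is really nasty; I even went and read the source code, without getting much out of it. In the end, the workaround I came up with was to write the network myself in Theano. The main part that needs to change is as follows: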

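The cost function here is built on class probabilities for a classification problem, whereas the cost we want should measure the difference between the predicted and true values; the LMS (least mean squares) function seemed a good fit (I haven't studied much, this is the only one I know - -). However, Theano is rather painful to debug and my Python is weak, so debugging was taking far too long; I gave up and went back to the original problem. Honestly, I'm also a bit lazy: after using these libraries, I don't really feel like going back to plain Theano.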
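For reference, the least-mean-squares idea on a linear output layer is short to write down. The sketch below is a batch gradient-descent version (classic LMS / Widrow-Hoff updates one sample at a time) under the mean-squared-error cost, in NumPy rather than Theano, on synthetic data rather than the face data. `mse` and `lms_step` are hypothetical names:

```python
import numpy as np


def mse(pred, target):
    """Mean squared error over all samples and outputs."""
    return float(np.mean((pred - target) ** 2))


def lms_step(W, b, X, Y, lr):
    """One gradient-descent step on pred = X W + b under the MSE cost."""
    pred = np.dot(X, W) + b
    err = pred - Y                        # (N, K) prediction errors
    n = X.shape[0] * Y.shape[1]           # number of terms averaged in the MSE
    grad_W = 2.0 * np.dot(X.T, err) / n   # d MSE / d W
    grad_b = 2.0 * err.sum(axis=0) / n    # d MSE / d b
    return W - lr * grad_W, b - lr * grad_b


rng = np.random.RandomState(0)
X = rng.randn(64, 8)
true_W = rng.randn(8, 3)
Y = np.dot(X, true_W)                     # synthetic linear targets
W, b = np.zeros((8, 3)), np.zeros(3)
before = mse(np.dot(X, W) + b, Y)
for _ in range(200):
    W, b = lms_step(W, b, X, Y, lr=0.5)
after = mse(np.dot(X, W) + b, Y)
print(before, after)
```

In Theano the same cost would be the mean of the squared difference between the network output and the targets, with `theano.grad` taking the place of the hand-written gradients.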