Online Speech Recognition in Unity with the iFlytek SDK

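This post covers speech recognition in Unity using iFlytek's speech recognition SDK, with Android as the target platform.

Before writing it, I consulted the Unity + iFlytek speech recognition example published on the official iFlytek forum. Following that example makes it easy to get speech recognition working.

I won't paste the link here; if you want to read it, head to the official iFlytek forum and search for "unity".

If an official example already exists, why write my own? I don't want to be a mere copy-paster (well, maybe)...

The reason is that the forum post's approach requires adding an AndroidManifest.xml to the project. That didn't sit well with me: my project already had its own manifest, and it belongs to a complex system that isn't easy to change. Could I instead package the iFlytek speech code into a Jar and simply call it when needed?

Of course!

Enough talk, let's get started.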

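Step 1: Create an Android project (this should be familiar). Once the project exists, copy the libs folder from the Demo provided by iFlytek into the libs folder of the new project.

Step 2: Write the code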

package com.petit.voice_voice;

import java.util.HashMap;

import java.util.LinkedHashMap;

import org.json.JSONException;

import org.json.JSONObject;

import com.iflytek.cloud.InitListener;

import com.iflytek.cloud.RecognizerResult;

import com.iflytek.cloud.SpeechConstant;

import com.iflytek.cloud.SpeechError;

import com.iflytek.cloud.SpeechRecognizer;

import com.iflytek.cloud.SpeechUtility;

import com.iflytek.cloud.RecognizerListener;

import com.unity3d.player.UnityPlayer;

import android.app.Activity;

import android.os.Bundle;

import android.util.Log;

import android.widget.Toast;

public class MainActivity {

public SpeechRecognizer mSpeech;

static MainActivity instance=null;

// Store dictation results keyed by sentence number (sn); LinkedHashMap keeps them in arrival order

private HashMap<String, String> mIatResults = new LinkedHashMap<String, String>();

// Holds the Activity passed in from Unity; a Context would work here as well

private Activity activity = null;

public MainActivity(){

instance=this;

}

public static MainActivity instance(){

if(instance==null){

instance=new MainActivity();

}

return instance;

}

public void setContext(Activity act){

this.activity=act;

if(this.activity!=null){

try{

// Once Unity's activity is available, register the appId you obtained from iFlytek

SpeechUtility.createUtility(activity, "appid=XXXXXXX");

// Initialize the recognizer

mSpeech=SpeechRecognizer.createRecognizer(activity, mInitListener);

}

catch(Exception e){

// Use UnityPlayer.UnitySendMessage to report the error back to Unity (search online for usage details)

UnityPlayer.UnitySendMessage("Manager", "Init", "StartActivity0"+e.getMessage());

}

}else{

UnityPlayer.UnitySendMessage("Manager", "Init", "setContext is null");

}

}

public void StartActivity1(){

// Configure and start speech recognition

mSpeech.setParameter(SpeechConstant.DOMAIN,"iat");

mSpeech.setParameter(SpeechConstant.SAMPLE_RATE, "16000");

mSpeech.setParameter(SpeechConstant.LANGUAGE,"zh_cn");

int ret=mSpeech.startListening(recognizerListener);

Toast.makeText(activity, "startListening ret:"+ret, Toast.LENGTH_SHORT).show();

UnityPlayer.UnitySendMessage("Manager", "Init", "startListening ret:"+ret);

}

public RecognizerListener recognizerListener=new RecognizerListener(){

@Override

public void onBeginOfSpeech() {

// TODO Auto-generated method stub

}

@Override

public void onEndOfSpeech() {

// TODO Auto-generated method stub

}

@Override

public void onError(SpeechError arg0) {

// TODO Auto-generated method stub

Log.d("ss", "error"+arg0.getErrorCode());

}

@Override

public void onEvent(int arg0, int arg1, int arg2, Bundle arg3) {

// TODO Auto-generated method stub

}

@Override

public void onResult(RecognizerResult arg0, boolean arg1) {

// TODO Auto-generated method stub

Log.d("ss", "result:"+arg0.getResultString());

Toast.makeText(activity, "result:"+arg0.getResultString(), Toast.LENGTH_SHORT).show();

// UnityPlayer.UnitySendMessage("Manager", "Result", "result:"+arg0.getResultString());

printResult(arg0);

}

@Override

public void onVolumeChanged(int arg0, byte[] arg1) {

// TODO Auto-generated method stub

}

};

private void printResult(RecognizerResult results) {

String text = JsonParser.parseIatResult(results.getResultString());

String sn = null;

// Read the sn field from the JSON result. This requires JsonParser.java, which can be found in the Demo provided by iFlytek

try {

JSONObject resultJson = new JSONObject(results.getResultString());

sn = resultJson.optString("sn");

} catch (JSONException e) {

e.printStackTrace();

}

mIatResults.put(sn, text);

StringBuffer resultBuffer = new StringBuffer();

for (String key : mIatResults.keySet()) {

resultBuffer.append(mIatResults.get(key));

}

// Send the result back to the Result function in Unity

UnityPlayer.UnitySendMessage("Manager", "Result", "result:"+resultBuffer.toString());

}

private InitListener mInitListener=new InitListener(){

@Override

public void onInit(int arg0) {

// TODO Auto-generated method stub

if(arg0==0){

Log.d("ss", "login");

Toast.makeText(activity, "login", Toast.LENGTH_SHORT).show();

}else{

Log.d("ss","login error"+arg0);

Toast.makeText(activity, "login error"+arg0, Toast.LENGTH_SHORT).show();

}

}

};

}

That is really all there is on the Java side.
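
To make the role of mIatResults in printResult a bit more concrete, here is a minimal standalone sketch with no Android or iFlytek dependencies. It assumes the SDK delivers one utterance across several onResult callbacks, each carrying its own sn, and that a repeated sn should replace the earlier text for that segment. The class names SegmentAccumulator and SegmentAccumulatorDemo, the reset method, and the sample strings are hypothetical and only serve the illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper mirroring the mIatResults logic in printResult.
final class SegmentAccumulator {
    // LinkedHashMap preserves insertion order, so segments are joined in arrival order.
    private final Map<String, String> segments = new LinkedHashMap<>();

    // Stores (or overwrites) the text for one sn and returns the full text so far.
    String add(String sn, String text) {
        segments.put(sn, text);
        StringBuilder joined = new StringBuilder();
        for (String part : segments.values()) {
            joined.append(part);
        }
        return joined.toString();
    }

    // Clears all segments, for example before starting a new recognition session.
    void reset() {
        segments.clear();
    }
}

final class SegmentAccumulatorDemo {
    public static void main(String[] args) {
        SegmentAccumulator acc = new SegmentAccumulator();
        System.out.println(acc.add("1", "hello"));  // hello
        System.out.println(acc.add("2", " world")); // hello world
        // Re-using sn "1" replaces the first segment but keeps its position.
        System.out.println(acc.add("1", "Hello"));  // Hello world
    }
}
```

Note that the listing above never clears mIatResults, so segments from an earlier session may linger if a later session produces fewer of them. Clearing the map before each startListening call, as reset does in this sketch, is one way to avoid that.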

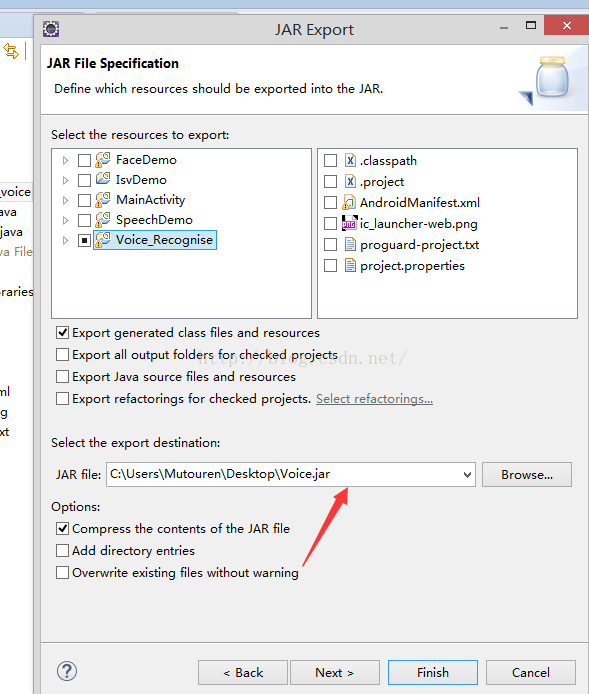

Step 3: Export the code as a Jar. We only need to package the source files we actually use; res, lib, and the rest can be left out.

Next, set the export path and click Finish.

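Step 4: Put the Jar into Unity, together with the iFlytek libraries we depend on; in practice, copying the libs folder into Unity is enough. Unity requires plugins to live in a specific location (typically Assets/Plugins/Android). The directory layout is as follows, and the Voice.jar in it is the Jar we just exported.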

Step 5: Write the calling code on the Unity side

using UnityEngine;

using UnityEngine.UI;

using System.Collections;

public class TestInstance : MonoBehaviour {

private string show = "sss";

private string showResult = "";

private AndroidJavaObject testobj = null;

private AndroidJavaObject playerActivityContext = null;

void Start()

{

}

void OnGUI()

{

if (GUILayout.Button("login", GUILayout.Height(100)))

{

if (testobj == null)

{

// First, obtain the current activity context (this part is borrowed from a developer abroad)

using (var actClass = new AndroidJavaClass("com.unity3d.player.UnityPlayer"))

{// Get the current activity and keep a reference to it

playerActivityContext = actClass.GetStatic<AndroidJavaObject>("currentActivity");

}

// Pass the context to the singleton instance of the Java class we wrote above

using (var pluginClass = new AndroidJavaClass("com.petit.voice_voice.MainActivity"))

{

if (pluginClass != null && playerActivityContext!=null)

{// Call the Java method and pass the activity in

testobj = pluginClass.CallStatic<AndroidJavaObject>("instance");

testobj.Call("setContext", playerActivityContext);

}

}

}

}

if (GUILayout.Button("startRecognizer", GUILayout.Height(100)))

{

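// Call the recognition function; testobj only exists after "login" has been pressed

if (testobj != null)

{

testobj.Call("StartActivity1");

show += "StartActivity1";

}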

}

GUILayout.TextArea(show + showResult, GUILayout.Width(200));

}

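// These two functions should look familiar: they receive the messages sent by UnityPlayer.UnitySendMessage. Create an empty GameObject named Manager and attach this script to it, otherwise the messages will not arrive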

public void Init(string hh)

{

show = hh;

}

public void Result(string hh)

{

showResult = hh;

}

}

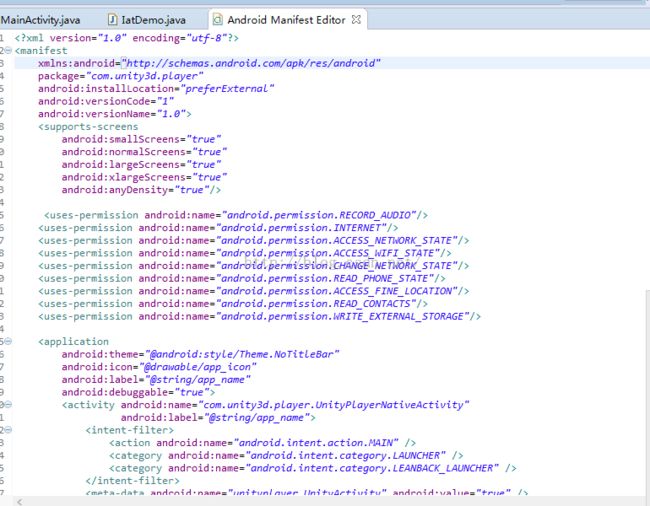

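Step 6: Think you're done after the previous step? Not quite. Once exported, the app runs but produces no result. If you manage to print the error log, you will find that permissions are missing: Unity automatically generates an AndroidManifest.xml when exporting for Android, and that manifest does not include the permissions speech recognition needs, so they have to be added by hand.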

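On my machine, Unity keeps its Android player files under D:\Program Files (x86)\Unity\Editor\Data\PlaybackEngines\androidplayer. In that directory, find AndroidManifest.xml. This is the template for the generated manifest; add the permissions you need to it.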
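
As a rough aid for this step, the sketch below reads a manifest's text and reports which permissions from a given list are not declared. The permission list is an assumption: RECORD_AUDIO and INTERNET are clearly needed for online recognition from the microphone, and ACCESS_NETWORK_STATE is included from memory of iFlytek's demo. The AndroidManifest.xml in the iFlytek Demo remains the authoritative list. The class names ManifestPermissionCheck and ManifestPermissionCheckDemo are hypothetical, and the code runs on a desktop JVM, not inside the app.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical desktop-side helper; not part of Unity or the iFlytek SDK.
final class ManifestPermissionCheck {
    // Assumed list; verify it against the manifest shipped with the iFlytek Demo.
    static final String[] ASSUMED_REQUIRED = {
        "android.permission.RECORD_AUDIO",
        "android.permission.INTERNET",
        "android.permission.ACCESS_NETWORK_STATE",
    };

    // Returns the entries of 'required' that have no <uses-permission> element in the manifest.
    static List<String> missingPermissions(String manifestXml, String[] required) {
        Set<String> declared = new HashSet<>();
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(manifestXml.getBytes(StandardCharsets.UTF_8)));
            NodeList nodes = doc.getElementsByTagName("uses-permission");
            for (int i = 0; i < nodes.getLength(); i++) {
                declared.add(((Element) nodes.item(i)).getAttribute("android:name"));
            }
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse manifest XML", e);
        }
        List<String> missing = new ArrayList<>();
        for (String permission : required) {
            if (!declared.contains(permission)) {
                missing.add(permission);
            }
        }
        return missing;
    }
}

final class ManifestPermissionCheckDemo {
    public static void main(String[] args) {
        // Sample manifest that only declares INTERNET.
        String manifest =
            "<manifest xmlns:android=\"http://schemas.android.com/apk/res/android\">"
          + "<uses-permission android:name=\"android.permission.INTERNET\"/>"
          + "</manifest>";
        // Lists the assumed permissions that the sample manifest does not declare.
        System.out.println(ManifestPermissionCheck.missingPermissions(
                manifest, ManifestPermissionCheck.ASSUMED_REQUIRED));
    }
}
```

Each missing name corresponds to one `<uses-permission android:name="..."/>` line to add to the template manifest.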

OK, that's all for now.

Download link attached:

Click here