SparkR Environment Setup

------------------------------------------Contents----------------------------------------------------------

Installing R

Installing RStudio

Starting SparkR

Using SparkR from RStudio

------------------------------------------------------------------------------------------------------

----------------------------------------step1: Install R------------------------------------------------------

sudo apt-get update

sudo apt-get install r-base

To start R, type R at the terminal prompt.
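Before moving on, it can help to confirm that the install actually put R on the PATH. The following is a minimal sketch, not part of the original steps: `check_cmd` is a hypothetical helper name, and it only reports whether a command can be found.

```shell
# Report whether a command is available on the PATH.
# check_cmd is a hypothetical helper introduced for this example.
check_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "$1 found at $(command -v "$1")"
    else
        echo "$1 not found on PATH"
        return 1
    fi
}

# R is the interactive interpreter; Rscript runs R scripts non-interactively.
check_cmd R || true
check_cmd Rscript || true
```

If R is found, running `R --version` prints the installed version.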

----------------------------------------------step2: Install RStudio------------------------------------------------------

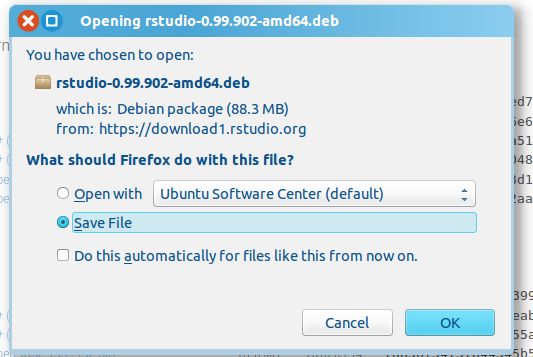

1. Download the version that matches your system from the official site: https://www.rstudio.com/products/rstudio/download/

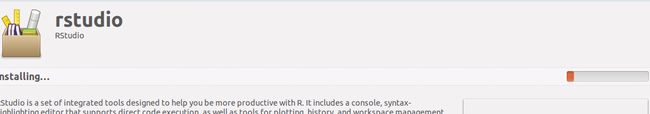

Double-click the downloaded file, then choose Install.

---------------------------------Start SparkR-----------------------------------------------------------

cd into the bin directory of your Spark installation (the directory that contains the sparkR script), then run:

./sparkR
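If the launcher cannot be found, the usual cause is running from the wrong directory. As a rough sketch, the script below resolves the launcher path from SPARK_HOME and checks that it is executable. The default path is the one used in the RStudio section of this guide and is an assumption about your install; `sparkr_launcher` is a hypothetical helper name.

```shell
# Assumed default: the Spark path used in the RStudio section of this guide.
SPARK_HOME="${SPARK_HOME:-/usr/local/spark/spark-1.6.0-bin-hadoop2.6}"

# Print the path of the sparkR launcher for a given Spark home.
# sparkr_launcher is a hypothetical helper introduced for this example.
sparkr_launcher() {
    echo "$1/bin/sparkR"
}

launcher="$(sparkr_launcher "$SPARK_HOME")"
if [ -x "$launcher" ]; then
    echo "Start the shell with: $launcher"
else
    echo "No executable found at $launcher; check SPARK_HOME"
fi
```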

--------------------------------------end---------------------------------------------------------------

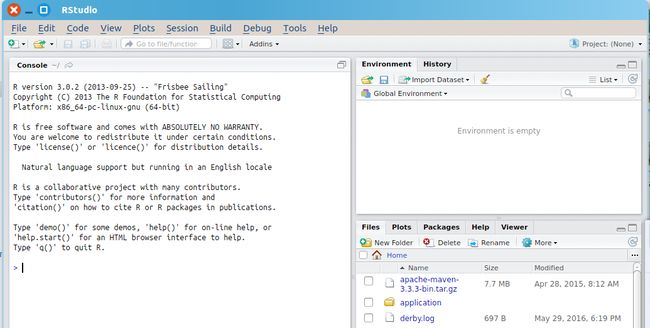

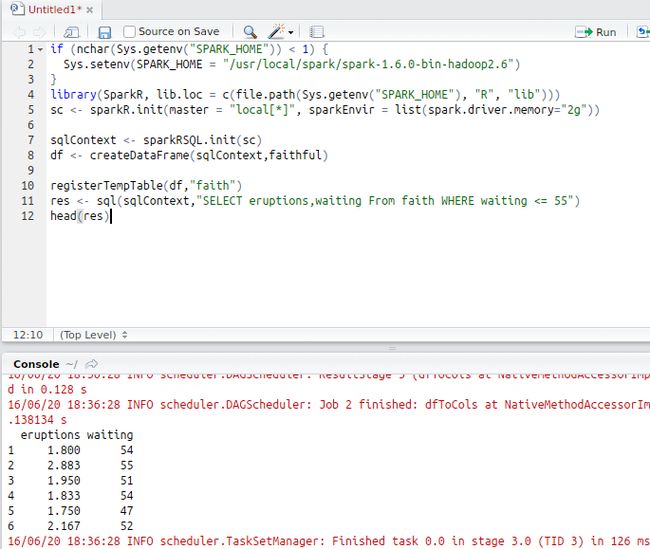

-----------------------------Using SparkR from RStudio-------------------------------------------------------------------------------

Prerequisite: the Spark cluster must be running.
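The original does not say how the cluster is started. Assuming a standalone Spark deployment, a sketch of the check might look like this. The `start-all.sh` script under `sbin` and the default master port 7077 belong to standalone mode; the default SPARK_HOME and the `master_url` helper are assumptions made for illustration.

```shell
# Assumed default: the Spark path used in the R code of this guide.
SPARK_HOME="${SPARK_HOME:-/usr/local/spark/spark-1.6.0-bin-hadoop2.6}"

# Build a standalone master URL; 7077 is the default standalone master port.
# master_url is a hypothetical helper introduced for this example.
master_url() {
    echo "spark://$1:${2:-7077}"
}

start_script="$SPARK_HOME/sbin/start-all.sh"
if [ -x "$start_script" ]; then
    echo "Start the standalone cluster with: $start_script"
else
    echo "No start script at $start_script; check SPARK_HOME"
fi

# HOSTNAME may be unset in some shells, so fall back to localhost.
echo "Master URL: $(master_url "${HOSTNAME:-localhost}")"
```

The R code in this section passes `master = "local[*]"`, which runs Spark inside the local process. To use the cluster instead, the master URL printed here would take the place of `local[*]`.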

if (nchar(Sys.getenv("SPARK_HOME")) < 1) {

Sys.setenv(SPARK_HOME = "/usr/local/spark/spark-1.6.0-bin-hadoop2.6")

}

library(SparkR, lib.loc = c(file.path(Sys.getenv("SPARK_HOME"), "R", "lib")))

sc <- sparkR.init(master = "local[*]", sparkEnvir = list(spark.driver.memory="2g"))

sqlContext <- sparkRSQL.init(sc)

df <- createDataFrame(sqlContext, faithful)

registerTempTable(df, "faith")

res <- sql(sqlContext, "SELECT eruptions, waiting FROM faith WHERE waiting <= 55")

head(res)

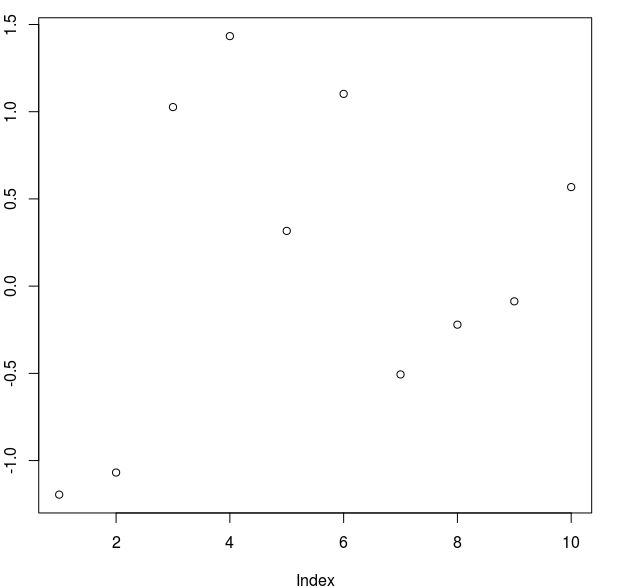

If you see output like that in the screenshot, the setup works!

-----------------------------------------------------------------------------------------------------------------------