Learning Ceph: Source Code Analysis of the Librados and Osdc Implementation

- Librados

- RadosClient class

- IoctxImpl

- AioCompletionImpl

- OSDC

- ObjectOperation: encapsulating operations

- op_target: encapsulating PG information

- Op: encapsulating operation information

- Striping: Striper

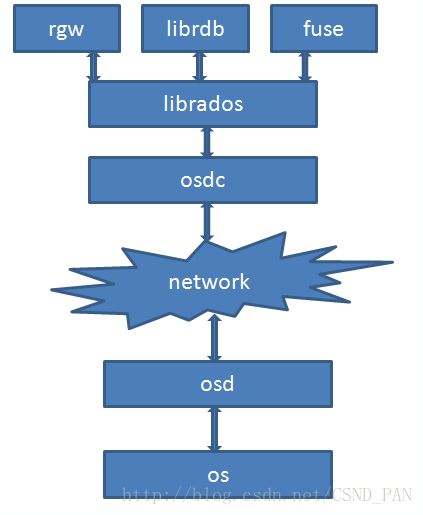

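This article describes the implementation of some modules on the Ceph client side. The client mainly implements the interfaces that provide external access, so that upper layers can reach Ceph storage through them. Librados and Osdc sit at a fairly low level of the Ceph client: Librados provides basic interfaces such as creating and deleting pools and creating and deleting objects, while Osdc encapsulates operations, computes object locations, sends requests, and handles timeouts, as shown in the figure above.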

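Based on the LIBRADOS architecture diagram, the overall flow of events can be outlined. The book Ceph分布式存储实战 (Ceph Distributed Storage in Practice) contains the following passage: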

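First, LIBRADOS is called with the configuration file to create a RADOS instance; next, a radosclient is created for this RADOS instance. The radosclient contains three main modules (Finisher, Messenger, Objecter). Then an ioctx is created for the corresponding pool, and the radosclient can be reached from the ioctx. Finally, OSDC is called to generate the corresponding OSD request, which communicates with the OSD and handles the response. This outlines, at a high level, the roles librados and osdc play in Ceph as a whole.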

Librados

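This module consists of two parts: the RadosClient module and IoctxImpl. RadosClient sits at the top and is the core management class of librados; it handles management both at the level of the whole RADOS system and at the pool level. IoctxImpl, in turn, manages a single pool, for example controlling read and write operations on its objects.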
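The split between a cluster-level client and per-pool contexts can be shown with a minimal sketch, assuming a toy in-memory pool table. MiniRadosClient, MiniIoCtx and add_pool are hypothetical names for illustration; create_ioctx and lookup_pool only mirror the shape of the RadosClient functions discussed later in this article, and the real IoCtxImpl additionally holds the Objecter pointer and snapshot state.

```cpp
#include <cassert>
#include <cerrno>
#include <cstdint>
#include <map>
#include <memory>
#include <string>

class MiniRadosClient;

// Hypothetical per-pool context: one instance per pool, like IoCtxImpl.
struct MiniIoCtx {
  MiniRadosClient *client;  // back-pointer: the client is reachable from the ioctx
  int64_t poolid;           // the single pool this context manages
};

// Hypothetical client: owns cluster-level state such as the pool table.
class MiniRadosClient {
 public:
  void add_pool(const std::string &name, int64_t id) { pools_[name] = id; }

  // Returns the pool id, or -ENOENT if no pool has this name.
  int64_t lookup_pool(const std::string &name) const {
    auto it = pools_.find(name);
    return it == pools_.end() ? -ENOENT : it->second;
  }

  // Same shape as RadosClient::create_ioctx: look up the pool, then construct.
  int create_ioctx(const std::string &name, std::unique_ptr<MiniIoCtx> *io) {
    int64_t poolid = lookup_pool(name);
    if (poolid < 0)
      return static_cast<int>(poolid);
    io->reset(new MiniIoCtx{this, poolid});
    return 0;
  }

 private:
  std::map<std::string, int64_t> pools_;  // pool name -> pool id
};
```

Each pool gets its own small context object, while name lookup and error reporting stay in the client.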

RadosClient class

First, the header file RadosClient.h:

class librados::RadosClient : public Dispatcher // inherits from Dispatcher (the message dispatch class)

{

std::unique_ptr<CephContext, std::function<void(CephContext*)> > cct_deleter; // unique_ptr smart pointer with a custom deleter

public:

using Dispatcher::cct;

md_config_t *conf; // configuration

private:

enum {

DISCONNECTED,

CONNECTING,

CONNECTED,

} state; // network connection state

MonClient monclient;

MgrClient mgrclient;

Messenger *messenger; // key member: network messaging interface

uint64_t instance_id;

// message dispatch: overrides of Dispatcher member functions

bool _dispatch(Message *m);

...

...

bool ms_handle_refused(Connection *con) override;

Objecter *objecter; // key member: part of the Osdc module, sends the encapsulated OP messages

Mutex lock; // mutex

Cond cond;

SafeTimer timer; // timer

int refcnt;

...

...

public:

Finisher finisher; // key member: class that executes callback functions

...

...

// create the context (IoCtxImpl) for a pool

int create_ioctx(const char *name, IoCtxImpl **io);

int create_ioctx(int64_t, IoCtxImpl **io);

int get_fsid(std::string *s);

... // pool-related operations

...

bool get_pool_is_selfmanaged_snaps_mode(const std::string& pool);

// create a pool synchronously or asynchronously

int pool_create(string& name, unsigned long long auid=0, int16_t crush_rule=-1);

int pool_create_async(string& name, PoolAsyncCompletionImpl *c, unsigned long long auid=0,

int16_t crush_rule=-1);

int pool_get_base_tier(int64_t pool_id, int64_t* base_tier);

// delete a pool synchronously or asynchronously

int pool_delete(const char *name);

int pool_delete_async(const char *name, PoolAsyncCompletionImpl *c);

int blacklist_add(const string& client_address, uint32_t expire_seconds);

// Monitor commands: call monclient.start_mon_command to send the command to a Monitor

int mon_command(const vector<string>& cmd, const bufferlist &inbl,

bufferlist *outbl, string *outs);

void mon_command_async(const vector<string>& cmd, const bufferlist &inbl,

bufferlist *outbl, string *outs, Context *on_finish);

int mon_command(int rank,

const vector<string>& cmd, const bufferlist &inbl,

bufferlist *outbl, string *outs);

int mon_command(string name,

const vector<string>& cmd, const bufferlist &inbl,

bufferlist *outbl, string *outs);

int mgr_command(const vector<string>& cmd, const bufferlist &inbl,

bufferlist *outbl, string *outs);

// OSD commands: call objecter->osd_command to send the command to an OSD

int osd_command(int osd, vector<string>& cmd, const bufferlist& inbl,

bufferlist *poutbl, string *prs);

// PG commands: call objecter->pg_command to send the command to the OSD

int pg_command(pg_t pgid, vector<string>& cmd, const bufferlist& inbl,

bufferlist *poutbl, string *prs);

...

...

...

};

Next, let's look at some of these functions.

connect() is the initialization function of RadosClient.
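The function below runs a fixed sequence of setup steps, jumps to out on the first error, and only sets state = CONNECTED near the end. That control flow can be modeled with a minimal sketch, assuming each step is a callable returning 0 or a negative errno. MiniConnector and the step names are hypothetical, and the model deliberately leaves out what the real steps do (creating the Messenger and Objecter, registering dispatchers, authenticating with the Monitor).

```cpp
#include <cassert>
#include <cerrno>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical model of the control flow in RadosClient::connect().
class MiniConnector {
 public:
  enum State { DISCONNECTED, CONNECTING, CONNECTED };

  // Register a named setup step; each step returns 0 or a negative errno.
  void add_step(std::string name, std::function<int()> fn) {
    steps_.emplace_back(std::move(name), std::move(fn));
  }

  // Run the steps in order and stop at the first failure
  // (the source expresses this with "goto out").
  int connect() {
    state_ = CONNECTING;
    for (auto &step : steps_) {
      attempted_.push_back(step.first);
      int err = step.second();
      if (err < 0) {
        state_ = DISCONNECTED;  // in this model, a failure resets the state
        return err;
      }
    }
    state_ = CONNECTED;  // reached only if every step succeeded
    return 0;
  }

  State state() const { return state_; }
  const std::vector<std::string> &attempted() const { return attempted_; }

 private:
  State state_ = DISCONNECTED;
  std::vector<std::pair<std::string, std::function<int()>>> steps_;
  std::vector<std::string> attempted_;  // names of the steps that were run, in order
};
```

In this model the step names would correspond to calls such as monclient.build_initial_monmap(), monclient.init() and monclient.authenticate() in the source.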

int librados::RadosClient::connect()

{

common_init_finish(cct);

int err;

...

...

// get monmap

err = monclient.build_initial_monmap(); // read the initial Monitor information from the configuration

if (err < 0)

goto out;

err = -ENOMEM;

messenger = Messenger::create_client_messenger(cct, "radosclient"); // create the messaging module

if (!messenger)

goto out;

// set the default connection policy

messenger->set_default_policy(Messenger::Policy::lossy_client(CEPH_FEATURE_OSDREPLYMUX));

ldout(cct, 1) << "starting msgr at " << messenger->get_myaddr() << dendl;

ldout(cct, 1) << "starting objecter" << dendl;

// create and initialize the objecter

objecter = new (std::nothrow) Objecter(cct, messenger, &monclient,

&finisher,

cct->_conf->rados_mon_op_timeout,

cct->_conf->rados_osd_op_timeout);

if (!objecter)

goto out;

objecter->set_balanced_budget();

monclient.set_messenger(messenger);

mgrclient.set_messenger(messenger);

objecter->init();

messenger->add_dispatcher_head(&mgrclient);

messenger->add_dispatcher_tail(objecter);

messenger->add_dispatcher_tail(this);

messenger->start();

ldout(cct, 1) << "setting wanted keys" << dendl;

monclient.set_want_keys(

CEPH_ENTITY_TYPE_MON | CEPH_ENTITY_TYPE_OSD | CEPH_ENTITY_TYPE_MGR);

ldout(cct, 1) << "calling monclient init" << dendl;

// initialize monclient

err = monclient.init();

...

err = monclient.authenticate(conf->client_mount_timeout);

...

objecter->set_client_incarnation(0);

objecter->start();

lock.Lock();

// initialize the timer

timer.init();

// start the finisher

finisher.start();

state = CONNECTED;

instance_id = monclient.get_global_id();

lock.Unlock();

ldout(cct, 1) << "init done" << dendl;

err = 0;

out:

...

...

return err;

}

create_ioctx() creates an IoCtxImpl object, the context associated with a pool.

int librados::RadosClient::create_ioctx(const char *name, IoCtxImpl **io)

{

int64_t poolid = lookup_pool(name);

if (poolid < 0) {

return (int)poolid;

}

*io = new librados::IoCtxImpl(this, objecter, poolid, CEPH_NOSNAP);

return 0;

}

mon_command() handles Monitor-related commands; its asynchronous variant, mon_command_async(), is implemented as follows:

void librados::RadosClient::mon_command_async(const vector<string>& cmd,

const bufferlist &inbl,

bufferlist *outbl, string *outs,

Context *on_finish)

{

lock.Lock();

monclient.start_mon_command(cmd, inbl, outbl, outs, on_finish); // send the command to a Monitor

lock.Unlock();

}

osd_command() handles OSD-related commands.
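osd_command() turns the asynchronous objecter->osd_command() into a blocking call: it passes a C_SafeCond callback that records ret and sets done under mylock, then waits on cond until done is true. A minimal sketch of the same pattern in standard C++, assuming std::mutex and std::condition_variable in place of Ceph's Mutex and Cond; SafeCond and async_op are hypothetical stand-ins for C_SafeCond and the Objecter call, and the asynchronous work is simulated with a std::thread.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <thread>

// Hypothetical analogue of C_SafeCond: stores the result and wakes the waiter.
struct SafeCond {
  std::mutex *lock;
  std::condition_variable *cond;
  bool *done;
  int *rval;

  void complete(int r) {
    std::lock_guard<std::mutex> l(*lock);
    *rval = r;
    *done = true;
    cond->notify_all();
  }
};

// Hypothetical async API: finishes on another thread, then calls back.
std::thread async_op(int result, SafeCond *onfinish) {
  return std::thread([result, onfinish] { onfinish->complete(result); });
}

// Blocking wrapper in the style of RadosClient::osd_command().
int sync_op(int result) {
  std::mutex mylock;
  std::condition_variable cond;
  bool done = false;
  int ret = 0;
  SafeCond c{&mylock, &cond, &done, &ret};

  std::thread t = async_op(result, &c);
  {
    std::unique_lock<std::mutex> l(mylock);
    cond.wait(l, [&done] { return done; });  // predicate guards against spurious wakeups
  }
  t.join();
  return ret;
}
```

The loop `while (!done) cond.Wait(mylock);` in the source plays the same role as the predicate passed to cond.wait() here.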

int librados::RadosClient::osd_command(int osd, vector<string>& cmd,

const bufferlist& inbl,

bufferlist *poutbl, string *prs)

{

Mutex mylock("RadosClient::osd_command::mylock");

Cond cond;

bool done;

int ret;

ceph_tid_t tid;

if (osd < 0)

return -EINVAL;

lock.Lock();

// call objecter->osd_command to send the command to the OSD

objecter->osd_command(osd, cmd, inbl, &tid, poutbl, prs,

new C_SafeCond(&mylock, &cond, &done, &ret));

lock.Unlock();

mylock.Lock();

while (!done)

cond.Wait(mylock);

mylock.Unlock();

return ret;

}

IoctxImpl

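This class holds the context of a pool; each pool corresponds to one IoctxImpl object. All IO-related APIs in librados are declared in librados::IoCtx, and their actual implementation lives in IoCtxImpl. The processing flow is as follows: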

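1) Wrap the request in an ObjectOperation (a class from osdc).

2) Add the relevant pool information and wrap it into an Objecter::Op object.

3) Call objecter->op_submit to send it to the corresponding OSD.

4) When the operation completes, invoke the corresponding callback.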
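The four steps above can be pictured with a small single-threaded model, assuming an in-memory map stands in for the OSDs: the request is collected into an operation list, wrapped together with the pool id and object name into an op that receives a transaction id on submission, and the caller's callback finally receives the result code. MiniObjectOperation, MiniOp, MiniObjecter and this op_submit signature are hypothetical; in Ceph the Op carries an op_target_t and the Objecter sends it over the network.

```cpp
#include <cassert>
#include <cerrno>
#include <cstdint>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Step 1 (stand-in for ObjectOperation): the requested sub-operations.
struct MiniObjectOperation {
  std::vector<std::string> writes;  // data for each full-object write, in order
  void write_full(std::string data) { writes.push_back(std::move(data)); }
};

// Step 2 (stand-in for Objecter::Op): operations plus the target pool and object.
struct MiniOp {
  uint64_t tid;                       // assigned on submit
  int64_t pool;                       // pool id taken from the ioctx
  std::string oid;                    // target object name
  MiniObjectOperation ops;
  std::function<void(int)> onfinish;  // step 4: completion callback
};

// Step 3 (stand-in for the Objecter): "sends" ops to an in-memory store.
class MiniObjecter {
 public:
  uint64_t op_submit(MiniOp op) {
    op.tid = ++last_tid_;
    int r = 0;
    if (op.pool < 0) {
      r = -ENOENT;  // no such pool
    } else {
      for (auto &data : op.ops.writes)
        store_[std::make_pair(op.pool, op.oid)] = data;
    }
    if (op.onfinish)
      op.onfinish(r);  // step 4: hand the result back to the caller
    return op.tid;
  }

  std::string read(int64_t pool, const std::string &oid) const {
    auto it = store_.find(std::make_pair(pool, oid));
    return it == store_.end() ? std::string() : it->second;
  }

 private:
  uint64_t last_tid_ = 0;
  std::map<std::pair<int64_t, std::string>, std::string> store_;
};
```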

AioCompletionImpl

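Aio stands for Async IO, and AioCompletion for Async IO Completion, that is, the callback handling performed when an asynchronous IO completes. librados provides AioCompletion as a mechanism for handling the result code when an Aio finishes, while the handler itself is implemented by the user. AioCompletion is the public library API exposed by librados; the actual logic lives in AioCompletionImpl.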

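Every use of an AioCompletion instance goes through its pc member, which points to an AioCompletionImpl, so the real question is how AioCompletionImpl is wrapped. As noted above, all IO APIs in librados are declared in librados::IoCtx and implemented in IoCtxImpl. Since AioCompletionImpl is the callback for IO operations, its wrapping is likewise designed in the IoCtxImpl module.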

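For a detailed analysis of the callback mechanism, see: ceph源代码分析之librados:1. AioCompletion回调机制分析 (Ceph source code analysis of librados, part 1: the AioCompletion callback mechanism).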
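The core idea of an AIO completion object can be sketched in standard C++, assuming a mutex and condition variable: the IO path calls complete(r) once, which stores the return code, wakes any thread blocked in wait_for_complete(), and then runs the user-supplied handler. MiniAioCompletion and its members are hypothetical; the method names echo librados AioCompletion (is_complete(), wait_for_complete(), get_return_value()), but this is not the AioCompletionImpl source, which also tracks reference counts and runs callbacks through the client's machinery.

```cpp
#include <cassert>
#include <condition_variable>
#include <functional>
#include <mutex>
#include <utility>

// Hypothetical completion object in the spirit of librados' AioCompletion.
class MiniAioCompletion {
 public:
  using Callback = std::function<void(MiniAioCompletion *, void *)>;

  MiniAioCompletion(void *arg, Callback cb) : arg_(arg), cb_(std::move(cb)) {}

  // Called once by the IO path when the operation finishes.
  void complete(int r) {
    {
      std::lock_guard<std::mutex> l(lock_);
      rval_ = r;
      complete_ = true;
    }
    cond_.notify_all();
    if (cb_)
      cb_(this, arg_);  // user-supplied handler, run outside the lock
  }

  void wait_for_complete() {
    std::unique_lock<std::mutex> l(lock_);
    cond_.wait(l, [this] { return complete_; });
  }

  bool is_complete() {
    std::lock_guard<std::mutex> l(lock_);
    return complete_;
  }

  int get_return_value() {
    std::lock_guard<std::mutex> l(lock_);
    return rval_;
  }

 private:
  std::mutex lock_;
  std::condition_variable cond_;
  bool complete_ = false;
  int rval_ = 0;
  void *arg_;    // opaque user argument passed back to the handler
  Callback cb_;
};
```

Running the handler outside the lock lets it call get_return_value() without deadlocking.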

OSDC

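This is a relatively low-level module of the client. It encapsulates operation data, computes object locations, sends requests, and handles timeouts.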

ObjectOperation: encapsulating operations

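This class gathers all the parameters of an operation in one place, and it can hold several operations at once. The code is far too long to walk through here, so this part just summarizes the book.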
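Because one ObjectOperation can carry several sub-operations, a caller can, for example, write data, set an xattr and read back in a single request, with each sub-operation getting its own result slot. A minimal sketch of that batching idea, assuming simplified stand-ins: MiniOSDOp mirrors the op/indata/outdata/rval fields of the OSDOp structure in the source, while the OpCode enum, MiniObjectOperation, MiniObject and the local apply_batch() function that plays the OSD's role are hypothetical.

```cpp
#include <cassert>
#include <cerrno>
#include <cstdint>
#include <map>
#include <string>
#include <vector>

// Hypothetical opcodes; real ones are CEPH_OSD_OP_* values carried in ceph_osd_op.
enum class OpCode { WriteFull, SetXattr, Read };

// Simplified OSDOp: opcode, input, output and a per-op result code.
struct MiniOSDOp {
  OpCode op;
  std::string name;     // xattr name (unused by data ops)
  std::string indata;
  std::string outdata;
  int32_t rval = 0;
};

// Simplified ObjectOperation: an ordered batch of sub-operations.
struct MiniObjectOperation {
  std::vector<MiniOSDOp> ops;
  void write_full(const std::string &data) { ops.push_back({OpCode::WriteFull, "", data, ""}); }
  void setxattr(const std::string &n, const std::string &v) { ops.push_back({OpCode::SetXattr, n, v, ""}); }
  void read() { ops.push_back({OpCode::Read, "", "", ""}); }
};

// Toy target object.
struct MiniObject {
  std::string data;
  std::map<std::string, std::string> xattrs;
};

// Toy "OSD": applies the batch in order and stops at the first failing sub-op.
// This toy does not roll back sub-ops that already ran.
int apply_batch(MiniObject &obj, MiniObjectOperation &op) {
  for (auto &o : op.ops) {
    switch (o.op) {
      case OpCode::WriteFull:
        obj.data = o.indata;
        break;
      case OpCode::SetXattr:
        if (o.name.empty()) {
          o.rval = -EINVAL;
          return o.rval;
        }
        obj.xattrs[o.name] = o.indata;
        break;
      case OpCode::Read:
        o.outdata = obj.data;  // output lands in the sub-op's own buffer
        break;
    }
    o.rval = 0;
  }
  return 0;
}
```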

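struct ObjectOperation {

vector<OSDOp> ops;

...

};

The OSDOp class encapsulates one operation on an object, and the ceph_osd_op structure holds the opcode together with its input and output parameters: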

struct OSDOp {

ceph_osd_op op; // opcode and operation parameters

sobject_t soid;

bufferlist indata, outdata; // input and output data

int32_t rval; // operation result

};

op_target: encapsulating PG information

This structure encapsulates the PG in which the object resides, the list of OSDs for that PG, and related information.
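The pg_num and pg_num_mask fields in the structure below record how an object's hash was folded onto a PG. A minimal sketch of that folding step, assuming a placeholder hash: ceph_stable_mod() and the mask formula follow Ceph's definitions (pg_num_mask is the smallest 2^k - 1 covering pg_num - 1), but Ceph hashes the object name with rjenkins rather than std::hash, and the later CRUSH step that produces the up and acting OSD lists is not modeled. object_to_ps is a hypothetical helper name.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <string>

// Ceph's stable modulo: fold x into [0, b) using the mask of the next power of two.
inline int ceph_stable_mod(int x, int b, int bmask) {
  if ((x & bmask) < b)
    return x & bmask;
  return x & (bmask >> 1);
}

// Number of bits needed to represent v (cbits(0) == 0).
inline unsigned cbits(unsigned v) {
  unsigned n = 0;
  while (v) {
    ++n;
    v >>= 1;
  }
  return n;
}

// pg_num_mask: the smallest 2^k - 1 that is >= pg_num - 1.
inline unsigned calc_pg_num_mask(unsigned pg_num) {
  return (1u << cbits(pg_num - 1)) - 1;
}

// Hypothetical helper: object name -> placement seed (PG number) within a pool.
// std::hash stands in for Ceph's rjenkins hash of the object name.
inline unsigned object_to_ps(const std::string &oid, unsigned pg_num) {
  unsigned h = static_cast<unsigned>(std::hash<std::string>{}(oid)) & 0x7fffffffu;
  return static_cast<unsigned>(ceph_stable_mod(static_cast<int>(h), static_cast<int>(pg_num),
                                               static_cast<int>(calc_pg_num_mask(pg_num))));
}
```

This is why the mapping is called stable: raising pg_num from 8 to 12 changes the result only for hashes whose low four bits fall in 8..11; every other object keeps its PG.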

struct op_target_t {

int flags = 0;

epoch_t epoch = 0; ///< latest epoch we calculated the mapping

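object_t base_oid; // the object to read

object_locator_t base_oloc; // pool information of the object

object_t target_oid; // the target object that is actually read

object_locator_t target_oloc; // pool information of the final target object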

///< true if we are directed at base_pgid, not base_oid

bool precalc_pgid = false;

///< true if we have ever mapped to a valid pool

bool pool_ever_existed = false;

///< explicit pg target, if any

pg_t base_pgid;

pg_t pgid; ///< last (raw) pg we mapped to

spg_t actual_pgid; ///< last (actual) spg_t we mapped to

unsigned pg_num = 0; ///< last pg_num we mapped to

unsigned pg_num_mask = 0; ///< last pg_num_mask we mapped to

vector<int> up; ///< set of up osds for last pg we mapped to

vector<int> acting; ///< set of acting osds for last pg we mapped to

int up_primary = -1; ///< last up_primary we mapped to

int acting_primary = -1; ///< last acting_primary we mapped to

int size = -1; ///< the size of the pool when we were last mapped

int min_size = -1; ///< the min size of the pool when we were last mapped

bool sort_bitwise = false; ///< whether the hobject_t sort order is bitwise

bool recovery_deletes = false; ///< whether the deletes are performed during recovery instead of peering

...

...

...

};

Op: encapsulating operation information

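This structure encapsulates the context needed to complete an operation, including the target address information and connection information.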

struct Op : public RefCountedObject {

OSDSession *session; // the OSD session; a session holds the connection information

int incarnation;

op_target_t target; // target address information

ConnectionRef con; // for rx buffer only

uint64_t features; // explicitly specified op features

vector<OSDOp> ops; // the operations to perform

...

};

Striping: Striper

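When a file is mapped to objects and those objects are striped, this class performs the striping and records the resulting stripe information.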

class Striper {

public:

/*

* map (ino, layout, offset, len) to a (list of) ObjectExtents (byte

* ranges in objects on (primary) osds). This function maps a file range onto the striped objects.

*/

static void file_to_extents(CephContext *cct, const char *object_format,

const file_layout_t *layout,

uint64_t offset, uint64_t len,

uint64_t trunc_size,

map<object_t, vector<ObjectExtent> >& extents,

uint64_t buffer_offset=0);

};

Here, ObjectExtent stores the stripe information within an object.

class ObjectExtent {

public:

object_t oid; // object id

uint64_t objectno; // object (stripe) number

uint64_t offset; // offset within the object

uint64_t length; // length

uint64_t truncate_size; // in object

object_locator_t oloc; // object locator (pool etc.), i.e. which pool the object is in

};
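The arithmetic behind file_to_extents() follows the RAID-0 style layout described by the stripe_unit, stripe_count and object_size fields of file_layout_t. The sketch below reproduces that mapping for one file range under simplifying assumptions: it ignores trunc_size and buffer_offset, returns object numbers instead of formatted object names, and does not merge contiguous pieces that fall into the same object. MiniLayout, MiniExtent and mini_file_to_extents are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Simplified layout, mirroring the three striping fields of file_layout_t.
struct MiniLayout {
  uint64_t stripe_unit;   // bytes per chunk
  uint64_t stripe_count;  // number of objects one stripe spans
  uint64_t object_size;   // bytes per object (a multiple of stripe_unit)
};

// Reduced ObjectExtent: which object, where inside it, and how much.
struct MiniExtent {
  uint64_t objectno;
  uint64_t offset;  // offset inside the object
  uint64_t length;
};

// Map the file range [offset, offset + len) to per-object extents,
// emitting one extent per stripe-unit-sized piece (no merging).
std::vector<MiniExtent> mini_file_to_extents(const MiniLayout &l, uint64_t offset, uint64_t len) {
  std::vector<MiniExtent> out;
  const uint64_t su = l.stripe_unit;
  const uint64_t stripes_per_object = l.object_size / su;
  uint64_t cur = offset, left = len;
  while (left > 0) {
    uint64_t blockno = cur / su;                    // stripe unit index in the file
    uint64_t stripeno = blockno / l.stripe_count;   // which stripe (row)
    uint64_t stripepos = blockno % l.stripe_count;  // which object within the stripe
    uint64_t objectsetno = stripeno / stripes_per_object;
    uint64_t objectno = objectsetno * l.stripe_count + stripepos;
    uint64_t block_start = (stripeno % stripes_per_object) * su;
    uint64_t block_off = cur % su;
    uint64_t x_len = std::min(left, su - block_off);
    out.push_back({objectno, block_start + block_off, x_len});
    cur += x_len;
    left -= x_len;
  }
  return out;
}
```

With stripe_unit 4, stripe_count 2 and object_size 8, bytes 0..19 of the file land in objects 0, 1, 0, 1, 2: the first 16 bytes fill one object set (objects 0 and 1) before the mapping moves on to object 2.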