Basics of Oozie and Oozie SHELL action

Our Oozie Tutorials will cover most of the available workflow actions with and without Kerberos authentication.

Let’s have a look at some basic concepts of Oozie.

What is Oozie?

Oozie is an open-source workflow management system. We can use it to schedule Hadoop jobs, including Hive, Pig, and Sqoop actions. Oozie can trigger workflows based on data availability, job dependencies, scheduled times, and so on.

More information about Oozie is available here.

Oozie Workflow:

An Oozie workflow is a DAG (directed acyclic graph) made up of a collection of actions. The DAG contains two types of nodes: action nodes and control nodes. An action node is responsible for executing a task such as a MapReduce, Pig, or Hive job; we can also execute shell scripts using an action node. Control nodes determine the execution order of the actions.

Oozie Co-ordinator:

In production systems it's necessary to run Oozie workflows at regular time intervals, trigger them when input data becomes available, or execute them after a dependent job completes. This can be achieved with an Oozie co-ordinator job.
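As a rough illustration of the time-based case, the sketch below writes a minimal co-ordinator definition that would run a workflow once a day. The file name `coordinator.xml`, the application name `shell-coord`, the schema version, and the `${start}`, `${end}` and `${workflowAppUri}` properties are all assumptions chosen for this example; they would have to be defined in the job's properties file.

```shell
#!/bin/bash
# Sketch only: write a minimal time-triggered co-ordinator definition.
# The name, schema version and ${...} properties are illustrative assumptions.
coord_dir=$(mktemp -d)

# The quoted 'EOF' keeps ${...} literal so Oozie, not the shell, resolves it.
cat > "$coord_dir/coordinator.xml" <<'EOF'
<coordinator-app name="shell-coord" frequency="${coord:days(1)}"
                 start="${start}" end="${end}" timezone="UTC"
                 xmlns="uri:oozie:coordinator:0.4">
  <action>
    <workflow>
      <app-path>${workflowAppUri}</app-path>
    </workflow>
  </action>
</coordinator-app>
EOF

echo "wrote $coord_dir/coordinator.xml"
```

A co-ordinator is submitted like a workflow, except that the properties file points at it with `oozie.coord.application.path` instead of `oozie.wf.application.path`.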

Oozie Bundle jobs:

A bundle is a set of Oozie co-ordinators; it gives us better control to start, stop, suspend, or resume multiple co-ordinators together.

Oozie Launcher:

The Oozie launcher is a map-only job that runs on the Hadoop cluster. For example, if you want to run a Hive script, you can just run “hive -f

Let’s get started by running a shell action in an Oozie workflow.

Step 1: Create a sample shell script and upload it to HDFS

[root@sandbox shell]# cat ~/sample.sh
#!/bin/bash
echo "`date` hi" > /tmp/output

hadoop fs -put sample.sh /user/root/
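Before uploading, it can be worth running the script locally to confirm that it works. The following is a minimal sketch, assuming a scratch directory and a hypothetical `OUT_FILE` override (not part of the original script) so that the dry run does not write to /tmp/output:

```shell
#!/bin/bash
# Sketch: recreate sample.sh in a scratch directory and dry-run it locally.
# OUT_FILE is a hypothetical override added for this test; the original
# script always writes to /tmp/output.
workdir=$(mktemp -d)

cat > "$workdir/sample.sh" <<'EOF'
#!/bin/bash
echo "$(date) hi" > "${OUT_FILE:-/tmp/output}"
EOF
chmod +x "$workdir/sample.sh"

# Run the script once and show what it produced.
OUT_FILE="$workdir/output" "$workdir/sample.sh"
cat "$workdir/output"
```

When `OUT_FILE` is unset, the script falls back to /tmp/output, which matches the behavior of the version shown in this step.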

Step 2: Create a job.properties file according to your cluster configuration.

[root@sandbox shell]# cat job.properties
nameNode=hdfs://:8020
jobTracker= :8050
queueName=default
examplesRoot=examples
oozie.wf.application.path=${nameNode}/user/${user.name}
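Since the host names differ from cluster to cluster, one option is to generate the file from a small helper. This is only a sketch: the function `write_job_properties` is hypothetical, and `nn.example.com` and `rm.example.com` are placeholder hosts, not values from this tutorial. The ports and the remaining keys are taken from the listing in this step.

```shell
#!/bin/bash
# Sketch: generate job.properties from two host names.
# Usage: write_job_properties <namenode-host> <resourcemanager-host> [output-file]
write_job_properties() {
  local nn_host=$1 rm_host=$2 out=${3:-job.properties}
  # \$ keeps ${nameNode} and ${user.name} literal; Oozie resolves them later.
  cat > "$out" <<EOF
nameNode=hdfs://${nn_host}:8020
jobTracker=${rm_host}:8050
queueName=default
examplesRoot=examples
oozie.wf.application.path=\${nameNode}/user/\${user.name}
EOF
}

# Placeholder hosts; replace them with the hosts of your own cluster.
props=$(mktemp)
write_job_properties nn.example.com rm.example.com "$props"
cat "$props"
```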

Step 3: Create a workflow.xml file for your shell action.

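    <workflow-app xmlns="uri:oozie:workflow:0.4" name="shell-wf">
        <start to="shell-node"/>
        <action name="shell-node">
            <shell xmlns="uri:oozie:shell-action:0.2">
                <job-tracker>${jobTracker}</job-tracker>
                <name-node>${nameNode}</name-node>
                <configuration>
                    <property>
                        <name>mapred.job.queue.name</name>
                        <value>${queueName}</value>
                    </property>
                </configuration>
                <exec>sample.sh</exec>
                <file>/user/root/sample.sh</file>
            </shell>
            <ok to="end"/>
            <error to="fail"/>
        </action>
        <kill name="fail">
            <message>Shell action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
        </kill>
        <end name="end"/>
    </workflow-app>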

Step 4: Upload the workflow.xml file created in the step above to HDFS, at the oozie.wf.application.path set in job.properties.

hadoop fs -copyFromLocal -f workflow.xml /user/root/

Step 5: Submit the Oozie workflow by running the command below.

oozie job -oozie http://:11000/oozie -config job.properties -run
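The submit command prints the ID of the new workflow, which is handy to keep for later status checks. The sketch below shows one way to capture it. The helper `extract_job_id` is hypothetical, the sample ID is made up purely to exercise the parser, and `<oozie-host>` is a placeholder for your own Oozie server.

```shell
#!/bin/bash
# Sketch: pull the workflow ID out of the text printed by "oozie job ... -run".
extract_job_id() {
  # The submit command prints a line of the form "job: <workflow-id>".
  sed -n 's/^job:[[:space:]]*//p'
}

# Made-up sample output, used only to exercise the parser.
sample_output='job: 0000000-000000000000000-oozie-oozi-W'
job_id=$(printf '%s\n' "$sample_output" | extract_job_id)
echo "$job_id"

# On a real cluster (the oozie client reads OOZIE_URL when -oozie is omitted):
#   export OOZIE_URL=http://<oozie-host>:11000/oozie
#   job_id=$(oozie job -config job.properties -run | extract_job_id)
#   oozie job -info "$job_id"
```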

Step 6: Check the Oozie UI to get the status of the workflow.

http://:11000/oozie

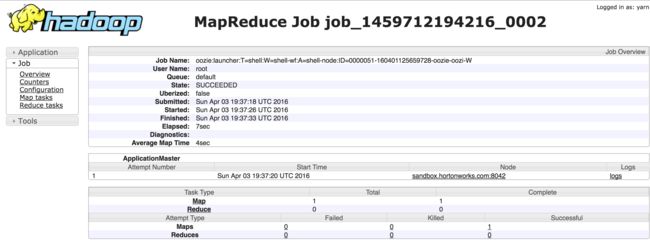

If you click on the workflow ID, you will get the detailed status of each action (see the screenshot below).

If you want to check the logs for a running action, click on Action Id 2, i.e. the shell-node action, and then on the Console URL (click the magnifier icon at the end of the Console URL).
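The same status can also be read from the command line with `oozie job -info`. The following is a sketch under stated assumptions: the helper `parse_status` is hypothetical, and the sample text is an abbreviated, made-up excerpt in the layout that command is assumed to print, so check it against the output of your own Oozie version.

```shell
#!/bin/bash
# Sketch: read the workflow status from "oozie job -info" output.
parse_status() {
  # Print the value of the first "Status : VALUE" line.
  awk -F':' '$1 ~ /^Status[ \t]*$/ { gsub(/[ \t]/, "", $2); print $2; exit }'
}

# Abbreviated sample in the assumed layout, used only to exercise the parser.
sample_info='Job ID : 0000000-000000000000000-oozie-oozi-W
Workflow Name : shell-wf
Status        : SUCCEEDED
Run           : 0'
status=$(printf '%s\n' "$sample_info" | parse_status)
echo "$status"

# On a real cluster, poll until the workflow leaves the PREP/RUNNING states:
#   while s=$(oozie job -info "$job_id" | parse_status); [ "$s" = PREP ] || [ "$s" = RUNNING ]; do
#     sleep 10
#   done
```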

Step 7: Check the final output from the command line. Note that you need to run the command below on the NodeManager host where your Oozie launcher ran.

[root@sandbox shell]# cat /tmp/output
Sun Apr 3 19:44:52 UTC 2016 hi