1. Classes for media capture

Photo and video capture is one of AVFoundation's strengths. Let's start with the classes involved in capture.

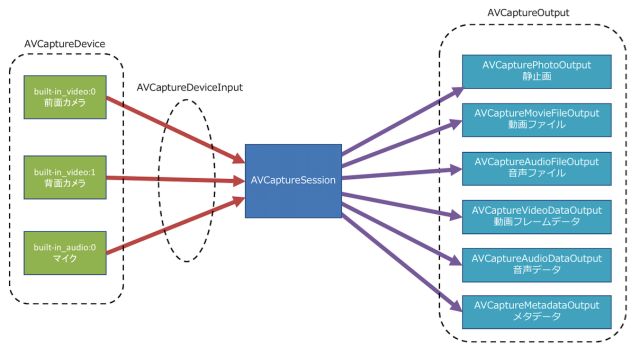

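- Capture session: AVCaptureSession

AVCaptureSession acts like a virtual power strip that connects inputs to outputs. It manages the data streams coming from physical devices (cameras and microphones) and routes them to one or more outputs.

You can also set a session preset to control the format and quality of the captured data. The default is AVCaptureSessionPresetHigh.

- Capture device: AVCaptureDevice

AVCaptureDevice provides an interface to a physical device. It also offers a large set of controls, such as focus, exposure, white balance, and flash.

- Capture device input: AVCaptureDeviceInput

A capture device cannot be added to an AVCaptureSession directly. Before you can use an AVCaptureDevice, you must wrap it in an AVCaptureDeviceInput instance:

```objc
NSError *error;
AVCaptureDeviceInput *input = [AVCaptureDeviceInput deviceInputWithDevice:videoDevice error:&error];
```

- Capture output: AVCaptureOutput

AVCaptureOutput is an abstract base class with many concrete subclasses. Each subclass provides a destination for the data coming out of the session, and the diagram above shows what each one is for. AVCaptureAudioDataOutput and AVCaptureVideoDataOutput are worth singling out: they give you direct access to the digital samples captured by the hardware, so you can use them to process audio and video streams in real time.

- Capture connection: AVCaptureConnection

This class corresponds to the arrows that connect the components in the diagram above. Accessing these connections gives you low-level control over the signal flow. For example, you can disable a particular connection or limit individual audio channels on an audio connection.

- Capture preview: AVCaptureVideoPreviewLayer

This class is not in the diagram above. It shows a real-time preview of the captured video, much as AVPlayerLayer does for playback.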
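Here is a rough illustration of the real-time processing that AVCaptureVideoDataOutput makes possible. The sketch computes the average brightness of one video frame in plain C, which also compiles unchanged inside an Objective-C file. It makes a few assumptions:

- The output was configured for 32-bit BGRA pixels (`kCVPixelFormatType_32BGRA`).
- Inside the sample buffer delegate callback, you took the frame's CVPixelBuffer with `CMSampleBufferGetImageBuffer` and locked it with `CVPixelBufferLockBaseAddress`.
- You read the base address, width, height, and row stride with `CVPixelBufferGetBaseAddress`, `CVPixelBufferGetWidth`, `CVPixelBufferGetHeight`, and `CVPixelBufferGetBytesPerRow`.

The function name `average_luma_bgra` is hypothetical. The integer weights approximate Rec. 601 luma.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: average luma (0-255) of a 32-bit BGRA frame.
 * base:          pointer to the first byte of the frame
 * width, height: frame size in pixels
 * bytes_per_row: row stride in bytes; may exceed width * 4 because of padding */
double average_luma_bgra(const uint8_t *base, size_t width, size_t height,
                         size_t bytes_per_row) {
    if (base == NULL || width == 0 || height == 0) {
        return 0.0;
    }
    uint64_t sum = 0;
    for (size_t y = 0; y < height; y++) {
        /* Advance by the stride, not width * 4, so row padding is skipped. */
        const uint8_t *row = base + y * bytes_per_row;
        for (size_t x = 0; x < width; x++) {
            const uint8_t *px = row + x * 4; /* byte order: B, G, R, A */
            uint32_t b = px[0], g = px[1], r = px[2];
            /* Integer approximation of 0.299 R + 0.587 G + 0.114 B. */
            sum += (77 * r + 150 * g + 29 * b) >> 8;
        }
    }
    return (double)sum / (double)(width * height);
}
```

The stride matters because Core Video may pad each row. Indexing with `width * 4` instead of `bytes_per_row` would silently read padding bytes as pixels.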

Workflow for using a capture session:

- Step 1: Configure the session

```objc
- (BOOL)setupSession:(NSError **)error {
    // 1. Create the capture session.
    self.captureSession = [[AVCaptureSession alloc] init];
    self.captureSession.sessionPreset = AVCaptureSessionPresetHigh;

    // 2. Get a capture device for the media type you want to capture and add it to the session.
    AVCaptureDevice *videoDevice =
        [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];

    // Wrap the device in an input object before adding it to the AVCaptureSession.
    AVCaptureDeviceInput *videoInput =
        [AVCaptureDeviceInput deviceInputWithDevice:videoDevice error:error];
    if (videoInput) {
        if ([self.captureSession canAddInput:videoInput]) { // check that the input can be added
            [self.captureSession addInput:videoInput];
            self.activeVideoInput = videoInput;
        }
    } else {
        return NO;
    }

    /* // To capture audio as well:
    AVCaptureDevice *audioDevice =
        [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeAudio];
    AVCaptureDeviceInput *audioInput =
        [AVCaptureDeviceInput deviceInputWithDevice:audioDevice error:error];
    if (audioInput) {
        if ([self.captureSession canAddInput:audioInput]) {
            [self.captureSession addInput:audioInput];
        }
    } else {
        return NO;
    }
    */

    // 3. Configure the session's outputs.
    // Still image output
    self.imageOutput = [[AVCaptureStillImageOutput alloc] init];
    self.imageOutput.outputSettings = @{AVVideoCodecKey : AVVideoCodecJPEG};
    if ([self.captureSession canAddOutput:self.imageOutput]) {
        [self.captureSession addOutput:self.imageOutput];
    }

    // Movie file output
    self.movieOutput = [[AVCaptureMovieFileOutput alloc] init];
    if ([self.captureSession canAddOutput:self.movieOutput]) {
        [self.captureSession addOutput:self.movieOutput];
    }

    return YES;
}
```

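- Step 2: Start and stop the session

```objc
- (void)startSession {
    if (![self.captureSession isRunning]) {
        dispatch_async([self sessionQueue], ^{
            [self.captureSession startRunning];
        });
    }
}

- (void)stopSession {
    if ([self.captureSession isRunning]) {
        dispatch_async([self sessionQueue], ^{
            [self.captureSession stopRunning];
        });
    }
}

// startRunning and stopRunning are synchronous, time-consuming calls, so they run
// asynchronously off the main thread. A serial queue (rather than a global concurrent
// queue) keeps start and stop requests from running concurrently or out of order.
- (dispatch_queue_t)sessionQueue {
    static dispatch_queue_t queue;
    static dispatch_once_t onceToken;
    dispatch_once(&onceToken, ^{
        queue = dispatch_queue_create("capture.session.queue", DISPATCH_QUEUE_SERIAL);
    });
    return queue;
}
```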

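Since iOS 8, using hardware devices such as the camera requires the user's permission, so check the authorization status before use. For example:

```objc
AVAuthorizationStatus authStatus = [AVCaptureDevice authorizationStatusForMediaType:AVMediaTypeVideo];
if (authStatus == AVAuthorizationStatusRestricted || authStatus == AVAuthorizationStatusDenied) {
    NSLog(@"Camera access is restricted or denied!");
}
```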
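The check above handles only the restricted and denied cases. A status of `AVAuthorizationStatusNotDetermined` means the user has not been asked yet. In that case, real code would call `+[AVCaptureDevice requestAccessForMediaType:completionHandler:]`. On iOS 10 and later, the app's Info.plist also needs an `NSCameraUsageDescription` entry.

The plain C sketch below spells out that decision for all four statuses. `AuthStatus`, `CameraAction`, and `camera_action_for_status` are hypothetical stand-ins whose cases mirror AVAuthorizationStatus. They are not AVFoundation's own types.

```c
/* Hypothetical mirror of the four AVAuthorizationStatus cases. */
typedef enum {
    AUTH_NOT_DETERMINED,
    AUTH_RESTRICTED,
    AUTH_DENIED,
    AUTH_AUTHORIZED
} AuthStatus;

/* Hypothetical next step for the app to take. */
typedef enum {
    ACTION_REQUEST_ACCESS, /* ask the user via requestAccessForMediaType:completionHandler: */
    ACTION_START_CAPTURE,  /* permission granted: configure and start the session */
    ACTION_EXPLAIN_DENIAL  /* explain why capture is unavailable; no prompt will be shown */
} CameraAction;

CameraAction camera_action_for_status(AuthStatus status) {
    switch (status) {
        case AUTH_NOT_DETERMINED:
            return ACTION_REQUEST_ACCESS;
        case AUTH_AUTHORIZED:
            return ACTION_START_CAPTURE;
        case AUTH_RESTRICTED:
        case AUTH_DENIED:
        default:
            return ACTION_EXPLAIN_DENIAL;
    }
}
```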

2. Details

2.1 Modifying and configuring capture devices

- Getting the current camera and switching cameras

```objc
// The camera used by the current capture session.
- (AVCaptureDevice *)activeCamera {
    return self.activeVideoInput.device;
}

// The camera that is currently not in use.
- (AVCaptureDevice *)inactiveCamera {
    AVCaptureDevice *device = nil;
    if (self.cameraCount > 1) {
        if ([self activeCamera].position == AVCaptureDevicePositionBack) {
            device = [self cameraWithPosition:AVCaptureDevicePositionFront];
        } else {
            device = [self cameraWithPosition:AVCaptureDevicePositionBack];
        }
    }
    return device;
}

// Switch cameras.
- (BOOL)switchCameras {
    if (![self canSwitchCameras]) {
        return NO;
    }

    // 1. Get the camera that is not in use and create a new input for it.
    NSError *error;
    AVCaptureDevice *videoDevice = [self inactiveCamera];
    AVCaptureDeviceInput *videoInput =
        [AVCaptureDeviceInput deviceInputWithDevice:videoDevice error:&error];

    // 2. Replace the active input with the new one.
    if (videoInput) {
        [self.captureSession beginConfiguration]; // batch the following changes
        [self.captureSession removeInput:self.activeVideoInput];
        if ([self.captureSession canAddInput:videoInput]) {
            [self.captureSession addInput:videoInput];
            self.activeVideoInput = videoInput;
        } else { // to be safe, keep using the old input if the new one cannot be added
            [self.captureSession addInput:self.activeVideoInput];
        }
        [self.captureSession commitConfiguration]; // apply all changes atomically
    } else { // error handling
        [self.delegate deviceConfigurationFailedWithError:error];
        return NO;
    }
    return YES;
}

- (BOOL)canSwitchCameras {
    return self.cameraCount > 1;
}

- (NSUInteger)cameraCount {
    return [[AVCaptureDevice devicesWithMediaType:AVMediaTypeVideo] count];
}

// Return the AVCaptureDevice at the given position.
- (AVCaptureDevice *)cameraWithPosition:(AVCaptureDevicePosition)position {
    NSArray *devices = [AVCaptureDevice devicesWithMediaType:AVMediaTypeVideo];
    for (AVCaptureDevice *device in devices) {
        if (device.position == position) {
            return device;
        }
    }
    return nil;
}
```

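- Adjusting focus and exposure

When you change an AVCaptureDevice's configuration, always check first whether the hardware supports the change. For example, a front camera may not support focusing. Attempting an unsupported change throws an exception and crashes the app, so guard every change with a capability check.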

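```objc
/* Focus */
- (void)focusAtPoint:(CGPoint)point {
    // point is in device coordinates: (0,0) is the top-left and (1,1) the bottom-right of the
    // picture, relative to landscape orientation with the home button on the right.
    AVCaptureDevice *device = self.activeVideoInput.device;
    // Check that the device supports a focus point of interest and auto-focus mode.
    if (device.isFocusPointOfInterestSupported &&
        [device isFocusModeSupported:AVCaptureFocusModeAutoFocus]) {
        NSError *error;
        // Lock the device for configuration; unlock it once the changes are made.
        if ([device lockForConfiguration:&error]) {
            device.focusPointOfInterest = point;
            device.focusMode = AVCaptureFocusModeAutoFocus;
            [device unlockForConfiguration];
        } else {
            [self.delegate deviceConfigurationFailedWithError:error];
        }
    }
}
```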
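focusAtPoint: expects a point in the device's point-of-interest coordinate space, not a view coordinate. Apple documents this space as running from (0,0) at the top-left to (1,1) at the bottom-right of the picture, relative to landscape orientation with the home button on the right. If you have an AVCaptureVideoPreviewLayer, its `captureDevicePointOfInterestForPoint:` method does the conversion for you and also accounts for video gravity and mirroring.

The plain C sketch below shows only the underlying rotation, under these assumptions:

- The UI is in portrait.
- The preview fills the view without cropping or letterboxing.
- The image is not mirrored.

`Point2D` and `portrait_view_to_device_point` are hypothetical names.

```c
/* Hypothetical point type standing in for CGPoint. */
typedef struct {
    double x;
    double y;
} Point2D;

/* Convert a touch point in a portrait view (origin top-left, size width x height)
 * to the device point-of-interest space: (0,0) top-left, (1,1) bottom-right of the
 * picture in landscape with the home button on the right.
 * Assumes no cropping, no letterboxing, and no mirroring. */
Point2D portrait_view_to_device_point(Point2D view_point, double width, double height) {
    Point2D device_point;
    /* The view's vertical axis becomes the device's x axis. */
    device_point.x = view_point.y / height;
    /* The view's horizontal axis, reversed, becomes the device's y axis. */
    device_point.y = 1.0 - view_point.x / width;
    return device_point;
}
```

For example, a tap at the center of a 375 x 667 view maps to (0.5, 0.5). The view's top-right corner maps to (0, 0).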

```objc
/*
 Exposure

 Four exposure modes are defined:
 AVCaptureExposureModeLocked                   fixed at the current value
 AVCaptureExposureModeAutoExpose               adjust once, then lock
 AVCaptureExposureModeContinuousAutoExposure   adjust continuously
 AVCaptureExposureModeCustom                   use an app-specified exposure duration and ISO (iOS 8+)

 Most devices adjust exposure automatically to the environment. Here we want to adjust the
 exposure at a point once and then lock it. iOS does not support
 AVCaptureExposureModeAutoExpose, so this behavior is built another way: switch to continuous
 auto exposure, then lock the exposure once the adjustment finishes.
 */

static const NSString *THCameraAdjustingExposureContext;

- (void)exposeAtPoint:(CGPoint)point {
    AVCaptureDevice *device = self.activeVideoInput.device;
    AVCaptureExposureMode exposureMode =
        AVCaptureExposureModeContinuousAutoExposure;

    // Check that the device supports an exposure point of interest and continuous auto exposure.
    if (device.isExposurePointOfInterestSupported &&
        [device isExposureModeSupported:exposureMode]) {
        NSError *error;
        // Lock the device for configuration; unlock it once the changes are made.
        if ([device lockForConfiguration:&error]) {
            device.exposurePointOfInterest = point;
            device.exposureMode = exposureMode;

            // If the device supports locked exposure, use KVO to observe its "adjustingExposure"
            // property, and lock the exposure at this point once the adjustment completes.
            if ([device isExposureModeSupported:AVCaptureExposureModeLocked]) {
                [device addObserver:self
                         forKeyPath:@"adjustingExposure"
                            options:NSKeyValueObservingOptionNew
                            context:&THCameraAdjustingExposureContext];
            }
            [device unlockForConfiguration];
        } else {
            [self.delegate deviceConfigurationFailedWithError:error];
        }
    }
}

- (void)observeValueForKeyPath:(NSString *)keyPath
                      ofObject:(id)object
                        change:(NSDictionary *)change
                       context:(void *)context {
    // Comparing context with &THCameraAdjustingExposureContext tells us whether this
    // callback belongs to the change we registered for.
    if (context == &THCameraAdjustingExposureContext) {
        AVCaptureDevice *device = (AVCaptureDevice *)object;
        if (!device.isAdjustingExposure &&
            [device isExposureModeSupported:AVCaptureExposureModeLocked]) {
            [object removeObserver:self
                        forKeyPath:@"adjustingExposure"
                           context:&THCameraAdjustingExposureContext];
            // Return to the main queue to set exposureMode.
            dispatch_async(dispatch_get_main_queue(), ^{
                NSError *error;
                if ([device lockForConfiguration:&error]) {
                    device.exposureMode = AVCaptureExposureModeLocked;
                    [device unlockForConfiguration];
                } else {
                    [self.delegate deviceConfigurationFailedWithError:error];
                }
            });
        }
    } else {
        [super observeValueForKeyPath:keyPath
                             ofObject:object
                               change:change
                              context:context];
    }
}
```

```objc
/* Reset focus and exposure */
- (void)resetFocusAndExposureModes {
    AVCaptureDevice *device = self.activeVideoInput.device;
    AVCaptureExposureMode exposureMode =
        AVCaptureExposureModeContinuousAutoExposure;
    AVCaptureFocusMode focusMode = AVCaptureFocusModeContinuousAutoFocus;

    BOOL canResetFocus = [device isFocusPointOfInterestSupported] &&
                         [device isFocusModeSupported:focusMode];
    BOOL canResetExposure = [device isExposurePointOfInterestSupported] &&
                            [device isExposureModeSupported:exposureMode];

    CGPoint centerPoint = CGPointMake(0.5f, 0.5f); // the center of the picture

    NSError *error;
    if ([device lockForConfiguration:&error]) {
        // Set the point of interest before the mode: setting the point alone does not
        // start a focus or exposure operation; setting the mode afterwards does.
        if (canResetFocus) { // focus can be reset
            device.focusPointOfInterest = centerPoint;
            device.focusMode = focusMode;
        }
        if (canResetExposure) { // exposure can be reset
            device.exposurePointOfInterest = centerPoint;
            device.exposureMode = exposureMode;
        }
        [device unlockForConfiguration];
    } else {
        [self.delegate deviceConfigurationFailedWithError:error];
    }
}
```

- Adjusting flash and torch modes

AVCaptureDevice can control the camera's LED. When taking photos it serves as a flash, and when recording video it serves as a torch (a continuous light).

```objc
- (void)setFlashMode:(AVCaptureFlashMode)flashMode {
    AVCaptureDevice *device = self.activeVideoInput.device;
    if (device.flashMode != flashMode &&
        [device isFlashModeSupported:flashMode]) {
        NSError *error;
        if ([device lockForConfiguration:&error]) {
            device.flashMode = flashMode;
            [device unlockForConfiguration];
        } else {
            [self.delegate deviceConfigurationFailedWithError:error];
        }
    }
}

- (void)setTorchMode:(AVCaptureTorchMode)torchMode {
    AVCaptureDevice *device = self.activeVideoInput.device;
    if (device.torchMode != torchMode &&
        [device isTorchModeSupported:torchMode]) {
        NSError *error;
        if ([device lockForConfiguration:&error]) {
            device.torchMode = torchMode;
            [device unlockForConfiguration];
        } else {
            [self.delegate deviceConfigurationFailedWithError:error];
        }
    }
}
```

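3. Basic features: taking photos and recording video

- Taking photos

The basic workflow is covered above. To take photos, the session needs an output of type AVCaptureStillImageOutput, which must be added when configuring the session in step 1. The method that actually captures the image is defined on AVCaptureStillImageOutput. Note that AVCaptureStillImageOutput has been deprecated since iOS 10 in favor of AVCapturePhotoOutput, although the code below still uses it:

```objc
- (void)captureStillImage {
    // Get the AVCaptureConnection that the output is currently using.
    // This camera UI supports portrait only, so the interface stays fixed as the device rotates.
    // When the device is held in landscape, we still want the photo's orientation to match.
    AVCaptureConnection *connection =
        [self.imageOutput connectionWithMediaType:AVMediaTypeVideo];

    // Check that the connection supports setting the video orientation, then set it.
    if (connection.isVideoOrientationSupported) {
        connection.videoOrientation = [self currentVideoOrientation];
    }

    id handler = ^(CMSampleBufferRef sampleBuffer, NSError *error) {
        if (sampleBuffer != NULL) {
            NSData *imageData =
                [AVCaptureStillImageOutput
                    jpegStillImageNSDataRepresentation:sampleBuffer];
            // The captured photo
            UIImage *image = [[UIImage alloc] initWithData:imageData];
        } else {
            NSLog(@"NULL sampleBuffer: %@", [error localizedDescription]);
        }
    };

    // Capture a still image.
    [self.imageOutput captureStillImageAsynchronouslyFromConnection:connection
                                                  completionHandler:handler];
}
```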
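captureStillImage calls a `currentVideoOrientation` method that is not shown here. A common way to implement it is to map the current UIDeviceOrientation to an AVCaptureVideoOrientation. The one subtlety is that the landscape names are swapped between the two enums. UIDeviceOrientationLandscapeLeft means the home button is on the right, which corresponds to AVCaptureVideoOrientationLandscapeRight, and vice versa.

The plain C sketch below spells out the mapping with hypothetical enums that mirror the UIKit and AVFoundation case names. In Objective-C you would switch on `[UIDevice currentDevice].orientation` and return the real constants. Falling back to portrait for face-up, face-down, and unknown orientations is an assumption of this sketch. Keeping the last valid orientation is another reasonable choice.

```c
/* Hypothetical mirror of the UIDeviceOrientation cases. */
typedef enum {
    DEVICE_UNKNOWN,
    DEVICE_PORTRAIT,
    DEVICE_PORTRAIT_UPSIDE_DOWN,
    DEVICE_LANDSCAPE_LEFT,  /* home button on the right */
    DEVICE_LANDSCAPE_RIGHT, /* home button on the left */
    DEVICE_FACE_UP,
    DEVICE_FACE_DOWN
} DeviceOrientation;

/* Hypothetical mirror of the AVCaptureVideoOrientation cases. */
typedef enum {
    VIDEO_PORTRAIT,
    VIDEO_PORTRAIT_UPSIDE_DOWN,
    VIDEO_LANDSCAPE_RIGHT, /* home button on the right */
    VIDEO_LANDSCAPE_LEFT   /* home button on the left */
} VideoOrientation;

VideoOrientation video_orientation_for_device(DeviceOrientation device) {
    switch (device) {
        case DEVICE_PORTRAIT_UPSIDE_DOWN:
            return VIDEO_PORTRAIT_UPSIDE_DOWN;
        case DEVICE_LANDSCAPE_LEFT:
            /* The landscape names are swapped between the two enums. */
            return VIDEO_LANDSCAPE_RIGHT;
        case DEVICE_LANDSCAPE_RIGHT:
            return VIDEO_LANDSCAPE_LEFT;
        case DEVICE_PORTRAIT:
        default:
            /* Assumption: face up/down and unknown fall back to portrait. */
            return VIDEO_PORTRAIT;
    }
}
```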

- Recording video

As with taking photos, the session needs an output of type AVCaptureMovieFileOutput. The recording code is as follows:

```objc
- (void)startRecording {
    if (!self.movieOutput.isRecording) { // do nothing if already recording
        // Record the video in the current orientation.
        AVCaptureConnection *videoConnection =
            [self.movieOutput connectionWithMediaType:AVMediaTypeVideo];
        if ([videoConnection isVideoOrientationSupported]) {
            videoConnection.videoOrientation = self.currentVideoOrientation;
        }

        // Enable video stabilization to improve the quality of the recording.
        if ([videoConnection isVideoStabilizationSupported]) {
            if ([[[UIDevice currentDevice] systemVersion] floatValue] < 8.0) {
                // enablesVideoStabilizationWhenAvailable is deprecated as of iOS 8.
                videoConnection.enablesVideoStabilizationWhenAvailable = YES;
            } else {
                videoConnection.preferredVideoStabilizationMode = AVCaptureVideoStabilizationModeAuto;
            }
        }

        AVCaptureDevice *device = self.activeVideoInput.device;

        // Enable smooth autofocus, which slows down lens focusing so that focus changes
        // are less distracting while recording moving subjects.
        if (device.isSmoothAutoFocusSupported) {
            NSError *error;
            if ([device lockForConfiguration:&error]) {
                device.smoothAutoFocusEnabled = YES;
                [device unlockForConfiguration];
            } else {
                [self.delegate deviceConfigurationFailedWithError:error];
            }
        }

        NSURL *outputURL = [self uniqueURL]; // a unique output location for the movie file

        // Finally, start recording. The AVCaptureFileOutputRecordingDelegate is notified
        // as recording progresses.
        [self.movieOutput startRecordingToOutputFileURL:outputURL
                                      recordingDelegate:self];
    }
}
```
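startRecording relies on a `uniqueURL` helper that is not shown here. Whatever the implementation, each recording needs a path that does not collide with an earlier movie file. One approach, sketched below in plain C, uses the POSIX `mkdtemp` call to create a fresh, uniquely named directory and puts the movie inside it. In Objective-C you would then build an NSURL from the resulting path, for example with `+[NSURL fileURLWithPath:]`.

The function name `make_unique_movie_path`, the `capture.XXXXXX` template, and the `movie.mov` file name are hypothetical choices. On iOS the base directory would come from `NSTemporaryDirectory()` rather than a hard-coded path.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>

/* Hypothetical helper: create a new uniquely named directory under base_dir and write
 * "<that directory>/movie.mov" into out. Returns 0 on success, -1 on failure. */
int make_unique_movie_path(const char *base_dir, char *out, size_t out_size) {
    char dir_template[1024];
    /* mkdtemp replaces the trailing XXXXXX with characters that make the name unique. */
    int n = snprintf(dir_template, sizeof dir_template, "%s/capture.XXXXXX", base_dir);
    if (n < 0 || (size_t)n >= sizeof dir_template) {
        return -1; /* base_dir too long */
    }
    if (mkdtemp(dir_template) == NULL) {
        return -1; /* directory could not be created */
    }
    n = snprintf(out, out_size, "%s/movie.mov", dir_template);
    if (n < 0 || (size_t)n >= out_size) {
        return -1; /* output buffer too small */
    }
    return 0;
}
```

This sketch never removes the directories it creates. A real app would delete the movie, or move it somewhere permanent, once the recording delegate reports that the file has finished.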

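AVCaptureMovieFileOutput also supports fragmented capture: it writes the movie in fragments at an interval set by its movieFragmentInterval property.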