OpenCV + Hikvision Camera: Real-Time Reading

Copyright notice: this is an original article by the blogger and may not be reproduced without the blogger's permission.

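This article is by @lonelyrains. Please credit the source when reposting.

Article link: http://blog.csdn.net/lonelyrains/article/details/50350052

I did not expect so many readers to run into problems, so I set up a QQ group, 536898072, where we can discuss practical engineering issues in imaging and machine learning.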

Environment

- Hardware:

PC: i7-4970, 16 GB RAM; camera model: DS-2CD3310D-I (2.8mm)

- Software:

windows-x64, vs2012, opencv2.4.8, hkvision5114-x64 library

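Configuration

- Make sure the SADP tool can detect the camera, then set the camera's IP address so that it is on the same network segment as the PC.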

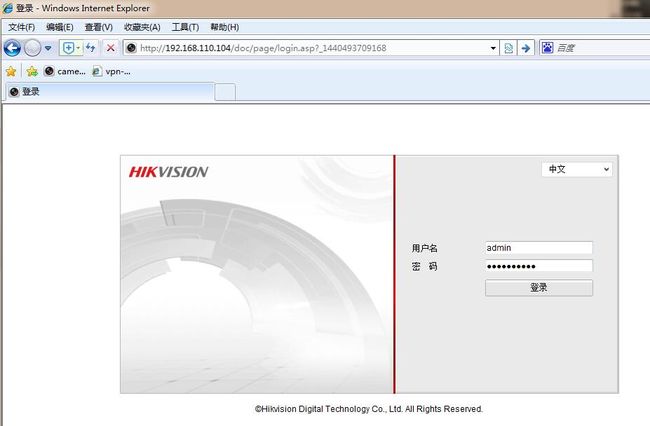

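- Next, make sure the camera can be reached from a browser. The default account and password are usually admin and a123456789 (on older cameras the password is 12345).

After a successful login you may be asked to download the WebComponent plug-in; download and install it. If the screen shown below still appears, try a different browser. The default browser usually works.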

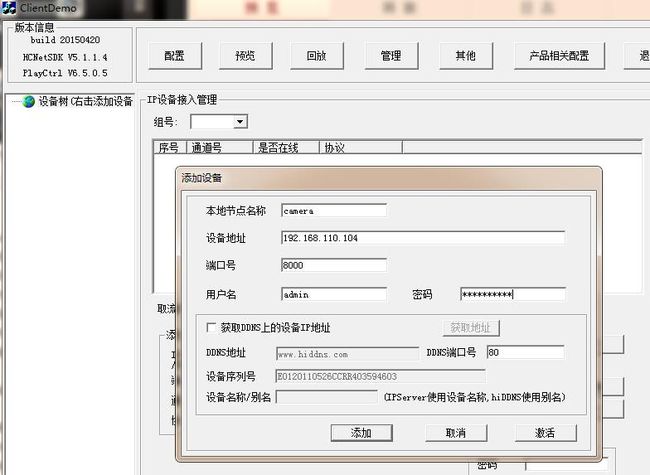

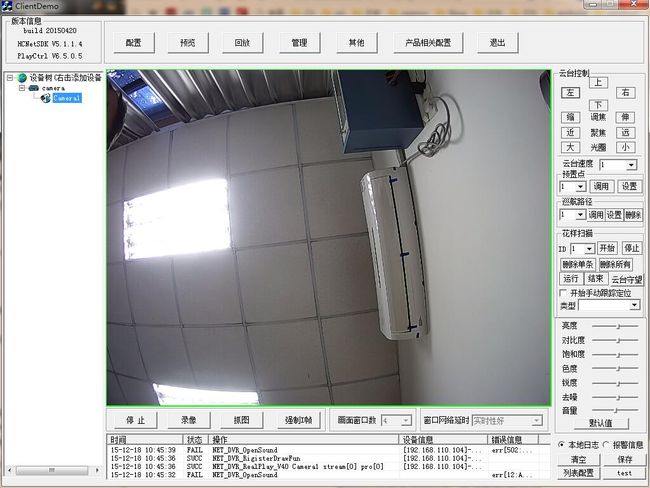

- Confirm that the ClientDemo.exe tool shipped with the SDK can access the camera

- Set up the SDK development environment

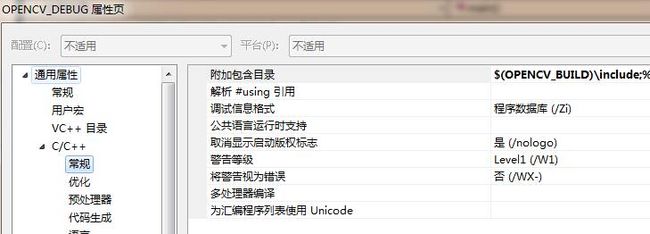

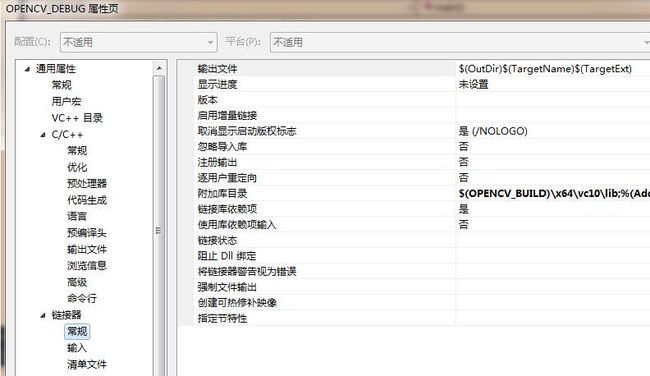

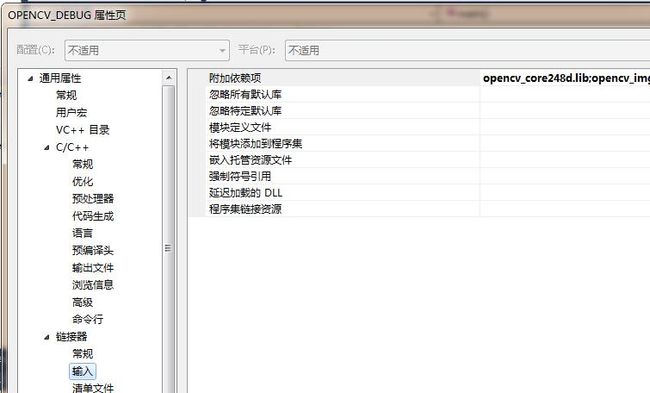

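– opencv configuration

The opencv setup is not covered in detail here. In short, set the opencv environment variables and configure the matching property pages in the vs project.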

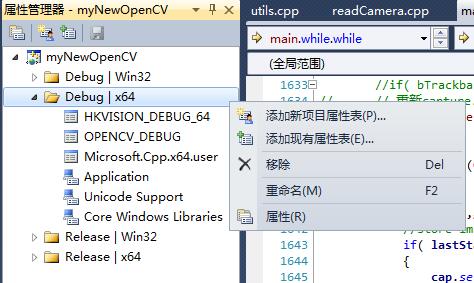

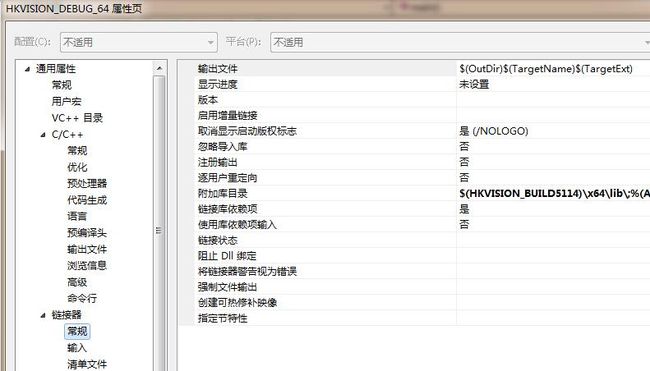

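– Hikvision SDK property configuration

Because this is a 64-bit environment, and to keep the property configuration cohesive, add a separate new project property sheet that holds only the Hikvision SDK settings.

List the dependency libraries in full: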

HCNetSDK.lib;PlayCtrl.lib;ws2_32.lib;winmm.lib;GdiPlus.lib;IPHlpApi.Lib;%(AdditionalDependencies)
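The first configuration step requires the camera and the PC to share a network segment. As a rough illustration of what that means, the sketch below masks two IPv4 addresses and compares the results. The helper names `parseIPv4` and `sameSubnet` are made up for this example, and the PC address and the 255.255.255.0 mask are assumptions; only the camera address 192.168.2.64 comes from the listing later in this article.

```cpp
#include <cstdint>
#include <cstdio>
#include <string>

// Parse dotted-quad IPv4 text into a 32-bit value; returns false on malformed input.
bool parseIPv4(const std::string& text, std::uint32_t& out)
{
    unsigned a, b, c, d;
    char tail;
    // Exactly four numeric fields must match; a trailing character makes it five.
    if (std::sscanf(text.c_str(), "%u.%u.%u.%u%c", &a, &b, &c, &d, &tail) != 4)
        return false;
    if (a > 255 || b > 255 || c > 255 || d > 255)
        return false;
    out = (a << 24) | (b << 16) | (c << 8) | d;
    return true;
}

// True when both addresses fall in the same network under the given mask.
bool sameSubnet(const std::string& ipA, const std::string& ipB, const std::string& mask)
{
    std::uint32_t a, b, m;
    if (!parseIPv4(ipA, a) || !parseIPv4(ipB, b) || !parseIPv4(mask, m))
        return false;
    return (a & m) == (b & m);
}
```

With these assumptions, `sameSubnet("192.168.2.64", "192.168.2.10", "255.255.255.0")` is true, while a PC at 192.168.1.10 would not see the camera without routing.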

Code and explanation

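// The headers and globals below are inferred from the identifiers this listing uses;
// the player header name in particular may differ between SDK versions.
#include <cstdio>
#include <cstring>
#include <ctime>
#include <list>
#include <process.h>
#include <windows.h>
#include <opencv2/opencv.hpp>
#include "HCNetSDK.h"
#include "plaympeg4.h"

#define USECOLOR 1

using namespace cv;
using namespace std;

LONG nPort = -1;   // player port, assigned in fRealDataCallBack
HWND hWnd = NULL;  // no display window: decode only

// Unpack planar YV12 (Y plane, then V plane, then U plane) into packed Y,U,V triples
void yv12toYUV(char *outYuv, char *inYv12, int width, int height, int widthStep)
{
    int col, row;
    unsigned int Y, U, V;
    int tmp;
    int idx;

    for (row = 0; row < height; row++)
    {
        idx = row * widthStep;   // start of this row in the output image
        int rowptr = row * width;

        for (col = 0; col < width; col++)
        {
            //int colhalf=col>>1;
            // Index into the quarter-resolution chroma planes
            tmp = (row/2)*(width/2)+(col/2);
            // if((row==1)&&( col>=1400 &&col<=1600))
            // {
            //     printf("col=%d,row=%d,width=%d,tmp=%d.\n",col,row,width,tmp);
            //     printf("row*width+col=%d,width*height+width*height/4+tmp=%d,width*height+tmp=%d.\n",row*width+col,width*height+width*height/4+tmp,width*height+tmp);
            // }
            Y=(unsigned int) inYv12[row*width+col];
            U=(unsigned int) inYv12[width*height+width*height/4+tmp];
            V=(unsigned int) inYv12[width*height+tmp];
            // if ((col==200))
            // {
            //     printf("col=%d,row=%d,width=%d,tmp=%d.\n",col,row,width,tmp);
            //     printf("width*height+width*height/4+tmp=%d.\n",width*height+width*height/4+tmp);
            //     return ;
            // }
            if((idx+col*3+2)> (1200 * widthStep))
            {
                //printf("row * widthStep=%d,idx+col*3+2=%d.\n",1200 * widthStep,idx+col*3+2);
            }
            outYuv[idx+col*3] = Y;
            outYuv[idx+col*3+1] = U;
            outYuv[idx+col*3+2] = V;
        }
    }
    //printf("col=%d,row=%d.\n",col,row);
}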
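The index arithmetic in yv12toYUV follows from the YV12 memory layout: a full-resolution Y plane, then a quarter-resolution V plane, then a quarter-resolution U plane. The following is a minimal, portable sketch of the same unpacking on tightly packed buffers (no widthStep padding), assuming even width and height. `Yv12Layout`, `yv12Layout` and `unpackYv12` are illustrative names, not part of OpenCV or the Hikvision SDK.

```cpp
#include <cstddef>
#include <vector>

// Offsets of the three planes inside one YV12 frame (width and height assumed even).
struct Yv12Layout
{
    std::size_t yOffset;
    std::size_t vOffset;
    std::size_t uOffset;
    std::size_t totalBytes;
};

Yv12Layout yv12Layout(int width, int height)
{
    const std::size_t ySize = static_cast<std::size_t>(width) * height;
    const std::size_t chromaSize = ySize / 4;
    Yv12Layout layout;
    layout.yOffset = 0;
    layout.vOffset = ySize;               // the V (Cr) plane comes first in YV12
    layout.uOffset = ySize + chromaSize;  // the U (Cb) plane follows it
    layout.totalBytes = ySize + 2 * chromaSize;
    return layout;
}

// Unpack planar YV12 into packed Y,U,V triples, one triple per pixel.
std::vector<unsigned char> unpackYv12(const std::vector<unsigned char>& in, int width, int height)
{
    const Yv12Layout layout = yv12Layout(width, height);
    std::vector<unsigned char> out(static_cast<std::size_t>(width) * height * 3);
    for (int row = 0; row < height; ++row)
    {
        for (int col = 0; col < width; ++col)
        {
            const std::size_t pixel = static_cast<std::size_t>(row) * width + col;
            // Each chroma sample is shared by a 2x2 block of pixels.
            const std::size_t chroma = static_cast<std::size_t>(row / 2) * (width / 2) + col / 2;
            out[pixel * 3]     = in[layout.yOffset + pixel];
            out[pixel * 3 + 1] = in[layout.uOffset + chroma];
            out[pixel * 3 + 2] = in[layout.vOffset + chroma];
        }
    }
    return out;
}
```

For a 4x2 frame this gives 8 bytes of Y, 2 bytes of V and 2 bytes of U, 12 bytes in total, which is the width*height*3/2 size usually quoted for YV12.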

// Decode callback: video arrives as YUV data (YV12), audio as PCM data
void CALLBACK DecCBFun(long nPort, char *pBuf, long nSize, FRAME_INFO *pFrameInfo, long nReserved1, long nReserved2)
{
    long lFrameType = pFrameInfo->nType;

    if (lFrameType == T_YV12)
    {
#if USECOLOR
        //int start = clock();
        // Both images are allocated once, sized from the first frame, and reused afterwards
        static IplImage* pImgYCrCb = cvCreateImage(cvSize(pFrameInfo->nWidth, pFrameInfo->nHeight), 8, 3);
        // Unpack the planar YV12 data into the packed 3-channel image
        yv12toYUV(pImgYCrCb->imageData, pBuf, pFrameInfo->nWidth, pFrameInfo->nHeight, pImgYCrCb->widthStep);
        static IplImage* pImg = cvCreateImage(cvSize(pFrameInfo->nWidth, pFrameInfo->nHeight), 8, 3);
        // Convert the packed image into the final color image
        cvCvtColor(pImgYCrCb, pImg, CV_YCrCb2RGB);
        //int end = clock();
#else
        // Grayscale: the Y plane alone is the image (assumes widthStep equals nWidth)
        static IplImage* pImg = cvCreateImage(cvSize(pFrameInfo->nWidth, pFrameInfo->nHeight), 8, 1);
        memcpy(pImg->imageData, pBuf, pFrameInfo->nWidth * pFrameInfo->nHeight);
#endif
        //printf("%d\n",end-start);

        // Deep-copy the pixels: pImg is static and is overwritten by the next frame,
        // so a Mat that merely wraps it must not be put into the shared list
        Mat frametemp(pImg, true);
        // cvShowImage("IPCamera",pImg);
        // cvWaitKey(1);

        EnterCriticalSection(&g_cs_frameList);
        g_frameList.push_back(frametemp);
        LeaveCriticalSection(&g_cs_frameList);

        // pImgYCrCb and pImg are static and reused across calls, so they are not released here

        // At this point pBuf holds the YV12 video data; it could be saved with fwrite(pBuf,nSize,1,Videofile);
        //fwrite(pBuf,nSize,1,fp);
    }
    /***************
    else if (lFrameType == T_AUDIO16)
    {
        // At this point pBuf holds audio data; it could be saved with fwrite(pBuf,nSize,1,Audiofile);
    }
    else
    {
    }
    *******************/
}
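To make the cvCvtColor step less of a black box, here is a per-pixel sketch of the YCrCb to RGB formulas given in the OpenCV documentation for 8-bit images (delta = 128). It is a floating-point illustration only: the library uses its own arithmetic, so results may differ by a rounding step. `Rgb`, `clampToByte` and `ycrcbToRgb` are names invented for this example.

```cpp
#include <algorithm>
#include <cmath>

struct Rgb { unsigned char r, g, b; };

// Round to nearest and clamp into the 0..255 range of an 8-bit channel.
static unsigned char clampToByte(double v)
{
    return static_cast<unsigned char>(std::min(255.0, std::max(0.0, std::floor(v + 0.5))));
}

// Convert one pixel; note the channel order Y, Cr, Cb.
Rgb ycrcbToRgb(unsigned char y, unsigned char cr, unsigned char cb)
{
    const double delta = 128.0;  // chroma offset for 8-bit images
    Rgb out;
    out.r = clampToByte(y + 1.403 * (cr - delta));
    out.g = clampToByte(y - 0.714 * (cr - delta) - 0.344 * (cb - delta));
    out.b = clampToByte(y + 1.773 * (cb - delta));
    return out;
}
```

The conversion expects Cr in the second channel and Cb in the third, whereas yv12toYUV writes U (Cb) second and V (Cr) third. With the formulas above, that means the red and blue outputs are roughly exchanged, which is worth keeping in mind if the colors look off when the frame is displayed.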

// Real-time stream callback
void CALLBACK fRealDataCallBack(LONG lRealHandle, DWORD dwDataType, BYTE *pBuffer, DWORD dwBufSize, void *pUser)
{
    DWORD dRet;

    switch (dwDataType)
    {
    case NET_DVR_SYSHEAD: // system header
        if (!PlayM4_GetPort(&nPort)) // get an unused port number from the player library
        {
            break;
        }
        if (dwBufSize > 0)
        {
            if (!PlayM4_OpenStream(nPort, pBuffer, dwBufSize, 1024*1024))
            {
                dRet = PlayM4_GetLastError(nPort);
                break;
            }
            // Set the decode callback: decode only, no display
            if (!PlayM4_SetDecCallBack(nPort, DecCBFun))
            {
                dRet = PlayM4_GetLastError(nPort);
                break;
            }
            // Set the decode callback: decode and display
            //if (!PlayM4_SetDecCallBackEx(nPort,DecCBFun,NULL,NULL))
            //{
            //    dRet=PlayM4_GetLastError(nPort);
            //    break;
            //}

            // Start video decoding
            if (!PlayM4_Play(nPort, hWnd))
            {
                dRet = PlayM4_GetLastError(nPort);
                break;
            }
            // Start audio decoding; requires a composite (video + audio) stream
            // if (!PlayM4_PlaySound(nPort))
            // {
            //     dRet=PlayM4_GetLastError(nPort);
            //     break;
            // }
        }
        break;

    case NET_DVR_STREAMDATA: // stream data
        if (dwBufSize > 0 && nPort != -1)
        {
            BOOL inData = PlayM4_InputData(nPort, pBuffer, dwBufSize);
            // Retry until the player accepts the data
            while (!inData)
            {
                OutputDebugStringW(L"PlayM4_InputData failed \n");
                Sleep(10);
                inData = PlayM4_InputData(nPort, pBuffer, dwBufSize);
            }
        }
        break;
    }
}
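The retry loop in the NET_DVR_STREAMDATA branch keeps calling PlayM4_InputData until it succeeds, so the callback never returns if the player never accepts the data. One possible alternative, sketched here in portable C++ rather than with the SDK, is to cap the number of attempts. `retryWithLimit` is a hypothetical helper and the attempt count and delay are arbitrary choices, not values recommended by Hikvision.

```cpp
#include <chrono>
#include <functional>
#include <thread>

// Call attempt() until it succeeds or maxAttempts is reached, sleeping between tries.
// Returns true on success, false when every attempt failed.
bool retryWithLimit(const std::function<bool()>& attempt, int maxAttempts, std::chrono::milliseconds delay)
{
    for (int i = 0; i < maxAttempts; ++i)
    {
        if (attempt())
            return true;
        // No need to wait after the final failed attempt.
        if (i + 1 < maxAttempts)
            std::this_thread::sleep_for(delay);
    }
    return false;
}
```

In the callback, the attempt would be a lambda wrapping the PlayM4_InputData call, and a false result would mean dropping that packet instead of blocking the stream thread indefinitely.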

// Exception callback
void CALLBACK g_ExceptionCallBack(DWORD dwType, LONG lUserID, LONG lHandle, void *pUser)
{
    switch (dwType)
    {
    case EXCEPTION_RECONNECT: // reconnecting during preview
        // time_t is 64-bit on x64, so print it with %lld
        printf("----------reconnect--------%lld\n", (long long)time(NULL));
        break;
    default:
        break;
    }
}

// Thread entry point: log in to the camera and start the stream
// (_beginthreadex expects the __stdcall calling convention)
unsigned __stdcall readCamera(void *param)
{
    //---------------------------------------
    // Initialize the SDK
    NET_DVR_Init();
    // Set the connection timeout and the reconnect interval
    NET_DVR_SetConnectTime(2000, 1);
    NET_DVR_SetReconnect(10000, true);

    //---------------------------------------
    // Get the console window handle
    //HMODULE hKernel32 = GetModuleHandle((LPCWSTR)"kernel32");
    //GetConsoleWindow = (PROCGETCONSOLEWINDOW)GetProcAddress(hKernel32,"GetConsoleWindow");

    //---------------------------------------
    // Register (log in to) the device
    LONG lUserID;
    NET_DVR_DEVICEINFO_V30 struDeviceInfo;
    lUserID = NET_DVR_Login_V30("192.168.2.64", 8000, "admin", "a123456789", &struDeviceInfo);
    if (lUserID < 0)
    {
        printf("Login error, %d\n", NET_DVR_GetLastError());
        NET_DVR_Cleanup();
        return -1;
    }

    //---------------------------------------
    // Set the exception message callback
    NET_DVR_SetExceptionCallBack_V30(0, NULL, g_ExceptionCallBack, NULL);

    //cvNamedWindow("IPCamera");
    //---------------------------------------
    // Start the preview and set the stream data callback
    NET_DVR_CLIENTINFO ClientInfo = {0};
    ClientInfo.lChannel = 1;        // device channel number
    ClientInfo.hPlayWnd = NULL;     // no window: the device SDK only fetches the stream and does not decode
    ClientInfo.lLinkMode = 0;       // main stream
    ClientInfo.sMultiCastIP = NULL;

    LONG lRealPlayHandle;
    lRealPlayHandle = NET_DVR_RealPlay_V30(lUserID, &ClientInfo, fRealDataCallBack, NULL, TRUE);
    if (lRealPlayHandle < 0)
    {
        printf("NET_DVR_RealPlay_V30 failed! Error number: %d\n", NET_DVR_GetLastError());
        // Undo the login and the SDK initialization before leaving
        NET_DVR_Logout(lUserID);
        NET_DVR_Cleanup();
        return 0;
    }

    //cvWaitKey(0);
    // Block this thread forever; frames keep arriving through the callbacks
    Sleep(INFINITE);
    //fclose(fp);

    //---------------------------------------
    // Stop the preview
    if (!NET_DVR_StopRealPlay(lRealPlayHandle))
    {
        printf("NET_DVR_StopRealPlay error! Error number: %d\n", NET_DVR_GetLastError());
        return 0;
    }
    // Log the user out
    NET_DVR_Logout(lUserID);
    NET_DVR_Cleanup();

    return 0;
}
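readCamera has several exit paths, and each one has to undo whatever was set up before it (SDK initialization, login, preview). A scope guard is one common way to keep such paired calls together. The sketch below is plain C++ with no SDK dependency; `ScopeGuard` is an illustrative class written for this article, not something provided by the Hikvision SDK.

```cpp
#include <functional>
#include <utility>

// Runs the stored cleanup action when the guard goes out of scope.
class ScopeGuard
{
public:
    explicit ScopeGuard(std::function<void()> cleanup) : cleanup_(std::move(cleanup)) {}
    ~ScopeGuard() { if (cleanup_) cleanup_(); }

    // Cancel the cleanup, for example when ownership is handed elsewhere.
    void dismiss() { cleanup_ = nullptr; }

private:
    ScopeGuard(const ScopeGuard&);             // non-copyable (declared, not defined)
    ScopeGuard& operator=(const ScopeGuard&);  // non-copyable (declared, not defined)
    std::function<void()> cleanup_;
};
```

Guards are destroyed in reverse order of construction, so a guard created after NET_DVR_Init that calls NET_DVR_Cleanup, followed by one created after login that calls NET_DVR_Logout, would log out first and clean up second on every return path.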


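The decoded frames end up in the list through g_frameList.push_back(frametemp);. The lock calls before and after it protect reads and writes of the frame list. This part you have to provide yourself, namely by defining the variables:

CRITICAL_SECTION g_cs_frameList;

std::list<Mat> g_frameList;

The calling code in the main function first creates a thread that runs the camera-reading function above, whose callbacks store the incoming frames in g_frameList. It then reads from that list and stores the frame in a Mat: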

int main()
{
    HANDLE hThread;
    unsigned threadID;
    Mat frame1;

    InitializeCriticalSection(&g_cs_frameList);
    hThread = (HANDLE)_beginthreadex( NULL, 0, &readCamera, NULL, 0, &threadID );

    ...

    EnterCriticalSection(&g_cs_frameList);
    if (!g_frameList.empty())
    {
        // Copy the newest frame so that frame1 owns its pixels once the lock is released
        g_frameList.back().copyTo(frame1);
        //imshow("frame from camera",frame1);
    }
    g_frameList.clear(); // discard the older frames
    LeaveCriticalSection(&g_cs_frameList);

    ...

    return 0;
}
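The pattern in main (lock, take the newest frame, clear the rest, unlock) can also be wrapped in a small class so that the producer and consumer cannot forget the lock. The following is a minimal sketch using std::mutex instead of CRITICAL_SECTION, with a capacity limit added as an assumption so the list cannot grow without bound when the consumer is slow. `LatestFrameQueue` is a name invented here.

```cpp
#include <cstddef>
#include <deque>
#include <mutex>

// Thread-safe queue that keeps at most `capacity` items and hands out the newest one.
template <typename Frame>
class LatestFrameQueue
{
public:
    explicit LatestFrameQueue(std::size_t capacity) : capacity_(capacity) {}

    // Producer side: append a frame, dropping the oldest one when full.
    void push(const Frame& frame)
    {
        std::lock_guard<std::mutex> lock(mutex_);
        if (capacity_ == 0)
            return;
        if (frames_.size() == capacity_)
            frames_.pop_front();
        frames_.push_back(frame);
    }

    // Consumer side: copy out the newest frame and discard the rest.
    // Returns false when nothing has arrived since the last call.
    bool takeLatest(Frame& out)
    {
        std::lock_guard<std::mutex> lock(mutex_);
        if (frames_.empty())
            return false;
        out = frames_.back();
        frames_.clear();
        return true;
    }

    std::size_t size() const
    {
        std::lock_guard<std::mutex> lock(mutex_);
        return frames_.size();
    }

private:
    const std::size_t capacity_;
    mutable std::mutex mutex_;
    std::deque<Frame> frames_;
};
```

If Frame is cv::Mat, plain assignment only copies the header and shares the pixel buffer, so the frames pushed into the queue should already own their data (for example via clone()).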
