【深度学习框架Keras】NLP中的n-gram、one-hot与word-embeddings

说明:

主要参考Francois Chollet《Deep Learning with Python》;

代码运行环境为kaggle中的kernels;

数据集IMDB、IMBD RAW以及GloVe需要手动添加

# This Python 3 environment comes with many helpful analytics libraries installed

# It is defined by the kaggle/python docker image: https://github.com/kaggle/docker-python

# For example, here's several helpful packages to load in

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the "../input/" directory.

# For example, running this (by clicking run or pressing Shift+Enter) will list the files in the input directory

import os

print(os.listdir("../input"))

# Any results you write to the current directory are saved as output.

['glove-global-vectors-for-word-representation', 'imdb-raw', 'keras-imdb']

一、介绍

1.处理文本时,可以将文本看做是单词序列。

2.深度学习在处理文本时,不是直接处理字符而是处理数值型的tensor,因此文本需要进行矢量化。

3.在矢量化文本时,可以先对文本分词然后转换为向量,也可以直接将文本分成字符然后转换为向量。

4.不论是将文本分成“单词序列”还是“字符序列”,都可以统一看做将文本分成token,所以将文本分成tokens的操作称为tokenization。

二.n-gram

n-gram就是从句子中提取n个连续单词的组。例如对于句子"The cat sat on the mat"。如果按2-grams分解句子将得到:

-

{“The”,“The cat”,“cat”,“cat sat”,“sat”,“sat on”,“on”,“on the”,“the”,“the mat”,“mat”}

如果按3-grams分解句子将得到:

-

{“The”,“The cat”,“cat”,“cat sat”,“The cat sat”,“sat”,“sat on”,“on”,“cat sat on”,“on the”,“the”,“sat on the”,“the mat”,“mat”,“on the mat”}

提取n-grams可以看成是一种特征工程,在深度学习中神经网络可以自动提取这种特征,因此不需要提取n-grams,但是如果使用逻辑回归或者随机森林等方法时,n-gram是一种强大的特征工程方法。

三、文本的one-hot编码

one-hot编码最常见、最基本的方法将tokens转换为vector。每一个单词对应则一个唯一的整数索引值,当这个句子包含该词是,对应位置为1。one-hot编码也可以应用到字符级别****

1.单词的one-hot编码

import numpy as np

sentences =['The cat sat on the mat.','The dog ate my homework.']

token_index = {} # key是单词,value是序号(或称index)

# 提取sentences中的单词(token),并为其赋予序号

for sentence in sentences:

for word in sentence.split():

if word not in token_index:

token_index[word] = len(token_index)+1 # 当前最大的序号+1

max_length = 10 # 每个sentence只考虑前10个单词

# results的三个维度(句子,句子中的单词数,每个单词对应的向量的长度)

results = np.zeros(shape=(len(sentences),

max_length,

max(token_index.values())+1))

for i,sentences in enumerate(sentences):

for j,word in list(enumerate(sentences.split()))[:max_length]:

index = token_index.get(word)

results[i,j,index] = 1. # i是当前句子的序号,j是当前单词的序号

2.字符的one-hot编码

import string

sentences =['The cat sat on the mat.','The dog ate my homework.']

characters = string.printable # 所有可以打印的字符

# range(1,len(characters)+1)是characters中字符由1开始的编号

token_index = dict(zip([character for character in characters],# 将字符拆分并保存到list中

range(1,len(characters)+1) # 每个字符对应一个编号

))

max_length = 50

results = np.zeros((len(sentences),max_length,max(token_index.values())+1))

for i,sentence in enumerate(sentences):

for j,character in enumerate(sentence):

index = token_index.get(character)

results[i,j,index] = 1.

3.使用Keras实现单词的one-hot编码

from keras.preprocessing.text import Tokenizer

sentences =['The cat sat on the mat.','The dog ate my homework.']

tokenizer = Tokenizer(num_words=1000) # 仅考虑最常见的1000个单词

tokenizer.fit_on_texts(sentences) # 构建词汇表

# 将句子转换为整数索引列表

sequences = tokenizer.texts_to_sequences(sentences)

print('sequences:',sequences)

# 将句子转换为one-hot编码

one_hot_results = tokenizer.texts_to_matrix(sentences,mode='binary')

word_index = tokenizer.word_index

print('word_index:',word_index) # sentences中出现的所有单词以及其索引

print('Found %s unique tokens.'%len(word_index))

sequences: [[1, 2, 3, 4, 1, 5], [1, 6, 7, 8, 9]]

word_index: {'the': 1, 'cat': 2, 'sat': 3, 'on': 4, 'mat': 5, 'dog': 6, 'ate': 7, 'my': 8, 'homework': 9}

Found 9 unique tokens.

4.使用Keras实现“应用散列技巧的单词one-hot编码”

由于使用一般的one-hot编码需要保存token及其索引,如果token很长那么会需要很多存储空间,那么可以只保存token的hash值

sentences =['The cat sat on the mat.','The dog ate my homework.']

dimensionality = 1000 # hash表的长度

max_length = 10

results = np.zeros((len(sentences),max_length,dimensionality))

for i,sentence in enumerate(sentences):

for j,word in list(enumerate(sentence.split()))[:max_length]:

index = abs(hash(word)) % dimensionality # word的hash值

results[i,j,index] = 1.

四、word embeddings

1.one-hot与word embeddings的对比

one-hot编码产生的词向量是稀疏的、高维的、二值的;

word embeddings是稠密的、低维的、从数据中学习到的;

使用one-hot编码的词向量不包含语义信息,例如两个语义上相关的词,其对应的词向量距离应该相近,但是one-hot编码的词向量所有距离均相同。

使用word embeddings生成的词向量含有语义关系。两个词向量的距离度量了词的相似度,两个词向量相加的值在语义上也是相加的,例如"female"+"king"生成的词向量与"queen"的词向量非常相似。

2.获得word embeddings的两种方式

方法一:从当前的任务中学习到word embeddings;

方法二:使用预训练的word embeddings

这两种方法将会在下面进行介绍

3.使用embedding层学习word embeddings

from keras.datasets import imdb

from keras import preprocessing

from keras.layers import Embedding

from keras.models import Sequential

from keras.layers import Flatten,Dense

max_features = 10000

maxlen = 20

# x_train与x_test分别包含25000条评论,y_train和y_test是对应的label,其取值为0或1

(x_train,y_train),(x_test,y_test) = imdb.load_data(path='/kaggle/input/keras-imdb/imdb.npz',

num_words=max_features)

# 由于每条评论的单词数不相同,通过pad_sequences处理后每条评论的单词数均为maxlen

# 如果评论的单词数超过maxlen,则只取前maxlen个单词

# 如果评论的单词数小于maxlen则填充0到长度为maxlen

x_train = preprocessing.sequence.pad_sequences(x_train,maxlen=maxlen)

x_test = preprocessing.sequence.pad_sequences(x_test,maxlen=maxlen)

model = Sequential()

# Embedding层的输入是一个二维的tensor,其维度为(batch_size,每个单词转换成vector的长度)

# 该层每次输入10000条评论,每条评论的长度由input_length指定,转换为词向量的维度为8

# 该层的输出为(batch_size,maxlen,embedding_dimensionality)=(10000,20,8)

model.add(Embedding(10000,8,input_length=maxlen))

# 将3维tensor转换为2维tensor,即(batch_size,maxlen*embedding_dimensionality)=(10000,20*8)

model.add(Flatten())

model.add(Dense(1,activation='sigmoid'))

model.compile(optimizer='rmsprop',loss='binary_crossentropy',metrics=['acc'])

history = model.fit(x_train,y_train,

epochs=10,

batch_size=32,

validation_split=0.2)

Train on 20000 samples, validate on 5000 samples

Epoch 1/10

20000/20000 [==============================] - 2s 88us/step - loss: 0.6759 - acc: 0.6050 - val_loss: 0.6398 - val_acc: 0.6814

...

Epoch 10/10

20000/20000 [==============================] - 1s 46us/step - loss: 0.2839 - acc: 0.8860 - val_loss: 0.5303 - val_acc: 0.7466

4.使用预训练的word embeddings

如果样本较少,可能无法通过自己训练来获得对应的word embedding,此时就可以使用其他人预训练好的word embedding。

有需要其他人预先计算好的wrod embedding,你可以使用Keras Embedding层来获得和使用。

word2vec和GloVe也是经常使用。

五、使用GloVe完成对IMDB原始文本的分类

1.加载数据

imdb_dir = '../input/imdb-raw/aclimdb/aclImdb'

train_dir = os.path.join(imdb_dir,'test')

texts = [] # 所有的评论(文本)

labels = [] # 评论对应的类别

for label_type in ['neg','pos']:

dir_name = os.path.join(train_dir,label_type)

for fname in os.listdir(dir_name):

if(fname[-4:]=='.txt'):

f = open(os.path.join(dir_name,fname))

texts.append(f.read())

f.close()

if(label_type=='neg'):

labels.append(0)

else:

labels.append(1)

2.token化原始的IMDB数据并划分数据集

from keras.preprocessing.text import Tokenizer

from keras.preprocessing.sequence import pad_sequences

import numpy as np

maxlen = 100 # 每个句子中的单词数

training_samples = 200 # 训练样本数

validation_samples = 10000 # 验证样本数

max_words = 10000 # 只统计所有文本中出现最频繁的10000个词

tokenizer = Tokenizer(num_words=max_words)

tokenizer.fit_on_texts(texts)

# 将文本转换为整数序列

sequences = tokenizer.texts_to_sequences(texts)

word_index = tokenizer.word_index

print('Found %s unique tokens.'%len(word_index))

data = pad_sequences(sequences,maxlen=maxlen) # 固定每个矩阵的长度

labels = np.asarray(labels)

print('Shape of data tensor:',data.shape)

print('Shape of label tensor:',labels.shape)

# 对数据进行shuffle

indices = np.arange(data.shape[0])

np.random.shuffle(indices)

data = data[indices]

labels = labels[indices]

# 划分训练集和验证集

x_train = data[:training_samples]

y_train = labels[:training_samples]

x_val = data[training_samples:training_samples+validation_samples]

y_val = labels[training_samples:training_samples+validation_samples]

Found 72633 unique tokens.

Shape of data tensor: (17243, 100)

Shape of label tensor: (17243,)

3.解析GloVe的word embedding文件

glove_dir = '../input/glove-global-vectors-for-word-representation'

# key为word,value为对应的vector

embeddings_index = {}

f = open(os.path.join(glove_dir,'glove.6B.100d.txt'))

for line in f:

values = line.split()

word = values[0]

coefs = np.asarray(values[1:],dtype='float32')

embeddings_index[word] = coefs

f.close()

print('Found %s word vectors.'%len(embeddings_index))

Found 400000 word vectors.

4.构造GloVe的word embeddings矩阵

embedding_dim = 100 # 词向量的维度

# max_words=10000个词,每个词对应维度为embedding_dim=100的词向量

embedding_matrix = np.zeros((max_words,embedding_dim))

# word_index中保存了在IMDB中出现的所有单词以及其索引

for word,i in word_index.items():

if(i<max_words):

# 获得word所对应的embedding_vector

embedding_vector = embeddings_index.get(word)

if(embedding_vector is not None):

embedding_matrix[i] = embedding_vector

5.定义一个模型

from keras.models import Sequential

from keras.layers import Embedding,Flatten,Dense

model = Sequential()

model.add(Embedding(max_words,embedding_dim,input_length=maxlen))

model.add(Flatten())

model.add(Dense(32,activation='relu'))

model.add(Dense(1,activation='sigmoid'))

6.将GloVe的Embedding加载到模型中

直接将embedding_matrix作为Embedding层的权重

model.layers[0].set_weights([embedding_matrix])

model.layers[0].trainable = False # 设置为不可训练

7.训练和评估模型

model.compile(optimizer='rmsprop',

loss='binary_crossentropy',

metrics=['acc'])

history = model.fit(x_train,y_train,

epochs=10,

batch_size=32,

validation_data=(x_val,y_val))

model.save_weights('pre_trained_glove_model.h5')

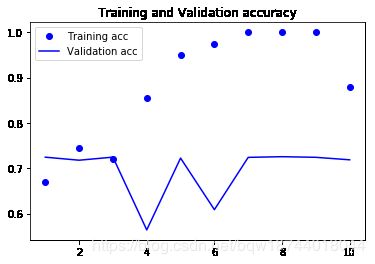

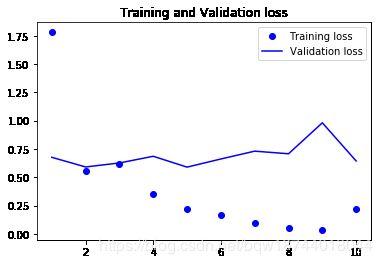

Train on 200 samples, validate on 10000 samples

Epoch 1/10

200/200 [==============================] - 1s 6ms/step - loss: 1.7811 - acc: 0.6700 - val_loss: 0.6761 - val_acc: 0.7243

...

Epoch 10/10

200/200 [==============================] - 0s 2ms/step - loss: 0.2154 - acc: 0.8800 - val_loss: 0.6436 - val_acc: 0.7185

8.绘制结果

import matplotlib.pyplot as plt

%matplotlib inline

def plot_curve(history):

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(1,len(acc)+1)

plt.plot(epochs,acc,'bo',label='Training acc')

plt.plot(epochs,val_acc,'b',label='Validation acc')

plt.title('Training and Validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs,loss,'bo',label='Training loss')

plt.plot(epochs,val_loss,'b',label='Validation loss')

plt.title('Training and Validation loss')

plt.legend()

plot_curve(history)

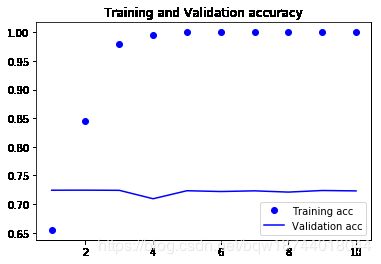

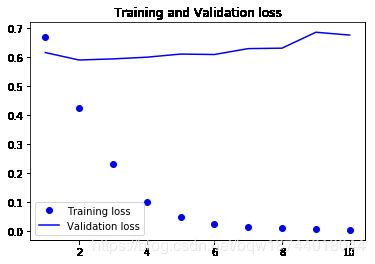

9.不使用预训练获得的word embedding

from keras.models import Sequential

from keras.layers import Embedding,Flatten,Dense

model = Sequential()

model.add(Embedding(max_words,embedding_dim,input_length=maxlen))

model.add(Flatten())

model.add(Dense(32,activation='relu'))

model.add(Dense(1,activation='sigmoid'))

model.compile(optimizer='rmsprop',

loss='binary_crossentropy',

metrics=['acc'])

history = model.fit(x_train,y_train,

epochs=10,

batch_size=32,

validation_data=(x_val,y_val))

model.save_weights('pre_trained_glove_model.h5')

plot_curve(history)

Train on 200 samples, validate on 10000 samples

Epoch 1/10

200/200 [==============================] - 1s 7ms/step - loss: 0.6689 - acc: 0.6550 - val_loss: 0.6147 - val_acc: 0.7243

...

Epoch 10/10

200/200 [==============================] - 1s 3ms/step - loss: 0.0029 - acc: 1.0000 - val_loss: 0.6750 - val_acc: 0.7231