【Keras-ResNeXt】CIFAR-10

系列连载目录

- 请查看博客 《Paper》 4.1 小节 【Keras】Classification in CIFAR-10 系列连载

学习借鉴

- github:BIGBALLON/cifar-10-cnn

- 知乎专栏:写给妹子的深度学习教程

- Caffe 版代码:https://github.com/soeaver/caffe-model/tree/master/cls/resnext

- Keras 版代码:https://github.com/titu1994/Keras-ResNeXt

参考

- 【Keras-CNN】CIFAR-10

- 本地远程访问Ubuntu16.04.3服务器上的TensorBoard

- caffe代码可视化工具

硬件

- GTX 1080 Ti

文章目录

- 1 理论基础

- 2 ResNeXt 代码实现

- 2.1 my_resnext

- 2.2 my_renext_lr

- 2.3 my_resnext_lr_r

1 理论基础

【ResNext】《Aggregated Residual Transformations for Deep Neural Networks》(CVPR-2017)

2 ResNeXt 代码实现

2.1 my_resnext

在 Keras 版代码 的基础上修改了一份自己的代码

1)导入库,设置好超参数

import os

os.environ["CUDA_DEVICE_ORDER"]="PCI_BUS_ID"

os.environ["CUDA_VISIBLE_DEVICES"]="1"

import keras

import numpy as np

import math

from keras.datasets import cifar10

from keras.layers import Conv2D, MaxPooling2D, AveragePooling2D, ZeroPadding2D, GlobalAveragePooling2D

from keras.layers import Flatten, Dense, Dropout,BatchNormalization,Activation, Convolution2D, add

from keras.models import Model

from keras.layers import Input, concatenate,Lambda

from keras import optimizers

from keras.regularizers import l2

from keras.preprocessing.image import ImageDataGenerator

from keras.initializers import he_normal

from keras.callbacks import LearningRateScheduler, TensorBoard, ModelCheckpoint

from keras import backend as K

num_classes = 10

batch_size = 64 # 64 or 32 or other

epochs = 300

iterations = 782

USE_BN=True

DROPOUT=0.2 # keep 80%

CONCAT_AXIS=3

weight_decay=5e-4

DATA_FORMAT='channels_last' # Theano:'channels_first' Tensorflow:'channels_last'

log_filepath = './my_resnext'

2)数据预处理并设置 learning schedule

def color_preprocessing(x_train,x_test):

x_train = x_train.astype('float32')

x_test = x_test.astype('float32')

mean = [125.307, 122.95, 113.865]

std = [62.9932, 62.0887, 66.7048]

for i in range(3):

x_train[:,:,:,i] = (x_train[:,:,:,i] - mean[i]) / std[i]

x_test[:,:,:,i] = (x_test[:,:,:,i] - mean[i]) / std[i]

return x_train, x_test

def scheduler(epoch):

if epoch < 100:

return 0.01

if epoch < 200:

return 0.001

return 0.0001

# load data

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

y_train = keras.utils.to_categorical(y_train, num_classes)

y_test = keras.utils.to_categorical(y_test, num_classes)

x_train, x_test = color_preprocessing(x_train, x_test)

3)定义网络结构

- group convolution

def grouped_convolution_block(init, grouped_channels,cardinality, strides):

# grouped_channels 每组的通道数

# cardinality 多少组

channel_axis = -1

group_list = []

for c in range(cardinality):

x = Lambda(lambda z: z[:, :, :, c * grouped_channels:(c + 1) * grouped_channels])(init)

x = Conv2D(grouped_channels, (3,3), padding='same', use_bias=False, strides=(strides, strides),

kernel_initializer='he_normal', kernel_regularizer=l2(weight_decay))(x)

group_list.append(x)

group_merge = concatenate(group_list, axis=channel_axis)

x = BatchNormalization()(group_merge)

x = Activation('relu')(x)

return x

- bottleneck block

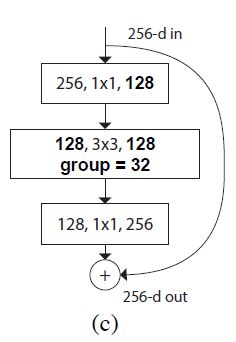

3层,前两层的 channels 一样,后面 double,第一、三层 1*1,第二层组卷积。如果 resolution 不变,identity,如果 resolution 变小,conv(stride = 2)

def block_module(x,filters,cardinality,strides):

# residual connection

init = x

grouped_channels = int(filters / cardinality)

# 如果没有down sampling就不需要这种操作

if init._keras_shape[-1] != 2 * filters:

init = Conv2D(filters * 2, (1, 1), padding='same', strides=(strides, strides),

use_bias=False, kernel_initializer='he_normal', kernel_regularizer=l2(weight_decay))(init)

init = BatchNormalization()(init)

# conv1

x = Conv2D(filters, (1, 1), padding='same', use_bias=False,

kernel_initializer='he_normal', kernel_regularizer=l2(weight_decay))(x)

x = BatchNormalization()(x)

x = Activation('relu')(x)

# conv2(group),选择在 group 的时候 down sampling

x = grouped_convolution_block(x,grouped_channels,cardinality,strides)

# conv3

x = Conv2D(filters * 2, (1,1), padding='same', use_bias=False, kernel_initializer='he_normal',

kernel_regularizer=l2(weight_decay))(x)

x = BatchNormalization()(x)

x = add([init, x])

x = Activation('relu')(x)

return x

4)搭建网络

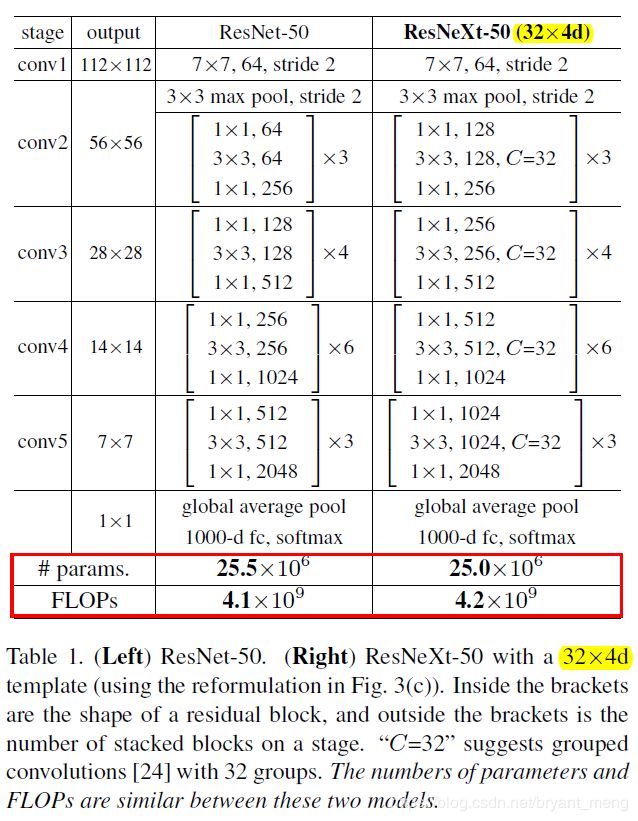

用 3)中设计好的模块来搭建网络,整体 architecture 如下:

down sampling 只会出现在每个 stage 的第一个 bottleneck block 里,刚开始的两次 down sampling 用 一个 Conv2D(64, (3, 3)……)代替,请查看博客 《Paper》 4.1 小节 【Keras】Classification in CIFAR-10 系列连载

def resnext(img_input,nb_classes):

# first layer

x = Conv2D(64, (3, 3), padding='same', use_bias=False, kernel_initializer='he_normal',

kernel_regularizer=l2(weight_decay))(img_input)

x = BatchNormalization()(x)

x = Activation('relu')(x)

# block moduel set1

for _ in range(3):

x = block_module(x,128,8,1)

# block moduel set2, we downsampling in the first block module in sets

x = block_module(x,256,8,2)

for _ in range(2):

x = block_module(x,256,8,1)

# block moduel set3, we downsampling in the first block module in sets

x = block_module(x,512,8,2)

for _ in range(2):

x = block_module(x,512,8,1)

x = GlobalAveragePooling2D()(x)

x = Dense(nb_classes, use_bias=False, kernel_regularizer=l2(weight_decay),

kernel_initializer='he_normal', activation='softmax')(x)

return x

5)生成模型

img_input=Input(shape=(32,32,3))

output = resnext(img_input,num_classes)

model = Model(img_input,output)

model.summary()

Total params: 5,671,872

Trainable params: 5,646,656

Non-trainable params: 25,216

6)开始训练

# set optimizer

sgd = optimizers.SGD(lr=.1, momentum=0.9, nesterov=True)

model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

# set callback

tb_cb = TensorBoard(log_dir=log_filepath, histogram_freq=0)

change_lr = LearningRateScheduler(scheduler)

cbks = [change_lr,tb_cb]

# set data augmentation

datagen = ImageDataGenerator(horizontal_flip=True,

width_shift_range=0.125,

height_shift_range=0.125,

fill_mode='constant',cval=0.)

datagen.fit(x_train)

# start training

model.fit_generator(datagen.flow(x_train, y_train,batch_size=batch_size),

steps_per_epoch=iterations,

epochs=epochs,

callbacks=cbks,

validation_data=(x_test, y_test))

model.save('my_resnext.h5')

7)结果分析

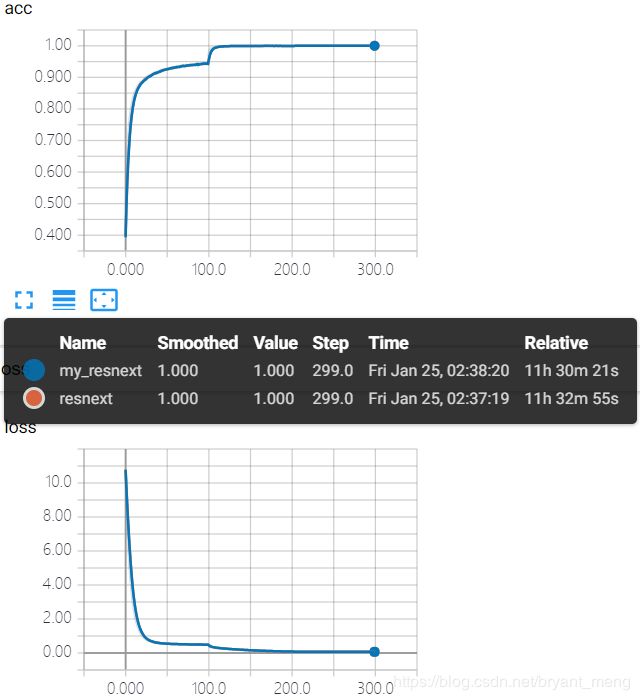

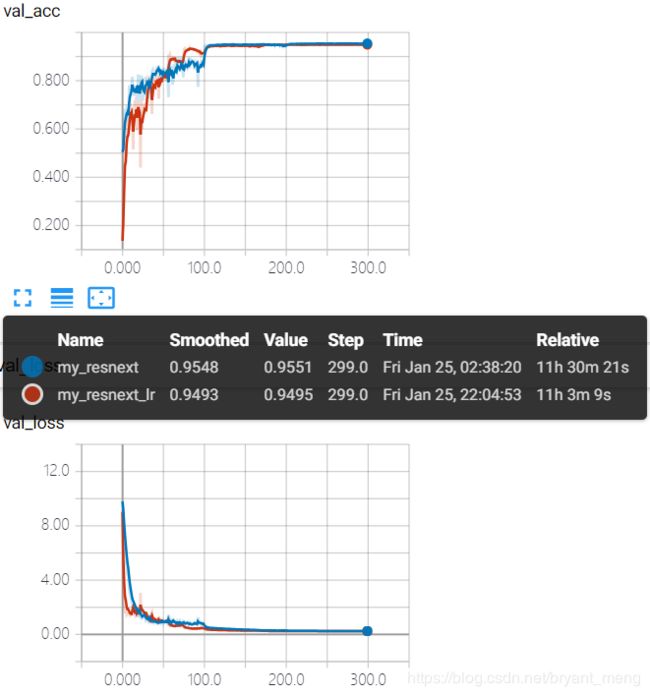

my_resnext 是上述的代码,resnext 是 https://github.com/titu1994/Keras-ResNeXt 的代码(hyper parameters,数据预处理,梯度更新策略都同my_resnext)

training accuracy 和 training loss

test accuracy 和 test loss

恐怖如斯,轻轻松松上 95%

2.2 my_renext_lr

一直没有调整 learning schedule,这次来规范一下,修改如下:

删除

def scheduler(epoch):

if epoch < 100:

return 0.01

if epoch < 200:

return 0.001

return 0.0001

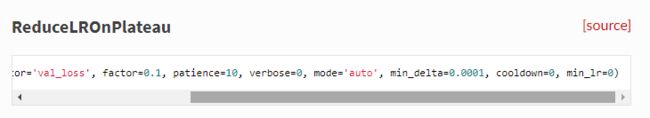

修改 LearningRateScheduler(scheduler) 为 ReduceLROnPlateau

from keras.callbacks import ReduceLROnPlateau

# set callback

tb_cb = TensorBoard(log_dir=log_filepath, histogram_freq=0)

lr_reducer = ReduceLROnPlateau(monitor='val_loss', factor=np.sqrt(0.1),

cooldown=0, patience=10, min_lr=1e-6)

cbks = [lr_reducer,tb_cb]

patience:当patience个epoch过去而模型性能不提升时,学习率减少的动作会被触发

cooldown:学习率减少后,会经过cooldown个epoch才重新进行正常操作

factor:每次减少学习率的因子,学习率将以 lr = lr*factor 的形式被减少

其他代码同 my_renext

参数量如下(不变):

Total params: 5,671,872

Trainable params: 5,646,656

Non-trainable params: 25,216

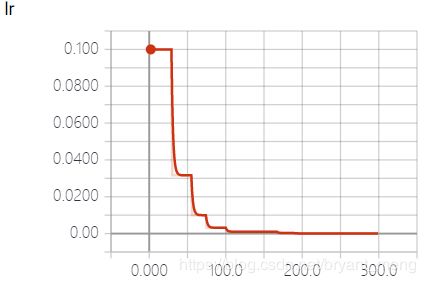

结果分析如下:

test accuracy 和 test loss

学习率在变化,最终效果并没有手动设计的好

结合下面这个图来调调参数了

https://keras-cn.readthedocs.io/en/latest/legacy/other/callbacks/#reducelronplateau

新增 epsilon=0.001,patience=5

lr_reducer = ReduceLROnPlateau(monitor='val_loss', factor=np.sqrt(0.1),

epsilon=0.001,cooldown=0, patience=5, min_lr=1e-6)

会报 warning ,epsilon 没用,查了下正版的,替换成了 min_delta 变量

修改了 sgd 的初始 learning rate

sgd = optimizers.SGD(lr=0.01, momentum=0.9, nesterov=True)

2.3 my_resnext_lr_r

在 2.2 my_resnext_lr 的基础下新增下数据增强的策略 rotation_range=15(加入旋转)

datagen = ImageDataGenerator(horizontal_flip=True,

width_shift_range=0.125,

height_shift_range=0.125,

rotation_range=15,

fill_mode='constant',cval=0.)

其它代码同 my_resnext_lr

参数量如下(不变):

Total params: 5,671,872

Trainable params: 5,646,656

Non-trainable params: 25,216