The dice problem

Suppose I have a box of dice that contains a 4-sided die, a 6-sided die, an 8-sided die, a 12-sided die, and a 20-sided die. If you have ever played Dungeons & Dragons, you know what I am talking about.

一个箱子里面有四边骰子,六边的骰子,八边的骰子,十二边的骰子,二十边的骰子。

Suppose I select a die from the box at random, roll it, and get a 6. What is the probability that I rolled each die?

如果随机选择一个骰子,投掷出来是6点,那么我们是投掷哪个骰子的可能性是多少?

Let me suggest a three-step strategy for approaching a problem like this.

- Choose a representation for the hypotheses.

- Choose a representation for the data.

- Write the likelihood function.

处理问题的三步骤:选择一个假说的代表,选择一个数据的代表,写好可能性函数。

In previous examples I used strings to represent hypotheses and data, but for the die problem I’ll use numbers. Specifically, I’ll use the integers 4, 6, 8, 12, and 20 to represent hypotheses:

suite = Dice([4, 6, 8, 12, 20])

And integers from 1 to 20 for the data. These representations make it easy to write the likelihood function:

class Dice(Suite):

def Likelihood(self, data, hypo):

if hypo < data:

return 0

else:

return 1.0/hypo

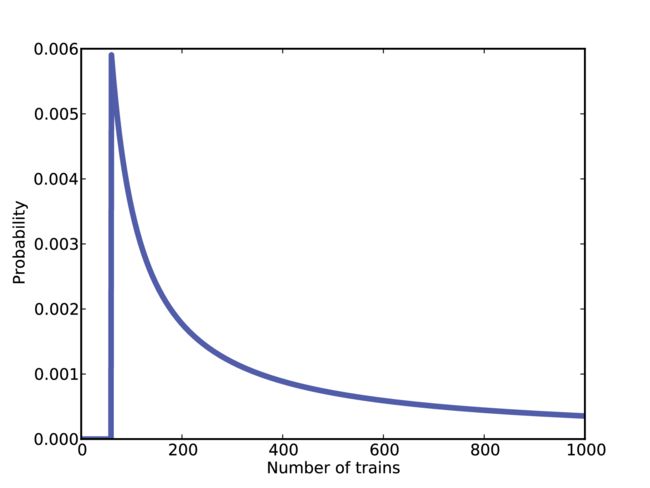

Here’s how Likelihood works. If hypo Otherwise the question is, “Given that there are hypo sides, what is the chance of rolling data?” The answer is 1/hypo, regardless of data. Here is the statement that does the update (if I roll a 6): And here is the posterior distribution: After we roll a 6, the probability for the 4-sided die is 0. The most likely alternative is the 6-sided die, but there is still almost a 12% chance for the 20-sided die. What if we roll a few more times and get 6, 8, 7, 7, 5, and 4? With this data the 6-sided die is eliminated, and the 8-sided die seems quite likely. Here are the results: Now the probability is 94% that we are rolling the 8-sided die, and less than 1% for the 20-sided die. The dice problem is based on an example I saw in Sanjoy Mahajan’s class on Bayesian inference. You can download the code in this section from http://thinkbayes.com/dice.py. For more information see “Working with the code” on page xi. I found the locomotive problem in Frederick Mosteller’s, Fifty Challenging Problems in Probability with Solutions (Dover, 1987): “A railroad numbers its locomotives in order 1..N. One day you see a locomotive with the number 60. Estimate how many locomotives the railroad has.” 就是在火车站的火车是按照顺序排列的,从1到N,一天你看到一个火车上标写的是60号,评估一下有多少火车在车站里。 Based on this observation, we know the railroad has 60 or more locomotives. But how many more? To apply Bayesian reasoning, we can break this problem into two steps: 基于观察,我们可以知道火车站至少有60辆火车。但是具体是多少呢?医用贝叶斯推理,我们可以将问题分拆到两个步骤,第一个是在我们看到新数据之前,我们对火车数量有多少了解呢?第二点是对任何一个新给定的值N,看到数据的可能性是多少呢? The answer to the first question is the prior. The answer to the second is the likelihood. We don’t have much basis to choose a prior, but we can start with something simple and then consider alternatives. Let’s assume that N is equally likely to be any value from 1 to 1000. Now all we need is a likelihood function. In a hypothetical fleet of N locomotives, what is the probability that we would see number 60? If we assume that there is only one train-operating company (or only one we care about) and that we are equally likely to see any of its locomotives, then the chance of seeing any particular locomotive is 1 /N. Here’s the likelihood function: This might look familiar; the likelihood functions for the locomotive problem and the dice problem are identical. Here’s the update: There are too many hypotheses to print, so I plotted the results in Figure 3-1. Not surprisingly, all values of N below 60 have been eliminated. The most likely value, if you had to guess, is 60. That might not seem like a very good guess; after all, what are the chances that you just happened to see the train with the highest number? Nevertheless, if you want to maximize the chance of getting the answer exactly right, you should guess 60. But maybe that’s not the right goal. An alternative is to compute the mean of the posterior distribution: Or you could use the very similar method provided by Pmf: The mean of the posterior is 333, so that might be a good guess if you wanted to minimize error. If you played this guessing game over and over, using the mean of the posterior as your estimate would minimize the mean squared error over the long run (see http://en.wikipedia.org/wiki/Minimum_mean_square_error). 修正后的概率的平均值为333. You can download this example from http://thinkbayes.com/train.py. For more information see “Working with the code” on page xi. To make any progress on the locomotive problem we had to make assumptions, and some of them were pretty arbitrary. In particular, we chose a uniform prior from 1 to 1000, without much justification for choosing 1000, or for choosing a uniform distribution. It is not crazy to believe that a railroad company might operate 1000 locomotives, but a reasonable person might guess more or fewer. So we might wonder whether the posterior distribution is sensitive to these assumptions. With so little data—only one observation— it probably is. Recall that with a uniform prior from 1 to 1000, the mean of the posterior is 333. With an upper bound of 500, we get a posterior mean of 207, and with an upper bound of 2000, the posterior mean is 552. So that’s bad. There are two ways to proceed: 两个方法可以继续:一个是获得更多的数据,获得更多的背景信息。 With more data, posterior distributions based on different priors tend to converge. For example, suppose that in addition to train 60 we also see trains 30 and 90. We can update the distribution like this: With these data, the means of the posteriors are So the differences are smaller. If more data are not available, another option is to improve the priors by gathering more background information. It is probably not reasonable to assume that a train-operating company with 1000 locomotives is just as likely as a company with only 1. With some effort, we could probably find a list of companies that operate locomotives in the area of observation. Or we could interview an expert in rail shipping to gather information about the typical size of companies. But even without getting into the specifics of railroad economics, we can make some educated guesses. In most fields, there are many small companies, fewer medium-sized companies, and only one or two very large companies. In fact, the distribution of company sizes tends to follow a power law, as Robert Axtell reports in Science (see http:// This law suggests that if there are 1000 companies with fewer than 10 locomotives, there might be 100 companies with 100 locomotives, 10 companies with 1000, and possibly one company with 10,000 locomotives. Mathematically, a power law means that the number of companies with a given size is inversely proportional to size, or where PMF x is the probability mass function of x and α is a parameter that is often near 1. We can construct a power law prior like this: And here’s the code that constructs the prior: Again, the upper bound is arbitrary, but with a power law prior, the posterior is less sensitive to this choice. Figure 3-2 shows the new posterior based on the power law, compared to the posterior based on the uniform prior. Using the background information represented in the power law prior, we can all but eliminate values of N greater than 700. If we start with this prior and observe trains 30, 60, and 90, the means of the posteriors are: Now the differences are much smaller. In fact, with an arbitrarily large upper bound, the mean converges on 134. So the power law prior is more realistic, because it is based on general information about the size of companies, and it behaves better in practice. You can download the examples in this section from http://thinkbayes.com/train3.py. For more information see “Working with the code” on page xi. Once you have computed a posterior distribution, it is often useful to summarize the results with a single point estimate or an interval. For point estimates it is common to use the mean, median, or the value with maximum likelihood. For intervals we usually report two values computed so that there is a 90% chance that the unknown value falls between them (or any other probability). These values define a credible interval. A simple way to compute a credible interval is to add up the probabilities in the posterior distribution and record the values that correspond to probabilities 5% and 95%. In other words, the 5th and 95th percentiles. thinkbayes provides a function that computes percentiles: And here’s the code that uses it: For the previous example—the locomotive problem with a power law prior and three trains—the 90% credible interval is 91,243 . The width of this range suggests, correctly, that we are still quite uncertain about how many locomotives there are. In the previous section we computed percentiles by iterating through the values and probabilities in a Pmf. If we need to compute more than a few percentiles, it is more efficient to use a cumulative distribution function, or Cdf. Cdfs and Pmfs are equivalent in the sense that they contain the same information about the distribution, and you can always convert from one to the other. The advantage of the Cdf is that you can compute percentiles more efficiently. thinkbayes provides a Cdf class that represents a cumulative distribution function. Pmf provides a method that makes the corresponding Cdf: And Cdf provides a function named Percentile Converting from a Pmf to a Cdf takes time proportional to the number of values, len(pmf). The Cdf stores the values and probabilities in sorted lists, so looking up a probability to get the corresponding value takes “log time”: that is, time proportional to the logarithm of the number of values. Looking up a value to get the corresponding probability is also logarithmic, so Cdfs are efficient for many calculations. The examples in this section are in http://thinkbayes.com/train3.py. For more information see “Working with the code” on page xi. During World War II, the Economic Warfare Division of the American Embassy in London used statistical analysis to estimate German production of tanks and other equipment. The Western Allies had captured log books, inventories, and repair records that included chassis and engine serial numbers for individual tanks. Analysis of these records indicated that serial numbers were allocated by manufacturer and tank type in blocks of 100 numbers, that numbers in each block were used sequentially, and that not all numbers in each block were used. So the problem of estimating German tank production could be reduced, within each block of 100 numbers, to a form of the locomotive problem. Based on this insight, American and British analysts produced estimates substantially lower than estimates from other forms of intelligence. And after the war, records indicated that they were substantially more accurate. They performed similar analyses for tires, trucks, rockets, and other equipment, yielding accurate and actionable economic intelligence. The German tank problem is historically interesting; it is also a nice example of realworld application of statistical estimation. So far many of the examples in this book have been toy problems, but it will not be long before we start solving real problems. I think it is an advantage of Bayesian analysis, especially with the computational approach we are taking, that it provides such a short path from a basic introduction to the research frontier. Among Bayesians, there are two approaches to choosing prior distributions. Some recommend choosing the prior that best represents background information about the problem; in that case the prior is said to be informative. The problem with using an informative prior is that people might use different background information (or interpret it differently). So informative priors often seem subjective. The alternative is a so-called uninformative prior, which is intended to be as unrestricted as possible, in order to let the data speak for themselves. In some cases you can identify a unique prior that has some desirable property, like representing minimal prior information about the estimated quantity. Uninformative priors are appealing because they seem more objective. But I am generally in favor of using informative priors. Why? First, Bayesian analysis is always based on modeling decisions. Choosing the prior is one of those decisions, but it is not the only one, and it might not even be the most subjective. So even if an uninformative prior is more objective, the entire analysis is still subjective. Also, for most practical problems, you are likely to be in one of two regimes: either you have a lot of data or not very much. If you have a lot of data, the choice of the prior doesn’t matter very much; informative and uninformative priors yield almost the same results. We’ll see an example like this in the next chapter. But if, as in the locomotive problem, you don’t have much data, using relevant background information (like the power law distribution) makes a big difference. And if, as in the German tank problem, you have to make life-and-death decisions based on your results, you should probably use all of the information at your disposal, rather than maintaining the illusion of objectivity by pretending to know less than you do. Exercise 3-1. To write a likelihood function for the locomotive problem, we had to answer this question:“If the railroad has N locomotives, what is the probability that we see number 60?” The answer depends on what sampling process we use when we observe the locomotive. In this chapter, I resolved the ambiguity by specifying that there is only one train-operating company (or only one that we care about). But suppose instead that there are many companies with different numbers of trains. And suppose that you are equally likely to see any train operated by any company. In that case, the likelihood function is different because you are more likely to see a train operated by a large company. As an exercise, implement the likelihood function for this variation of the locomotive problem, and compare the results.suite.Update(6)

4 0.0

6 0.392156862745

8 0.294117647059

12 0.196078431373

20 0.117647058824

for roll in [6, 8, 7, 7, 5, 4]:

suite.Update(roll)

4 0.0

6 0.0

8 0.943248453672

12 0.0552061280613

20 0.0015454182665

The locomotive problem

第一个问题的回答是先验概率,第二个问题的答案是可能性。

我们没有多少基础去选择一个先验概率,但是我们可以从一些简单的开始,然后去考虑其他的可能性,我们假设N可能是1到1000里的任意值。hypos = xrange(1, 1001) #emmmm,这是py2的写法

现在我们需要的是可能性函数。在假设有N个火车的情况下,我们看到60号的可能性多大呢?如果我们假设这里只有一个火车运营公司,我们看到任意一辆火车的可能性是相同的,然后看到任意一个独一无二的火车是1/N。class Train(Suite):

def Likelihood(self, data, hypo):

if hypo < data:

return 0

else:

return 1.0/hypo

看上去很熟悉,这个问题的可能性函数和骰子的是一样的。suite = Train(hypos)

suite.Update(60)

def Mean(suite):

total = 0

for hypo, prob in suite.Items():

total += hypo * prob

return total

print Mean(suite)

print suite.Mean()

What about that prior?

我们也许会奇怪是不是修正后的概率对这些假设是否敏感。

1000的修正概率为333,500的修正概率为207,2000的修正概率为552.从这个结果看,上限不同,概率数各不相同。

这个方法就是获得更多的数据。for data in [60, 30, 90]:

suite.Update(data)

Upper Bound | Posterior Mean

500 152

1000 164

2000 171

An alternative prior

如果更多的数据不可得到,另外一个选项就是通过得到更多的背景信息提高先验概率。假设一个火车运营公司的火车数量假设是1000和1的可能性都不好。

www.sciencemag.org/content/293/5536/1818.full.pdf).

好有意思,这个还有什么罗伯特法则。

这个法则表明如果这里有1000个公司拥有低于10个火车,这里可能100个公司有100个火车,10个公司有1000个火车,可能有1个公司有10000个火车。PMF(x) ∝(1/x)^α

class Train(Dice):

def __init__(self, hypos, alpha=1.0):

Pmf.__init__(self)

for hypo in hypos:

self.Set(hypo, hypo**(-alpha))

self.Normalize()

hypos = range(1, 1001)

suite = Train(hypos)

Upper Bound | Posterior Mean

500 131

1000 133

2000 134

Credible intervals

可信区间就是记录从百分之五到百分之九十五之间的可能性。def Percentile(pmf, percentage):

p = percentage / 100.0

total = 0

for val, prob in pmf.Items():

total += prob

if total >= p:

return val

interval = Percentile(suite, 5), Percentile(suite, 95)

print interval

Cumulative distribution functions

cdf和pmf相比功能区别不大,cdf的效率更高一些。cdf = suite.MakeCdf()

interval = cdf.Percentile(5), cdf.Percentile(95)

从PMF转换为CDF需要的时间与值的数量(len(pmf))成比例。CDF将值和概率存储在已排序的列表中,因此查找概率以获得相应的值需要“日志时间”:即时间与值的对数成比例。查找一个值以获得相应的概率也是对数的,因此CDF对于许多计算都是有效的。The German tank problem

美国人二战时候评估坦克和其他装备生产的问题。

对这些记录的分析表明序列号是由制造商分配的。坦克以100个数字为单位,每个数字按顺序使用,并不是每个数据块中的所有数字都被使用。所以估计的问题德国的坦克产量可以在每100个数量块内减少到一种形式关于机车的问题。Discussion

数据多 先验概率是否信息化区别不是很大......还是数据更重要啊

数据少的时候,相关背景信息会有不同。data speaks for itself.

生死问题上我们应该尽可能运用我们所知道的信息。Exercises