ELK Cluster Setup (Part 3)


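ELK is the acronym of three Elastic products: Elasticsearch, Logstash, and Kibana. The broader Elastic Stack consists of Elasticsearch, Logstash, Kibana, and Beats. Together they form a complete, open-source solution whose components are designed to work with each other and cover a wide range of use cases, which has made ELK one of the mainstream logging systems today.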

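Elasticsearch: a distributed, JSON-based search and analytics engine and the core of ELK. It stores data centrally and is used to search, analyze, and store logs. Being distributed, it scales out horizontally, discovers nodes automatically, and shards indices automatically.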

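Logstash: a dynamic data-collection pipeline that ingests data over TCP, UDP, HTTP and other transports (it can also receive data shipped by Beats) and enriches it or extracts fields from it. It is used to collect logs, parse them into JSON, and hand them to Elasticsearch.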

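Kibana: a data visualization component that presents the collected data visually (reports, charts, and other graphics) and provides a UI for configuring and managing ELK.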

Beats: lightweight, single-purpose log shippers that send data from many machines to Logstash or Elasticsearch.

X-Pack: an extension pack that adds security, alerting, monitoring, reporting, and graph features to the Elastic Stack, though it is a paid product.

Official site: https://www.elastic.co/cn/ , Chinese guide: https://elkguide.elasticsearch.cn/

Older versions of the ELK components can be downloaded from:

https://www.elastic.co/downloads/past-releases

Grok pattern reference:

https://github.com/logstash-plugins/logstash-patterns-core/blob/master/patterns/grok-patterns

Environment preparation

Note: I am not reusing the host setup from the previous parts here.

- Role assignment:

OS: CentOS 7

zabbix server 192.168.1.252

ES master node / ES data node / Logstash 192.168.1.253

ES master node / ES data node / Kibana / head 192.168.1.254

ES master node / ES data node / Filebeat 192.168.1.255

- Disable the firewall and SELinux on all hosts:

# systemctl stop firewalld && systemctl disable firewalld

# sed -i 's/=enforcing/=disabled/g' /etc/selinux/config && setenforce 0

- Configure system limits on all hosts:

# vim /etc/security/limits.conf

* soft nofile 65536

* hard nofile 131072

* soft nproc 2048

* hard nproc 4096

# vim /etc/sysctl.conf

vm.max_map_count=655360

# sysctl -p
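If these settings are pushed to several hosts by a script, re-running the script should not duplicate entries. A minimal bash sketch, assuming root access on the real files; the helper name append_if_missing is hypothetical:

```shell
#!/usr/bin/env bash
# append_if_missing FILE LINE
# Appends LINE to FILE only when FILE does not already contain that exact line,
# so the setup step can be re-run safely.
append_if_missing() {
  local file=$1 line=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example on the real files (as root):
# for l in '* soft nofile 65536' '* hard nofile 131072' \
#          '* soft nproc 2048' '* hard nproc 4096'; do
#   append_if_missing /etc/security/limits.conf "$l"
# done
# append_if_missing /etc/sysctl.conf 'vm.max_map_count=655360' && sysctl -p
```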

- Install Java on all hosts:

# tar zxf jdk-8u191-linux-x64.tar.gz && mv jdk1.8.0_191/ /usr/local/jdk

# vim /etc/profile

JAVA_HOME=/usr/local/jdk

PATH=$PATH:$JAVA_HOME/bin:$JAVA_HOME/jre/bin

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/jre/lib

export JAVA_HOME PATH CLASSPATH

# source !$

# java -version

# ln -s /usr/local/jdk/bin/java /usr/local/bin/java
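To check the JDK on every host from a script rather than by eye, the version string printed by `java -version` can be reduced to a major version. A small bash sketch; java_major_version is a hypothetical helper, and it assumes the usual `java version "..."` output line:

```shell
#!/usr/bin/env bash
# java_major_version VERSION
# 1.8.0_191 -> 8 (legacy "1.x" numbering), 11.0.2 -> 11 (JDK 9+ numbering).
java_major_version() {
  local v=$1 first rest
  first=${v%%.*}              # text before the first dot
  if [ "$first" = "1" ]; then
    rest=${v#1.}              # drop the leading "1."
    echo "${rest%%.*}"        # e.g. "8" from "8.0_191"
  else
    echo "$first"
  fi
}

# ver=$(java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}')
# [ "$(java_major_version "$ver")" = "8" ] || echo "expected JDK 8, got $ver" >&2
```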

ELK with Zabbix alerting

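Logstash supports many outputs, such as syslog, HTTP, TCP, Elasticsearch, and Kafka. When we want to pick out error messages from the collected logs and raise alerts on them, we can use the logstash-output-zabbix plugin. It integrates Logstash with Zabbix: Logstash filters the collected data and sends the entries flagged as errors to Zabbix, whose trigger and alerting mechanism then fires the alert.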

logstash-plugin commands:

Logstash is installed in /usr/local/logstash.

List all installed plugins:

# /usr/local/logstash/bin/logstash-plugin list

List installed plugins with their versions:

# /usr/local/logstash/bin/logstash-plugin list --verbose

List installed plugins whose names contain a keyword:

# /usr/local/logstash/bin/logstash-plugin list "http"

List all installed plugins in a specific group (input, filter, codec, output):

# /usr/local/logstash/bin/logstash-plugin list --group input

Update all installed plugins:

# /usr/local/logstash/bin/logstash-plugin update

Update a specific plugin:

# /usr/local/logstash/bin/logstash-plugin update logstash-output-kafka

Install a specific plugin:

# /usr/local/logstash/bin/logstash-plugin install logstash-output-kafka

Remove a specific plugin:

# /usr/local/logstash/bin/logstash-plugin remove logstash-output-kafka

- Install the Zabbix plugin:

# /usr/local/logstash/bin/logstash-plugin install logstash-output-zabbix
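When the plugin is installed by a provisioning script, it helps to skip the install if the plugin is already present. A sketch built only on the list and install subcommands shown above; ensure_plugin and the LOGSTASH_HOME variable are illustrative assumptions:

```shell
#!/usr/bin/env bash
# Assumes the install directory used in this article; override LOGSTASH_HOME if different.
LOGSTASH_HOME=${LOGSTASH_HOME:-/usr/local/logstash}

# ensure_plugin NAME: install NAME only if `logstash-plugin list` does not show it yet.
ensure_plugin() {
  local name=$1 cli="$LOGSTASH_HOME/bin/logstash-plugin"
  if "$cli" list | grep -qxF -- "$name"; then
    echo "$name already installed"
  else
    "$cli" install "$name"
  fi
}

# ensure_plugin logstash-output-zabbix
```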

Once logstash-output-zabbix is installed, it can be used in Logstash configuration files. Here is an example of its usage:

zabbix {

zabbix_host => "[@metadata][zabbix_host]"

zabbix_key => "[@metadata][zabbix_key]"

zabbix_server_host => "x.x.x.x"

zabbix_server_port => "xxxx"

zabbix_value => "xxxx"

}

Where:

zabbix_host: the name of the field that holds the Zabbix host name. It can be a standalone field or a subfield of @metadata. This setting is required and has no default.

zabbix_key: the name of the field that holds the Zabbix item key, i.e. the item in Zabbix. It can be a standalone field or a subfield of @metadata. No default.

zabbix_server_host: the IP address or resolvable host name of the Zabbix server. The default is "localhost"; set it to the address of your Zabbix server.

zabbix_server_port: the port the Zabbix server listens on. The default is 10051.

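zabbix_value: the name of the field whose content is sent as the value of the Zabbix item. The default is "message", meaning the content of the message field is sent to the item defined by zabbix_key above; you can also point it at any other field.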
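How these settings map onto what Zabbix receives can be seen by pushing a value by hand with zabbix_sender (from the zabbix-sender package), which, as far as I know, speaks the same Zabbix sender protocol the plugin uses; it also assumes the target item is of type Zabbix trapper. The helper send_to_zabbix is hypothetical, and DRY_RUN=1 only prints the command:

```shell
#!/usr/bin/env bash
# send_to_zabbix HOST KEY VALUE [SERVER] [PORT]
# Pushes one value to a Zabbix trapper item. Argument mapping to the plugin:
#   zabbix_host -> -s, zabbix_key -> -k, content of zabbix_value -> -o,
#   zabbix_server_host -> -z (default localhost), zabbix_server_port -> -p (default 10051).
# Set DRY_RUN=1 to print the command instead of running it.
send_to_zabbix() {
  local host=$1 key=$2 value=$3 server=${4:-localhost} port=${5:-10051}
  ${DRY_RUN:+echo} zabbix_sender -z "$server" -p "$port" -s "$host" -k "$key" -o "$value"
}

# send_to_zabbix 192.168.1.253 nginx_error "test message" 192.168.1.252
```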

nginx log alerting:

Using the nginx access and error logs as an example, we combine ELK with Zabbix to alert on nginx errors.

- Default nginx configuration:

For a yum installation:

error_log /var/log/nginx/error.log; #no level given, so nginx uses its default level, error

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

- Configure nginx:

# vim /usr/local/nginx/conf/nginx.conf

error_log /usr/local/nginx/logs/error.log error;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /usr/local/nginx/logs/access.log main;

# vim /usr/local/nginx/conf/vhost/elk.conf

server {

listen 80;

server_name elk.lzx.com;

location / {

proxy_pass http://192.168.1.254:5601;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

}

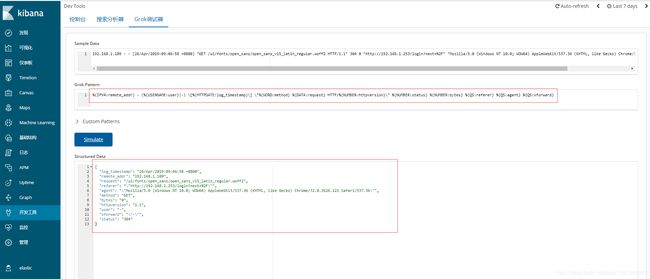

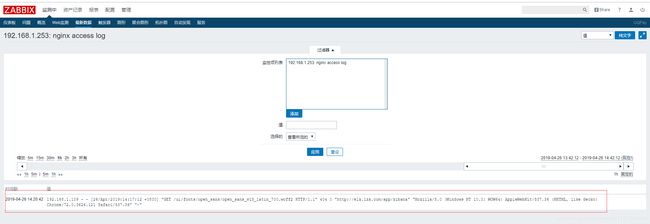
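With the main log_format above, a quick way to see which status codes occur before writing any grok pattern is to split each line on whitespace. A bash/awk sketch; status_counts is a hypothetical name, and it relies on field 9 being $status, which holds for this format only while $remote_user contains no spaces and the request line has the usual "METHOD URI PROTOCOL" shape:

```shell
#!/usr/bin/env bash
# status_counts [FILE...]: print "<status> <count>" per HTTP status, sorted.
# Reads stdin when no file is given.
status_counts() {
  awk '{ count[$9]++ } END { for (s in count) print s, count[s] }' "$@" | sort
}

# status_counts /usr/local/nginx/logs/access.log
```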

- Grok pattern for the nginx access log:

%{IPV4:remote_addr} - (%{USERNAME:user}|-) \[%{HTTPDATE:log_timestamp}\] \"%{WORD:method} %{DATA:request} HTTP/%{NUMBER:httpversion}\" %{NUMBER:status} %{NUMBER:bytes} %{QS:referer} %{QS:agent} %{QS:xforward}

For other log formats, you can debug the pattern in Kibana (Dev Tools > Grok Debugger) until every field is parsed.
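To make the pattern easier to read, here is a rough POSIX ERE counterpart in sed. It is an approximation for offline experiments, not grok: IPV4 becomes [0-9.]+, HTTPDATE becomes [^]]+, and QS becomes a simple quoted string without escaped quotes. The function name parse_access is hypothetical:

```shell
#!/usr/bin/env bash
# parse_access: read "main"-format lines on stdin, print a few extracted fields.
# Lines that do not match print nothing (grok would tag them _grokparsefailure).
parse_access() {
  sed -E -n 's/^([0-9.]+) - ([^ ]+) \[([^]]+)\] "([A-Z]+) ([^ ]*) HTTP\/([0-9.]+)" ([0-9]{3}) ([0-9]+) "([^"]*)" "([^"]*)" "([^"]*)"$/remote_addr=\1 method=\4 status=\7 bytes=\8/p'
}

# parse_access < /usr/local/nginx/logs/access.log
```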

- Grok pattern for the nginx error log:

(?<timestamp>%{YEAR}[./-]%{MONTHNUM}[./-]%{MONTHDAY} %{TIME}) \[%{LOGLEVEL:severity}\] %{POSINT:pid}#%{NUMBER}: %{DATA:error}, (client: (?<client>%{IP}|%{HOSTNAME})), (server: (?<server>%{IPORHOST})), (request: (?<request>%{QS})), (upstream: (?<upstream>\"%{URI}\"|%{QS})), (host: (?<host>%{QS})), (referrer: \"%{URI:referrer}\")
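The leading part of the error pattern (timestamp, level, pid) can be tried the same way. A sed sketch covering only that prefix; parse_error_prefix is a hypothetical name. Note that the emerg line produced later in this article ends right after the message, with no client/server/request parts, so the full pattern above, which requires them, would not match it; the alert still fires because the output condition tests the raw message field:

```shell
#!/usr/bin/env bash
# parse_error_prefix: read nginx error-log lines on stdin and print
# severity, pid, and the rest of the message.
parse_error_prefix() {
  sed -E -n 's/^([0-9]{4}\/[0-9]{2}\/[0-9]{2} [0-9:]+) \[([a-z]+)\] ([0-9]+)#[0-9]+: (.*)$/severity=\2 pid=\3 error=\4/p'
}

# tail -n 20 /usr/local/nginx/logs/error.log | parse_error_prefix
```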

- Configure Logstash:

# vim /usr/local/logstash/conf.d/nginx.conf

input {

file {

path => "/usr/local/nginx/logs/access.log"

#start_position => "beginning" #avoid reading from the beginning of the file, otherwise old entries may trigger duplicate alerts

type => "nginx_access"

sincedb_path => "/dev/null"

}

file {

path => "/usr/local/nginx/logs/error.log"

#start_position => "beginning"

type => "nginx_error"

sincedb_path => "/dev/null"

}

}

filter {

if [type] == "nginx_access" {

grok {

match => [ "message", "%{IPV4:remote_addr} - (%{USERNAME:user}|-) \[%{HTTPDATE:log_timestamp}\] \"%{WORD:method} %{DATA:request} HTTP/%{NUMBER:httpversion}\" %{NUMBER:status} %{NUMBER:bytes} %{QS:referer} %{QS:agent} %{QS:xforward}"]

}

mutate {

add_field => [ "[zabbix_key]", "nginx_access_status" ]

add_field => [ "[zabbix_host]", "192.168.1.253" ] #use the host IP as configured in Zabbix; the %{host} variable may return the hostname instead

}

}

if [type] == "nginx_error" {

grok {

match => [ "message" , "(?<timestamp>%{YEAR}[./-]%{MONTHNUM}[./-]%{MONTHDAY} %{TIME}) \[%{LOGLEVEL:severity}\] %{POSINT:pid}#%{NUMBER}: %{DATA:error}, (client: (?<client>%{IP}|%{HOSTNAME})), (server: (?<server>%{IPORHOST})), (request: (?<request>%{QS})), (upstream: (?<upstream>\"%{URI}\"|%{QS})), (host: (?<host>%{QS})), (referrer: \"%{URI:referrer}\")" ]

}

mutate {

add_field => [ "[zabbix_key]", "nginx_error" ]

add_field => [ "[zabbix_host]", "192.168.1.253" ]

}

}

}

output {

if [type] == "nginx_access" {

elasticsearch {

hosts => ["192.168.1.253:9200"]

user => "elastic"

password => "elk-2019"

index => "nginx-access.log-%{+YYYY.MM.dd}"

}

if [status] =~ /(403|404|500|502|503|504)/ {

zabbix {

zabbix_host => "[zabbix_host]"

zabbix_key => "[zabbix_key]"

zabbix_server_host => "192.168.1.252"

zabbix_server_port => "10051"

zabbix_value => "message"

}

}

}

if [type] == "nginx_error" {

elasticsearch {

hosts => ["192.168.1.253:9200"]

user => "elastic"

password => "elk-2019"

index => "nginx-error.log-%{+YYYY.MM.dd}"

}

if [message] =~ /(error|emerg)/ {

zabbix {

zabbix_host => "[zabbix_host]"

zabbix_key => "[zabbix_key]"

zabbix_server_host => "192.168.1.252"

zabbix_server_port => "10051"

zabbix_value => "message"

}

}

}

}

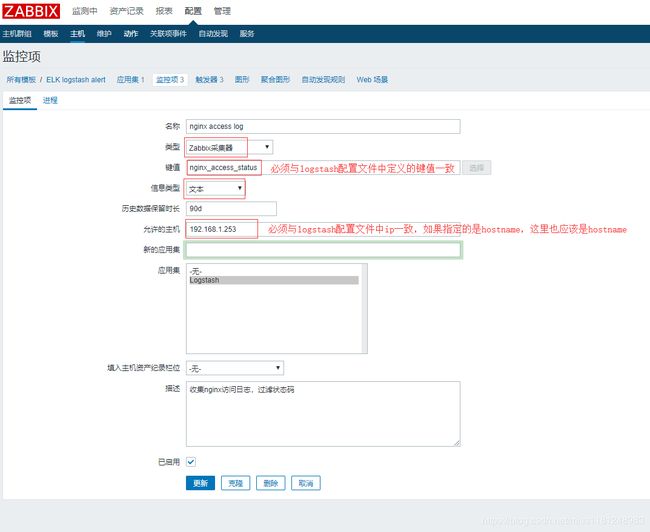

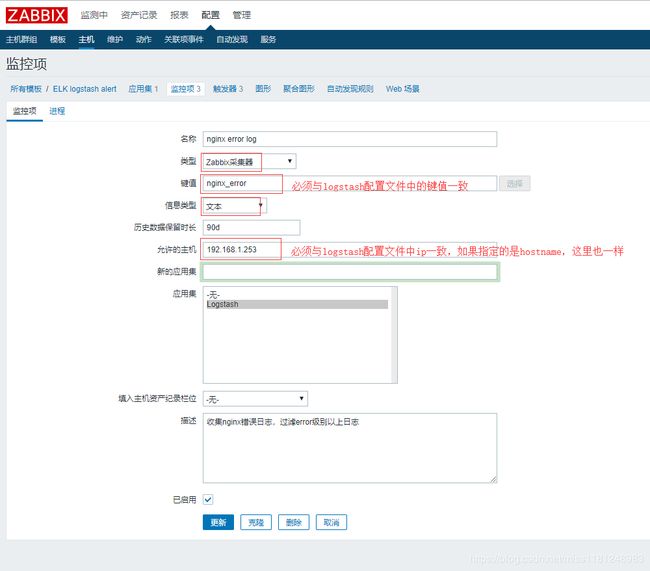

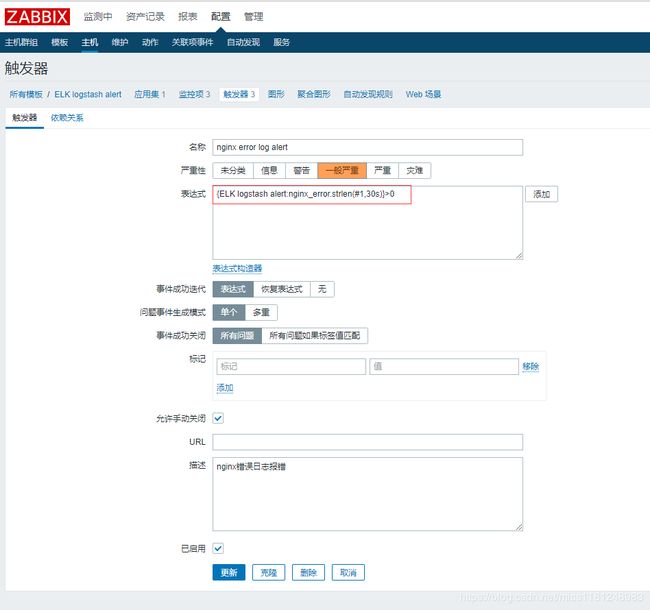
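To check which sample lines would reach Zabbix without running the pipeline, the two output conditionals can be mirrored in the shell. Logstash's =~ and grep -E both perform unanchored, case-sensitive regex matches, so for these simple patterns the behavior should be comparable; the function names are hypothetical:

```shell
#!/usr/bin/env bash
# access_alert STATUS: succeeds if [status] =~ /(403|404|500|502|503|504)/ would match.
access_alert() { printf '%s\n' "$1" | grep -Eq '(403|404|500|502|503|504)'; }

# error_alert MESSAGE: succeeds if [message] =~ /(error|emerg)/ would match.
# Unanchored, so "error" anywhere in the line (even inside a URL) counts.
error_alert() { printf '%s\n' "$1" | grep -Eq '(error|emerg)'; }

# access_alert 404 && echo "would alert"
```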

- Configure items and triggers in the Zabbix web UI:

Items and triggers for the nginx access log

Items and triggers for the nginx error log

If you start Logstash before these items are configured, it logs the following warning:

[WARN ][logstash.outputs.zabbix ] Zabbix server at 192.168.1.252 rejected all items sent.{:zabbix_host=>"192.168.1.253"}
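As far as I can tell, typical causes are an item key that does not exist on that host, an item that is not of type Zabbix trapper, or a zabbix_host value that does not match the host name configured in Zabbix. To see which hosts are affected, the warnings can be pulled from the Logstash log; a sketch with a hypothetical helper name, assuming the warning format shown above:

```shell
#!/usr/bin/env bash
# rejected_hosts [LOGFILE]: list the distinct zabbix_host values from
# "rejected all items sent" warnings in the Logstash log.
rejected_hosts() {
  grep 'rejected all items sent' "${1:-/usr/local/logstash/logs/logstash-plain.log}" |
    sed -E -n 's/.*:zabbix_host=>"([^"]*)".*/\1/p' | sort -u
}

# rejected_hosts
```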

- Start Logstash:

# > /usr/local/nginx/logs/access.log

# > /usr/local/nginx/logs/error.log #empty the nginx logs first to keep the test clean

# /usr/local/nginx/sbin/nginx

# systemctl restart logstash

# tail -f /usr/local/logstash/logs/logstash-plain.log #check the startup log to confirm Logstash started normally

- Add an entry with an abnormal status to access.log:

# vim /usr/local/nginx/logs/access.log

192.168.1.109 - - [26/Apr/2019:14:17:12 +0800] "GET /ui/fonts/open_sans/open_sans_v15_latin_700.woff2 HTTP/1.1" 304 0 "http://elk.lzx.com/app/kibana" "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.121 Safari/537.36" "-"

192.168.1.109 - - [26/Apr/2019:14:17:12 +0800] "GET /ui/fonts/open_sans/open_sans_v15_latin_700.woff2 HTTP/1.1" 404 0 "http://elk.lzx.com/app/kibana" "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.121 Safari/537.36" "-"

#change the status code from 304 to 404
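Instead of editing the file in vim, a test line can also be appended from the shell. Appending with >> keeps the same file, whereas an editor may save by writing a new file, which the Logstash file input may then treat as a different file. A sketch; append_access_line is hypothetical and writes a fixed client IP and request path /test:

```shell
#!/usr/bin/env bash
# append_access_line FILE STATUS
# Appends one synthetic line in the nginx "main" format with the given status.
append_access_line() {
  local file=$1 status=$2
  printf '%s - - [%s] "GET /test HTTP/1.1" %s 0 "-" "curl/7.29.0" "-"\n' \
    "192.168.1.109" "$(LC_ALL=C date '+%d/%b/%Y:%H:%M:%S %z')" "$status" >> "$file"
}

# append_access_line /usr/local/nginx/logs/access.log 404
```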

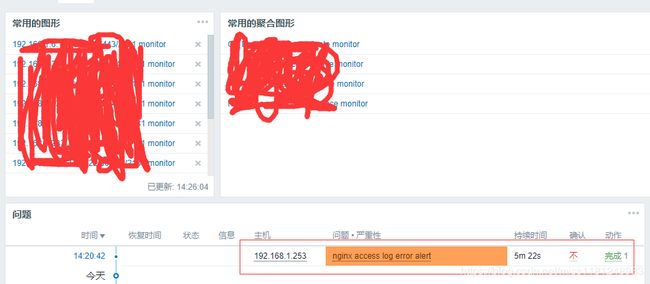

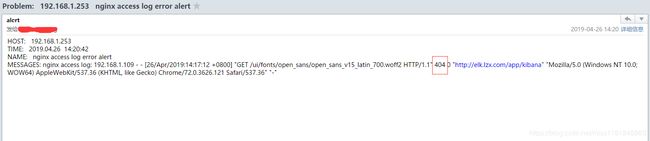

- Zabbix alert:

The corresponding alert email:

- Generate an error entry in error.log (the server directive is deliberately misspelled as rver):

# cp /usr/local/nginx/conf/vhost/elk.conf /usr/local/nginx/conf/vhost/test.conf

# vim !$

rver {

listen 80;

server_name elk.lzx.com;

location / {

proxy_pass http://192.168.1.254:5601;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

}

# /usr/local/nginx/sbin/nginx -t

nginx: [emerg] unknown directive "rver" in /usr/local/nginx/conf/vhost/test.conf:1

nginx: configuration file /usr/local/nginx/conf/nginx.conf test failed

# tail /usr/local/nginx/logs/error.log

2019/04/26 14:16:41 [notice] 28426#0: signal process started

2019/04/26 14:33:17 [emerg] 28589#0: unknown directive "rver" in /usr/local/nginx/conf/vhost/test.conf:1

- Zabbix alert:

The corresponding alert email:

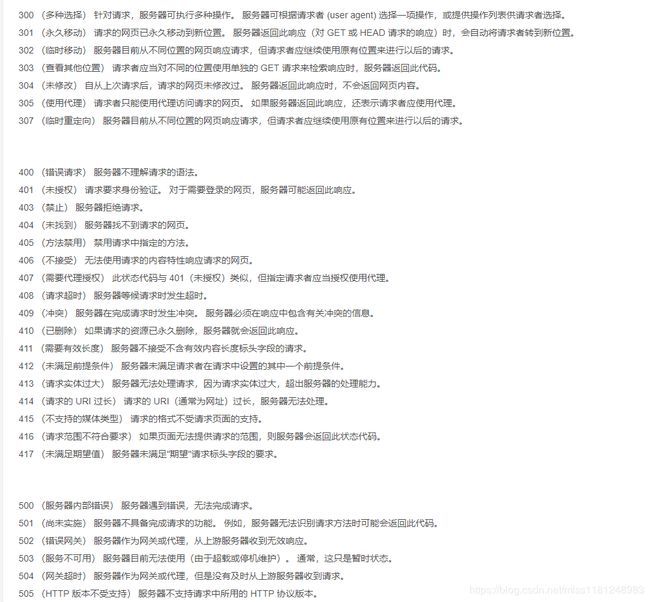

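In production, alert on 500|502|503|504 for the access log where possible: 5xx codes indicate server-side failures, whereas 4xx codes are client-side problems, as shown below.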
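The same rule can be written down as a small shell helper, for example when scripting checks around it. Both function names are hypothetical; prod_alert mirrors the 500|502|503|504 list exactly, so 501 is not included:

```shell
#!/usr/bin/env bash
# status_class STATUS: server_error for 5xx, client_error for 4xx, other otherwise.
status_class() {
  case $1 in
    5[0-9][0-9]) echo server_error ;;
    4[0-9][0-9]) echo client_error ;;
    *)           echo other ;;
  esac
}

# prod_alert STATUS: succeeds only for the production alert list.
prod_alert() {
  case $1 in
    500|502|503|504) return 0 ;;
    *)               return 1 ;;
  esac
}
```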

This completes collecting nginx logs with Logstash and alerting on them through Zabbix.

Tomcat log alerting

Tomcat writes the following logs:

* Logs starting with catalina are Tomcat's general logs; they record information about the Tomcat service, including errors

* host-manager and manager are management logs; host-manager is the virtual host management log

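* localhost and localhost_access are virtual host logs: files with access in the name are access logs, and those without are error logs for the default host (the access log is written by the AccessLogValve configured in server.xml)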

Errors all end up in catalina.out, so check it first when something goes wrong.

For Tomcat, the logs to collect first are catalina.out and localhost_access_log. If a Java application runs on Tomcat, collecting the application's own logs is enough.

- Install Tomcat:

# tar zxf apache-tomcat-8.5.39.tar.gz && mv apache-tomcat-8.5.39 /usr/local/tomcat

# vim /usr/local/tomcat/bin/catalina.sh

# OS specific support. $var _must_ be set to either true or false. #add the following lines below this line

JAVA_HOME=/usr/local/jdk

PATH=$PATH:$JAVA_HOME/bin:$JAVA_HOME/jre/bin

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/jre/lib

Default configuration:

# vim /usr/local/tomcat/conf/server.xml

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="localhost_access_log" suffix=".txt"

pattern="%h %l %u %t &quot;%r&quot; %s %b" />

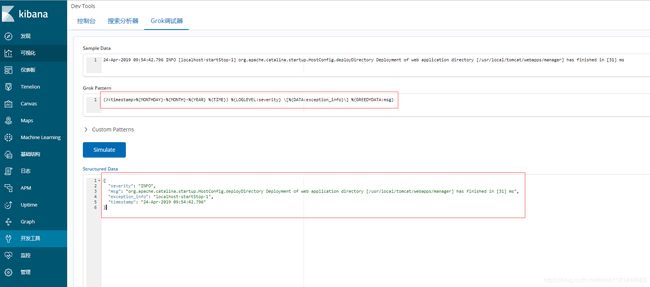

- Grok pattern for Tomcat's catalina.out log:

(?<timestamp>%{MONTHDAY}-%{MONTH}-%{YEAR} %{TIME}) %{LOGLEVEL:severity} \[%{DATA:exception_info}\] %{GREEDYDATA:msg}

As before, you can debug the pattern in Kibana until every field is parsed.

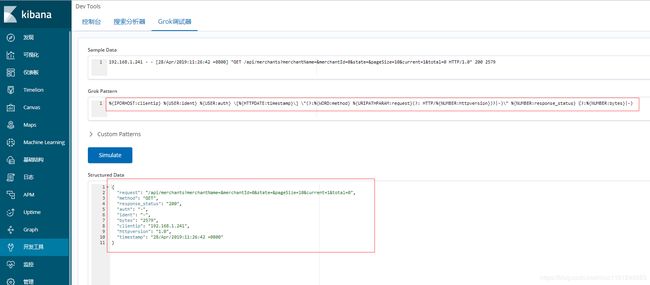
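As with nginx, the prefix of a catalina.out line can be tried with sed first. A sketch; parse_catalina and catalina_alert are hypothetical names. The field the grok pattern calls exception_info actually receives the thread name in brackets (main in this example). Tomcat's own logging normally uses SEVERE and WARNING rather than ERROR/WARN; the condition /(WARN|ERROR|SEVERE)/ still matches WARNING as a substring:

```shell
#!/usr/bin/env bash
# parse_catalina: read catalina.out lines on stdin, print severity and bracketed field.
parse_catalina() {
  sed -E -n 's/^([0-9]{2}-[A-Za-z]{3}-[0-9]{4} [0-9:.]+) ([A-Z]+) \[([^]]*)\] (.*)$/severity=\2 exception_info=\3/p'
}

# catalina_alert LINE: mirrors [message] =~ /(WARN|ERROR|SEVERE)/ from the output section.
catalina_alert() { printf '%s\n' "$1" | grep -Eq '(WARN|ERROR|SEVERE)'; }
```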

- Grok pattern for Tomcat's localhost_access_log:

%{IPORHOST:clientip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:method} %{URIPATHPARAM:request}(?: HTTP/%{NUMBER:httpversion})?|-)\" %{NUMBER:response_status} (?:%{NUMBER:bytes}|-)
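Unlike the nginx pattern, this one has optional parts: the HTTP version may be missing, the whole request may be "-", and bytes may be "-". A sed sketch of the same shape; parse_tomcat_access is hypothetical, and IPORHOST, USER, and HTTPDATE are loosely approximated by runs of non-space or non-bracket characters:

```shell
#!/usr/bin/env bash
# parse_tomcat_access: read access-log lines on stdin, print client, status, bytes.
parse_tomcat_access() {
  sed -E -n 's/^([^ ]+) [^ ]+ [^ ]+ \[[^]]+\] "(([A-Z]+) ([^ ]+)( HTTP\/[0-9.]+)?|-)" ([0-9]{3}) ([0-9]+|-)$/clientip=\1 response_status=\6 bytes=\7/p'
}

# parse_tomcat_access < /usr/local/tomcat/logs/localhost_access_log.2019-04-28.txt
```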

- Configure Logstash:

# vim /usr/local/logstash/conf.d/tomcat.conf

input {

file {

path => "/usr/local/tomcat/logs/catalina.out"

#start_position => "beginning"

type => "catalina"

sincedb_path => "/dev/null"

}

file {

path => "/usr/local/tomcat/logs/localhost_access_log*.txt"

#start_position => "beginning"

type => "localhost_access"

sincedb_path => "/dev/null"

}

}

filter {

if [type] == "catalina" {

grok {

match => [ "message", "(?<timestamp>%{MONTHDAY}-%{MONTH}-%{YEAR} %{TIME}) %{LOGLEVEL:severity} \[%{DATA:exception_info}\] %{GREEDYDATA:msg}" ]

}

mutate {

add_field => [ "[zabbix_key]", "tomcat_catalina_error" ]

add_field => [ "[zabbix_host]", "192.168.1.253" ]

}

}

if [type] == "localhost_access" {

grok {

match => [ "message" , "%{IPORHOST:clientip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:method} %{URIPATHPARAM:request}(?: HTTP/%{NUMBER:httpversion})?|-)\" %{NUMBER:response_status} (?:%{NUMBER:bytes}|-)"]

}

mutate {

add_field => [ "[zabbix_key]", "tomcat_localhost_access_status" ]

add_field => [ "[zabbix_host]", "192.168.1.253" ]

}

}

}

output {

if [type] == "catalina" {

elasticsearch {

hosts => ["192.168.1.253:9200"]

user => "elastic"

password => "elk-2019"

index => "catalina.out-%{+YYYY.MM.dd}"

}

if [message] =~ /(WARN|ERROR|SEVERE)/ {

zabbix {

zabbix_host => "[zabbix_host]"

zabbix_key => "[zabbix_key]"

zabbix_server_host => "192.168.1.252"

zabbix_server_port => "10051"

zabbix_value => "message"

}

}

}

if [type] == "localhost_access" {

elasticsearch {

hosts => ["192.168.1.253:9200"]

user => "elastic"

password => "elk-2019"

index => "localhost_access.log-%{+YYYY.MM.dd}"

}

if [response_status] =~ /(500|502|503|504)/ {

zabbix {

zabbix_host => "[zabbix_host]"

zabbix_key => "[zabbix_key]"

zabbix_server_host => "192.168.1.252"

zabbix_server_port => "10051"

zabbix_value => "message"

}

}

}

}

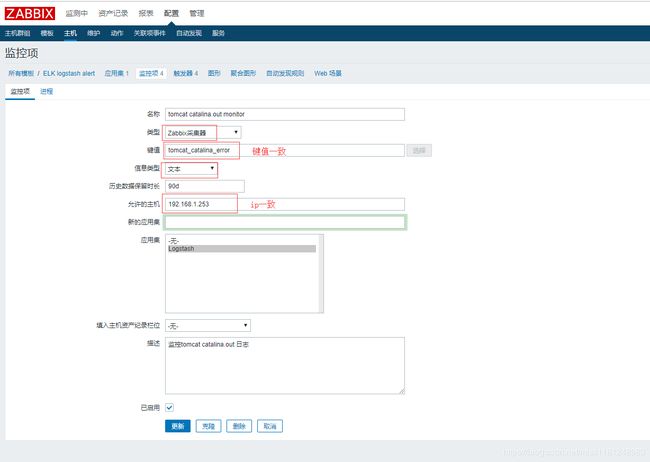

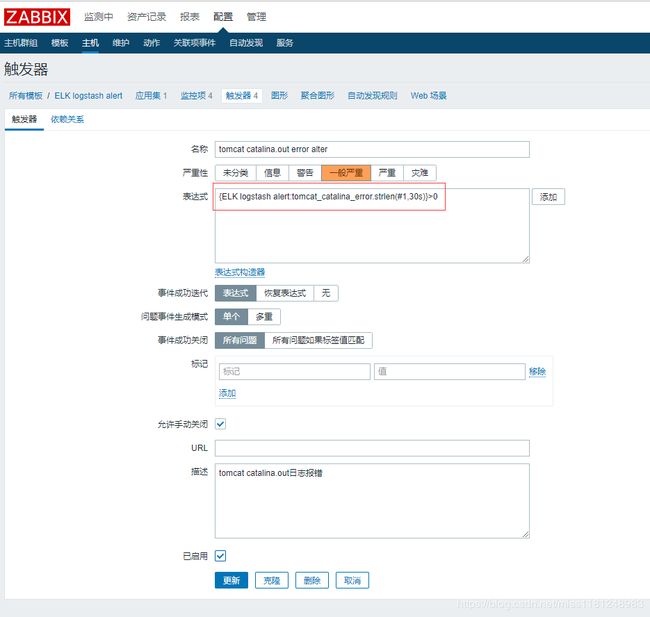

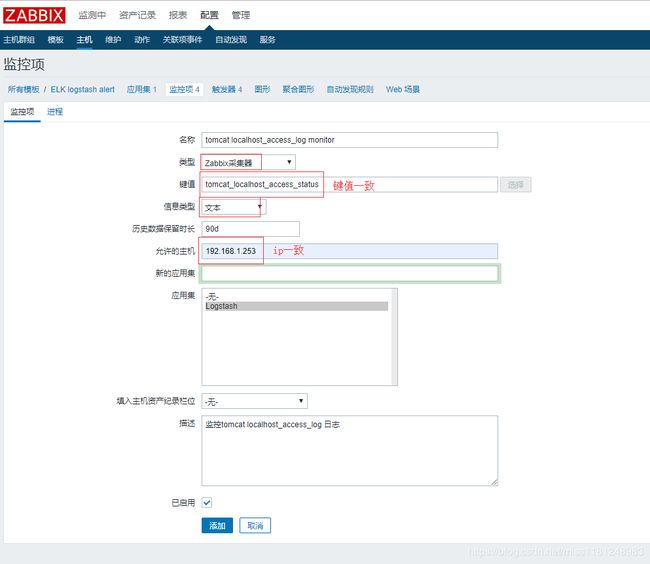

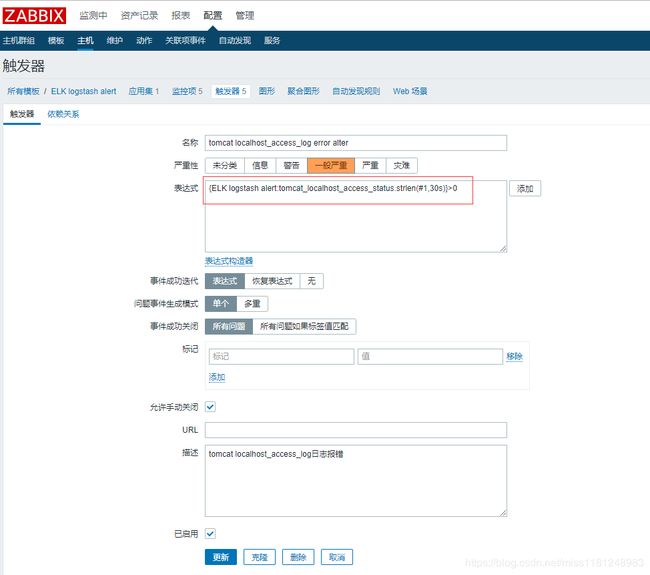
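Before restarting the service, each pipeline file can be syntax-checked with Logstash's --config.test_and_exit flag. A sketch; check_conf is hypothetical, and the separate --path.data directory is there because a running Logstash service may already hold the lock on the default data directory:

```shell
#!/usr/bin/env bash
LOGSTASH_HOME=${LOGSTASH_HOME:-/usr/local/logstash}

# check_conf FILE...: print OK/FAIL per file; returns non-zero if any file fails.
check_conf() {
  local rc=0 f data
  data=$(mktemp -d)   # throwaway data dir so the check doesn't collide with the service
  for f in "$@"; do
    if "$LOGSTASH_HOME/bin/logstash" --path.data "$data" -f "$f" --config.test_and_exit >/dev/null 2>&1; then
      echo "OK   $f"
    else
      echo "FAIL $f"; rc=1
    fi
  done
  rm -rf "$data"
  return $rc
}

# check_conf /usr/local/logstash/conf.d/nginx.conf /usr/local/logstash/conf.d/tomcat.conf
```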

- Configure items and triggers in the Zabbix web UI:

Items and triggers for Tomcat's catalina.out log

Items and triggers for Tomcat's localhost_access_log

- Start Tomcat and Logstash:

# /usr/local/tomcat/bin/startup.sh

# systemctl restart logstash

# tail -f /usr/local/logstash/logs/logstash-plain.log #check the startup log to confirm Logstash started normally

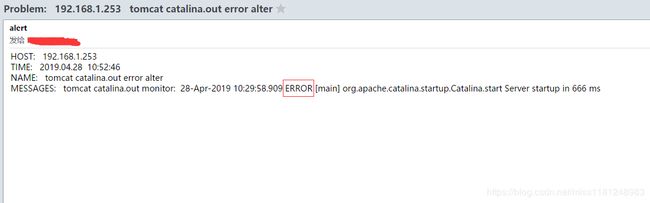

- Add an error entry to catalina.out:

# vim /usr/local/tomcat/logs/catalina.out

28-Apr-2019 10:29:58.909 INFO [main] org.apache.catalina.startup.Catalina.start Server startup in 666 ms

28-Apr-2019 10:29:58.909 ERROR [main] org.apache.catalina.startup.Catalina.start Server startup in 666 ms

#change the severity from INFO to ERROR

- Zabbix alert:

The corresponding alert email:

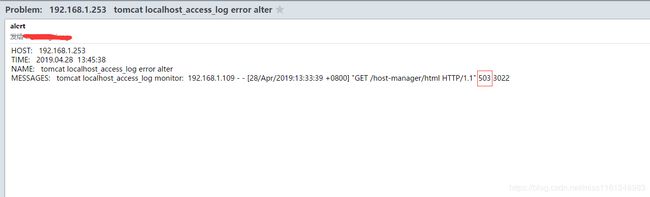

- Add an error entry to localhost_access_log:

# vim /usr/local/tomcat/logs/localhost_access_log.2019-04-28.txt

192.168.1.109 - - [28/Apr/2019:13:33:39 +0800] "GET /host-manager/html HTTP/1.1" 403 3022

192.168.1.109 - - [28/Apr/2019:13:33:39 +0800] "GET /host-manager/html HTTP/1.1" 502 3022

#change the status code from 403 to 502

- Zabbix alert:

The corresponding alert email:

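This completes collecting Tomcat logs with Logstash and alerting on them through Zabbix. The whole process is simple; the key part is getting the grok patterns to match.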

Postscript

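After the deployment was finished, I ran into a problem: alerts fired correctly, but the problems never recovered (closed) automatically. They had to be acknowledged and closed by hand, otherwise new error log entries would not raise new alerts. This is serious: if a problem is not closed promptly, some error alerts are bound to be missed.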

So I experimented repeatedly with the trigger's recovery expression. Of the trigger functions, count and nodata both seemed suitable, and nodata the most:

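That configuration looked fine, but the problems still did not close automatically and had to be closed by hand. The count function did not work either, which was puzzling.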

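I then approached it from a different angle and changed the function in the problem expression instead. In the trigger's OK event generation setting, choosing "Expression" means the problem recovers when the problem expression evaluates to false, while choosing "Recovery expression" means an additional condition must be met for the problem to recover automatically.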

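As a result, without any manual acknowledgement, the problem closed automatically one minute after the alert. Since an error log normally does not produce several entries within one minute, this way we receive alerts for every error entry without the tedious chore of acknowledging them by hand.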
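Why a nodata-based problem expression closes itself can be simulated in a few lines. A bash sketch, assuming a problem expression along the lines of {192.168.1.253:nginx_error.nodata(60)}=0 in Zabbix 4.x syntax (this exact expression is an assumption): nodata(60) returns 1 when no value arrived in the last 60 seconds, so the trigger stays in PROBLEM while error lines keep arriving and returns to OK once a minute passes without new data. trigger_state is a hypothetical helper, and Zabbix evaluates such time-based functions periodically, so real recovery is only approximately at the 60-second mark:

```shell
#!/usr/bin/env bash
# trigger_state LAST_VALUE_EPOCH NOW_EPOCH [WINDOW_SECONDS]
# PROBLEM while the latest value is younger than WINDOW (nodata(WINDOW)=0 is true),
# OK once WINDOW seconds pass with no new value.
trigger_state() {
  local last=$1 now=$2 window=${3:-60}
  if [ $((now - last)) -lt "$window" ]; then
    echo PROBLEM
  else
    echo OK
  fi
}

# trigger_state "$(date -d '-30 sec' +%s)" "$(date +%s)"
```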