Translation of the Paper on the Intel "Meltdown" Vulnerability (Part 2)

2.2 Address Spaces

2.2 地址空间

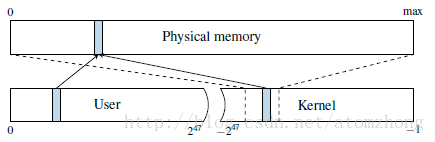

To isolate processes from each other, CPUs support virtual address spaces where virtual addresses are translated to physical addresses. A virtual address space is divided into a set of pages that can be individually mapped to physical memory through a multi-level page translation table. The translation tables define the actual virtual to physical mapping and also protection properties that are used to enforce privilege checks, such as readable, writable, executable and user-accessible. The currently used translation table is held in a special CPU register. On each context switch, the operating system updates this register with the next process's translation table address in order to implement per-process virtual address spaces. Because of that, each process can only reference data that belongs to its own virtual address space. Each virtual address space itself is split into a user and a kernel part. While the user address space can be accessed by the running application, the kernel address space can only be accessed if the CPU is running in privileged mode. This is enforced by the operating system disabling the user-accessible property of the corresponding translation tables. The kernel address space does not only have memory mapped for the kernel's own usage, but it also needs to perform operations on user pages, e.g., filling them with data. Consequently, the entire physical memory is typically mapped in the kernel. On Linux and OS X, this is done via a direct-physical map, i.e., the entire physical memory is directly mapped to a pre-defined virtual address (cf. Figure 2).

为了将进程彼此隔离,CPU支持将虚拟地址转换为物理地址的虚拟地址空间。虚拟地址空间被分成一组页面,可以通过多级页面转换表单独映射到物理内存。转换表定义了实际的虚拟到物理映射,以及用于强制权限校验的保护属性,如可读,可写,可执行和用户可访问。当前使用的转换表保存在特殊的CPU寄存器中。在每个上下文切换时,操作系统用下一个进程的转换表地址更新该寄存器,以便实现每个进程的虚拟地址空间。因此,每个进程只能引用属于自己虚拟地址空间的数据。每个虚拟地址空间本身被分割成用户部分和内核部分。虽然用户地址空间可以被正在运行的应用程序访问,但只有当CPU以特权模式运行时才能访问内核地址空间。这通过操作系统禁用相应转换表的用户可访问属性来强制执行。内核地址空间不仅具有为内核自身使用而映射的内存,还需要在用户页面上执行操作,例如填充数据。因此,整个物理内存通常映射到内核中。在Linux和OS X上,这是通过直接物理映射完成的,即整个物理内存直接映射到预定义的虚拟地址(参见图2)。

Figure 2: The physical memory is directly mapped in the kernel at a certain offset. A physical address (blue) which is mapped accessible for the user space is also mapped in the kernel space through the direct mapping.

图2:物理内存以一定的偏移量直接映射到内核中。用户空间可访问的物理地址(蓝色)也通过直接映射映射到内核空间。
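The translation and privilege check described in this section can be modelled in a few lines. The following is a minimal sketch under stated assumptions, not code from the paper: it uses a flat dictionary instead of a real multi-level table, and the names `PageTableEntry`, `translate`, `direct_map_vaddr`, and the value of `DIRECT_MAP_BASE` are illustrative.

```python
from dataclasses import dataclass

PAGE_SIZE = 4096
# Assumed, illustrative base address of the kernel's direct-physical map.
DIRECT_MAP_BASE = 0xFFFF_8880_0000_0000


@dataclass(frozen=True)
class PageTableEntry:
    frame: int             # physical frame number the page maps to
    user_accessible: bool  # protection bit checked on every access


class AccessFault(Exception):
    """Raised when a mapping is missing or the privilege check fails."""


def translate(page_table, vaddr, privileged):
    """Translate a virtual address, enforcing the user-accessible bit."""
    vpn, offset = divmod(vaddr, PAGE_SIZE)
    entry = page_table.get(vpn)
    if entry is None:
        raise AccessFault(f"no mapping for {vaddr:#x}")
    if not privileged and not entry.user_accessible:
        raise AccessFault(f"user-mode access to kernel page {vaddr:#x}")
    return entry.frame * PAGE_SIZE + offset


def direct_map_vaddr(paddr):
    """Kernel virtual address at which a physical address is also mapped."""
    return DIRECT_MAP_BASE + paddr


# Physical frame 5 is mapped twice: once for the user, once in the kernel.
user_vaddr = 0x10 * PAGE_SIZE + 0x123
paddr = 5 * PAGE_SIZE + 0x123
kernel_vpn = direct_map_vaddr(5 * PAGE_SIZE) // PAGE_SIZE
page_table = {
    0x10: PageTableEntry(frame=5, user_accessible=True),
    kernel_vpn: PageTableEntry(frame=5, user_accessible=False),
}
```

Both virtual addresses resolve to the same physical address, but the kernel alias only translates when `privileged` is true. This is the isolation that Meltdown bypasses.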

Instead of a direct-physical map, Windows maintains multiple so-called paged pools, non-paged pools, and the system cache. These pools are virtual memory regions in the kernel address space mapping physical pages to virtual addresses which are either required to remain in the memory (non-paged pool) or can be removed from the memory because a copy is already stored on the disk (paged pool). The system cache further contains mappings of all file-backed pages. Combined, these memory pools will typically map a large fraction of the physical memory into the kernel address space of every process.

Windows不使用直接物理映射,而是维护多个所谓的分页池,非分页池和系统高速缓存。这些“池”是内核地址空间中的虚拟内存区域,将物理页面映射到那些要求保留在内存中的(非分页池),或者因为副本已存储在磁盘上,而可以从内存中删除的(分页池)虚拟地址上。系统缓存还包含所有文件支持页面的映射。综合来看,这些内存池通常会将大部分物理内存映射到每个进程的内核地址空间。

The exploitation of memory corruption bugs often requires the knowledge of addresses of specific data. In order to impede such attacks, address space layout randomization (ASLR) has been introduced as well as non-executable stacks and stack canaries. In order to protect the kernel, KASLR randomizes the offsets where drivers are located on every boot, making attacks harder as they now require to guess the location of kernel data structures. However, side-channel attacks allow to detect the exact location of kernel data structures [9, 13, 17] or derandomize ASLR in JavaScript [6]. A combination of a software bug and the knowledge of these addresses can lead to privileged code execution.

内存损坏漏洞的利用一般需要特定数据的地址信息。为了阻止这种攻击,人们已经引入了地址空间布局随机化(ASLR)以及非可执行堆栈和堆栈金丝雀(类比于煤矿中的用于探测有毒气体的金丝雀,用于在恶意代码执行之前检测堆栈缓冲区溢出,译者注)。为了保护内核,KASLR随机化每次启动时驱动程序所在的偏移量,使得黑客们需要猜测内核数据结构的位置,从而让攻击变得困难。 然而,旁路攻击允许检测内核数据结构的确切位置[9][13][17],或者使用JavaScript使得ASLR去随机化[6]。软件bug和这些地址信息相结合,就可以导致特权代码的执行。

2.3 Cache Attacks

2.3 缓存攻击

In order to speed-up memory accesses and address translation, the CPU contains small memory buffers, called caches, that store frequently used data. CPU caches hide slow memory access latencies by buffering frequently used data in smaller and faster internal memory. Modern CPUs have multiple levels of caches that are either private to its cores or shared among them. Address space translation tables are also stored in memory and are also cached in the regular caches.

为了加速内存访问和地址转换,CPU包含小内存缓冲区,称为缓存,用于存储经常使用的数据。 CPU缓存通过在较小和较快的内部存储器中缓存常用数据来规避低速内存访问延迟。 现代CPU具有多个级别的缓存,这些缓存对于其内核是私有的,或者是在内核之间共享的。地址空间转换表存储在内存中,也被缓存在常规缓存中。

Cache side-channel attacks exploit timing differences that are introduced by the caches. Different cache attack techniques have been proposed and demonstrated in the past, including Evict+Time [28], Prime+Probe [28, 29], and Flush+Reload [35]. Flush+Reload attacks work on a single cache line granularity. These attacks exploit the shared, inclusive last-level cache. An attacker frequently flushes a targeted memory location using the clflush instruction. By measuring the time it takes to reload the data, the attacker determines whether data was loaded into the cache by another process in the meantime. The Flush+Reload attack has been used for attacks on various computations, e.g., cryptographic algorithms [35, 16, 1], web server function calls [37], user input [11, 23, 31], and kernel addressing information [9].

缓存旁路攻击利用了高速缓存引入的时序差异。这些年来,人们已经提出并演示了许多不同的缓存攻击手段,包括Evict + Time[28],Prime + Probe [28][29]和Flush + Reload [35]。Flush + Reload攻击在单个缓存线粒度上工作。这些攻击利用共享的,包含最后一级的缓存。攻击者通常使用clflush指令刷新目标内存位置。通过测量重新加载数据所需的时间,攻击者可以确定数据是否由另一个进程同时加载到缓存中。Flush + Reload攻击已被用于各种计算的攻击,例如加密算法[35][16][1],Web服务器函数调用[37],用户输入[11][23][31]和内核寻址信息[9]。
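The three Flush+Reload steps (flush, wait, timed reload) can be sketched against a simulated cache. This is a toy model, not a working attack: a real implementation would use `clflush` and a cycle-accurate timer on actual hardware, and the latencies, the threshold, and the names `SimulatedCache` and `flush_reload` are assumptions made for illustration.

```python
CACHE_LINE = 64
HIT_CYCLES = 40     # assumed latency of a cache hit
MISS_CYCLES = 300   # assumed latency of an access served from DRAM
THRESHOLD = 150     # anything faster than this is treated as a hit


class SimulatedCache:
    """Tracks which cache lines are present and reports a fake access latency."""

    def __init__(self):
        self._lines = set()

    def flush(self, addr):
        # Stand-in for clflush: evict the line containing addr.
        self._lines.discard(addr // CACHE_LINE)

    def access(self, addr):
        # Return the simulated latency, then leave the line cached.
        line = addr // CACHE_LINE
        latency = HIT_CYCLES if line in self._lines else MISS_CYCLES
        self._lines.add(line)
        return latency


def flush_reload(cache, addr, victim):
    """Return True if the victim touched addr between flush and reload."""
    cache.flush(addr)                       # 1. flush the target line
    victim()                                # 2. let the victim run
    return cache.access(addr) < THRESHOLD   # 3. reload and compare the time


cache = SimulatedCache()
target = 0x4000
touched = flush_reload(cache, target, lambda: cache.access(target))
untouched = flush_reload(cache, target, lambda: None)
```

In this model `touched` is true and `untouched` is false. On real hardware the threshold has to be calibrated per machine.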

A special use case is the covert channel. Here the attacker controls both the part that induces the side effect and the part that measures the side effect. This can be used to leak information from one security domain to another, while bypassing any boundaries existing on the architectural level or above. Both Prime+Probe and Flush+Reload have been used in high-performance covert channels [24, 26, 10].

一个特殊攻击手段是隐蔽信道:攻击者在此控制导致副作用的部分和测量副作用的部分。这可用于将信息从一个安全域泄漏到另一个安全域,同时绕过架构级或更高级别上存在的任何边界。 Prime + Probe和Flush + Reload攻击手段都利用了高性能隐蔽通道[24][26][10]。

3 A Toy Example

3 一个玩具示例

In this section, we start with a toy example, a simple code snippet, to illustrate that out-of-order execution can change the microarchitectural state in a way that leaks information. However, despite its simplicity, it is used as a basis for Section 4 and Section 5, where we show how this change in state can be exploited for an attack.

在本节中,我们从一个玩具示例,一个简单的代码片断开始,来说明乱序执行可能会以某种方式改变微架构状态,从而泄露信息。然而,尽管它简单,但它是第4节和第5节的基础,在其中我们展示了这种状态变化是如何被利用来进行攻击的。

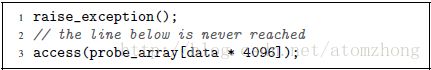

Listing 1 shows a simple code snippet first raising an (unhandled) exception and then accessing an array. The property of an exception is that the control flow does not continue with the code after the exception, but jumps to an exception handler in the operating system. Regardless of whether this exception is raised due to a memory access, e.g., by accessing an invalid address, or due to any other CPU exception, e.g., a division by zero, the control flow continues in the kernel and not with the next user space instruction.

清单1列出了一个简单的代码片段,首先引发一个(未处理的)异常,然后访问一个数组。异常的特性是,控制流不会在异常之后继续执行代码,而是跳转到操作系统中的异常处理程序。无论是由于内存访问(例如,通过访问无效地址)还是由于任何其他CPU异常(例如除以零)而引起此异常,控制流都继续在内核中并且不会处理下一个用户空间中的指令。

Listing 1: A toy example to illustrate side-effects of out-of-order execution.

清单1:一个玩具示例,用于说明无序执行的副作用。

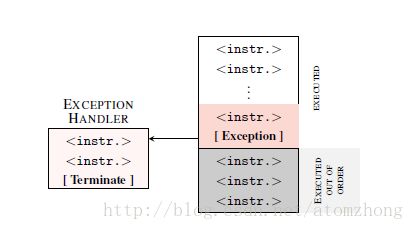

Thus, our toy example cannot access the array in theory, as the exception immediately traps to the kernel and terminates the application. However, due to the out-of-order execution, the CPU might have already executed the following instructions as there is no dependency on the exception. This is illustrated in Figure 3. Due to the exception, the instructions executed out of order are not retired and, thus, never have architectural effects.

因此,我们的玩具示例在理论上不能访问数组,因为异常将立即陷入内核并终止应用程序。但是,由于乱序执行,CPU可能已经执行了以下访问数组的指令,因为它们不存在对异常的依赖。这在图3中进行了说明。由于异常,乱序执行的指令不会正常退出,因此绝不会有完整执行的程序结果。

Figure 3: If an executed instruction causes an exception, diverting the control flow to an exception handler, the subsequent instruction must not be executed anymore. Due to out-of-order execution, the subsequent instructions may already have been partially executed, but not retired. However, the architectural effects of the execution will be discarded.

图3:如果执行的指令导致异常,使得控制流转移到异常处理程序,那么就不能再执行后续的指令。但是由于乱序执行,后续的指令可能已经部分执行但没有退出,则执行出的程序结果将被丢弃。

Although the instructions executed out of order do not have any visible architectural effect on registers or memory, they have microarchitectural side effects. During the out-of-order execution, the referenced memory is fetched into a register and is also stored in the cache. If the out-of-order execution has to be discarded, the register and memory contents are never committed. Nevertheless, the cached memory contents are kept in the cache. We can leverage a microarchitectural side-channel attack such as Flush+Reload [35], which detects whether a specific memory location is cached, to make this microarchitectural state visible. There are other side channels as well which also detect whether a specific memory location is cached, including Prime+Probe [28, 24, 26], Evict+Reload [23], or Flush+Flush [10]. However, as Flush+Reload is the most accurate known cache side channel and is simple to implement, we do not consider any other side channel for this example.

尽管乱序执行的指令对寄存器或存储器没有任何可见的架构效应,但它们具有微观架构的副作用。在乱序执行期间,引用的内存被提取到一个寄存器中,并且也存储在缓存中。如果乱序执行必须被丢弃,寄存器和存储器内容不会被提交。尽管如此,缓存的内容仍保存在缓存中。我们可以利用微观架构的旁路攻击,比如Flush + Reload [35]来检测特定的内存位置是否被缓存,以使这个微观架构状态可见。还有其他的旁路信道也可以检测是否缓存了特定的内存位置,包括Prime + Probe [28,24,26],Evict + Reload [23]或Flush + Flush [10]。但是,由于Flush + Reload是最准确的已知缓存旁路信道,并且易于实现,所以本例中我们不考虑任何其他旁路。

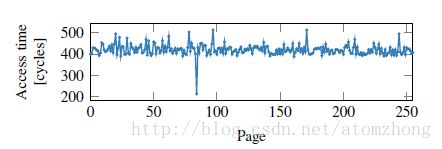

Based on the value of data in this toy example, a different part of the cache is accessed when executing the memory access out of order. As data is multiplied by 4096, data accesses to probe_array are scattered over the array with a distance of 4 KB (assuming a 1 B data type for probe_array). Thus, there is an injective mapping from the value of data to a memory page, i.e., there are no two different values of data which result in an access to the same page. Consequently, if a cache line of a page is cached, we know the value of data. The spreading over different pages eliminates false positives due to the prefetcher, as the prefetcher cannot access data across page boundaries [14].

根据这个玩具示例中的数据值,乱序执行时内存将访问高速缓存的不同部分。当数据乘以4096时,对探针数组的数据访问分散在阵列上,距离为4KB(假设探针数组为1 B数据类型)。因此,存在一个从数值到内存页的单射映射,即不存在导致访问相同页面的两个不同数据值。所以如果页内的缓存行被缓存,我们将由此得知数据的具体值。由于预取程序不能跨越页面边界访问数据,因此将数据分布在不同的页面上能消除由于预取程序造成的误报[14]。
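The index arithmetic behind this injective mapping is easy to check. The sketch below assumes a one-byte `data` value and 1 B array elements, as the text does. The helper names `probe_index` and `value_from_index` are made up for illustration.

```python
PAGE_SIZE = 4096


def probe_index(data):
    """Index into probe_array touched for a one-byte value (1 B elements assumed)."""
    if not 0 <= data <= 255:
        raise ValueError("data must fit in one byte")
    return data * PAGE_SIZE


def value_from_index(index):
    """Invert the mapping: the page number of the cached line is the value."""
    return index // PAGE_SIZE


indices = [probe_index(value) for value in range(256)]
pages = {index // PAGE_SIZE for index in indices}
array_size = 256 * PAGE_SIZE  # bytes needed for probe_array
```

All 256 values land on distinct pages, so a single cached page identifies the value, and the whole probe array occupies 1 MiB.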

Figure 4 shows the result of a Flush+Reload measurement iterating over all pages, after executing the out-of-order snippet with data = 84. Although the array access should not have happened due to the exception, we can clearly see that the index which would have been accessed is cached. Iterating over all pages (e.g., in the exception handler) shows only a cache hit for page 84. This shows that even instructions which are never actually executed change the microarchitectural state of the CPU. Section 4 modifies this toy example to not read a value, but to leak an inaccessible secret.

图4显示了在使用data=84的值执行乱序代码片段之后,Flush + Reload遍历所有页面的测量结果。尽管由于异常而不应该发生数组访问,但是我们可以清楚地看到索引将被访问的内容被缓存。例如,在异常处理程序中遍历所有页面,可以看到仅有第84页的高速缓存命中。这表明即使是从未实际执行的指令也会改变CPU的微架构状态。第4节中将修改这个玩具例子为不读取任何值,但却依然泄漏了一个本来无法访问的保密值。

Figure 4: Even if a memory location is only accessed during out-of-order execution, it remains cached. Iterating over the 256 pages of probe_array shows one cache hit, exactly on the page that was accessed during the out-of-order execution.

图4:即使一个内存位置只在乱序执行期间被访问,它仍然被缓存。对256个探针数组进行迭代显示一个缓存命中,正好在乱序执行期间访问的页面上。
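The whole toy example, including the measurement in Figure 4, can be imitated with a crude software model. This is only a simulation of the described behaviour, not a measurement and not the paper's code: `ToyCpu`, its methods, and the latency constants are assumptions, and the out-of-order access is modelled simply by updating the cache before the exception is raised.

```python
PAGE_SIZE = 4096
HIT_CYCLES, MISS_CYCLES, THRESHOLD = 40, 300, 150  # assumed latencies


class ToyCpu:
    """Crude model: the cache survives an exception, architectural state does not."""

    def __init__(self):
        self.cached_pages = set()  # microarchitectural state

    def flush_probe_array(self):
        self.cached_pages.clear()

    def run_snippet(self, data):
        # Out of order: the array access executes before the exception retires.
        index = data * PAGE_SIZE
        self.cached_pages.add(index // PAGE_SIZE)
        # The exception then takes effect; nothing is returned to the program.
        raise RuntimeError("exception: the following instructions never retire")

    def timed_access(self, page):
        latency = HIT_CYCLES if page in self.cached_pages else MISS_CYCLES
        self.cached_pages.add(page)
        return latency


def pages_with_cache_hit(cpu):
    """Reload every page of probe_array once and report the fast ones."""
    return [page for page in range(256) if cpu.timed_access(page) < THRESHOLD]


cpu = ToyCpu()
cpu.flush_probe_array()
try:
    cpu.run_snippet(data=84)
except RuntimeError:
    pass  # stands in for the exception handler
hits = pages_with_cache_hit(cpu)
```

In this model `hits` contains exactly one page, 84, mirroring the single peak in Figure 4.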

4 Building Blocks of the Attack

4 构建攻击模块

The toy example in Section 3 illustrated that side-effects of out-of-order execution can modify the microarchitectural state to leak information. While the code snippet reveals the data value passed to a cache side channel, we want to show how this technique can be leveraged to leak otherwise inaccessible secrets. In this section, we want to generalize and discuss the necessary building blocks to exploit out-of-order execution for an attack.

第3节中的玩具示例说明了无序执行的副作用可以修改微架构状态从而泄漏信息。虽然代码片段揭示了传递给缓存端通道的数据值,但我们想要展示如何利用这种技术来泄露绝密信息。在本节中,我们想概括和讨论必要的利用乱序执行的攻击模块。

The adversary targets a secret value that is kept somewhere in physical memory. Note that register contents are also stored in memory upon context switches, i.e., they are also stored in physical memory. As described in Section 2.2, the address space of every process typically includes the entire user space, as well as the entire kernel space, which typically also has all physical memory (in-use) mapped. However, these memory regions are only accessible in privileged mode (cf. Section 2.2).

对手的目标是保存在物理内存某处的保密值。注意,寄存器内容也在上下文切换时被存储在存储器中,即,它们也被存储在物理内存中。如第2.2节所述,每个进程的地址空间通常包含整个用户空间以及整个内核空间,这些空间通常也映射了所有(正在使用中的)物理内存。但是,这些内存区域只能在特权模式下访问(参见第2.2节)。

In this work, we demonstrate leaking secrets by bypassing the privileged-mode isolation, giving an attacker full read access to the entire kernel space including any physical memory mapped, including the physical memory of any other process and the kernel. Note that Kocher et al. [19] pursue an orthogonal approach, called Spectre Attacks, which trick speculatively executed instructions into leaking information that the victim process is authorized to access. As a result, Spectre Attacks lack the privilege escalation aspect of Meltdown and require tailoring to the victim process's software environment, but apply more broadly to CPUs that support speculative execution and are not stopped by KAISER.

在这项工作中,我们通过绕过特权模式隔离来证明保密的泄露,给攻击者完整的读取权限去访问整个内核空间,包括任何物理内存映射和任何其他进程和内核的物理内存。值得关注的是,Kocher 等人[19]研究一种正交性的攻击方法,称为“幽灵”,它通过诱骗执行指令来泄露受害者进程有权访问的信息。因此,幽灵攻击没有熔断的权限越级的特性,需要针对受害者进程的软件环境进行特化,但是更广泛地应用于支持推测性执行的CPU,并且不会被KAISER阻止。

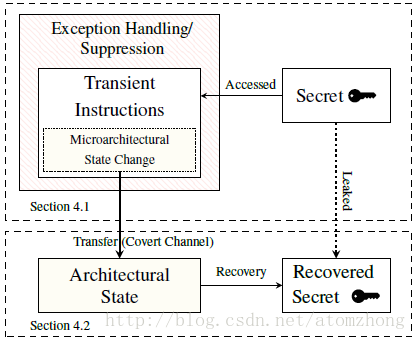

The full Meltdown attack consists of two building blocks, as illustrated in Figure 5. The first building block of Meltdown is to make the CPU execute one or more instructions that would never occur in the executed path. In the toy example (cf. Section 3), this is an access to an array, which would normally never be executed, as the previous instruction always raises an exception. We call such an instruction, which is executed out of order, leaving measurable side effects, a transient instruction. Furthermore, we call any sequence of instructions containing at least one transient instruction a transient instruction sequence.

完整的熔断攻击由两个模块组成,如图5所示。熔断的第一个模块是让CPU执行一条或多条在执行路径中永远不会发生的指令。在玩具的例子中(参见第3节),这是对一个数组的访问,通常不会执行,因为前面的指令总是引发一个异常。我们把这样的指令称为乱序执行,留下了可测量的副作用,一个瞬态指令。此外,我们把任何包含至少一个瞬态指令的指令序列称为瞬态指令序列。

Figure 5: The Meltdown attack uses exception handling or suppression, e.g., TSX, to run a series of transient instructions. These transient instructions obtain a (persistent) secret value and change the microarchitectural state of the processor based on this secret value. This forms the sending part of a microarchitectural covert channel. The receiving side reads the microarchitectural state, making it architectural and recovering the secret value.

图5:熔断攻击使用异常处理或抑制(例如TSX)来运行一系列瞬态指令。这些瞬态指令获得(永久的)保密值,并基于该保密值改变处理器的微架构状态。这构成了微架构隐蔽通道的发送部分。接收端读取微架构状态,从而构建和复原保密值。

In order to leverage transient instructions for an attack, the transient instruction sequence must utilize a secret value that an attacker wants to leak. Section 4.1 describes building blocks to run a transient instruction sequence with a dependency on a secret value.

为了利用瞬态指令进行攻击,瞬态指令序列必须利用攻击者想要使之泄漏的保密值。4.1节中介绍了用以运行一个依赖于保密值的瞬态指令序列的模块。

The second building block of Meltdown is to transfer the microarchitectural side effect of the transient instruction sequence to an architectural state to further process the leaked secret. Section 4.2 describes building blocks to transfer a microarchitectural side effect to an architectural state using a covert channel.

熔断的第二个模块是将瞬态指令序列的微架构副作用转移到架构状态,以进一步处理泄露的保密值。 4.2节中介绍了使用隐蔽通道将微架构副作用转移到架构状态的第二个模块。

4.1 Executing Transient Instructions

4.1 执行瞬态指令

The first building block of Meltdown is the execution of transient instructions. Transient instructions basically occur all the time, as the CPU continuously runs ahead of the current instruction to minimize the experienced latency and thus maximize the performance (cf. Section 2.1). Transient instructions introduce an exploitable side channel if their operation depends on a secret value. We focus on addresses that are mapped within the attacker’s process, i.e., the user-accessible user space addresses as well as the user-inaccessible kernel space addresses. Note that attacks targeting code that is executed within the context (i.e., address space) of another process are possible [19], but out of scope in this work, since all physical memory (including the memory of other processes) can be read through the kernel address space anyway.

熔断的第一个模块块就是执行瞬态指令。瞬态指令基本上每时每刻都在发生,因为CPU会在当前指令之前连续运行,以尽量减少等待执行的延迟,从而最大限度地提高性能(参见第2.1节)。如果瞬态指令的操作依赖于秘密值,则会引入可利用的旁路。我们主要关注那些映射到攻击者进程内的地址,即用户可访问的用户空间地址以及用户不可访问的内核空间地址。值得注意的是,针对在另一个进程的上下文(即地址空间)内执行的代码进行攻击是有可能的[19],但是这超出了本篇论文的范围,因为所有物理内存(包括其他进程的内存)无论如何都可以通过内核地址空间读取。

Accessing user-inaccessible pages, such as kernel pages, triggers an exception which generally terminates the application. If the attacker targets a secret at a user-inaccessible address, the attacker has to cope with this exception. We propose two approaches: With exception handling, we catch the exception effectively occurring after executing the transient instruction sequence, and with exception suppression, we prevent the exception from occurring at all and instead redirect the control flow after executing the transient instruction sequence. We discuss these approaches in detail in the following.

访问用户不可访问的页面(例如内核页面)通常会触发一个终止该应用程序的异常。如果攻击者以用户不可访问的地址为目标,那么他们必须应对这个异常。我们提出了两种方法:通过异常处理,在执行完瞬态指令序列之后捕捉到有效的异常;通过异常抑制,完全阻止异常发生,而在执行完瞬态指令序列之后重定向控制流。我们接下来将详细讨论这些方法。

Exception handling

异常处理

A trivial approach is to fork the attacking application before accessing the invalid memory location that terminates the process, and only access the invalid memory location in the child process. The CPU executes the transient instruction sequence in the child process before crashing. The parent process can then recover the secret by observing the microarchitectural state, e.g., through a side-channel.

一个简单的方法是在攻击程序访问终止进程的无效内存位置之前就对进程克隆,然后只访问子进程中的无效内存位置。CPU在崩溃之前执行子进程中的瞬态指令序列。然后父进程可以通过观察微架构状态(例如通过旁路通道)来回复保密值。
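The control flow of the fork-and-crash approach can be sketched on a POSIX system as follows. This only illustrates the process structure: the transient instruction sequence and the cache probing are not modelled, and `run_in_crashing_child` is a hypothetical helper. Reading from address 0 via `ctypes.string_at` merely stands in for the access to a user-inaccessible address.

```python
import ctypes
import os
import signal


def run_in_crashing_child():
    """Fork, let the child fault on an invalid address, return its fatal signal."""
    pid = os.fork()
    if pid == 0:
        try:
            # Child: reading from address 0 is an invalid access (SIGSEGV).
            # On real hardware the transient instructions would run before
            # the crash takes effect.
            ctypes.string_at(0)
        finally:
            # Only reached if the access unexpectedly did not kill the child.
            os._exit(0)
    _, status = os.waitpid(pid, 0)
    return os.WTERMSIG(status) if os.WIFSIGNALED(status) else None


fatal_signal = run_in_crashing_child()
# The parent is still alive here and could now probe the cache.
```

The parent observes that the child was killed by `SIGSEGV` and continues running, which is the property the attack relies on.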

It is also possible to install a signal handler that will be executed if a certain exception occurs, in this specific case a segmentation fault. This allows the attacker to issue the instruction sequence and prevent the application from crashing, reducing the overhead as no new process has to be created.

另一个方法则可以安装一个信号处理程序,如果发生某些异常,这个信号处理程序将被执行,在这个特定的情况下是分段错误。这允许攻击者发出指令序列并防止应用程序崩溃,从而减少了开销,因为这样不需要创建新的进程。

Exception suppression

异常抑制

A different approach to deal with exceptions is to prevent them from being raised in the first place. Transactional memory allows to group memory accesses into one seemingly atomic operation, giving the option to roll-back to a previous state if an error occurs. If an exception occurs within the transaction, the architectural state is reset, and the program execution continues without disruption.

处理异常的另一种方法是在第一时间阻止它们抛出。事务性内存允许将内存访问划分成为一个伪原子操作,如果发生错误,可以选择回滚到以前的状态。如果在事务中发生异常,架构状态将被重置,程序继续运行而不会中断。

Furthermore, speculative execution issues instructions that might not occur on the executed code path due to a branch misprediction. Such instructions depending on a preceding conditional branch can be speculatively executed. Thus, the invalid memory access is put within a speculative instruction sequence that is only executed if a prior branch condition evaluates to true. By making sure that the condition never evaluates to true in the executed code path, we can suppress the occurring exception as the memory access is only executed speculatively. This technique may require a sophisticated training of the branch predictor. Kocher et al. [19] pursue this approach in orthogonal work, since this construct can frequently be found in code of other processes.

此外,由于分支预测错误,推测执行可能会在执行的代码路径上执行原本不会发生的指令。这类依赖于之前的条件分支的指令可以被推测性地执行。因此,无效的内存访问被放置在一个仅当之前的分支条件评估为真时才执行的推测指令序列内。通过保证分支条件在执行的代码路径中从不计算为真,我们就可以抑制将要发生的异常,因为内存访问只是推测性地执行。这种技术可能需要对分支预测器进行复杂的训练。Kocher等人[19]在正交性工作中研究这个方法,因为这个构造经常可以在其他进程的代码中找到。
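Why training the branch predictor makes the guarded access run speculatively can be illustrated with a textbook two-bit saturating counter. This is a deliberately simple model chosen for illustration. It does not describe the predictors used in real CPUs, and `TwoBitPredictor` and `run_branch` are hypothetical names.

```python
class TwoBitPredictor:
    """Textbook 2-bit saturating counter: states 0-1 predict not taken, 2-3 taken."""

    def __init__(self):
        self.state = 0

    def predict(self):
        return self.state >= 2

    def update(self, taken):
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


def run_branch(predictor, condition):
    """Model `if condition: access()`; return True if the body ran only transiently."""
    predicted_taken = predictor.predict()
    predictor.update(condition)
    # The body executes speculatively when the branch is predicted taken,
    # but its results are discarded when the condition is actually false.
    return predicted_taken and not condition


predictor = TwoBitPredictor()
training = [run_branch(predictor, True) for _ in range(3)]  # train "taken"
attack = run_branch(predictor, False)  # condition is now false
```

During training the body runs architecturally, so no run is transient. In the final call the condition is false but the branch is still predicted taken, so in this model the access happens only speculatively and no exception would be raised.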

4.2 Building a Covert Channel

4.2建立一个隐蔽通道

The second building block of Meltdown is the transfer of the microarchitectural state, which was changed by the transient instruction sequence, into an architectural state (cf. Figure 5). The transient instruction sequence can be seen as the sending end of a microarchitectural covert channel. The receiving end of the covert channel receives the microarchitectural state change and deduces the secret from the state. Note that the receiver is not part of the transient instruction sequence and can be a different thread or even a different process, e.g., the parent process in the fork-and-crash approach.

熔断的第二个模块是通过瞬态指令序列将微架构状态转换为架构状态(参见图5)。瞬态指令序列可以看作微架构隐蔽通道的发送端。隐蔽通道的接收端接收微架构状态变化,并从此状态推导保密值。值得注意的是,接收器不是瞬态指令序列的一部分,它可以是不同的线程,甚至是不同的进程,例如fork-and-crash方法中的父进程。

We leverage techniques from cache attacks, as the cache state is a microarchitectural state which can be reliably transferred into an architectural state using various techniques [28, 35, 10]. Specifically, we use Flush+Reload [35], as it allows building a fast and low-noise covert channel. Thus, depending on the secret value, the transient instruction sequence (cf. Section 4.1) performs a regular memory access, e.g., as it does in the toy example (cf. Section 3).

我们利用的技术来自于缓存攻击,因为缓存状态是一种微架构状态,可以使用各种技术将其可靠地转换为架构状态[28][35][10]。具体而言,我们使用Flush + Reload[35]方法,因为它允许建立一个快速,低噪声的隐蔽通道。因此,取决于保密值,瞬态指令序列(参见4.1节)执行规律的内存访问,就好像在玩具示例中展示的一样(参见第3节)。

After the transient instruction sequence, i.e., the sender of the covert channel, has accessed an accessible address, the address is cached for subsequent accesses. The receiver can then monitor whether the address has been loaded into the cache by measuring the access time to the address. Thus, the sender can transmit a '1'-bit by accessing an address which is loaded into the monitored cache, and a '0'-bit by not accessing such an address.

在瞬态指令序列访问一个可访问地址之后,即,这是隐蔽通道的发送器; 该地址被缓存以用于随后的访问。之后接收器通过测量地址的访问时间来监视地址是否已经加载到缓存中。因此,发送器可以通过访问被加载到所监视的高速缓存中的地址来发送“1”位,并且通过不访问这样的地址来发送“0”位。

Using multiple different cache lines, as in our toy example in Section 3, allows transmitting multiple bits at once. For each of the 256 different byte values, the sender accesses a different cache line. By performing a Flush+Reload attack on all of the 256 possible cache lines, the receiver can recover a full byte instead of just one bit. However, since the Flush+Reload attack takes much longer (typically several hundred cycles) than the transient instruction sequence, transmitting only a single bit at once is more efficient. The attacker can simply do that by shifting and masking the secret value accordingly.

使用多个不同的缓存行,就像我们在第3节中的玩具示例一样,我们可以一次传输多个位数据。对于256个不同字节值中的每一个,发送器都访问不同的缓存行。通过对所有256个可能的缓存行执行Flush + Reload攻击,接收者可以恢复一个完整的字节而不是一个位。然而,由于Flush + Reload攻击比瞬态指令序列花费更长的时间(通常为几百个循环),所以一次仅发送一个位就更有效率。攻击者可以简单地通过对保密值进行相应地移位和掩码来做到这一点。
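The bit-at-a-time variant can be sketched as follows, again against a simulated cache rather than real hardware. The sender accesses a single probe line only if the selected bit of the secret is 1, and the receiver needs one timed reload per bit instead of 256 per byte. All names (`SimulatedCache`, `send_bit`, `receive_bit`, `leak_byte`) and the latency values are assumptions for illustration.

```python
HIT_CYCLES, MISS_CYCLES, THRESHOLD = 40, 300, 150  # assumed latencies
PROBE_LINE = 0  # the single cache line used as the channel


class SimulatedCache:
    def __init__(self):
        self.lines = set()
        self.reloads = 0  # counts timed reloads made by the receiver

    def flush(self, line):
        self.lines.discard(line)

    def access(self, line):
        latency = HIT_CYCLES if line in self.lines else MISS_CYCLES
        self.lines.add(line)
        return latency


def send_bit(cache, secret, bit):
    """Sender: shift and mask the secret, touch the line only for a '1'-bit."""
    if (secret >> bit) & 1:
        cache.access(PROBE_LINE)


def receive_bit(cache):
    """Receiver: one timed reload decides between '1' (hit) and '0' (miss)."""
    cache.reloads += 1
    return 1 if cache.access(PROBE_LINE) < THRESHOLD else 0


def leak_byte(cache, secret):
    value = 0
    for bit in range(8):
        cache.flush(PROBE_LINE)
        send_bit(cache, secret, bit)
        value |= receive_bit(cache) << bit
    return value


cache = SimulatedCache()
recovered = leak_byte(cache, 84)
```

In this model one byte costs 8 reloads rather than 256, which is the efficiency argument made above.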

Note that the covert channel is not limited to microarchitectural states which rely on the cache. Any microarchitectural state which can be influenced by an instruction (sequence) and is observable through a side channel can be used to build the sending end of a covert channel. The sender could, for example, issue an instruction (sequence) which occupies a certain execution port such as the ALU to send a '1'-bit. The receiver measures the latency when executing an instruction (sequence) on the same execution port. A high latency implies that the sender sends a '1'-bit, whereas a low latency implies that the sender sends a '0'-bit. The advantage of the Flush+Reload cache covert channel is the noise resistance and the high transmission rate [10]. Furthermore, the leakage can be observed from any CPU core [35], i.e., rescheduling events do not significantly affect the covert channel.

请注意,隐蔽通道不仅限于依赖缓存的微架构状态。任何可以被指令(序列)影响并且可以通过旁路信道观察到的微观架构状态都可以用来构建隐蔽信道的发送端。例如,发送器可以发出占用某个执行端口(如ALU)的指令(序列)来发送“1”位。接收器在同一执行端口上执行指令(序列)时测量延迟。高延迟意味着发送者发送“1”位,而低延迟意味着发送者发送“0”位。Flush + Reload缓存隐蔽通道的优点是抗噪声和高传输速率[10]。此外,泄漏可以从任何CPU内核[35]观察到,即重调度事件不会显着影响隐蔽信道。