kubelet sandbox creation and the Calico CNI network configuration flow (Part 2)

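The previous article showed that when kubelet creates a pod, it first creates a sandbox container. This container ensures that all containers in a Kubernetes pod share one network namespace, so each container can reach a peer container's ports as if they were local ports. We have already walked through the code for sandbox creation and runtime parameter configuration, and we know that the actual container network is set up by invoking a CNI plugin. But how does the CNI plugin itself work? This part looks at the network configuration process from the plugin's side, using Calico as the example.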

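A CNI plugin is simply an executable binary. kubelet works through its own logic to generate the configuration parameters and then executes the plugin with them. Let's look at what these parameters are: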

prevResult, err = invoke.ExecPluginWithResult(pluginPath, newConf.Bytes, c.args("ADD", rt))

// where rt contains

rt := &libcni.RuntimeConf{

ContainerID: podSandboxID.ID,

NetNS: podNetnsPath,

IfName: network.DefaultInterfaceName,

Args: [][2]string{

{"IgnoreUnknown", "1"},

{"K8S_POD_NAMESPACE", podNs},

{"K8S_POD_NAME", podName},

{"K8S_POD_INFRA_CONTAINER_ID", podSandboxID.ID},

},

}

// newConf.Bytes is the CNI plugin's configuration file; taking my cluster as an example

{

"name": "k8s-pod-network",

"cniVersion": "0.1.0",

"type": "calico",

"etcd_endpoints": "http://127.0.0.1:2379",

"etcd_key_file": "",

"etcd_cert_file": "",

"etcd_ca_cert_file": "",

"log_level": "debug",

"mtu": 1500,

"ipam": {

"type": "calico-ipam"

},

"policy": {

"type": "k8s",

"k8s_api_root": "https://10.96.0.1:443",

"k8s_auth_token": "eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJjYWxpY28tbm9kZS10b2tlbi14cTQ1dyIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJjYWxpY28tbm9kZSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImMwZDI5NDQyLWExMGUtMTFlOC04NGM4LTAwMGMyOWUwYjU0OSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTpjYWxpY28tbm9kZSJ9.iT1WQTKMrJaDjM_cBTi4IO6m2Zx566wlyQm2N0CcCLNWmBwRfO4FKhuRTCp5yd9gNTBZlosdByovIeQJLBPXfUqcFOIZ6he4or1fVnjgBOIqy1G2zii7X0KrVMHEizwwS9sz44ielsjxD-BIbdwxaXv0U9yjaB1TH9Fp8LdmNtdMUs1UrrimODUqG4QAFcaGA9UBAfgchsx6pPmRNJ2Jft79W6kXv-BCK6vR434UeobfxM5k8rj4rXhCzLjQt8iIKfgOFYlzehH-ZWFjNETUxrRoyRDqvBvxQ9Sh9Bh8dC5ODO34acZPAKy1GiP2S-9fP1P8RwKP-hmw4em1RC4UdA"

},

"kubernetes": {

"kubeconfig": "/etc/cni/net.d/calico-kubeconfig"

}

}

This part was covered in detail in Part 1; refer to it if anything here is unclear.
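To make the hand-off concrete: under the CNI convention, the runtime parameters reach the plugin as environment variables (CNI_COMMAND, CNI_CONTAINERID, CNI_NETNS, CNI_IFNAME, CNI_ARGS, CNI_PATH), and the network configuration is written to the plugin's stdin. The following is a minimal sketch of that mapping, not libcni's actual code. buildCNIArgs and buildEnv are hypothetical helper names, and the sample pod, container ID and paths are made up.

```go
package main

import (
	"fmt"
	"strings"
)

// buildCNIArgs joins key/value pairs into the "K1=V1;K2=V2" form carried in CNI_ARGS.
// Hypothetical helper for illustration only.
func buildCNIArgs(pairs [][2]string) string {
	parts := make([]string, 0, len(pairs))
	for _, kv := range pairs {
		parts = append(parts, kv[0]+"="+kv[1])
	}
	return strings.Join(parts, ";")
}

// buildEnv returns the environment entries a runtime would set before
// executing the plugin binary. Hypothetical helper for illustration only.
func buildEnv(command, containerID, netns, ifName, pluginPath string, pairs [][2]string) []string {
	return []string{
		"CNI_COMMAND=" + command,
		"CNI_CONTAINERID=" + containerID,
		"CNI_NETNS=" + netns,
		"CNI_IFNAME=" + ifName,
		"CNI_ARGS=" + buildCNIArgs(pairs),
		"CNI_PATH=" + pluginPath,
	}
}

func main() {
	// Sample values; in kubelet these come from the RuntimeConf shown above.
	pairs := [][2]string{
		{"IgnoreUnknown", "1"},
		{"K8S_POD_NAMESPACE", "default"},
		{"K8S_POD_NAME", "nginx"},
		{"K8S_POD_INFRA_CONTAINER_ID", "abc123"},
	}
	for _, e := range buildEnv("ADD", "abc123", "/proc/4242/ns/net", "eth0", "/opt/cni/bin", pairs) {
		fmt.Println(e)
	}
}
```

The plugin side reads these same variables back, which is why the Calico code later finds the pod namespace and name inside args.Args.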

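With this information in hand, let's look at how the Calico plugin configures the network (only the Kubernetes-based path is analyzed). We start with Calico's main function (CNI plugin version 1.11.6):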

func main() {

// Set up logging formatting.

log.SetFormatter(&logutils.Formatter{})

// Install a hook that adds file/line no information.

log.AddHook(&logutils.ContextHook{})

// Display the version on "-v", otherwise just delegate to the skel code.

// Use a new flag set so as not to conflict with existing libraries which use "flag"

flagSet := flag.NewFlagSet("Calico", flag.ExitOnError)

version := flagSet.Bool("v", false, "Display version")

err := flagSet.Parse(os.Args[1:])

if err != nil {

fmt.Println(err)

os.Exit(1)

}

if *version {

fmt.Println(VERSION)

os.Exit(0)

}

if err := AddIgnoreUnknownArgs(); err != nil {

os.Exit(1)

}

skel.PluginMain(cmdAdd, nil, cmdDel, cniSpecVersion.All, "")

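}

PluginMain is a delegating function. It takes cmdAdd, cmdGet, cmdDel, the supported CNI versions and an about string; note that Calico's main passes nil for cmdGet. Inside PluginMain we can see that it actually calls PluginMainWithError, and the main logic lives in the dispatcher's pluginMain function: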

func PluginMain(cmdAdd, cmdGet, cmdDel func(_ *CmdArgs) error, versionInfo version.PluginInfo, about string) {

if e := PluginMainWithError(cmdAdd, cmdGet, cmdDel, versionInfo, about); e != nil {

if err := e.Print(); err != nil {

log.Print("Error writing error JSON to stdout: ", err)

}

os.Exit(1)

}

}

func PluginMainWithError(cmdAdd, cmdGet, cmdDel func(_ *CmdArgs) error, versionInfo version.PluginInfo, about string) *types.Error {

return (&dispatcher{

Getenv: os.Getenv,

Stdin: os.Stdin,

Stdout: os.Stdout,

Stderr: os.Stderr,

}).pluginMain(cmdAdd, cmdGet, cmdDel, versionInfo, about)

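}

The dispatcher takes its parameters from the process environment variables and standard input, and is wired to the process's standard output and standard error. The dispatcher's pluginMain function is as follows: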

func (t *dispatcher) pluginMain(cmdAdd, cmdGet, cmdDel func(_ *CmdArgs) error, versionInfo version.PluginInfo, about string) *types.Error {

cmd, cmdArgs, err := t.getCmdArgsFromEnv()

if err != nil {

// Print the about string to stderr when no command is set

if _, ok := err.(missingEnvError); ok && t.Getenv("CNI_COMMAND") == "" && about != "" {

fmt.Fprintln(t.Stderr, about)

return nil

}

return createTypedError(err.Error())

}

if cmd != "VERSION" {

err = validateConfig(cmdArgs.StdinData)

if err != nil {

return createTypedError(err.Error())

}

}

switch cmd {

case "ADD":

err = t.checkVersionAndCall(cmdArgs, versionInfo, cmdAdd)

case "GET":

configVersion, err := t.ConfVersionDecoder.Decode(cmdArgs.StdinData)

if err != nil {

return createTypedError(err.Error())

}

if gtet, err := version.GreaterThanOrEqualTo(configVersion, "0.4.0"); err != nil {

return createTypedError(err.Error())

} else if !gtet {

return &types.Error{

Code: types.ErrIncompatibleCNIVersion,

Msg: "config version does not allow GET",

}

}

for _, pluginVersion := range versionInfo.SupportedVersions() {

gtet, err := version.GreaterThanOrEqualTo(pluginVersion, configVersion)

if err != nil {

return createTypedError(err.Error())

} else if gtet {

if err := t.checkVersionAndCall(cmdArgs, versionInfo, cmdGet); err != nil {

return createTypedError(err.Error())

}

return nil

}

}

return &types.Error{

Code: types.ErrIncompatibleCNIVersion,

Msg: "plugin version does not allow GET",

}

case "DEL":

err = t.checkVersionAndCall(cmdArgs, versionInfo, cmdDel)

case "VERSION":

err = versionInfo.Encode(t.Stdout)

default:

return createTypedError("unknown CNI_COMMAND: %v", cmd)

}

if err != nil {

if e, ok := err.(*types.Error); ok {

// don't wrap Error in Error

return e

}

return createTypedError(err.Error())

}

return nil

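}

From this function we can see that the plugin accepts the ADD, GET, DEL and VERSION commands, which cover adding a container network, removing a container network, and reporting the supported CNI versions. GET is only allowed when the config version is 0.4.0 or later.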

Since our topic is the container network creation flow, we focus on the ADD command here. Look at the checkVersionAndCall function:

func (t *dispatcher) checkVersionAndCall(cmdArgs *CmdArgs, pluginVersionInfo version.PluginInfo, toCall func(*CmdArgs) error) error {

configVersion, err := t.ConfVersionDecoder.Decode(cmdArgs.StdinData)

if err != nil {

return err

}

verErr := t.VersionReconciler.Check(configVersion, pluginVersionInfo)

if verErr != nil {

return &types.Error{

Code: types.ErrIncompatibleCNIVersion,

Msg: "incompatible CNI versions",

Details: verErr.Details(),

}

}

return toCall(cmdArgs)

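}

It first reads the CNI version from the StdinData field of cmdArgs, then uses Check to verify that this version is supported (the supported versions are "0.1.0", "0.2.0", "0.3.0", "0.3.1" and "0.4.0"). If the check passes, it calls toCall, which in this case is cmdAdd. Going back to main, we can see that cmdAdd is defined in main.go, and it is run here as a callback.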
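The check-then-call step can be sketched in a few lines. This is a simplified stand-in and not the CNI library's implementation: decodeVersion and checkAndCall are hypothetical names, the supported list is copied from the text above, and treating a missing cniVersion as "0.1.0" is an assumption based on my understanding of how the CNI library handles legacy configs.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// supported lists the versions named in the text above.
var supported = []string{"0.1.0", "0.2.0", "0.3.0", "0.3.1", "0.4.0"}

// decodeVersion extracts cniVersion from the stdin config.
// Assumption: a missing field is treated as "0.1.0".
func decodeVersion(stdin []byte) (string, error) {
	var conf struct {
		CNIVersion string `json:"cniVersion"`
	}
	if err := json.Unmarshal(stdin, &conf); err != nil {
		return "", fmt.Errorf("decoding version from network config: %v", err)
	}
	if conf.CNIVersion == "" {
		return "0.1.0", nil
	}
	return conf.CNIVersion, nil
}

// checkAndCall runs toCall only if the config version is in the supported list.
func checkAndCall(stdin []byte, toCall func([]byte) error) error {
	v, err := decodeVersion(stdin)
	if err != nil {
		return err
	}
	for _, s := range supported {
		if s == v {
			return toCall(stdin)
		}
	}
	return fmt.Errorf("incompatible CNI versions: config is %q, plugin supports %q", v, supported)
}

func main() {
	add := func(stdin []byte) error {
		fmt.Println("cmdAdd would run here")
		return nil
	}
	fmt.Println(checkAndCall([]byte(`{"cniVersion":"0.1.0"}`), add))
	fmt.Println(checkAndCall([]byte(`{"cniVersion":"9.9.9"}`), add))
}
```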

func cmdAdd(args *skel.CmdArgs) error {

// Unmarshal the network config, and perform validation

conf := NetConf{}

if err := json.Unmarshal(args.StdinData, &conf); err != nil {

return fmt.Errorf("failed to load netconf: %v", err)

}

cniVersion := conf.CNIVersion

ConfigureLogging(conf.LogLevel)

workload, orchestrator, err := GetIdentifiers(args)

if err != nil {

return err

}

logger := CreateContextLogger(workload)

// Allow the nodename to be overridden by the network config

updateNodename(conf, logger)

logger.WithFields(log.Fields{

"Orchestrator": orchestrator,

"Node": nodename,

"Workload": workload,

"ContainerID": args.ContainerID,

}).Info("Extracted identifiers")

logger.WithFields(log.Fields{"NetConfg": conf}).Info("Loaded CNI NetConf")

calicoClient, err := CreateClient(conf)

if err != nil {

return err

}

ready, err := IsReady(calicoClient)

if err != nil {

return err

}

if !ready {

logger.Warn("Upgrade may be in progress, ready flag is not set")

return fmt.Errorf("Calico is currently not ready to process requests")

}

// Always check if there's an existing endpoint.

endpoints, err := calicoClient.WorkloadEndpoints().List(api.WorkloadEndpointMetadata{

Node: nodename,

Orchestrator: orchestrator,

Workload: workload})

if err != nil {

return err

}

logger.Debugf("Retrieved endpoints: %v", endpoints)

var endpoint *api.WorkloadEndpoint

if len(endpoints.Items) == 1 {

endpoint = &endpoints.Items[0]

}

fmt.Fprintf(os.Stderr, "Calico CNI checking for existing endpoint: %v\n", endpoint)

// Collect the result in this variable - this is ultimately what gets "returned" by this function by printing

// it to stdout.

var result *current.Result

// If running under Kubernetes then branch off into the kubernetes code, otherwise handle everything in this

// function.

if orchestrator == "k8s" {

if result, err = k8s.CmdAddK8s(args, conf, nodename, calicoClient, endpoint); err != nil {

return err

}

} else {

...

}

// Handle profile creation - this is only done if there isn't a specific policy handler.

if conf.Policy.PolicyType == "" {

logger.Debug("Handling profiles")

// Start by checking if the profile already exists. If it already exists then there is no work to do.

// The CNI plugin never updates a profile.

exists := true

_, err = calicoClient.Profiles().Get(api.ProfileMetadata{Name: conf.Name})

if err != nil {

_, ok := err.(errors.ErrorResourceDoesNotExist)

if ok {

exists = false

} else {

// Cleanup IP allocation and return the error.

ReleaseIPAllocation(logger, conf.IPAM.Type, args.StdinData)

return err

}

}

if !exists {

// The profile doesn't exist so needs to be created. The rules vary depending on whether k8s is being used.

// Under k8s (without full policy support) the rule is permissive and allows all traffic.

// Otherwise, incoming traffic is only allowed from profiles with the same tag.

fmt.Fprintf(os.Stderr, "Calico CNI creating profile: %s\n", conf.Name)

var inboundRules []api.Rule

if orchestrator == "k8s" {

inboundRules = []api.Rule{{Action: "allow"}}

} else {

inboundRules = []api.Rule{{Action: "allow", Source: api.EntityRule{Tag: conf.Name}}}

}

profile := &api.Profile{

Metadata: api.ProfileMetadata{

Name: conf.Name,

Tags: []string{conf.Name},

},

Spec: api.ProfileSpec{

EgressRules: []api.Rule{{Action: "allow"}},

IngressRules: inboundRules,

},

}

logger.WithField("profile", profile).Info("Creating profile")

if _, err := calicoClient.Profiles().Create(profile); err != nil {

// Cleanup IP allocation and return the error.

ReleaseIPAllocation(logger, conf.IPAM.Type, args.StdinData)

return err

}

}

}

// Set Gateway to nil. Calico-IPAM doesn't set it, but host-local does.

// We modify IPs subnet received from the IPAM plugin (host-local),

// so Gateway isn't valid anymore. It is also not used anywhere by Calico.

for _, ip := range result.IPs {

ip.Gateway = nil

}

// Print result to stdout, in the format defined by the requested cniVersion.

return types.PrintResult(result, cniVersion)

}

To keep the analysis clear, here are the skel.CmdArgs and NetConf data structures first. NetConf can be viewed as the Calico configuration file in struct form.

// CmdArgs captures all the arguments passed in to the plugin

// via both env vars and stdin

type CmdArgs struct {

ContainerID string

Netns string

IfName string

Args string

Path string

StdinData []byte

}

// NetConf stores the common network config for Calico CNI plugin

type NetConf struct {

CNIVersion string `json:"cniVersion,omitempty"`

Name string `json:"name"`

Type string `json:"type"`

IPAM struct {

Name string

Type string `json:"type"`

Subnet string `json:"subnet"`

AssignIpv4 *string `json:"assign_ipv4"`

AssignIpv6 *string `json:"assign_ipv6"`

IPv4Pools []string `json:"ipv4_pools,omitempty"`

IPv6Pools []string `json:"ipv6_pools,omitempty"`

} `json:"ipam,omitempty"`

MTU int `json:"mtu"`

Hostname string `json:"hostname"`

Nodename string `json:"nodename"`

DatastoreType string `json:"datastore_type"`

EtcdAuthority string `json:"etcd_authority"`

EtcdEndpoints string `json:"etcd_endpoints"`

LogLevel string `json:"log_level"`

Policy Policy `json:"policy"`

Kubernetes Kubernetes `json:"kubernetes"`

Args Args `json:"args"`

EtcdScheme string `json:"etcd_scheme"`

EtcdKeyFile string `json:"etcd_key_file"`

EtcdCertFile string `json:"etcd_cert_file"`

EtcdCaCertFile string `json:"etcd_ca_cert_file"`

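}

In cmdAdd, the StdinData field of args is JSON-deserialized into conf (compare it with the Calico configuration file shown earlier). GetIdentifiers returns the workload ID and the orchestrator ID. The workload ID has the form namespace.name of the pod, and the orchestrator ID is "k8s". Since my environment is Kubernetes, I focus on the CmdAddK8s function, with the host-local IPAM code that I am not interested in elided: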

func CmdAddK8s(args *skel.CmdArgs, conf utils.NetConf, nodename string, calicoClient *calicoclient.Client, endpoint *api.WorkloadEndpoint) (*current.Result, error) {

var err error

var result *current.Result

k8sArgs := utils.K8sArgs{}

err = types.LoadArgs(args.Args, &k8sArgs)

if err != nil {

return nil, err

}

utils.ConfigureLogging(conf.LogLevel)

workload, orchestrator, err := utils.GetIdentifiers(args)

if err != nil {

return nil, err

}

logger := utils.CreateContextLogger(workload)

logger.WithFields(log.Fields{

"Orchestrator": orchestrator,

"Node": nodename,

}).Info("Extracted identifiers for CmdAddK8s")

endpointAlreadyExisted := endpoint != nil

if endpointAlreadyExisted {

// This happens when Docker or the node restarts. K8s calls CNI with the same parameters as before.

// Do the networking (since the network namespace was destroyed and recreated).

// There's an existing endpoint - no need to create another. Find the IP address from the endpoint

// and use that in the response.

result, err = utils.CreateResultFromEndpoint(endpoint)

if err != nil {

return nil, err

}

logger.WithField("result", result).Debug("Created result from existing endpoint")

// If any labels changed whilst the container was being restarted, they will be picked up by the policy

// controller so there's no need to update the labels here.

} else {

client, err := newK8sClient(conf, logger)

if err != nil {

return nil, err

}

logger.WithField("client", client).Debug("Created Kubernetes client")

if conf.IPAM.Type == "host-local" && strings.EqualFold(conf.IPAM.Subnet, "usePodCidr") {

...

}

labels := make(map[string]string)

annot := make(map[string]string)

// Only attempt to fetch the labels and annotations from Kubernetes

// if the policy type has been set to "k8s". This allows users to

// run the plugin under Kubernetes without needing it to access the

// Kubernetes API

if conf.Policy.PolicyType == "k8s" {

var err error

labels, annot, err = getK8sLabelsAnnotations(client, k8sArgs)

if err != nil {

return nil, err

}

logger.WithField("labels", labels).Debug("Fetched K8s labels")

logger.WithField("annotations", annot).Debug("Fetched K8s annotations")

// Check for calico IPAM specific annotations and set them if needed.

if conf.IPAM.Type == "calico-ipam" {

v4pools := annot["cni.projectcalico.org/ipv4pools"]

v6pools := annot["cni.projectcalico.org/ipv6pools"]

if len(v4pools) != 0 || len(v6pools) != 0 {

var stdinData map[string]interface{}

if err := json.Unmarshal(args.StdinData, &stdinData); err != nil {

return nil, err

}

var v4PoolSlice, v6PoolSlice []string

if len(v4pools) > 0 {

if err := json.Unmarshal([]byte(v4pools), &v4PoolSlice); err != nil {

logger.WithField("IPv4Pool", v4pools).Error("Error parsing IPv4 IPPools")

return nil, err

}

if _, ok := stdinData["ipam"].(map[string]interface{}); !ok {

logger.Fatal("Error asserting stdinData type")

os.Exit(0)

}

stdinData["ipam"].(map[string]interface{})["ipv4_pools"] = v4PoolSlice

logger.WithField("ipv4_pools", v4pools).Debug("Setting IPv4 Pools")

}

if len(v6pools) > 0 {

if err := json.Unmarshal([]byte(v6pools), &v6PoolSlice); err != nil {

logger.WithField("IPv6Pool", v6pools).Error("Error parsing IPv6 IPPools")

return nil, err

}

if _, ok := stdinData["ipam"].(map[string]interface{}); !ok {

logger.Fatal("Error asserting stdinData type")

os.Exit(0)

}

stdinData["ipam"].(map[string]interface{})["ipv6_pools"] = v6PoolSlice

logger.WithField("ipv6_pools", v6pools).Debug("Setting IPv6 Pools")

}

newData, err := json.Marshal(stdinData)

if err != nil {

logger.WithField("stdinData", stdinData).Error("Error Marshaling data")

return nil, err

}

args.StdinData = newData

logger.WithField("stdin", string(args.StdinData)).Debug("Updated stdin data")

}

}

}

ipAddrsNoIpam := annot["cni.projectcalico.org/ipAddrsNoIpam"]

ipAddrs := annot["cni.projectcalico.org/ipAddrs"]

// switch based on which annotations are passed or not passed.

switch {

case ipAddrs == "" && ipAddrsNoIpam == "":

// Call IPAM plugin if ipAddrsNoIpam or ipAddrs annotation is not present.

logger.Debugf("Calling IPAM plugin %s", conf.IPAM.Type)

ipamResult, err := ipam.ExecAdd(conf.IPAM.Type, args.StdinData)

if err != nil {

return nil, err

}

logger.Debugf("IPAM plugin returned: %+v", ipamResult)

// Convert IPAM result into current Result.

// IPAM result has a bunch of fields that are optional for an IPAM plugin

// but required for a CNI plugin, so this is to populate those fields.

// See CNI Spec doc for more details.

result, err = current.NewResultFromResult(ipamResult)

if err != nil {

utils.ReleaseIPAllocation(logger, conf.IPAM.Type, args.StdinData)

return nil, err

}

if len(result.IPs) == 0 {

utils.ReleaseIPAllocation(logger, conf.IPAM.Type, args.StdinData)

return nil, errors.New("IPAM plugin returned missing IP config")

}

case ipAddrs != "" && ipAddrsNoIpam != "":

// Can't have both ipAddrs and ipAddrsNoIpam annotations at the same time.

e := fmt.Errorf("Can't have both annotations: 'ipAddrs' and 'ipAddrsNoIpam' in use at the same time")

logger.Error(e)

return nil, e

case ipAddrsNoIpam != "":

// ipAddrsNoIpam annotation is set so bypass IPAM, and set the IPs manually.

overriddenResult, err := overrideIPAMResult(ipAddrsNoIpam, logger)

if err != nil {

return nil, err

}

logger.Debugf("Bypassing IPAM to set the result to: %+v", overriddenResult)

// Convert overridden IPAM result into current Result.

// This method fill in all the empty fields necessory for CNI output according to spec.

result, err = current.NewResultFromResult(overriddenResult)

if err != nil {

return nil, err

}

if len(result.IPs) == 0 {

return nil, errors.New("Failed to build result")

}

case ipAddrs != "":

// When ipAddrs annotation is set, we call out to the configured IPAM plugin

// requesting the specific IP addresses included in the annotation.

result, err = ipAddrsResult(ipAddrs, conf, args, logger)

if err != nil {

return nil, err

}

logger.Debugf("IPAM result set to: %+v", result)

}

// Create the endpoint object and configure it.

endpoint = api.NewWorkloadEndpoint()

endpoint.Metadata.Name = args.IfName

endpoint.Metadata.Node = nodename

endpoint.Metadata.Orchestrator = orchestrator

endpoint.Metadata.Workload = workload

endpoint.Metadata.Labels = labels

// Set the profileID according to whether Kubernetes policy is required.

// If it's not, then just use the network name (which is the normal behavior)

// otherwise use one based on the Kubernetes pod's Namespace.

if conf.Policy.PolicyType == "k8s" {

endpoint.Spec.Profiles = []string{fmt.Sprintf("k8s_ns.%s", k8sArgs.K8S_POD_NAMESPACE)}

} else {

endpoint.Spec.Profiles = []string{conf.Name}

}

// Populate the endpoint with the output from the IPAM plugin.

if err = utils.PopulateEndpointNets(endpoint, result); err != nil {

// Cleanup IP allocation and return the error.

utils.ReleaseIPAllocation(logger, conf.IPAM.Type, args.StdinData)

return nil, err

}

logger.WithField("endpoint", endpoint).Info("Populated endpoint")

}

fmt.Fprintf(os.Stderr, "Calico CNI using IPs: %s\n", endpoint.Spec.IPNetworks)

// maybeReleaseIPAM cleans up any IPAM allocations if we were creating a new endpoint;

// it is a no-op if this was a re-network of an existing endpoint.

maybeReleaseIPAM := func() {

logger.Debug("Checking if we need to clean up IPAM.")

logger := logger.WithField("IPs", endpoint.Spec.IPNetworks)

if endpointAlreadyExisted {

logger.Info("Not cleaning up IPAM allocation; this was a pre-existing endpoint.")

return

}

logger.Info("Releasing IPAM allocation after failure")

utils.ReleaseIPAllocation(logger, conf.IPAM.Type, args.StdinData)

}

// Whether the endpoint existed or not, the veth needs (re)creating.

hostVethName := k8sbackend.VethNameForWorkload(workload)

_, contVethMac, err := utils.DoNetworking(args, conf, result, logger, hostVethName)

if err != nil {

logger.WithError(err).Error("Error setting up networking")

maybeReleaseIPAM()

return nil, err

}

mac, err := net.ParseMAC(contVethMac)

if err != nil {

logger.WithError(err).WithField("mac", mac).Error("Error parsing container MAC")

maybeReleaseIPAM()

return nil, err

}

endpoint.Spec.MAC = &cnet.MAC{HardwareAddr: mac}

endpoint.Spec.InterfaceName = hostVethName

endpoint.Metadata.ActiveInstanceID = args.ContainerID

logger.WithField("endpoint", endpoint).Info("Added Mac, interface name, and active container ID to endpoint")

// Write the endpoint object (either the newly created one, or the updated one)

if _, err := calicoClient.WorkloadEndpoints().Apply(endpoint); err != nil {

logger.WithError(err).Error("Error creating/updating endpoint in datastore.")

maybeReleaseIPAM()

return nil, err

}

logger.Info("Wrote updated endpoint to datastore")

return result, nil

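}

The flow of CmdAddK8s is very clear, so let's go through the logic of each step. It calls GetIdentifiers to get workload and orchestrator (in the same form as described above). It then branches on whether the endpoint variable is nil. The comments from the code explain this case directly: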

// This happens when Docker or the node restarts. K8s calls CNI with the same parameters as before.

// Do the networking (since the network namespace was destroyed and recreated).

// There's an existing endpoint - no need to create another. Find the IP address from the endpoint

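// and use that in the response.

If endpoint is not nil, CreateResultFromEndpoint is called, which simply reuses the previously allocated result. We will not follow that branch here. Since we are looking at the creation flow, we start from scratch: assume we are creating a new pod and now need to configure its network, and follow the Calico code to see what gets configured.

If conf.Policy.PolicyType is set to k8s in the Calico configuration file, the plugin fetches the pod's labels and annotations through the Kubernetes client. Then, if conf.IPAM.Type is calico-ipam, it reads the annotations cni.projectcalico.org/ipv4pools and cni.projectcalico.org/ipv6pools (if present) and uses their values to set the IPv4 and IPv6 address pools. Next it reads the annotations cni.projectcalico.org/ipAddrsNoIpam and cni.projectcalico.org/ipAddrs (if present) into ipAddrsNoIpam and ipAddrs. Depending on these two values there are four cases: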

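- Both ipAddrs and ipAddrsNoIpam are empty: the configured IPAM plugin (calico-ipam here) is called directly to allocate an IP address.

- Both ipAddrs and ipAddrsNoIpam are non-empty: an error is returned, because the two cannot be set at the same time.

- Only ipAddrsNoIpam is non-empty: the IP addresses given in ipAddrsNoIpam are used as the result, and calico-ipam is not called.

- Only ipAddrs is non-empty: calico-ipam is called to allocate the specific IP addresses given in ipAddrs.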
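The four cases above reduce to one decision on two strings. The sketch below isolates that decision so it can be read and tested on its own. ipSource, chooseIPSource and the constant names are hypothetical, and the sample annotation values are made up. The real code performs the IPAM call or override inside each branch rather than returning a label.

```go
package main

import (
	"errors"
	"fmt"
)

// ipSource names where the pod's IP addresses will come from.
type ipSource int

const (
	fromIPAM              ipSource = iota // case 1: no annotation, ask the IPAM plugin
	fromAnnotationNoIPAM                  // case 3: use annotated IPs, bypass IPAM
	fromAnnotationViaIPAM                 // case 4: ask IPAM for the annotated IPs
)

func (s ipSource) String() string {
	return [...]string{"call IPAM", "use annotation, skip IPAM", "call IPAM for annotated IPs"}[s]
}

// chooseIPSource mirrors the order of the switch in CmdAddK8s.
func chooseIPSource(ipAddrs, ipAddrsNoIpam string) (ipSource, error) {
	switch {
	case ipAddrs == "" && ipAddrsNoIpam == "":
		return fromIPAM, nil
	case ipAddrs != "" && ipAddrsNoIpam != "":
		// case 2: the two annotations are mutually exclusive
		return 0, errors.New("can't have both annotations: 'ipAddrs' and 'ipAddrsNoIpam' in use at the same time")
	case ipAddrsNoIpam != "":
		return fromAnnotationNoIPAM, nil
	default:
		return fromAnnotationViaIPAM, nil
	}
}

func main() {
	cases := [][2]string{
		{"", ""},
		{`["10.0.0.5"]`, `["10.0.0.6"]`},
		{"", `["10.0.0.5"]`},
		{`["10.0.0.5"]`, ""},
	}
	for _, c := range cases {
		src, err := chooseIPSource(c[0], c[1])
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Println(src)
	}
}
```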

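As we can see, cases 1 and 4 use calico-ipam, while cases 2 and 3 do not. In the CNI design, IPAM is factored out of the individual plugins so that each plugin does not have to implement address allocation itself. Put simply, an IPAM plugin allocates IP addresses and returns the allocation result. The analysis of calico-ipam is left for Part 3.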
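Because the IPAM plugin receives the same stdin configuration (args.StdinData is what gets passed to ipam.ExecAdd), the pool annotations mentioned earlier reach it by being written into that configuration before the call. Here is a minimal sketch of that rewrite. injectPools is a hypothetical helper, the pool CIDR is a made-up sample, and unlike the original code this version returns an error instead of exiting when the ipam section is missing.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// injectPools parses a JSON list of pools from an annotation value and stores it
// under conf["ipam"][key], returning the re-marshaled config.
func injectPools(stdin []byte, key, annotation string) ([]byte, error) {
	if annotation == "" {
		// No annotation: pass the config through unchanged.
		return stdin, nil
	}
	var pools []string
	if err := json.Unmarshal([]byte(annotation), &pools); err != nil {
		return nil, fmt.Errorf("parsing %s annotation: %v", key, err)
	}
	var conf map[string]interface{}
	if err := json.Unmarshal(stdin, &conf); err != nil {
		return nil, err
	}
	ipam, ok := conf["ipam"].(map[string]interface{})
	if !ok {
		return nil, fmt.Errorf("network config has no ipam object")
	}
	ipam[key] = pools
	return json.Marshal(conf)
}

func main() {
	stdin := []byte(`{"name":"k8s-pod-network","type":"calico","ipam":{"type":"calico-ipam"}}`)
	out, err := injectPools(stdin, "ipv4_pools", `["192.168.0.0/16"]`)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(string(out))
}
```

Working on a generic map rather than on NetConf keeps any fields the struct does not know about, which matters because the bytes are forwarded to a different binary.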

Once we have the IP allocated by IPAM, the next important step is to configure it inside the container's network namespace.

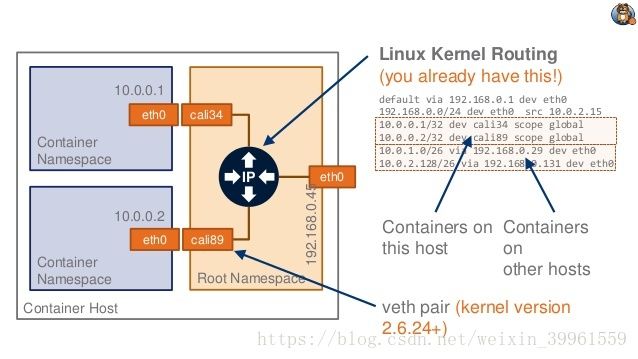

为了便于分析接下来的内容,先copy个图片上来

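Configuring the container network in Calico comes down to configuring a veth pair, with one end on the host and the other in the container. The veth pair connects the container's network namespace to the host's. The host-side veth is a virtual NIC, and Calico networking works in routed mode. For a tutorial on veth pairs, see the article "Linux-虚拟网络设备-veth pair".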

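Back to the source. VethNameForWorkload computes the name of the host-side veth: it takes the SHA1 hash of the workload string, hex-encodes it, keeps the first 11 characters, and prefixes them with cali: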

// VethNameForWorkload returns a deterministic veth name

// for the given Kubernetes workload.

func VethNameForWorkload(workload string) string {

// A SHA1 is always 20 bytes long, and so is sufficient for generating the

// veth name and mac addr.

h := sha1.New()

h.Write([]byte(workload))

return fmt.Sprintf("cali%s", hex.EncodeToString(h.Sum(nil))[:11])

}

DoNetworking is the core operation of the Calico CNI plugin. Here is its logic:

// DoNetworking performs the networking for the given config and IPAM result

func DoNetworking(args *skel.CmdArgs, conf NetConf, result *current.Result, logger *log.Entry, desiredVethName string) (hostVethName, contVethMAC string, err error) {

// Select the first 11 characters of the containerID for the host veth.

hostVethName = "cali" + args.ContainerID[:Min(11, len(args.ContainerID))]

contVethName := args.IfName

var hasIPv4, hasIPv6 bool

// If a desired veth name was passed in, use that instead.

if desiredVethName != "" {

hostVethName = desiredVethName

}

// Clean up if hostVeth exists.

if oldHostVeth, err := netlink.LinkByName(hostVethName); err == nil {

if err = netlink.LinkDel(oldHostVeth); err != nil {

return "", "", fmt.Errorf("failed to delete old hostVeth %v: %v", hostVethName, err)

}

logger.Infof("cleaning old hostVeth: %v", hostVethName)

}

err = ns.WithNetNSPath(args.Netns, func(hostNS ns.NetNS) error {

veth := &netlink.Veth{

LinkAttrs: netlink.LinkAttrs{

Name: contVethName,

Flags: net.FlagUp,

MTU: conf.MTU,

},

PeerName: hostVethName,

}

if err := netlink.LinkAdd(veth); err != nil {

logger.Errorf("Error adding veth %+v: %s", veth, err)

return err

}

hostVeth, err := netlink.LinkByName(hostVethName)

if err != nil {

err = fmt.Errorf("failed to lookup %q: %v", hostVethName, err)

return err

}

if mac, err := net.ParseMAC("EE:EE:EE:EE:EE:EE"); err != nil {

logger.Infof("failed to parse MAC Address: %v. Using kernel generated MAC.", err)

} else {

// Set the MAC address on the host side interface so the kernel does not

// have to generate a persistent address which fails some times.

if err = netlink.LinkSetHardwareAddr(hostVeth, mac); err != nil {

logger.Warnf("failed to Set MAC of %q: %v. Using kernel generated MAC.", hostVethName, err)

}

}

// Explicitly set the veth to UP state, because netlink doesn't always do that on all the platforms with net.FlagUp.

// veth won't get a link local address unless it's set to UP state.

if err = netlink.LinkSetUp(hostVeth); err != nil {

return fmt.Errorf("failed to set %q up: %v", hostVethName, err)

}

contVeth, err := netlink.LinkByName(contVethName)

if err != nil {

err = fmt.Errorf("failed to lookup %q: %v", contVethName, err)

return err

}

// Fetch the MAC from the container Veth. This is needed by Calico.

contVethMAC = contVeth.Attrs().HardwareAddr.String()

logger.WithField("MAC", contVethMAC).Debug("Found MAC for container veth")

// At this point, the virtual ethernet pair has been created, and both ends have the right names.

// Both ends of the veth are still in the container's network namespace.

for _, addr := range result.IPs {

// Before returning, create the routes inside the namespace, first for IPv4 then IPv6.

if addr.Version == "4" {

// Add a connected route to a dummy next hop so that a default route can be set

gw := net.IPv4(169, 254, 1, 1)

gwNet := &net.IPNet{IP: gw, Mask: net.CIDRMask(32, 32)}

err := netlink.RouteAdd(

&netlink.Route{

LinkIndex: contVeth.Attrs().Index,

Scope: netlink.SCOPE_LINK,

Dst: gwNet,

},

)

if err != nil {

return fmt.Errorf("failed to add route inside the container: %v", err)

}

if err = ip.AddDefaultRoute(gw, contVeth); err != nil {

return fmt.Errorf("failed to add the default route inside the container: %v", err)

}

if err = netlink.AddrAdd(contVeth, &netlink.Addr{IPNet: &addr.Address}); err != nil {

return fmt.Errorf("failed to add IP addr to %q: %v", contVethName, err)

}

// Set hasIPv4 to true so sysctls for IPv4 can be programmed when the host side of

// the veth finishes moving to the host namespace.

hasIPv4 = true

}

// Handle IPv6 routes

if addr.Version == "6" {

// Make sure ipv6 is enabled in the container/pod network namespace.

// Without these sysctls enabled, interfaces will come up but they won't get a link local IPv6 address

// which is required to add the default IPv6 route.

if err = writeProcSys("/proc/sys/net/ipv6/conf/all/disable_ipv6", "0"); err != nil {

return fmt.Errorf("failed to set net.ipv6.conf.all.disable_ipv6=0: %s", err)

}

if err = writeProcSys("/proc/sys/net/ipv6/conf/default/disable_ipv6", "0"); err != nil {

return fmt.Errorf("failed to set net.ipv6.conf.default.disable_ipv6=0: %s", err)

}

if err = writeProcSys("/proc/sys/net/ipv6/conf/lo/disable_ipv6", "0"); err != nil {

return fmt.Errorf("failed to set net.ipv6.conf.lo.disable_ipv6=0: %s", err)

}

// No need to add a dummy next hop route as the host veth device will already have an IPv6

// link local address that can be used as a next hop.

// Just fetch the address of the host end of the veth and use it as the next hop.

addresses, err := netlink.AddrList(hostVeth, netlink.FAMILY_V6)

if err != nil {

logger.Errorf("Error listing IPv6 addresses for the host side of the veth pair: %s", err)

return err

}

if len(addresses) < 1 {

// If the hostVeth doesn't have an IPv6 address then this host probably doesn't

// support IPv6. Since a IPv6 address has been allocated that can't be used,

// return an error.

return fmt.Errorf("failed to get IPv6 addresses for host side of the veth pair")

}

hostIPv6Addr := addresses[0].IP

_, defNet, _ := net.ParseCIDR("::/0")

if err = ip.AddRoute(defNet, hostIPv6Addr, contVeth); err != nil {

return fmt.Errorf("failed to add IPv6 default gateway to %v %v", hostIPv6Addr, err)

}

if err = netlink.AddrAdd(contVeth, &netlink.Addr{IPNet: &addr.Address}); err != nil {

return fmt.Errorf("failed to add IPv6 addr to %q: %v", contVeth, err)

}

// Set hasIPv6 to true so sysctls for IPv6 can be programmed when the host side of

// the veth finishes moving to the host namespace.

hasIPv6 = true

}

}

// Now that the everything has been successfully set up in the container, move the "host" end of the

// veth into the host namespace.

if err = netlink.LinkSetNsFd(hostVeth, int(hostNS.Fd())); err != nil {

return fmt.Errorf("failed to move veth to host netns: %v", err)

}

return nil

})

if err != nil {

logger.Errorf("Error creating veth: %s", err)

return "", "", err

}

err = configureSysctls(hostVethName, hasIPv4, hasIPv6)

if err != nil {

return "", "", fmt.Errorf("error configuring sysctls for interface: %s, error: %s", hostVethName, err)

}

// Moving a veth between namespaces always leaves it in the "DOWN" state. Set it back to "UP" now that we're

// back in the host namespace.

hostVeth, err := netlink.LinkByName(hostVethName)

if err != nil {

return "", "", fmt.Errorf("failed to lookup %q: %v", hostVethName, err)

}

if err = netlink.LinkSetUp(hostVeth); err != nil {

return "", "", fmt.Errorf("failed to set %q up: %v", hostVethName, err)

}

// Now that the host side of the veth is moved, state set to UP, and configured with sysctls, we can add the routes to it in the host namespace.

err = SetupRoutes(hostVeth, result)

if err != nil {

return "", "", fmt.Errorf("error adding host side routes for interface: %s, error: %s", hostVeth.Attrs().Name, err)

}

return hostVethName, contVethMAC, err

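}

Think about it: if we were to create a veth pair ourselves, what steps would we take? Working inside some network namespace (here, the container's network namespace):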

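- Create the veth pair, with a host end and a container end

- Configure the MAC, IP, gateway and routes on the container-side veth

- Configure the MAC, routes and so on for the host-side veth

- Move the host-side veth into the host's network namespace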
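As a rough aid, the steps above can be written out as the iproute2 commands one might type by hand inside the container's network namespace. The sketch below only builds the command strings and does not execute anything. The mapping to ip commands is my own approximation, not something taken from the Calico source, and vethPlan, the interface names and the pod address are hypothetical. The dummy gateway 169.254.1.1 and the MAC ee:ee:ee:ee:ee:ee are the values that appear in DoNetworking, and the final command assumes that PID 1 lives in the host's network namespace.

```go
package main

import "fmt"

// vethPlan returns iproute2 commands roughly equivalent to the manual steps,
// intended to be run inside the container's network namespace.
func vethPlan(contIf, hostIf, podCIDR string, mtu int) []string {
	const gw = "169.254.1.1" // dummy next hop used for the IPv4 default route
	return []string{
		// Create the pair: contIf stays in the container, hostIf is its peer.
		fmt.Sprintf("ip link add %s mtu %d type veth peer name %s", contIf, mtu, hostIf),
		// Host end: fixed MAC, then bring it up.
		fmt.Sprintf("ip link set %s address ee:ee:ee:ee:ee:ee", hostIf),
		fmt.Sprintf("ip link set %s up", hostIf),
		// Container end: bring it up, add a route to the dummy gateway,
		// a default route via it, and finally the pod address.
		fmt.Sprintf("ip link set %s up", contIf),
		fmt.Sprintf("ip route add %s dev %s scope link", gw, contIf),
		fmt.Sprintf("ip route add default via %s dev %s", gw, contIf),
		fmt.Sprintf("ip addr add %s dev %s", podCIDR, contIf),
		// Move the host end into the network namespace of PID 1 (assumed to be the host's).
		fmt.Sprintf("ip link set %s netns 1", hostIf),
	}
}

func main() {
	for _, cmd := range vethPlan("eth0", "cali0123456789a", "10.0.0.5/32", 1500) {
		fmt.Println(cmd)
	}
}
```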

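Comparing these steps against DoNetworking, the logic in the code closely matches what we would do by hand, with one ordering difference: the host-side routes are added by SetupRoutes only after the host end has been moved into the host namespace and brought back up. DoNetworking also calls configureSysctls, which sets the necessary sysctls for the host-side veth. DoNetworking finally returns the host veth name and the container MAC. CmdAddK8s records the container MAC, the host veth name and the container ID on the endpoint, and then writes the endpoint to the datastore (etcd in this setup) through the Calico client.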

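Finally CmdAddK8s returns its result. cmdAdd prints that result to stdout, which is the output of running the Calico CNI binary, and at this point the program exits.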