Running ORB-SLAM2 on Your Own Dataset (Monocular)

Contents

- Environment

- Data Acquisition

- Camera Calibration

- Data Preprocessing

Environment

· Ubuntu 18.04

· ORB-SLAM2

This post assumes the environment is already set up and ORB-SLAM2 has already been built.

Data Acquisition

You can record data with a phone or any other camera. I recorded video with a Sony Xperia Z5 at 1920×1080 and 59.87 fps.

Camera Calibration

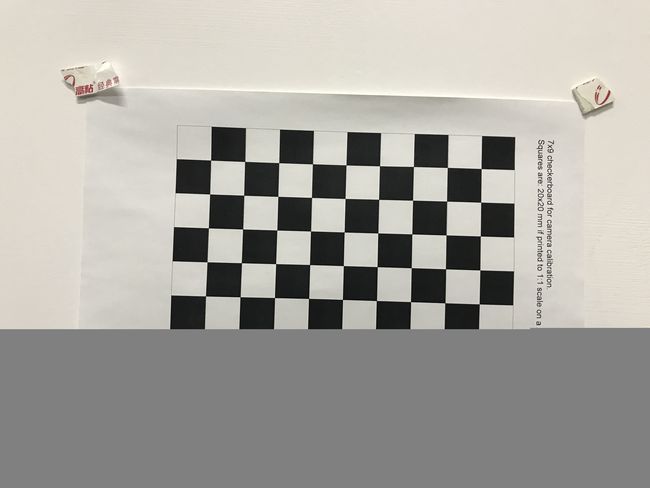

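This example calibrates the camera with a 7x9 chessboard, shown in Figure 1.

Steps: put the chessboard photos in ./img/ and run the script below. It detects the chessboard corners, calibrates the camera, saves the intrinsic matrix and distortion coefficients to matrix.txt and dist.txt, and then displays undistorted images as a check.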

"""Camera calibration script."""

import os

import cv2
import numpy as np

# Termination criteria for sub-pixel corner refinement
criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 10, 0.001)

# Pattern size passed to findChessboardCorners; OpenCV counts the
# INNER corners of the board (points where squares meet), not the squares
row_no = 9
col_no = 7

# Object points (0,0,0), (1,0,0), (2,0,0), ..., (8,6,0), in units of one square
objp = np.zeros((row_no * col_no, 3), np.float32)
objp[:, :2] = np.mgrid[0:row_no, 0:col_no].T.reshape(-1, 2)

# Object points and image points collected from all images
objpoints = []  # 3D points in the board (world) frame
imgpoints = []  # 2D points in the image plane

img_dir = './img/'
images = sorted(os.listdir(img_dir))

for fname in images:
    img = cv2.imread(os.path.join(img_dir, fname))
    if img is None:  # skip files that are not readable images
        continue
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # Find the chessboard corners
    ret, corners = cv2.findChessboardCorners(gray, (row_no, col_no), None)

    # If found, add the object points and the sub-pixel refined image points
    if ret:
        objpoints.append(objp)
        corners2 = cv2.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)
        imgpoints.append(corners2)

        # Draw and display the corners
        img = cv2.drawChessboardCorners(img, (row_no, col_no), corners2, ret)
        cv2.imshow('img', img)
        cv2.waitKey(30)

cv2.destroyAllWindows()

# ret is the RMS reprojection error in pixels
ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(objpoints, imgpoints, gray.shape[::-1], None, None)
print('RMS reprojection error:', ret)
print(mtx)
print(dist)
np.savetxt('./matrix.txt', mtx)
np.savetxt('./dist.txt', dist)

# Check the result by undistorting the calibration images
h, w = gray.shape[:2]
newcameramtx, roi = cv2.getOptimalNewCameraMatrix(mtx, dist, (w, h), 1, (w, h))

for fname in images:
    img = cv2.imread(os.path.join(img_dir, fname))
    if img is None:
        continue

    # Option 1: call undistort directly, then crop to the valid ROI
    dst = cv2.undistort(img, mtx, dist, None, newcameramtx)
    x, y, rw, rh = roi
    dst = dst[y:y + rh, x:x + rw]
    cv2.imshow('img', img)
    cv2.imshow('dst', dst)
    cv2.waitKey(0)

    # Option 2: first compute the mapping from the original image to the
    # corrected image, then apply it with remap
    # mapx, mapy = cv2.initUndistortRectifyMap(mtx, dist, None, newcameramtx, (w, h), 5)
    # dst = cv2.remap(img, mapx, mapy, cv2.INTER_LINEAR)
    # x, y, rw, rh = roi
    # dst = dst[y:y + rh, x:x + rw]
    # cv2.imwrite('calibresult.png', dst)

cv2.destroyAllWindows()

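The calibration results are:

Camera intrinsic matrix (matrix.txt):

1.480823466461038151e+03 0.000000000000000000e+00 9.428762100578752552e+02
0.000000000000000000e+00 1.495030449101173872e+03 5.331657639570393030e+02
0.000000000000000000e+00 0.000000000000000000e+00 1.000000000000000000e+00

That is, fx ≈ 1480.82, fy ≈ 1495.03, cx ≈ 942.88, cy ≈ 533.17 (in pixels).

Distortion coefficients (dist.txt, in OpenCV order k1, k2, p1, p2, k3):

-5.551663673857653442e-02 9.888473859774349339e-02 -1.988202569460489292e-03 -1.191351830829561788e-02 -2.278315633704405818e-01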
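To show what these numbers mean, the sketch below projects 3D points given in the camera frame to pixel coordinates with OpenCV's pinhole model plus radial (k1, k2, k3) and tangential (p1, p2) distortion, written in plain NumPy. The function name `project_points` is hypothetical; in practice `cv2.projectPoints` does the same job. It reads fx, fy, cx, cy from the diagonal and last column of the intrinsic matrix and ignores the off-diagonal entries, which are 0 in the matrix above.

```python
import numpy as np


def project_points(points_cam, mtx, dist):
    """Project 3D points in the camera frame (Z > 0) to pixel coordinates
    using the OpenCV pinhole model with radial (k1, k2, k3) and tangential
    (p1, p2) distortion."""
    pts = np.asarray(points_cam, dtype=float).reshape(-1, 3)
    K = np.asarray(mtx, dtype=float).reshape(3, 3)
    k1, k2, p1, p2, k3 = np.asarray(dist, dtype=float).ravel()[:5]

    # Normalized image coordinates
    x = pts[:, 0] / pts[:, 2]
    y = pts[:, 1] / pts[:, 2]
    r2 = x * x + y * y

    # Radial and tangential distortion
    radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    x_d = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    y_d = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y

    # Intrinsics: u = fx * x_d + cx, v = fy * y_d + cy
    u = K[0, 0] * x_d + K[0, 2]
    v = K[1, 1] * y_d + K[1, 2]
    return np.stack([u, v], axis=1)
```

A point on the optical axis always lands on (cx, cy); the distortion terms grow with the distance r from the image center, which is why the largest errors in an uncalibrated camera show up near the image borders.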

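Next, go to ORB_SLAM2/Examples/Monocular, copy KITTI00-02.yaml, rename the copy to z5.yaml, open it, and write the calibration parameters above into the corresponding fields (Camera.fx, Camera.fy, Camera.cx, Camera.cy, Camera.k1, Camera.k2, Camera.p1, Camera.p2).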
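Copying the values by hand is error-prone, so here is a small helper sketch (the name `camera_yaml_lines` is hypothetical) that turns the intrinsic matrix and distortion coefficients into Camera.* lines to paste into z5.yaml. Assumptions: the coefficients are in OpenCV's order k1, k2, p1, p2, k3, and values are rounded to six decimals; add a Camera.k3 line if your template has none, since ORB-SLAM2 treats it as an optional fifth coefficient; Camera.fps is set to the frame rate of the extracted image sequence (59.87 / frame_interval), not the raw video; and Camera.RGB is 0 because frames written with cv2.imwrite are read back by OpenCV in BGR order.

```python
import numpy as np


def camera_yaml_lines(mtx, dist, fps, rgb=0):
    """Format OpenCV calibration results as ORB-SLAM2 'Camera.*' settings lines.

    mtx:  3x3 intrinsic matrix [[fx, 0, cx], [0, fy, cy], [0, 0, 1]]
    dist: distortion coefficients in OpenCV order (k1, k2, p1, p2, k3)
    fps:  frame rate of the extracted image sequence
    rgb:  0 if the images are stored in BGR order (cv2.imwrite), 1 for RGB
    """
    K = np.asarray(mtx, dtype=float).reshape(3, 3)
    k1, k2, p1, p2, k3 = np.asarray(dist, dtype=float).ravel()[:5]
    entries = [
        ("Camera.fx", K[0, 0]), ("Camera.fy", K[1, 1]),
        ("Camera.cx", K[0, 2]), ("Camera.cy", K[1, 2]),
        ("Camera.k1", k1), ("Camera.k2", k2),
        ("Camera.p1", p1), ("Camera.p2", p2),
        ("Camera.k3", k3),
        ("Camera.fps", fps),
    ]
    lines = [f"{key}: {value:.6f}" for key, value in entries]
    lines.append(f"Camera.RGB: {rgb}")
    return lines
```

Usage, with the files saved by the calibration script: `print("\n".join(camera_yaml_lines(np.loadtxt("./matrix.txt"), np.loadtxt("./dist.txt"), fps=59.87 / 2)))`, then replace the matching lines in z5.yaml.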

Data Preprocessing

Record a video with the Z5, put it in ./video/, and preprocess it with the following script:

# coding:utf-8
"""Convert videos into KITTI-style monocular sequences for ORB-SLAM2 (mono_kitti)."""

import os

import cv2
import numpy as np


class Kitti:

    def __init__(self, video_parent_dir, frame_interval, img_width, img_height):
        '''
        :param video_parent_dir: directory that contains the input videos
        :param frame_interval: save one frame every frame_interval frames
        :param img_width: width of the output image sequence (center crop)
        :param img_height: height of the output image sequence (center crop)
        '''
        # Sorted so that the videos map to 00, 01, ... in a stable order
        self.video_dirs = [os.path.join(video_parent_dir, name)
                           for name in sorted(os.listdir(video_parent_dir))]
        self.frame_interval = frame_interval
        self.img_width = img_width
        self.img_height = img_height
        self.dir_cnt = 0

    def get_format_name(self, idx, length):
        '''
        :param idx: image index, such as 1, 2, 3
        :param length: length of the zero-padded name
        :return: zero-padded name such as 000001, 000002, ...
        '''
        return str(idx).zfill(length)

    def run(self, video_dir):
        videoCapture = cv2.VideoCapture(video_dir)
        # Video frame rate
        fps = videoCapture.get(cv2.CAP_PROP_FPS)
        # Video width and height
        size = (int(videoCapture.get(cv2.CAP_PROP_FRAME_WIDTH)),
                int(videoCapture.get(cv2.CAP_PROP_FRAME_HEIGHT)))
        # Offsets of the centered crop window
        width_offset = (size[0] - self.img_width) // 2
        height_offset = (size[1] - self.img_height) // 2
        assert width_offset >= 0 and height_offset >= 0, 'crop size exceeds video size'

        print('=======================')
        print('init_size: ', size)
        print('dest_size: (', self.img_width, ',', self.img_height, ')')
        print('Fps: ', fps)
        print('=======================')

        # One output directory per video: ./00, ./01, ...
        img_sequence_parentdir = './' + self.get_format_name(self.dir_cnt, 2)
        os.makedirs(img_sequence_parentdir + '/image_0/', exist_ok=True)

        total_frame_idx = 0  # index of the current video frame
        count = 0            # number of saved frames
        timestamps = []      # one timestamp (seconds) per saved frame

        # Read the first frame
        success, frame = videoCapture.read()
        while success:
            # Keep one frame every frame_interval frames
            # (frame_interval=2 at 59.87 fps keeps about 30 frames per second)
            if total_frame_idx % self.frame_interval == 0:
                format_img_name = (img_sequence_parentdir + '/image_0/'
                                   + self.get_format_name(count, 6) + '.png')
                print(format_img_name)
                cv2.imwrite(format_img_name,
                            frame[height_offset:height_offset + self.img_height,
                                  width_offset:width_offset + self.img_width])
                timestamps.append(total_frame_idx / fps)
                count += 1
            # Read the next frame
            success, frame = videoCapture.read()
            total_frame_idx += 1

        videoCapture.release()
        self.dir_cnt += 1
        # mono_kitti expects exactly one line in times.txt per image
        np.savetxt(img_sequence_parentdir + '/times.txt', timestamps)

    def main(self):
        # Videos are processed sequentially because dir_cnt is shared state
        for video_dir in self.video_dirs:
            self.run(video_dir)


if __name__ == "__main__":
    K = Kitti('./video/', 2, 1920, 1080)
    K.main()
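The script center-crops every frame to img_width x img_height. With the 1920x1080 setting used here the crop is the full frame, so the calibration carries over unchanged. If you choose a smaller crop, pixel coordinates are shifted by the crop offsets: cx and cy move, while fx, fy and the distortion coefficients stay the same (the script does not rescale). A minimal sketch, assuming the same integer offsets the script computes; `crop_intrinsics` is a hypothetical helper:

```python
import numpy as np


def crop_intrinsics(mtx, video_size, crop_size):
    """Return the intrinsic matrix for a centered crop of the video frames.

    video_size, crop_size: (width, height) in pixels.
    Offsets match the preprocessing script: (video - crop) // 2.
    """
    K = np.array(mtx, dtype=float)
    x_off = (video_size[0] - crop_size[0]) // 2
    y_off = (video_size[1] - crop_size[1]) // 2
    # Cropping only translates pixel coordinates, so only cx and cy change
    K[0, 2] -= x_off
    K[1, 2] -= y_off
    return K
```

For example, cropping 1920x1080 to 1280x720 gives offsets of 320 and 180 pixels, so cx ≈ 942.88 becomes ≈ 622.88 and cy ≈ 533.17 becomes ≈ 353.17.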

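After the script finishes, each video produces a sequence directory (00, 01, ...) laid out like this:

00/
    image_0/
    times.txt

image_0 holds the keyframe sequence (000000.png, 000001.png, ...), and times.txt holds the timestamp of each keyframe in seconds.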
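mono_kitti does not list the image_0 directory: it reads times.txt and builds the file names 000000.png, 000001.png, ... from the line index, so the number of timestamps must match the number of images, or the run stops with "Failed to load image". A quick pre-flight check, sketched as a hypothetical `check_sequence` function:

```python
import os


def check_sequence(seq_dir):
    """Check that times.txt and image_0/ in seq_dir are consistent for mono_kitti.

    Returns a list of problem descriptions (empty if everything looks fine).
    """
    with open(os.path.join(seq_dir, 'times.txt')) as f:
        times = [float(line) for line in f if line.strip()]
    images = {name for name in os.listdir(os.path.join(seq_dir, 'image_0'))
              if name.endswith('.png')}

    problems = []
    if len(images) != len(times):
        problems.append('%d images but %d timestamps' % (len(images), len(times)))
    # mono_kitti opens 000000.png ... for exactly len(times) frames
    missing = ['%06d.png' % i for i in range(len(times)) if '%06d.png' % i not in images]
    if missing:
        problems.append('first missing image: ' + missing[0])
    if any(b <= a for a, b in zip(times, times[1:])):
        problems.append('timestamps are not strictly increasing')
    return problems
```

Running `print(check_sequence('./00'))` before launching ORB-SLAM2 should print an empty list.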

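Note: "keyframes" here simply means the frames sampled from the video once every frame_interval frames, not ORB-SLAM2's own keyframes. For example, for a 10-frame video with frame_interval = 2, the saved frames are 0, 2, 4, 6 and 8 (0-based indices). frame_interval is the second argument of the Kitti class and can be adjusted as needed.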
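The sampling rule and the matching timestamps fit in one line. `sampled_frames` is a hypothetical helper that mirrors the script's sampling loop: frame i is kept when i % frame_interval == 0, and its timestamp is i / fps.

```python
def sampled_frames(total_frames, frame_interval, fps):
    """List (frame_index, timestamp_in_seconds) for every frame the script keeps."""
    return [(i, i / fps) for i in range(0, total_frames, frame_interval)]
```

With the settings used here (59.87 fps, frame_interval = 2) the saved sequence runs at about 59.87 / 2 ≈ 29.9 frames per second, and that is the rate to put in Camera.fps.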


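To run ORB-SLAM2 on your own footage:

- Change into the ORB_SLAM2 directory.

- Run:

./Examples/Monocular/mono_kitti ./Vocabulary/ORBvoc.txt PATH_TO_z5.yaml ./PATH_TO_IMAGE_SEQUENCE

where PATH_TO_IMAGE_SEQUENCE is a sequence directory such as 00 that contains image_0 and times.txt.

(On my machine the command was: ./Examples/Monocular/mono_kitti ./Vocabulary/ORBvoc.txt ./Examples/z5.yaml /media/lab/ff048a6a-f7ae-4301-9843-39db29f48c47/lib/Downloads/img_sequence2/01)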
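If you process several sequences, a small Python wrapper can assemble the same command and check the inputs first. `build_mono_kitti_cmd` and `run_mono_kitti` are hypothetical names; the only assumption about ORB-SLAM2 is the argument order shown above (vocabulary, settings file, sequence directory). Paths are made absolute because the process is started from the ORB_SLAM2 directory, where mono_kitti also writes its KeyFrameTrajectory.txt.

```python
import os
import subprocess


def build_mono_kitti_cmd(orb_slam2_dir, settings_yaml, sequence_dir):
    """Assemble the mono_kitti command line with absolute paths."""
    root = os.path.abspath(orb_slam2_dir)
    return [
        os.path.join(root, "Examples", "Monocular", "mono_kitti"),
        os.path.join(root, "Vocabulary", "ORBvoc.txt"),
        os.path.abspath(settings_yaml),
        os.path.abspath(sequence_dir),
    ]


def run_mono_kitti(orb_slam2_dir, settings_yaml, sequence_dir):
    """Check that every input exists, then run mono_kitti from the ORB_SLAM2 directory."""
    cmd = build_mono_kitti_cmd(orb_slam2_dir, settings_yaml, sequence_dir)
    for path in cmd:
        if not os.path.exists(path):
            raise FileNotFoundError(path)
    subprocess.run(cmd, cwd=os.path.abspath(orb_slam2_dir), check=True)
```

Usage: `run_mono_kitti('ORB_SLAM2', 'ORB_SLAM2/Examples/Monocular/z5.yaml', '00')`.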

Final result: