An SVM spam classifier in MATLAB, based on Andrew Ng's programming exercise 6

0. Background

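I'm not fluent in MATLAB, not fluent in Python either, and I haven't really gotten into machine learning yet. I know basically nothing.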

Our plan: get a dataset ---> build a dictionary ---> get the feature vectors X and labels y ---> train a model ---> predict on new emails.

1. Downloading the email data

http://spamassassin.apache.org/old/publiccorpus/

Each archive's contents are described in readme.html on that site. I used spam_2, hard_ham and easy_ham (and in fact only took a few dozen emails from them).

Then you will probably want to batch-rename the files, e.g. with the Windows cmd command: ren *.* *.txt
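`ren *.* *.txt` only works in the Windows command prompt. If you would rather stay inside MATLAB, here is a minimal sketch that appends `.txt` to every file in a folder. It is a self-contained demo under stated assumptions: it creates its own temporary folder with two made-up file names so it runs on its own, so point `folder` at your own data instead. It appends `.txt` to the full original name rather than replacing anything, so files that were distinct before stay distinct.

```matlab
% Sketch: append '.txt' to every regular file in a folder
% (an in-MATLAB alternative to the Windows-only "ren *.* *.txt").
% ASSUMPTION: 'folder' is a hypothetical demo directory created here so the
% snippet runs standalone; replace it with your own data folder.
folder = tempname;
mkdir(folder);
demoNames = {'00001.abc', '00002.def'};      % made-up file names
for k = 1:numel(demoNames)
    fid = fopen(fullfile(folder, demoNames{k}), 'w');
    fprintf(fid, 'Subject: hello\n\nbody text\n');
    fclose(fid);
end

files = dir(folder);
files = files(~[files.isdir]);               % skip '.', '..' and subfolders
for k = 1:numel(files)
    oldName = files(k).name;
    [~, ~, ext] = fileparts(oldName);
    if ~strcmpi(ext, '.txt')                 % leave existing .txt files alone
        movefile(fullfile(folder, oldName), fullfile(folder, [oldName '.txt']));
    end
end

renamed = dir(fullfile(folder, '*.txt'));
fprintf('%s\n', renamed.name);
```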

Note:

spam: y=1

non-spam: y=0

(Who can ever remember which is which, right?)
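The training script further down hardcodes the labels as `zeros(20,1)` followed by `ones(20,1)`, which is only correct if `dir` happens to list every non-spam file before every spam file. A sketch of one way around that, assuming each class lives in its own folder: walk the folders and record each file's label as it is listed, using the convention above (spam = 1, non-spam = 0). The `ham`/`spam` folders and dummy files are hypothetical and are created in a temp directory only so the snippet runs standalone.

```matlab
% Sketch: build the file list and the label vector y together, so y always
% matches the file order (spam: y = 1, non-spam: y = 0).
% ASSUMPTION: one folder per class; the temp folders below are hypothetical
% stand-ins for your copies of e.g. easy_ham and spam_2.
root = tempname;
mkdir(root);
hamDir  = fullfile(root, 'ham');
spamDir = fullfile(root, 'spam');
mkdir(hamDir);
mkdir(spamDir);
% Create a few empty dummy files so the snippet runs on its own.
dummy = {fullfile(hamDir, 'a.txt'), fullfile(hamDir, 'b.txt'), fullfile(spamDir, 'c.txt')};
for k = 1:numel(dummy)
    fclose(fopen(dummy{k}, 'w'));
end

classDirs   = {hamDir, spamDir};
classLabels = [0, 1];                 % non-spam = 0, spam = 1
files = {};
y = [];
for c = 1:numel(classDirs)
    list = dir(classDirs{c});
    list = list(~[list.isdir]);       % drop '.', '..' and subfolders
    for k = 1:numel(list)
        files{end+1, 1} = fullfile(classDirs{c}, list(k).name); %#ok<AGROW>
        y(end+1, 1) = classLabels(c);                            %#ok<AGROW>
    end
end
fprintf('%d files, %d spam\n', numel(files), sum(y));
```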

2. Building the dictionary (vocabList)

Here's the first big pitfall. In Andrew Ng's exercise the dictionary, vocab.txt, is handed to us ready-made, so here we have to build our own.

Note that the dictionary is collected from spam emails. I used 100 spam emails here (way too few, I know, but hey, it runs fast!).

Processing the email content (adapted from the exercise's processEmail.m):

```matlab
function contents = myProcessEmail(email_contents)
% MYPROCESSEMAIL Normalize a raw email and return its stemmed words as one
% space-separated string. Uses porterStemmer from the ex6 starter code.
% (No getVocabList call here: vocab.txt does not exist yet while we are
% still building the dictionary.)

contents = '';

fprintf('\n==== Processing Email ====\n\n');

% Strip the header: everything before the first blank line
hdrstart = strfind(email_contents, [char(10) char(10)]);
if ~isempty(hdrstart)
    email_contents = email_contents(hdrstart(1):end);
end

% Lower-case everything
email_contents = lower(email_contents);

% Strip HTML tags
email_contents = regexprep(email_contents, '<[^<>]+>', ' ');

% Replace numbers with 'number'
email_contents = regexprep(email_contents, '[0-9]+', 'number');

% Replace URLs with 'httpaddr'
email_contents = regexprep(email_contents, '(http|https)://[^\s]*', 'httpaddr');

% Replace email addresses with 'emailaddr'
email_contents = regexprep(email_contents, '[^\s]+@[^\s]+', 'emailaddr');

% Replace '$' with 'dollar'
email_contents = regexprep(email_contents, '[$]+', 'dollar');

% Tokenize, clean and stem each word
while ~isempty(email_contents)
    [str, email_contents] = strtok(email_contents, ...
        [' @$/#.-:&*+=[]?!(){},''">_<;%' char(10) char(13)]);
    % Remove any remaining non-alphanumeric characters
    str = regexprep(str, '[^a-zA-Z0-9]', '');
    % Stem the word; skip it if the stemmer fails
    try
        str = porterStemmer(strtrim(str));
    catch
        continue;
    end
    if isempty(str)
        continue;
    end
    contents = [contents, ' ', str]; %#ok<AGROW>
end
end
```
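To see what each normalization step in myProcessEmail actually does, the same `regexprep` calls can be run, in the same order, on a made-up email. This sketch skips `porterStemmer` (part of the ex6 starter code) so it runs without it, and only strips the header when a blank line is actually present.

```matlab
% Sketch: run the normalization steps of myProcessEmail on a made-up email,
% without porterStemmer (ex6 starter code), so it runs standalone.
email = sprintf('Subject: hi\n\nVisit <b>HTTP://Example.com/x</b> or mail ME@foo.org: $$ 100 off!');

hdrstart = strfind(email, [char(10) char(10)]);
if ~isempty(hdrstart)                    % strip the header only if one exists
    email = email(hdrstart(1):end);
end
email = lower(email);
email = regexprep(email, '<[^<>]+>', ' ');                     % HTML tags
email = regexprep(email, '[0-9]+', 'number');                  % numbers
email = regexprep(email, '(http|https)://[^\s]*', 'httpaddr'); % URLs
email = regexprep(email, '[^\s]+@[^\s]+', 'emailaddr');        % email addresses
email = regexprep(email, '[$]+', 'dollar');                    % dollar signs

% Tokenize with the same delimiters as myProcessEmail
delims = [' @$/#.-:&*+=[]?!(){},''">_<;%' char(10) char(13)];
tokens = {};
rest = email;
while ~isempty(rest)
    [tok, rest] = strtok(rest, delims);
    tok = regexprep(tok, '[^a-zA-Z0-9]', '');
    if ~isempty(tok)
        tokens{end+1} = tok; %#ok<AGROW>
    end
end
disp(strjoin(tokens, ' '));
% prints: visit httpaddr or mail emailaddr dollar number off
```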

Building the dictionary and writing it to vocab.txt:

```matlab
% Build the dictionary from the spam emails in ./vocabList/ and write vocab.txt
words  = {};   % distinct words seen so far
counts = [];   % counts(z) = number of times words{z} has appeared

% List the files, skipping '.', '..' and any other directories
folder = './vocabList/';
list = dir(folder);
list = list(~[list.isdir]);
fileNum = numel(list);
fprintf('fileNum: %d\n', fileNum);

for i = 1:fileNum
    filename = [folder list(i).name];
    email_contents = readFile(filename);
    fprintf('%s\n', filename);

    processed_contents = strtrim(myProcessEmail(email_contents));
    split_contents = regexp(processed_contents, '\s', 'split');

    % Count every word; use k so the file loop variable i is not shadowed
    for k = 1:numel(split_contents)
        w = split_contents{k};
        if isempty(w)
            continue;
        end
        z = find(strcmp(words, w), 1);   % linear search over known words
        if isempty(z)
            words{end+1} = w;   %#ok<AGROW>
            counts(end+1) = 1;  %#ok<AGROW>
        else
            counts(z) = counts(z) + 1;
        end
    end
end

fprintf('----------build ordered list----------\n');
% Keep words that occur at least 100 times, sorted alphabetically
choosenList = sort(words(counts >= 100));

% Write one "index word" pair per line
fid = fopen('vocab.txt', 'wt');
for i = 1:numel(choosenList)
    fprintf(fid, '%d %s\n', i, choosenList{1,i});
end
fclose(fid);
```
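The dictionary script above finds each word with a linear search over all words seen so far, which gets slow as the dictionary grows. Here is a sketch of the same count, filter, sort and write steps using `containers.Map`, MATLAB's built-in hash map. Assumptions: `docs` stands in for the processed text of each spam email, `minCount = 2` only suits this toy data (the post uses 100), and the output path is hypothetical.

```matlab
% Sketch: count words with a hash map instead of a linear search.
% ASSUMPTION: 'docs' stands in for strtrim(myProcessEmail(...)) of each spam
% email; minCount = 2 suits this toy data (the post uses 100).
docs = {'click here to win money', 'win money now', 'click to win'};
minCount = 2;

counts = containers.Map('KeyType', 'char', 'ValueType', 'double');
for d = 1:numel(docs)
    words = strsplit(strtrim(docs{d}), ' ');
    for k = 1:numel(words)
        w = words{k};
        if isempty(w)
            continue;
        end
        if isKey(counts, w)
            counts(w) = counts(w) + 1;
        else
            counts(w) = 1;
        end
    end
end

allWords  = keys(counts);                 % distinct words
allCounts = cell2mat(values(counts));     % counts in the same order as keys
chosen = sort(allWords(allCounts >= minCount));

outFile = fullfile(tempdir, 'vocab_demo.txt');   % hypothetical output path
fid = fopen(outFile, 'wt');
for i = 1:numel(chosen)
    fprintf(fid, '%d %s\n', i, chosen{i});       % "index word", one per line
end
fclose(fid);
disp(chosen);
```

The map lookup replaces the per-word scan, and filtering by a logical mask replaces the manual space-removal step.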

Note:

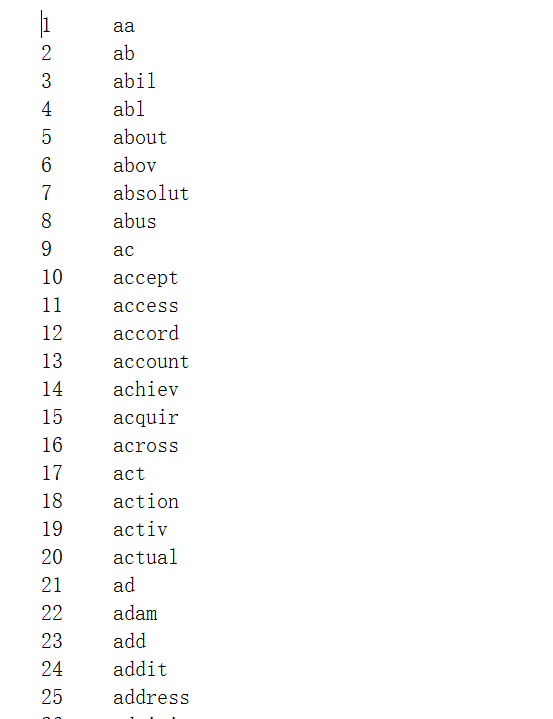

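Opened in Notepad, the original vocab.txt looks like this:

So I assumed the format was roughly `%s%d` (word, then index) and wrote my dictionary straight to the txt file that way. But whatever I did, getVocabList never returned plain words, only the word and the index glued together.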

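I kept tweaking the fscanf calls, suspecting an encoding problem (binary vs. ASCII), but it turned out both files were ANSI-encoded.

To check a txt file's encoding: click Save As, and the encoding is shown in the dialog.

This cost me two days.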

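Then, finally, the big reveal! Opened in WordPad, vocab.txt looks like this:

...so it was a formatting problem all along: each line is an index followed by a word. (Notepad most likely just wasn't displaying the file's Unix-style line breaks, which is why the layout looked different there.)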

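So the final line that writes each entry is:

`fprintf(fid,'%d %s\n',i,choosenList{1,i});`

Index first, then whitespace, then the word. That is the order getVocabList reads them in: `fscanf(fid, '%d', 1)` for the index, then `fscanf(fid, '%s', 1)` for the word. With `%s%d` the word and the index end up glued into one token (e.g. `word12`), and `%s` reads that whole token back as the "word". (Shouldn't that have been obvious!)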
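A sketch of the round trip, to make the format concrete: write three made-up words in `index word` format and read them back the way getVocabList does, with `fscanf(fid, '%d', 1)` followed by `fscanf(fid, '%s', 1)`. One deliberate difference, which is my own change rather than the exercise's code: it reads until end of file instead of looping a fixed number of times. If I remember correctly, the ex6 getVocabList hardcodes the dictionary size (`n = 1899`), so that line is worth checking when you swap in your own vocab.txt.

```matlab
% Sketch: write a tiny vocab file in "index word" format and read it back
% like getVocabList (fscanf '%d' then '%s'), stopping at end of file
% instead of relying on a fixed word count.
% ASSUMPTION: the file name and the three words are made up for the demo.
vocabFile = fullfile(tempdir, 'vocab_roundtrip_demo.txt');
fid = fopen(vocabFile, 'wt');
demoWords = {'click', 'money', 'win'};
for i = 1:numel(demoWords)
    fprintf(fid, '%d %s\n', i, demoWords{i});
end
fclose(fid);

fid = fopen(vocabFile, 'r');
vocabList = {};
while true
    idx = fscanf(fid, '%d', 1);       % index column (equals the position)
    if isempty(idx)
        break;                        % no more entries
    end
    vocabList{end+1, 1} = fscanf(fid, '%s', 1); %#ok<AGROW>
end
fclose(fid);
fprintf('read %d words\n', numel(vocabList));
```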

3. Training the model

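```matlab
clear;
close all;
clc;

fprintf('\nPreprocessing emails\n');

% List the sample emails, skipping '.', '..' and any other directories
folder = './samples/';
list = dir(folder);
list = list(~[list.isdir]);
fileNum = numel(list);
fprintf('fileNum: %d\n', fileNum);

vocabList = getVocabList();
n = length(vocabList);
X = zeros(fileNum, n);

% Labels: assumes dir() lists the 20 non-spam emails first, then the 20 spam ones
y = [zeros(20, 1); ones(20, 1)];
assert(numel(y) == fileNum, 'y must have exactly one label per sample file');

for i = 1:fileNum
    filename = [folder list(i).name];
    email_contents = readFile(filename);
    fprintf('%s\n', filename);

    processed_contents = strtrim(myProcessEmail(email_contents));
    split_contents = regexp(processed_contents, '\s', 'split');

    % X(i, z) = 1 if dictionary word z occurs in email i
    for j = 1:length(split_contents)
        for z = 1:n
            if strcmp(split_contents{j}, vocabList{z})
                X(i, z) = 1;
                break;
            end
        end
    end
end

fprintf('\n---------X---------\n');
disp(X);
pause;

fprintf('\nTraining Linear SVM\n');
C = 0.1;
model = svmTrain(X, y, C, @linearKernel);
p = svmPredict(model, X);
fprintf('Training Accuracy: %f\n', mean(double(p == y)) * 100);
```

Training accuracy: 100%. Easy to separate, apparently. (Keep in mind this is measured on the very same emails the model was trained on.)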
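The training script fills `X` with a double loop that compares every token against every dictionary word. The same binary features (`X(i,z) = 1` if dictionary word `z` occurs in email `i`) can be built with `ismember`. In this sketch `vocabList` and the tokenized `emails` are toy stand-ins for getVocabList() and split_contents.

```matlab
% Sketch: binary bag-of-words features with ismember instead of a double loop.
% ASSUMPTION: 'vocabList' mimics getVocabList() output (n x 1 cell) and
% 'emails' mimics the split_contents of each processed email.
vocabList = {'click'; 'money'; 'to'; 'win'};
emails = {{'win', 'money', 'now'}, {'meeting', 'at', 'number'}, {'click', 'to', 'win', 'win'}};

n = numel(vocabList);
X = zeros(numel(emails), n);
for i = 1:numel(emails)
    % ismember(A, B) is true for each entry of A found anywhere in B,
    % so this marks every dictionary word present in email i.
    X(i, ismember(vocabList, emails{i})) = 1;
end
disp(X);
```

Repeated words (like the two `win`s in the third email) still give a single 1, matching the 0/1 features of the original loop.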

Buuut when I predicted on hard_ham, the accuracy was only 5%. Ouch, right?
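Since hard_ham contains only non-spam emails (every y = 0), accuracy on it is just the fraction predicted as 0, so 5% accuracy means about 95% of those legitimate emails were flagged as spam, i.e. false positives. A sketch that splits accuracy into the four possible outcomes makes this visible; the `y` and `p` vectors are made up, and in the post `p` would come from `svmPredict`.

```matlab
% Sketch: split accuracy into the four outcomes (spam = 1, non-spam = 0).
% ASSUMPTION: y and p are made-up vectors; in the post p would come from
% svmPredict(model, Xtest) and y from the true labels.
y = [0; 0; 0; 0; 1; 1];
p = [1; 1; 1; 0; 1; 0];

TP = sum(p == 1 & y == 1);   % spam caught
FP = sum(p == 1 & y == 0);   % ham wrongly flagged as spam
TN = sum(p == 0 & y == 0);   % ham correctly let through
FN = sum(p == 0 & y == 1);   % spam that slipped through

accuracy = mean(double(p == y)) * 100;
fprintf('TP=%d FP=%d TN=%d FN=%d accuracy=%.1f%%\n', TP, FP, TN, FN, accuracy);
% On an all-ham test set (every y == 0), TP = FN = 0, so accuracy is
% TN / (TN + FP), times 100 for a percentage.
```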

The dictionary has too few words. Way too few.

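As for these hard-to-classify emails: do the people receiving them owe someone money or what? Does legitimate mail really have to look this much like spam?!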