Configuring Hive on Spark on CDH 6.3.2

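Environment: a Dell XPS 15 (32 GB RAM, 1 TB SSD, an external Samsung 1 TB portable SSD on a Thunderbolt 3 port, and a 4 TB WD Elements external hard drive) running Windows 10, with three CentOS 7 virtual machines used to test a CDH 6.3.2 cluster (the highest version of the free community edition) as well as self-compiled builds of Phoenix 5.1.0, Flink 1.10.0, Elasticsearch 6.6.0 and other sources.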

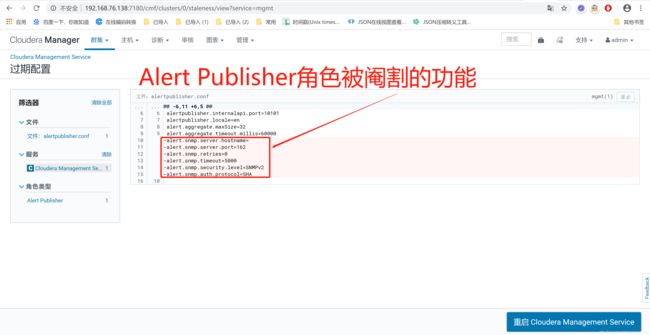

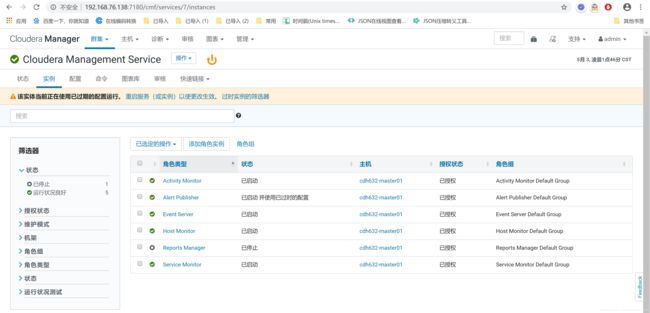

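First, once the trial of the enterprise features expired on this cluster, the Reports Manager role could no longer be started (see Figure 1) and part of the Alert Publisher role's functionality was disabled (see Figure 2).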

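1.1. Add the services needed for Hive on Spark. Because CDH roles on laptop-hosted virtual machines crash easily, HDFS is configured in HA (high availability) mode.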

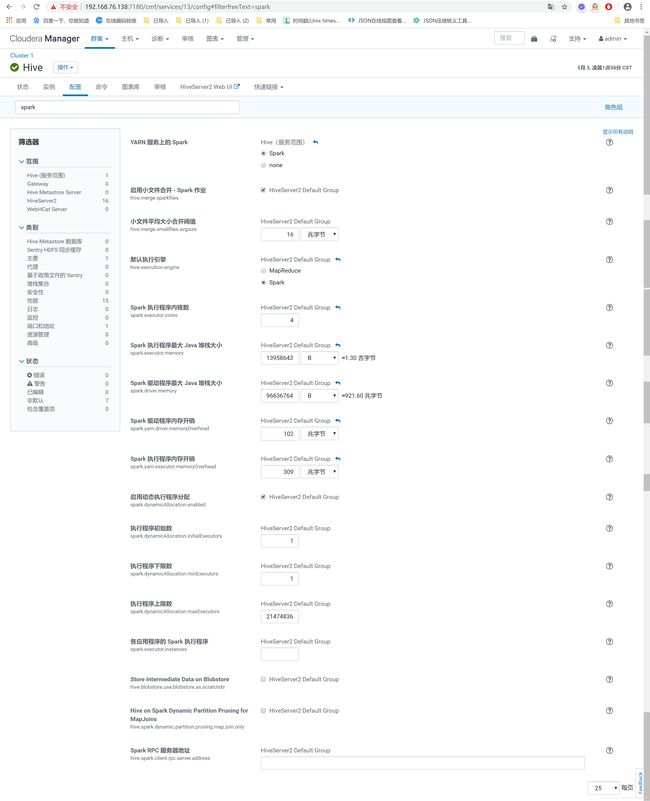

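1.2. On the Hive service's "Configuration" page, search for spark (see the figure below) and tick the two configuration items. The parameters marked with a small blue revert button in the figure are my final settings after successful debugging. (Note: if the memory settings are left at their defaults, the first run of hive fails with a variety of errors.)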

1.3. Ticking spark in the figure above prompts you to configure Spark on YARN; confirm this on the Spark service's "Configuration" page.

1.4. On page 10 of the YARN service's "Configuration" page, change the default resource values to avoid the errors listed below.

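Of these, yarn.nodemanager.resource.memory-mb and yarn.scheduler.maximum-allocation-mb must be changed (from the default of 1 to 2). yarn.nodemanager.resource.cpu-vcores and yarn.scheduler.maximum-allocation-vcores are raised from the default of 2 to 4 to resolve the following error shown in the YARN application list.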
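As a rough illustration of why a memory ceiling of 1 is too small, the sketch below compares a Spark executor's container request with the YARN limit. It assumes the two memory settings above are expressed in GiB, uses Spark's stock defaults (1 GiB of executor memory plus an overhead of max(384 MiB, 10%)), and ignores YARN's rounding up to the minimum allocation. The function names are hypothetical, and the values actually used on this cluster are the ones in the Hive configuration screenshot.

```python
# Sketch: does a Spark executor container fit under the YARN limit?
# Assumptions (not from the original post): values in MiB, Spark's stock
# defaults for executor memory and overhead, no YARN rounding.

MIN_OVERHEAD_MB = 384      # Spark's minimum memory overhead
OVERHEAD_FRACTION = 0.10   # Spark's default overhead factor


def container_request_mb(executor_memory_mb: int) -> int:
    """Memory requested from YARN: executor heap plus off-heap overhead."""
    overhead = max(MIN_OVERHEAD_MB, int(executor_memory_mb * OVERHEAD_FRACTION))
    return executor_memory_mb + overhead


def fits(executor_memory_mb: int, max_allocation_mb: int) -> bool:
    """True if the request stays within yarn.scheduler.maximum-allocation-mb."""
    return container_request_mb(executor_memory_mb) <= max_allocation_mb


if __name__ == "__main__":
    # Compare a 1 GiB executor against a 1 GiB and a 2 GiB ceiling.
    for limit_mb in (1024, 2048):
        print(limit_mb, container_request_mb(1024), fits(1024, limit_mb))
```

Under these assumptions a 1 GiB executor does not fit under a 1 GiB ceiling but does fit under 2 GiB, which matches the need to raise both memory settings.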

1.5. Start running hive:

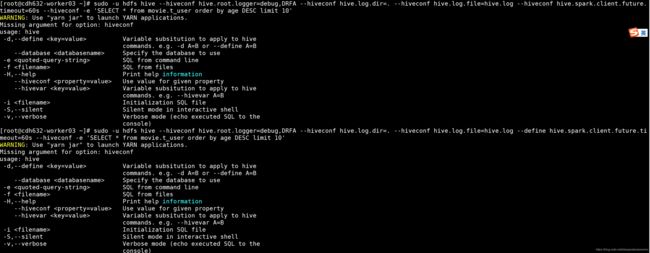

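1.5.1. Running hive directly to enter its client has a drawback: when the Spark client fails to initialize at the very start (never mind submitting to YARN), no detailed log is visible (see the figure below).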

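During debugging this could in principle be set from within the Hive command line, but no log was ever printed (see the figure). The permission problem and the resulting errors were: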

INFO [spark-submit-stderr-redir-6f70c261-ed5e-4af4-a7ea-7b11084e7547 main] client.SparkClientImpl: Exception in thread "main" org.apache.hadoop.security.AccessControlException: Permission denied: user=root, access=WRITE, inode="/user":hdfs:supergroup:drwxr-xr-x

------------ (omitted) ------------------------

ERROR [6f70c261-ed5e-4af4-a7ea-7b11084e7547 main] client.SparkClientImpl: Error while waiting for Remote Spark Driver to connect back to HiveServer2.

java.util.concurrent.ExecutionException: java.lang.RuntimeException: spark-submit process failed with exit code 1 and error ?

---------------------

ERROR [6f70c261-ed5e-4af4-a7ea-7b11084e7547 main] spark.SparkTask: Failed to execute Spark task "Stage-1"

org.apache.hadoop.hive.ql.metadata.HiveException: Failed to create Spark client for Spark session 6f70c261-ed5e-4af4-a7ea-7b11084e7547_0 : java.lang.RuntimeException: spark-submit process failed with exit code 1 and error ?

--------

Caused by: java.lang.RuntimeException: Error while waiting for Remote Spark Driver to connect back to HiveServer2.

So I changed the hive launch command to
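The exact command survives only in the screenshot. As an assumption, one common way to get Hive CLI logs onto the console is the `--hiveconf hive.root.logger=<level>,console` option; the sketch below merely assembles such a command line (the helper name `build_hive_command` is hypothetical and the log level is illustrative).

```python
# Sketch: assemble a Hive CLI invocation that logs to the console.
# Assumption: the screenshot's real command is unknown; this only shows the
# general --hiveconf pattern with an illustrative logger setting.
import shlex


def build_hive_command(hiveconf: dict, query: str = "") -> list:
    """Return argv for `hive`, with one --hiveconf flag per setting."""
    argv = ["hive"]
    for key, value in hiveconf.items():
        argv += ["--hiveconf", f"{key}={value}"]
    if query:
        argv += ["-e", query]  # -e runs a single HiveQL string and exits
    return argv


if __name__ == "__main__":
    cmd = build_hive_command({"hive.root.logger": "INFO,console"})
    print(shlex.join(cmd))  # shell-quoted, copy-paste form of the command
```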

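The "no result" mentioned in the figure means that hive.log contained only a single line about hive-site.xml and nothing else. The console, however, printed plenty of useful log output.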

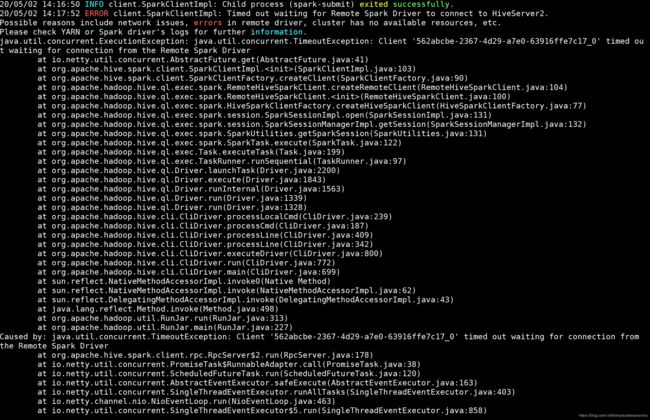

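For a detailed explanation of this "timeout" error, see the link. After some experimenting, raising the value from the default of 60s to 200s resolved it: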

set hive.spark.client.future.timeout=200;

This resolves the following error:

ERROR client.SparkClientImpl: Timed out waiting for Remote Spark Driver to connect to HiveServer2.

Possible reasons include network issues, errors in remote driver, cluster has no available resources, etc.

Please check YARN or Spark driver's logs for further information.

java.util.concurrent.ExecutionException: java.util.concurrent.TimeoutException: Client 'b1fb6956-93d2-438a-98c2-a9db64024ac4_1' timed out waiting for connection from the Remote Spark Driver

at io.netty.util.concurrent.AbstractFuture.get(AbstractFuture.java:41)

at org.apache.hive.spark.client.SparkClientImpl.<init>(SparkClientImpl.java:103)

at org.apache.hive.spark.client.SparkClientFactory.createClient(SparkClientFactory.java:90)

at org.apache.hadoop.hive.ql.exec.spark.RemoteHiveSparkClient.createRemoteClient(RemoteHiveSparkClient.java:104)

at org.apache.hadoop.hive.ql.exec.spark.RemoteHiveSparkClient.<init>(RemoteHiveSparkClient.java:100)

at org.apache.hadoop.hive.ql.exec.spark.HiveSparkClientFactory.createHiveSparkClient(HiveSparkClientFactory.java:77)

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.open(SparkSessionImpl.java:131)

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionManagerImpl.getSession(SparkSessionManagerImpl.java:132)

at org.apache.hadoop.hive.ql.exec.spark.SparkUtilities.getSparkSession(SparkUtilities.java:131)

at org.apache.hadoop.hive.ql.exec.spark.SparkTask.execute(SparkTask.java:122)

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:199)

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:97)

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2200)

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1843)

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1563)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1339)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1328)

at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:239)

at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:187)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:409)

at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:836)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:772)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:699)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:313)

at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

Caused by: java.util.concurrent.TimeoutException: Client 'b1fb6956-93d2-438a-98c2-a9db64024ac4_1' timed out waiting for connection from the Remote Spark Driver

at org.apache.hive.spark.client.rpc.RpcServer$2.run(RpcServer.java:178)

at io.netty.util.concurrent.PromiseTask$RunnableAdapter.call(PromiseTask.java:38)

at io.netty.util.concurrent.ScheduledFutureTask.run(ScheduledFutureTask.java:120)

at io.netty.util.concurrent.AbstractEventExecutor.safeExecute(AbstractEventExecutor.java:163)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:403)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:463)

at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

at java.lang.Thread.run(Thread.java:748)

20/05/02 23:37:26 ERROR spark.SparkTask: Failed to execute Spark task "Stage-1"

org.apache.hadoop.hive.ql.metadata.HiveException: Failed to create Spark client for Spark session b1fb6956-93d2-438a-98c2-a9db64024ac4_1: java.util.concurrent.TimeoutException: Client 'b1fb6956-93d2-438a-98c2-a9db64024ac4_1' timed out waiting for connection from the Remote Spark Driver

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.getHiveException(SparkSessionImpl.java:286)

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.open(SparkSessionImpl.java:135)

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionManagerImpl.getSession(SparkSessionManagerImpl.java:132)

at org.apache.hadoop.hive.ql.exec.spark.SparkUtilities.getSparkSession(SparkUtilities.java:131)

at org.apache.hadoop.hive.ql.exec.spark.SparkTask.execute(SparkTask.java:122)

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:199)

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:97)

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2200)

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1843)

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1563)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1339)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1328)

at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:239)

at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:187)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:409)

at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:836)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:772)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:699)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:313)

at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

Caused by: java.lang.RuntimeException: Timed out waiting for Remote Spark Driver to connect to HiveServer2.

Possible reasons include network issues, errors in remote driver, cluster has no available resources, etc.

Please check YARN or Spark driver's logs for further information.

at org.apache.hive.spark.client.SparkClientImpl.<init>(SparkClientImpl.java:124)

at org.apache.hive.spark.client.SparkClientFactory.createClient(SparkClientFactory.java:90)

at org.apache.hadoop.hive.ql.exec.spark.RemoteHiveSparkClient.createRemoteClient(RemoteHiveSparkClient.java:104)

at org.apache.hadoop.hive.ql.exec.spark.RemoteHiveSparkClient.<init>(RemoteHiveSparkClient.java:100)

at org.apache.hadoop.hive.ql.exec.spark.HiveSparkClientFactory.createHiveSparkClient(HiveSparkClientFactory.java:77)

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.open(SparkSessionImpl.java:131)

... 22 more

Caused by: java.util.concurrent.ExecutionException: java.util.concurrent.TimeoutException: Client 'b1fb6956-93d2-438a-98c2-a9db64024ac4_1' timed out waiting for connection from the Remote Spark Driver

at io.netty.util.concurrent.AbstractFuture.get(AbstractFuture.java:41)

at org.apache.hive.spark.client.SparkClientImpl.<init>(SparkClientImpl.java:103)

... 27 more

Caused by: java.util.concurrent.TimeoutException: Client 'b1fb6956-93d2-438a-98c2-a9db64024ac4_1' timed out waiting for connection from the Remote Spark Driver

at org.apache.hive.spark.client.rpc.RpcServer$2.run(RpcServer.java:178)

at io.netty.util.concurrent.PromiseTask$RunnableAdapter.call(PromiseTask.java:38)

at io.netty.util.concurrent.ScheduledFutureTask.run(ScheduledFutureTask.java:120)

at io.netty.util.concurrent.AbstractEventExecutor.safeExecute(AbstractEventExecutor.java:163)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:403)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:463)

at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)

at java.lang.Thread.run(Thread.java:748)

FAILED: Execution Error, return code 30041 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. Failed to create Spark client for Spark session b1fb6956-93d2-438a-98c2-a9db64024ac4_1: java.util.concurrent.TimeoutException: Client 'b1fb6956-93d2-438a-98c2-a9db64024ac4_1' timed out waiting for connection from the Remote Spark Driver

20/05/02 23:37:26 ERROR ql.Driver: FAILED: Execution Error, return code 30041 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. Failed to create Spark client for Spark session b1fb6956-93d2-438a-98c2-a9db64024ac4_1: java.util.concurrent.TimeoutException: Client 'b1fb6956-93d2-438a-98c2-a9db64024ac4_1' timed out waiting for connection from the Remote Spark Driver

However, this parameter cannot be set on the same command line as the SQL (see the figure below).

The final solution is:

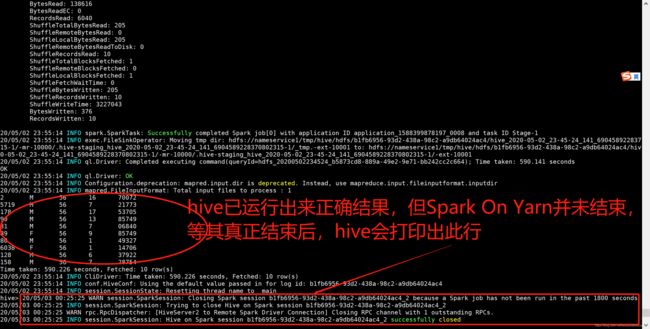

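Notes: 1. Of the three parameters described in the linked "timeout" article, only the one in the figure above can be set dynamically in an HQL statement. This is still to be verified, and the result of the verification can be read off the hive console (see the figure below).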

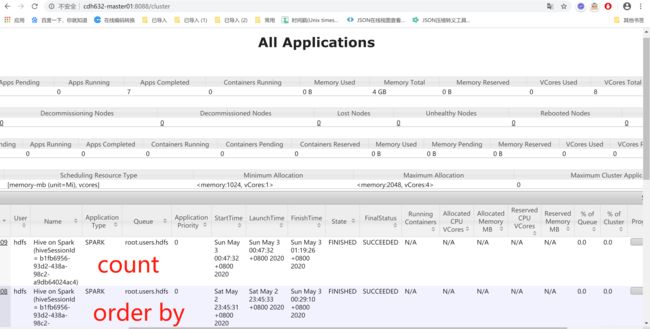

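2. After the hive client returns the correct result, the Spark on YARN application has not actually finished.

In the figure above, the order by query in hive has already returned its result, but in the figure below YARN still shows the application as running.

The same holds for count.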
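One way to confirm this outside the web UI is the YARN ResourceManager REST API, which lists applications by state. The sketch below parses such a response; the host name `rm-host`, the default port 8088 and the helper names are assumptions, and the sample payload is made up for illustration. The observation is consistent with Hive on Spark keeping its Spark application alive for the lifetime of the Hive session rather than per query, though that was not verified on this cluster.

```python
# Sketch: list Spark applications that YARN still reports as RUNNING.
# Assumption: the ResourceManager is reachable at rm-host:8088 (hypothetical).
import json
from urllib.request import urlopen

RM_APPS_URL = "http://rm-host:8088/ws/v1/cluster/apps?states=RUNNING"


def running_spark_apps(payload: dict) -> list:
    """Extract (id, name) of SPARK apps from a /ws/v1/cluster/apps response."""
    # With no matching applications the "apps" field can be null.
    apps = (payload.get("apps") or {}).get("app") or []
    return [(a["id"], a["name"]) for a in apps
            if a.get("applicationType") == "SPARK"]


def fetch_running_spark_apps(url: str = RM_APPS_URL) -> list:
    """Query the ResourceManager; needs network access to the cluster."""
    with urlopen(url) as resp:
        return running_spark_apps(json.load(resp))


if __name__ == "__main__":
    # Made-up sample response, for illustration only.
    sample = {"apps": {"app": [
        {"id": "application_0000000000000_0001", "name": "Hive on Spark",
         "applicationType": "SPARK", "state": "RUNNING"}]}}
    print(running_spark_apps(sample))
```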

References:

Hive on Spark (Spark on YARN) on CDH

[hive on spark Error] return code 30041 from org.apache.hadoop.hive.ql.exec.spark.SparkTask.

Still to try:

Troubleshooting third-party jar replacements that do not take effect in Hive

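Special thanks: https://itdiandi.net/view/1431, although adding the configuration items from that article to the configuration file of the Hive component bundled with CDH 6.3.2 caused errors when running HQL statements.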