VINS-FUSION 源码 双目 单线程 按执行顺序阅读

VINS-FUSION 源码 双目 单线程 按执行顺序阅读

Keywords :

VINS-FUSION vins 源码解读 源码梳理 vins数据结构 vinsfusion vins双目

双目vins 双目vinsfusion 双目vins_fusion vins fusion结构梳理

vins_fusion结构梳理 vins源码 vinsfusion源码 vins纯双目 vinsfusion纯双目 vins-fusion广角双目 vins-fusion代码解读 vins-fusion代码梳理

- 概述

本文依照代码(双目不含imu,只开单线程)执行顺序写成,内容为主要代码加注释的方式,个别会有文字说明,VINS-FUSION开源代码地址为:

https://github.com/HKUST-Aerial-Robotics/VINS-Fusion

错的地方欢迎指正

转载注明来源 - by.十里桃园

吐槽下小觅,只会在知乎抖机灵卖产品,真正的干货基本没,抖机灵抖得多了就很烦了。

再吐槽下vins。vslam的前端在光流法,以至于此算法的天花板颇低。

有两个先天弱点:1,特征点无描述,导致无法用某一帧定位在全图的位置 2,用LK光流跟踪,转弯废

-

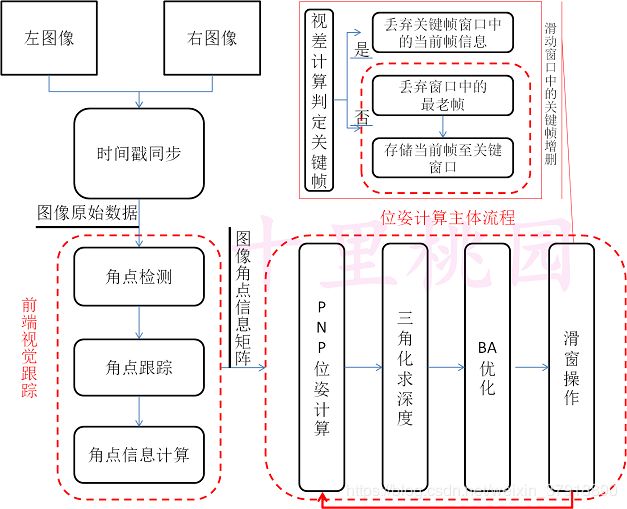

程序流程图(不含回环检测)

程序位姿计算主要分为两个模块,暂不包含回环检测模块:

1,前端视觉跟踪及角点信息计算

2,位姿计算模块

- 程序入口:

程序入口:VINS-Fusion/vins-estimator/src/rosNodeTest.cpp

- 3.1 estimator 类初始化

rosNodeTest.cpp:

Estimator estimator;

Estimator.cpp:

Estimator::Estimator(): f_manager{Rs}

{

ROS_INFO("init begins");

initThreadFlag = false; // 是否初始化线程 false则表示还未初始化线程数

clearState();//清除了状态,为诸多变量赋初值

}

- 3.2数据队列queue初始化

rosNodeTest.cpp:

queue

feature_buf;//存储feature

queue

img0_buf;//存储image0 左侧图像

queue

img1_buf;//存储image1 左侧图像

- 3.3 main函数

rosNodeTest.cpp:

readParameters(config_file);

estimator.setParameter();//读取并配置文件参数

ros::Subscriber

sub_feature = n.subscribe("/feature_tracker/feature", 2000, feature_callback);//源数据为特征点

ros::Subscriber

sub_img0 = n.subscribe(IMAGE0_TOPIC, 100, img0_callback);//左侧图像

ros::Subscriber

sub_img1 = n.subscribe(IMAGE1_TOPIC, 100, img1_callback);//右侧图像

ros::Subscriber sub_restart = n.subscribe("/vins_restart", 100,

restart_callback); //restart

void restart_callback(const std_msgs::BoolConstPtr &restart_msg)

{

if (restart_msg->data

== true)

{

ROS_WARN("restart the estimator!");

estimator.clearState();//清除状态 赋初始值

estimator.setParameter();//根据配置文件赋值

}

return;

}

std::thread sync_thread{sync_process};//入口

main函数为程序入口程序,值得注意的是sync_process为程序入口。

- 接受源图像数据及处理接口

接受源图像数据定义在synv_process函数中,并送入estimator进行位姿估计和后续运算。其接受图像代码块如下:

rosNodeTest.cpp:

double time0 =

img0_buf.front()->header.stamp.toSec();

double time1 =

img1_buf.front()->header.stamp.toSec();

// 0.003s sync tolerance 双摄像头同步 阈值0.003

if(time0 < time1 - 0.003)

{

img0_buf.pop();

printf("throw img0\n");

}

else if(time0 > time1 + 0.003)

{

img1_buf.pop();

printf("throw img1\n");

}//双摄像头同步 购买的摄像头已经做了同步 ros消息时间戳完全相同

else

{

time =

img0_buf.front()->header.stamp.toSec();

header =

img0_buf.front()->header;

image0 =

getImageFromMsg(img0_buf.front());//接收到的左图像 1通道

img0_buf.pop();

image1 =

getImageFromMsg(img1_buf.front());//接收到的右图像 1通道

img1_buf.pop();

//printf("find img0 and img1\n");

}

在接受到源图像之后,送入estimator:

rosNodeTest.cpp:

if(!image0.empty())

estimator.inputImage(time, image0,

image1);//双目图像送入estimator

- 角点检测和视觉跟踪

- 5.1 外层调用接口

VINS-FUISON调用的OPENCV的角点检测和光流法跟踪函数,其外层调用接口如下:

estimator.cpp:

Estimator::inputImage()

map>>> featureFrame;//map 里面的pair为 >类型 存储featureFrame map

if(_img1.empty())

featureFrame =

featureTracker.trackImage(t, _img);//角点检测和跟踪 单目

else

featureFrame = featureTracker.trackImage(t, _img, _img1);//角点检测和跟踪 双目

if (SHOW_TRACK)//是否发布TrackImage话题 RVIZ里面的 track_image窗口

{

cv::Mat imgTrack =

featureTracker.getTrackImage();

//featureTracker.showUndistortion();//显示去畸变的图像,dataset可以正常显示 ,摄像头有黑白间隔异常

pubTrackImage(imgTrack, t);

}

- 5.2 角点检测

VINS-FUSION采用的为Shi-Tomasi角点。

feature_tracker.cpp:

FeatureTracker::setMask ()

cv::circle(mask, it.second.first, MIN_DIST,

0, -1);//把特征点周围MIN_DIST的点设置为0

feature_tracker.cpp:

FeatureTracker::trackImage()

cv::goodFeaturesToTrack(cur_img,

n_pts, MAX_CNT - cur_pts.size(), 0.01, MIN_DIST, mask);

//cur_img

当前输入的单通道图像;

//n_pts 输出的被提取的角点坐标;

//MAX_CNT为设置的最大角点个数程序中会按照角点强度降序排序,超过MAX_CNT的角点将被舍弃;

//0.01为角点强度的阈值系数,若检测出所有角点中的最大强度是max,那么强度值小于max*qualityLevel的角点被舍弃

//MIN_DIST为角点与其邻域强角点之间的欧式距离,与邻域强角点距离小于minDistance的角点将被舍弃;

//mask : 感兴趣区域,

- 5.3 光流法跟踪

VINS-FUSION采用基于角点特征的金字塔LK光流跟踪算法。VINS所定义的跟踪包含两种模式:前后帧跟踪、左右帧间跟踪

前后帧跟踪,以前帧为基础,跟踪当前帧:

feature_tracker.cpp:

FeatureTracker::trackImage()

cv::calcOpticalFlowPyrLK(prev_img,

cur_img, prev_pts, cur_pts, status, err, cv::Size(21, 21), 3);

//输入:prev_img 第一张图像

cur_img: 当前图像

prev_pts第一张图像中的点

//输出

nextPts 当前图像的点

//金字塔三层

if(FLOW_BACK)// 反向的光流跟踪,通过上一帧跟踪到的点,反向跟踪回去,差异大的点剔除。 默认打开->bot.yaml

{

vector reverse_status;

vector

reverse_pts = prev_pts;

cv::calcOpticalFlowPyrLK(cur_img,

prev_img, cur_pts, reverse_pts, reverse_status, err, cv::Size(21, 21), 1,

cv::TermCriteria(cv::TermCriteria::COUNT+cv::TermCriteria::EPS,

30, 0.01), cv::OPTFLOW_USE_INITIAL_FLOW);

//反向跟踪

//cv::calcOpticalFlowPyrLK(cur_img, prev_img,

cur_pts, reverse_pts, reverse_status, err, cv::Size(21, 21), 3);

for(size_t i = 0; i

< status.size(); i++)

{

if(status[i] && reverse_status[i] && distance(prev_pts[i],

reverse_pts[i]) <= 0.5)

//正向和反向的跟踪状态均为1 且反向跟踪的点跟当前点小于0.5个像素

{

status[i] = 1;

//重置跟踪状态

}

else

status[i] = 0;

//重置跟踪状态

}

}

左右帧跟踪,以当前左帧为基础,跟踪当前右帧:

feature_tracker.cpp:

FeatureTracker::trackImage()

cv::calcOpticalFlowPyrLK(cur_img,

rightImg, cur_pts, cur_right_pts, status, err, cv::Size(21, 21), 3); //以左帧为基准跟踪右帧,3层金字塔

if(FLOW_BACK) //反向check跟踪

{

cv::calcOpticalFlowPyrLK(rightImg, cur_img,

cur_right_pts, reverseLeftPts, statusRightLeft, err, cv::Size(21, 21), 3);

for(size_t i = 0; i < status.size(); i++)

{

if(status[i] && statusRightLeft[i]

&& inBorder(cur_right_pts[i]) && distance(cur_pts[i],

reverseLeftPts[i]) <= 0.5)

//正向和反向的跟踪状态均为1 没有越界 且反向跟踪的点跟当前点小于0.5个像素

status[i] = 1;

else

status[i] = 0;

}

}

- 5.4角点坐标畸变纠正

VINS-FUSION采用对角点进行畸变纠正的方式来消除相机畸变的影响。

角点跟踪结束后,对跟踪角点结果进行畸变纠正。入口为:

feature_tracker.cpp:

FeatureTracker::trackImage()

cur_un_pts

= undistortedPts(cur_pts, m_camera[0]);

//cur_un_pts 当前左侧图像角点纠正后的坐标,vector类型;cur_pts待纠正的坐标每副图像的坐标存在一个vector里; m_camera[0]相机编号,0->left,1->right

cur_un_right_pts

= undistortedPts(cur_right_pts, m_camera[1]);

//cur_un_right_pts 当前右侧图像角点纠正后的坐标,vector类型;cur_pts待纠正的坐标每副图像的坐标存在一个vector里; m_camera[1]相机编号,0->left,1->right

feature_tracker.cpp:

FeatureTracker:: undistortedPts ()

vector

un_pts;//纠正结果

for (unsigned int i = 0; i <

pts.size(); i++)//遍历当前图像的角点

{

Eigen::Vector2d a(pts[i].x,

pts[i].y);

Eigen::Vector3d b;

cam->liftProjective(a, b); // 调用图像像素投影函数 将图像特征点的坐标映射到空间坐标

un_pts.push_back(cv::Point2f(b.x() /

b.z(), b.y() / b.z()));

//投影点3D -> 非畸变2D

b(x,y,z)为P点在相机归一化平面的投影 /z为转二维齐次坐标

}

VINS_FUSION采用循环消畸变的方式,先将像素在图像中的坐标转换至归一化平面再进行后续操作。

CataCamera.cc:

CataCamera::liftProjective ()

mx_d =

m_inv_K11 * p(0) + m_inv_K13;// K11 - > fx K13->cx p为像素在RAW图像中的坐标点

my_d =

m_inv_K22 * p(1) + m_inv_K23;//K2 ->fy K23->cy

//投影到归一化平面

int n = 8;//循环消除畸变步数

Eigen::Vector2d

d_u;

distortion(Eigen::Vector2d(mx_d,

my_d), d_u);//分布消除畸变

//

Approximate value 近似值

mx_u =

mx_d - d_u(0);

my_u =

my_d - d_u(1);

for (int i = 1; i < n; ++i)//递归循环

{

distortion(Eigen::Vector2d(mx_u,

my_u), d_u);

mx_u = mx_d - d_u(0);//每递归一次的差值

my_u = my_d - d_u(1);

}

CataCamera.cc:

CataCamera::distortion ()

CataCamera::distortion(const Eigen::Vector2d& p_u,

Eigen::Vector2d& d_u) const //分布畸变纠正函数

{

double k1 =

mParameters.k1();//径向畸变k1

double k2 =

mParameters.k2();//径向畸变k2

double p1 = mParameters.p1();//径向畸变p1

double p2 =

mParameters.p2();//径向畸变p2

double mx2_u, my2_u,

mxy_u, rho2_u, rad_dist_u;

mx2_u = p_u(0) * p_u(0);

my2_u = p_u(1) * p_u(1);

mxy_u = p_u(0) * p_u(1);

rho2_u = mx2_u + my2_u;//距原点距离的平方

rad_dist_u = k1 * rho2_u + k2 * rho2_u *

rho2_u;//径向畸变因子

d_u << p_u(0) * rad_dist_u + 2.0 * p1 * mxy_u + p2 *

(rho2_u + 2.0 * mx2_u),//切向畸变纠正

p_u(1) * rad_dist_u

+ 2.0 * p2 * mxy_u + p1 * (rho2_u + 2.0 * my2_u);//切向畸变纠正

}

- 5.5跟踪角点的速度计算

feature_tracker.cpp:

FeatureTracker::trackImage()

pts_velocity

= ptsVelocity(ids, cur_un_pts, cur_un_pts_map, prev_un_pts_map);

//计算当前点的运动速度 左侧相机

right_pts_velocity

= ptsVelocity(ids_right, cur_un_right_pts, cur_un_right_pts_map,

prev_un_right_pts_map);

//计算当前点的运动速度 右侧相机

feature_tracker.cpp:

FeatureTracker::ptsVelocity()

cur_id_pts.clear();

for (unsigned int i = 0; i <

ids.size(); i++)

{

cur_id_pts.insert(make_pair(ids[i],

pts[i]));//制作 ids和pts的对应映射map

}

double dt = cur_time - prev_time;

//

v=s/dt

//dt已求出

std::map::iterator it;

it =

prev_id_pts.find(ids[i]);

if (it != prev_id_pts.end())//遍历所有角点

{

double v_x = (pts[i].x - it->second.x) / dt;//Vdx

double v_y = (pts[i].y - it->second.y) / dt;//Vdy

pts_velocity.push_back(cv::Point2f(v_x,

v_y));//记录每个角点的速度 return给调用

}

- 5.6角点帧的数据结构

feature_tracker.cpp:

FeatureTracker::trackImage()

map>>> featureFrame;//角点数据帧

for (size_t i = 0; i < ids.size(); i++)//遍历所有角点

{

int feature_id = ids[i];//当前角点 ID

double x, y ,z;

x = cur_un_pts[i].x;//畸变纠正后的当前点x坐标

y = cur_un_pts[i].y;//畸变纠正后的当前点y坐标

z = 1;//齐次坐标

double p_u, p_v;

p_u = cur_pts[i].x;//像素平面当前x坐标

p_v = cur_pts[i].y;//像素平面当前y坐标

int camera_id = 0;

double velocity_x, velocity_y;

velocity_x = pts_velocity[i].x; //当前方向x方向速度

velocity_y = pts_velocity[i].y; //当前方向y方向速度

Eigen::Matrix xyz_uv_velocity;

xyz_uv_velocity << x, y, z,

p_u, p_v, velocity_x, velocity_y;//

//角点帧数据格式:三维齐次坐标*3;二维像素平面坐标*2;x,y方向速度*2;共7个基本数据

featureFrame[feature_id].emplace_back(camera_id, xyz_uv_velocity);//压入当前Vector,函数结尾返回

}

- 位姿计算

在跟踪和角点信息等信息计算完毕后,将依赖所得数据进行SLAM计算。

- [ ]6.1图像处理函数调用

estimator.cpp:

Estimator::inputImage()

else //默认走这里 非多线程

{

mBuf.lock();

featureBuf.push(make_pair(t, featureFrame));//当前feature 送入FeatureBuf

mBuf.unlock();

TicToc processTime;

processMeasurements();//执行运算

printf("process time: %f\n", processTime.toc());//

}

estimator.cpp:

Estimator:: processMeasurements ()

pair >

> > > feature;//读取队列中的feature的容器

feature

= featureBuf.front();//队列中的角点信息读入feature变量

processImage(feature.second, feature.first);//根据接受到的特征执行计算

- 6.2关键帧判定

VINS_FUSION维护了默认大小为10帧的滑动窗口,滑窗中记录了10帧关键帧的双目图像信息。当接受到新图像时,计算平均视差,若太小则不把当前图形作为关键帧加入滑窗。

estimator.cpp:

Estimator:: processImage ()

if

(f_manager.addFeatureCheckParallax(frame_count, image, td))

//frame_count:滑动窗口里的帧数 image:要处理的当前帧 td:图像和IMU的时间差 无IMU置为0

{

marginalization_flag = MARGIN_OLD;//作为关键帧

}

else

{

marginalization_flag = MARGIN_SECOND_NEW;//不作为关键帧

}

//通过计算两帧之间的视差决定是否作为关键帧,添加之前检测到的角点到feature容器中,计算每一个角点被跟踪次数,以及视差

//未完成初始化则先填满关键帧,默认10帧

feature_manager.cpp:

FeatureManager::addFeatureCheckParallax

FeaturePerFrame f_per_fra(id_pts.second[0].second,

td);

//f_per_fra初始化,左侧角点信息赋值

//id_pts.second[0].second 左侧相机的角点信息

//类型为 Eigen::Matrix 三维齐次坐标*3;二维像素平面坐标*2;x,y方向速度*2;

assert(id_pts.second[0].first == 0);

//如果左侧相机角点为0 抛出

if(id_pts.second.size() == 2)

//如果右侧相机角点信息存在

{

f_per_fra.rightObservation(id_pts.second[1].second);

//右侧角点信息赋值至f_per_fra

assert(id_pts.second[1].first == 1);

//右侧角点信息不存在 抛出

}

int feature_id = id_pts.first;//角点ID赋值 id_pts.first为一张图像中的所有角点的序号从强到不强

auto it = find_if(feature.begin(), feature.end(), [feature_id](const FeaturePerId &it)

{

return it.feature_id ==

feature_id;

});

//it 为与当前角点id相同的id 类型为 FeaturePerId

if (it == feature.end())

//如果当前角点list中不存在跟当前角点相同的id 即角点第一次才出现 那么新增

{

feature.push_back(FeaturePerId(feature_id, frame_count));//feature中新建一个FeaturePerId对象

feature.back().feature_per_frame.push_back(f_per_fra);//记录被几帧跟踪到

new_feature_num++;//当前帧的角点数

}

else if (it->feature_id == feature_id)//找到当前角点

{

it->feature_per_frame.push_back(f_per_fra);//记录被几帧跟踪到

last_track_num++;//跟踪到的角点数目

if( it-> feature_per_frame.size() >= 4)//连续被4帧跟踪到

long_track_num++;

}

}

if (frame_count < 2 || last_track_num < 20 ||

long_track_num < 40 || new_feature_num > 0.5 * last_track_num)

//关键帧数小于2 跟踪到角点数目小于20 连续被跟踪小于40帧 当前帧加入角点记录列表的数目大于跟踪到角点数目的一半(当前帧出现新的视野较多)

//以上4条件满足一则插入滑动窗口关键帧

return true;

- 6.3帧间视差计算(VINS有bug改正)

VINS_FUSION在计算视差的时候利用角点缓存等数据计算次新帧,次次新帧之间的误差,从而判定是否将当前帧加入关键帧。

feature_manager.cpp:

FeatureManager::addFeatureCheckParallax ()

for (auto &it_per_id : feature)//遍历特征点

{

// 计算第2最新帧和第3最新帧之间跟踪到的特征点的平均视差

if (it_per_id.start_frame <= frame_count - 2

&&

it_per_id.start_frame + int(it_per_id.feature_per_frame.size()) - 1

>= frame_count - 1)

//跟刚加入的时候对比 两帧以内

//刚加入的那帧 与 连续跟踪数之和再减1

{

// 对于给定id的特征点,计算第2最新帧和第3最新帧之间该特征点的视差(当前帧frame_count是第1最新帧)

parallax_sum +=

compensatedParallax2(it_per_id, frame_count);

parallax_num++;

}

}

if (parallax_num

== 0)

{

return true;

//插入关键帧

}

else

{

ROS_DEBUG("parallax_sum: %lf, parallax_num:

%d", parallax_sum,

parallax_num);

ROS_DEBUG("current parallax: %lf", parallax_sum / parallax_num *

FOCAL_LENGTH);

last_average_parallax = parallax_sum /

parallax_num * FOCAL_LENGTH;

return parallax_sum / parallax_num >=

MIN_PARALLAX;

//补偿后的视差大于 阈值 若小于,当前帧不插入关键帧

// MIN_PARALLAX = MIN_PARALLAX(默认15) / FOCAL_LENGTH;

}

VINS—FUSION做帧间视差补偿计算将调用下述函数

feature_manager.cpp:

FeatureManager::compensatedParallax2 ()

double FeatureManager::compensatedParallax2(const FeaturePerId &it_per_id, int frame_count)

{

//it_per_id : 传入的角点id 类型为FeaturePerId

//frame_count :当前滑窗内的图像帧数

//check the second last frame is keyframe or not

//parallax betwwen seconde last frame and third last frame

const FeaturePerFrame &frame_i = it_per_id.feature_per_frame[frame_count - 2 - it_per_id.start_frame];

const FeaturePerFrame &frame_j = it_per_id.feature_per_frame[frame_count - 1 - it_per_id.start_frame];

double ans = 0;//视差补偿值

Vector3d p_j = frame_j.point;

double u_j = p_j(0);

double v_j = p_j(1);

Vector3d p_i = frame_i.point;

Vector3d p_i_comp;//冗余代码

//int r_i = frame_count - 2;

//int r_j = frame_count - 1;

//p_i_comp = ric[camera_id_j].transpose() * Rs[r_j].transpose() * Rs[r_i]

* ric[camera_id_i] * p_i;

p_i_comp = p_i;//冗余代码

double dep_i = p_i(2);

double u_i = p_i(0) /

dep_i;//像素平面坐标

double v_i = p_i(1) /

dep_i;//像素平面坐标

double du = u_i - u_j,

dv = v_i - v_j;//x差值与y差值 此处应有误,u_j应为 u_j / p_j(2) ??

double dep_i_comp =

p_i_comp(2);//冗余代码

double u_i_comp =

p_i_comp(0) / dep_i_comp;//冗余代码

double v_i_comp =

p_i_comp(1) / dep_i_comp;//冗余代码

double du_comp =

u_i_comp - u_j, dv_comp = v_i_comp - v_j;//冗余代码

ans = max(ans, sqrt(min(du * du + dv * dv,

du_comp * du_comp + dv_comp * dv_comp)));

//上行代码有误

return ans;

}

但该函数有谬误,其修正方式应如下

feature_manager.cpp:

FeatureManager::compensatedParallax2 ()

double FeatureManager::compensatedParallax2(const FeaturePerId &it_per_id, int frame_count)

{

//it_per_id : 传入的角点id 类型为FeaturePerId

//frame_count :当前滑窗内的图像帧数

//check the second last frame is keyframe or not

//parallax betwwen seconde last frame and third last frame

const FeaturePerFrame &frame_i = it_per_id.feature_per_frame[frame_count - 2 - it_per_id.start_frame];

const FeaturePerFrame &frame_j = it_per_id.feature_per_frame[frame_count - 1 - it_per_id.start_frame];

double ans = 0;//视差补偿值

Vector3d p_j = frame_j.point;

double u_j = p_j(0);

double v_j = p_j(1);

Vector3d p_i = frame_i.point;

//Vector3d p_i_comp;//冗余代码

//int r_i = frame_count - 2;

//int r_j = frame_count - 1;

//p_i_comp = ric[camera_id_j].transpose() * Rs[r_j].transpose() * Rs[r_i]

* ric[camera_id_i] * p_i;

// p_i_comp = p_i;//冗余代码

double dep_i = p_i(2);

double u_i = p_i(0) /

dep_i;//像素平面坐标

double v_i = p_i(1) /

dep_i;//像素平面坐标

double dep_j = p_j(2);

double u_j = p_j(0) / dep_j;//像素平面坐标

double v_j = p_j(1) / dep_j;//像素平面坐标

double du = u_i - u_j,

dv = v_i - v_j;//x差值与y差值 此处应有误,u_j应为 u_j / p_j(2) ??

// double dep_i_comp = p_i_comp(2);//冗余代码

// double u_i_comp = p_i_comp(0) / dep_i_comp;//冗余代码

// double v_i_comp = p_i_comp(1) / dep_i_comp;//冗余代码

// double du_comp = u_i_comp - u_j, dv_comp = v_i_comp - v_j;//冗余代码

//ans = max(ans, sqrt(min(du * du + dv * dv, du_comp * du_comp + dv_comp *

dv_comp)));

//上行代码有误

ans = sqrt(du * du + dv * dv);

return ans;

}

- 6.3位姿计算

VINS—FUSION使用PNP方法计算相机位姿,其主体函数如下,主要有4个部分:PNP,三角化,BA优化,滑窗

estimator.cpp:

Estimator:: processImage ()

if(STEREO && !USE_IMU)

{

f_manager.initFramePoseByPnP(frame_count,

Ps, Rs, tic, ric);

//tic[0]左相机T tic[1] 右相机T ric[0]左相机R ric[1]右相机R

f_manager.triangulate(frame_count,

Ps, Rs, tic, ric);//三角化三维坐标

optimization();//非线性最小二乘优化重投影误差

if(frame_count == WINDOW_SIZE)

{

solver_flag = NON_LINEAR; //初始化完毕,程序正常运行

slideWindow();

ROS_INFO("Initialization finish!");

}

}

TicToc t_solve;

if(!USE_IMU)

f_manager.initFramePoseByPnP(frame_count, Ps, Rs, tic, ric);//pnp求位姿

f_manager.triangulate(frame_count, Ps, Rs, tic, ric);//三角化

optimization();//优化

set removeIndex;

outliersRejection(removeIndex);

f_manager.removeOutlier(removeIndex);

if (! MULTIPLE_THREAD)

{

featureTracker.removeOutliers(removeIndex);

predictPtsInNextFrame();

}

ROS_DEBUG("solver costs: %fms", t_solve.toc());

//异常检测与恢复, 检测到异常,系统将切换回初始化阶段

if (failureDetection())

{

ROS_WARN("failure detection!");

failure_occur = 1;

clearState();

setParameter();

ROS_WARN("system reboot!");

return;

}

slideWindow();//滑窗

f_manager.removeFailures();

// prepare output of VINS

key_poses.clear();

for (int i = 0; i <= WINDOW_SIZE; i++)

key_poses.push_back(Ps[i]);

last_R = Rs[WINDOW_SIZE];

last_P = Ps[WINDOW_SIZE];

last_R0 = Rs[0];

last_P0 = Ps[0];

updateLatestStates();

- 6.3.1 PNP求位姿

PNP计算函数提供了opencv cv::solvePnP函数的外层封装和数据准备,在初始化第1帧图像时,因深度信息匮乏先执行三角化求深度。

feature_manager.cpp:

FeatureManager::solvePoseByPnP ()

bool FeatureManager::solvePoseByPnP(Eigen::Matrix3d &R, Eigen::Vector3d &P,

vector &pts2D, vector &pts3D)

{

Eigen::Matrix3d R_initial;

Eigen::Vector3d P_initial;

// w_T_cam ---> cam_T_w

R_initial = R.inverse();//相机到世界 R

P_initial = -(R_initial * P);//相机到世界 T

//printf("pnp size %d \n",(int)pts2D.size() );

if (int(pts2D.size()) < 4)//最小二乘法至少4个角点

{

printf("feature tracking not enough, please

slowly move you device! \n");

return false;

}

cv::Mat r, rvec, t, D, tmp_r;

cv::eigen2cv(R_initial, tmp_r);

cv::Rodrigues(tmp_r, rvec);//罗德里格斯变换 向量变换到矩阵

cv::eigen2cv(P_initial, t);//eigen 转自cv格式

cv::Mat K = (cv::Mat_(3, 3) << 1, 0, 0, 0, 1, 0, 0, 0,

1); //相机内参

bool pnp_succ;

pnp_succ = cv::solvePnP(pts3D, pts2D, K, D, rvec, t,

1);

//pts3D: 世界坐标系下的控制点的坐标

//pts2D: 在图像坐标系下对应的控制点的坐标

//K 相机内参矩阵

//D 畸变矩阵

//rvec 输出的旋转向量。使坐标点从世界坐标系旋转到相机坐标系

//t 输出的平移向量。使坐标点从世界坐标系平移到相机坐标系

//默认使用CV_ITERATIV迭代法

//pnp_succ = solvePnPRansac(pts3D, pts2D, K, D, rvec, t, true, 100, 8.0 /

focalLength, 0.99, inliers);

if(!pnp_succ)

{

printf("pnp failed ! \n");

return false;

}

cv::Rodrigues(rvec, r);//罗德里格斯变换

//cout << "r " << endl << r << endl;

Eigen::MatrixXd R_pnp;

cv::cv2eigen(r, R_pnp);//cv 格式转至 eigen格式 R矩阵

Eigen::MatrixXd T_pnp;

cv::cv2eigen(t, T_pnp);//cv 格式转至 eigen格式 T矩阵

// cam_T_w ---> w_T_cam

R =

R_pnp.transpose();

P = R * (-T_pnp);

return true;

}

- 6.3.2 三角化求深度

在三角化函数中,因本文档跟踪纯双目数据,并不含IMU数据,但下面函数中的IMU信息是仅因需要通过IMU坐标系转换,需要转换到左相机坐标系下的深度。

feature_manager.cpp:

FeatureManager::triangulate ()

int imu_i = it_per_id.start_frame;//IMU不用 但是坐标定义在imu上 通过imu转换位姿

Eigen::Matrix leftPose;

Eigen::Vector3d

t0 = Ps[imu_i] + Rs[imu_i] * tic[0];//利用imu的位姿计算左相机位姿 T

Eigen::Matrix3d

R0 = Rs[imu_i] * ric[0];//利用imu的位姿计算左相机位姿 R

leftPose.leftCols<3>()

= R0.transpose();

leftPose.rightCols<1>()

= -R0.transpose() * t0;

//cout

<< "left pose " << leftPose << endl;

Eigen::Matrix rightPose;

Eigen::Vector3d

t1 = Ps[imu_i] + Rs[imu_i] * tic[1];//利用imu的位姿计算右相机位姿

Eigen::Matrix3d

R1 = Rs[imu_i] * ric[1];//利用imu的位姿计算右相机位姿

rightPose.leftCols<3>()

= R1.transpose();

rightPose.rightCols<1>()

= -R1.transpose() * t1;

//cout << "right pose "

<< rightPose << endl;

Eigen::Vector2d

point0, point1;

Eigen::Vector3d

point3d;

point0 =

it_per_id.feature_per_frame[0].point.head(2);

point1 =

it_per_id.feature_per_frame[0].pointRight.head(2);

//cout

<< "point0 " << point0.transpose() << endl;

//cout

<< "point1 " << point1.transpose() << endl;

triangulatePoint(leftPose,

rightPose, point0, point1, point3d); // svd方法三角化

Eigen::Vector3d

localPoint;

localPoint

= leftPose.leftCols<3>() * point3d + leftPose.rightCols<1>();//左相机坐标系下的坐标

double depth = localPoint.z();//深度信息

if (depth > 0)

it_per_id.estimated_depth = depth;

else

it_per_id.estimated_depth = INIT_DEPTH;//INIT_DEPTH = 5.0

- 6.3.3 BA优化

- 6.3.4 滑窗操作

滑窗操作主要有两种操作

其一,次新帧为关键帧,删除最老帧插入次新帧

其二,次新帧不是关键帧,删除次新帧信息,如角点信息,跟踪信息等

其核心代码如下:

feature_manager.cpp:

FeatureManager::slideWindow ()

void Estimator::slideWindow()

{

TicToc t_margin;

if

(marginalization_flag == MARGIN_OLD)//关键帧更新

{

double t_0 = Headers[0];

back_R0 = Rs[0];

back_P0 = Ps[0];

if (frame_count == WINDOW_SIZE)//frame_count在初始化后恒等于WINDOW_SIZE的默认值10

{

for (int i = 0; i <

WINDOW_SIZE; i++)

{

Headers[i] = Headers[i + 1];//时间戳

Rs[i].swap(Rs[i + 1]);//变换矩阵

Ps[i].swap(Ps[i + 1]);

if(USE_IMU)

{

std::swap(pre_integrations[i], pre_integrations[i + 1]);

dt_buf[i].swap(dt_buf[i +

1]);

linear_acceleration_buf[i].swap(linear_acceleration_buf[i + 1]);

angular_velocity_buf[i].swap(angular_velocity_buf[i

+ 1]);

Vs[i].swap(Vs[i + 1]);

Bas[i].swap(Bas[i + 1]);

Bgs[i].swap(Bgs[i + 1]);

}

}

Headers[WINDOW_SIZE] =

Headers[WINDOW_SIZE - 1];//删除 一帧

Ps[WINDOW_SIZE] = Ps[WINDOW_SIZE

- 1];

Rs[WINDOW_SIZE] = Rs[WINDOW_SIZE

- 1];

if(USE_IMU)

{

Vs[WINDOW_SIZE] =

Vs[WINDOW_SIZE - 1];

Bas[WINDOW_SIZE] =

Bas[WINDOW_SIZE - 1];

Bgs[WINDOW_SIZE] =

Bgs[WINDOW_SIZE - 1];

delete pre_integrations[WINDOW_SIZE];

pre_integrations[WINDOW_SIZE]

= new IntegrationBase{acc_0, gyr_0, Bas[WINDOW_SIZE],

Bgs[WINDOW_SIZE]};

dt_buf[WINDOW_SIZE].clear();

linear_acceleration_buf[WINDOW_SIZE].clear();

angular_velocity_buf[WINDOW_SIZE].clear();

}

//释放ImageFrame中最老帧信息

if (true ||

solver_flag == INITIAL)

{

map::iterator it_0;

it_0 = all_image_frame.find(t_0);

delete it_0->second.pre_integration;

all_image_frame.erase(all_image_frame.begin(), it_0);

}

slideWindowOld();//删除最老帧更新窗口

}

}

else//关键帧不更新

{

if (frame_count == WINDOW_SIZE)

{

Headers[frame_count - 1] =

Headers[frame_count];

Ps[frame_count - 1] =

Ps[frame_count];

Rs[frame_count - 1] = Rs[frame_count];

if(USE_IMU)

{

for (unsigned int i = 0; i < dt_buf[frame_count].size();

i++)

{

double tmp_dt = dt_buf[frame_count][i];

Vector3d tmp_linear_acceleration =

linear_acceleration_buf[frame_count][i];

Vector3d

tmp_angular_velocity = angular_velocity_buf[frame_count][i];

pre_integrations[frame_count - 1]->push_back(tmp_dt, tmp_linear_acceleration,

tmp_angular_velocity);

dt_buf[frame_count -

1].push_back(tmp_dt);

linear_acceleration_buf[frame_count -

1].push_back(tmp_linear_acceleration);

angular_velocity_buf[frame_count - 1].push_back(tmp_angular_velocity);

}

Vs[frame_count - 1] =

Vs[frame_count];

Bas[frame_count - 1] =

Bas[frame_count];

Bgs[frame_count - 1] =

Bgs[frame_count];

delete pre_integrations[WINDOW_SIZE];

pre_integrations[WINDOW_SIZE]

= new IntegrationBase{acc_0, gyr_0, Bas[WINDOW_SIZE],

Bgs[WINDOW_SIZE]};

dt_buf[WINDOW_SIZE].clear();

linear_acceleration_buf[WINDOW_SIZE].clear();

angular_velocity_buf[WINDOW_SIZE].clear();

}

slideWindowNew();//删除次新帧信息

}

}

}