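(China University MOOC) "Deep Learning Application Development: TensorFlow Practice" (Lecture 8: MNIST Handwritten Digit Recognition, Multilayer Neural Networks and Applications)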

Building a fully connected network with a single hidden layer

%matplotlib notebook

import tensorflow as tf

import tensorflow.examples.tutorials.mnist.input_data as input_data

from time import time

import matplotlib.pyplot as plt

import numpy as np

mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

x = tf.placeholder(tf.float32, [None, 784], name="X")

y = tf.placeholder(tf.float32, [None, 10], name="Y")

H1_NN = 256

W1 = tf.Variable(tf.truncated_normal([784, H1_NN], stddev=0.1))

b1 = tf.Variable(tf.zeros([H1_NN]))

Y1 = tf.nn.relu(tf.matmul(x, W1) + b1)

W2 = tf.Variable(tf.truncated_normal([H1_NN, 10], stddev=0.1))

b2 = tf.Variable(tf.zeros([10]))

forward = tf.matmul(Y1, W2) + b2

pred = tf.nn.softmax(forward)

loss_function = tf.reduce_mean(
    tf.nn.softmax_cross_entropy_with_logits(logits=forward, labels=y))
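Note that the loss is fed `forward` (the raw logits), not `pred`: `tf.nn.softmax_cross_entropy_with_logits` applies the softmax internally. The sketch below illustrates the same quantity in plain Python, with no TensorFlow required. The helper names `softmax_cross_entropy` and `mean_loss` and the sample values are made up for illustration; this is not TensorFlow's implementation, only a minimal model of what it computes.

```python
import math

def softmax_cross_entropy(logits, one_hot_label):
    """Cross-entropy of softmax(logits) against a one-hot label, computed stably."""
    shift = max(logits)  # subtracting the max keeps exp() from overflowing
    log_sum_exp = shift + math.log(sum(math.exp(z - shift) for z in logits))
    log_probs = [z - log_sum_exp for z in logits]  # log-softmax of each class
    return -sum(t * lp for t, lp in zip(one_hot_label, log_probs))

def mean_loss(batch_logits, batch_labels):
    """Average the per-example losses, as tf.reduce_mean does over the batch."""
    losses = [softmax_cross_entropy(z, t) for z, t in zip(batch_logits, batch_labels)]
    return sum(losses) / len(losses)

# Made-up logits; the second row would overflow a naive exp(1000).
batch_logits = [[2.0, 1.0, 0.1], [1000.0, 0.0, 0.0]]
batch_labels = [[1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
print(mean_loss(batch_logits, batch_labels))
```

Working from the logits lets the log and the exponential be combined, which is why the second example stays finite even with a logit of 1000.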

train_epochs = 4

batch_size = 50

total_batch = int(mnist.train.num_examples / batch_size)

display_step = 1

learning_rate = 0.01

optimizer = tf.train.AdamOptimizer(learning_rate).minimize(loss_function)

correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(pred, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
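The two lines above compare the position of the largest entry in each one-hot label with the position of the largest predicted probability, then average the resulting 0/1 hits. A minimal plain-Python sketch of the same idea follows; `argmax`, `batch_accuracy`, and the sample data are hypothetical and only meant to illustrate the computation.

```python
def argmax(values):
    """Index of the largest entry in one row."""
    return max(range(len(values)), key=lambda i: values[i])

def batch_accuracy(one_hot_labels, probabilities):
    """Fraction of rows whose predicted class matches the labelled class."""
    hits = [1.0 if argmax(t) == argmax(p) else 0.0
            for t, p in zip(one_hot_labels, probabilities)]
    return sum(hits) / len(hits)

# Four made-up samples with three classes; the third one is misclassified.
labels = [[0, 1, 0], [1, 0, 0], [0, 0, 1], [0, 1, 0]]
probs = [[0.1, 0.8, 0.1], [0.6, 0.3, 0.1], [0.5, 0.2, 0.3], [0.2, 0.7, 0.1]]
print(batch_accuracy(labels, probs))  # 0.75
```

Because softmax preserves the ordering of its inputs, taking the argmax of `pred` selects the same class as taking the argmax of `forward`.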

startTime = time()

sess = tf.Session()

sess.run(tf.global_variables_initializer())

loss_list = []

for epoch in range(train_epochs):
    # One pass over the training set, one mini-batch at a time
    for batch in range(total_batch):
        xs, ys = mnist.train.next_batch(batch_size)
        sess.run(optimizer, feed_dict={x: xs, y: ys})

    # After each epoch, evaluate loss and accuracy on the validation set
    loss, acc = sess.run([loss_function, accuracy],
                         feed_dict={x: mnist.validation.images,
                                    y: mnist.validation.labels})
    loss_list.append(loss)

    if (epoch + 1) % display_step == 0:
        print("Train Epoch:", "%02d" % (epoch + 1),
              "Loss=", "{:.9f}".format(loss), "Accuracy=", "{:.4f}".format(acc))

duration = time() - startTime

print("Train Finished takes:", "{:.2f}".format(duration))

plt.plot(loss_list)

acc_test = sess.run(accuracy, feed_dict={x: mnist.test.images,
                                         y: mnist.test.labels})
print("Test Accuracy:", acc_test)

prediction_result = sess.run(tf.argmax(pred, 1), feed_dict={x:mnist.test.images})

print(prediction_result[0:10])

compare_list = prediction_result == np.argmax(mnist.test.labels, 1)

print(compare_list)

err_list = [i for i in range(len(compare_list)) if not compare_list[i]]

print(err_list, len(err_list))

def print_predict_errs(labels, prediction):
    # Print every misclassified sample: its index, true label, and predicted label
    compare_list = prediction == np.argmax(labels, 1)
    err_list = [i for i in range(len(compare_list)) if not compare_list[i]]
    for idx in err_list:
        print("index=" + str(idx),
              "label=", np.argmax(labels[idx]),
              "prediction=", prediction[idx])
    print("Total: " + str(len(err_list)))

print_predict_errs(labels=mnist.test.labels, prediction=prediction_result)

def plot_images_lables_prediction(images,
                                  labels,
                                  prediction,
                                  index,
                                  num=10):
    fig = plt.gcf()
    fig.set_size_inches(10, 15)
    if num > 25:
        num = 25
    for i in range(0, num):
        ax = plt.subplot(5, 5, i + 1)
        ax.imshow(np.reshape(images[index], (28, 28)),
                  cmap="binary")
        title = "label=" + str(np.argmax(labels[index]))
        if len(prediction) > 0:
            title += ", predict=" + str(prediction[index])
        ax.set_title(title, fontsize=10)
        ax.set_xticks([])
        ax.set_yticks([])
        index += 1
    plt.show()

plot_images_lables_prediction(mnist.test.images,
                              mnist.test.labels,
                              prediction_result,
                              0, 10)

Extending to two hidden layers

## Two hidden layers

%matplotlib notebook

import tensorflow as tf

import tensorflow.examples.tutorials.mnist.input_data as input_data

from time import time

import matplotlib.pyplot as plt

import numpy as np

mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

x = tf.placeholder(tf.float32, [None, 784], name="X")

y = tf.placeholder(tf.float32, [None, 10], name="Y")

H1_NN = 256

H2_NN = 128

W1 = tf.Variable(tf.truncated_normal([784, H1_NN], stddev=0.1))

b1 = tf.Variable(tf.zeros([H1_NN]))

W2 = tf.Variable(tf.truncated_normal([H1_NN, H2_NN], stddev=0.1))

b2 = tf.Variable(tf.zeros([H2_NN]))

W3 = tf.Variable(tf.truncated_normal([H2_NN, 10], stddev=0.1))

b3 = tf.Variable(tf.zeros([10]))

Y1 = tf.nn.relu(tf.matmul(x, W1) + b1)

Y2 = tf.nn.relu(tf.matmul(Y1, W2) + b2)

forward = tf.matmul(Y2, W3) + b3

pred = tf.nn.softmax(forward)

loss_function = tf.reduce_mean(
    tf.nn.softmax_cross_entropy_with_logits(logits=forward, labels=y))

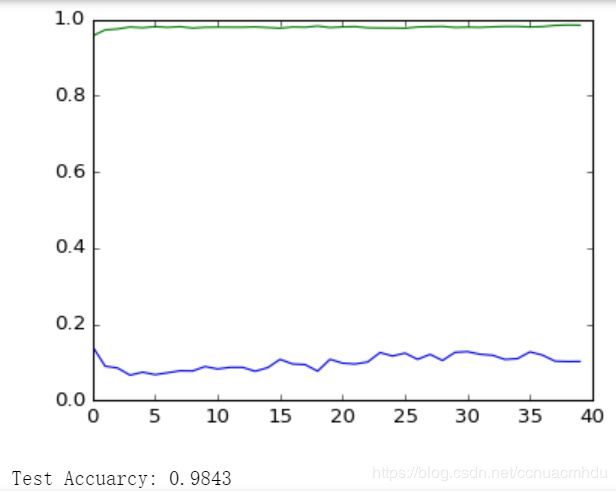
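To get a feel for how much the second hidden layer adds, the trainable parameters of both architectures can be counted from the weight and bias shapes used in the code (784 inputs, hidden sizes 256 and 128, 10 outputs). `dense_param_count` is a hypothetical helper written for this illustration, not part of TensorFlow.

```python
def dense_param_count(layer_sizes):
    """Weights plus biases for a stack of fully connected layers."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))

one_hidden = dense_param_count([784, 256, 10])       # 784 -> 256 -> 10
two_hidden = dense_param_count([784, 256, 128, 10])  # 784 -> 256 -> 128 -> 10
print(one_hidden, two_hidden)  # 203530 235146
```

Most of the parameters sit in the first layer (784 x 256 weights), so the extra hidden layer grows the model by only about 15%.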

train_epochs = 40

batch_size = 50

total_batch = int(mnist.train.num_examples / batch_size)

display_step = 1

learning_rate = 0.01

optimizer = tf.train.AdamOptimizer(learning_rate).minimize(loss_function)

correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(pred, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

startTime = time()

sess = tf.Session()

sess.run(tf.global_variables_initializer())

loss_list = []

for epoch in range(train_epochs):
    # One pass over the training set, one mini-batch at a time
    for batch in range(total_batch):
        xs, ys = mnist.train.next_batch(batch_size)
        sess.run(optimizer, feed_dict={x: xs, y: ys})

    # After each epoch, evaluate loss and accuracy on the validation set
    loss, acc = sess.run([loss_function, accuracy],
                         feed_dict={x: mnist.validation.images,
                                    y: mnist.validation.labels})
    loss_list.append(loss)

    if (epoch + 1) % display_step == 0:
        print("Train Epoch:", "%02d" % (epoch + 1),
              "Loss=", "{:.9f}".format(loss), "Accuracy=", "{:.4f}".format(acc))

duration = time() - startTime

print("Train Finished takes:", "{:.2f}".format(duration))

plt.plot(loss_list)

acc_test = sess.run(accuracy, feed_dict={x: mnist.test.images,
                                         y: mnist.test.labels})
print("Test Accuracy:", acc_test)

prediction_result = sess.run(tf.argmax(pred, 1), feed_dict={x:mnist.test.images})

print(prediction_result[0:10])

compare_list = prediction_result == np.argmax(mnist.test.labels, 1)

print(compare_list)

err_list = [i for i in range(len(compare_list)) if not compare_list[i]]

print(err_list, len(err_list))

def plot_images_lables_prediction(images,
                                  labels,
                                  prediction,
                                  index,
                                  num=10):
    fig = plt.gcf()
    fig.set_size_inches(10, 15)
    if num > 25:
        num = 25
    for i in range(0, num):
        ax = plt.subplot(5, 5, i + 1)
        ax.imshow(np.reshape(images[index], (28, 28)),
                  cmap="binary")
        title = "label=" + str(np.argmax(labels[index]))
        if len(prediction) > 0:
            title += ", predict=" + str(prediction[index])
        ax.set_title(title, fontsize=10)
        ax.set_xticks([])
        ax.set_yticks([])
        index += 1
    plt.show()

plot_images_lables_prediction(mnist.test.images,
                              mnist.test.labels,
                              prediction_result,
                              0, 10)

Surprisingly, with two hidden layers the prediction accuracy went down instead of up!

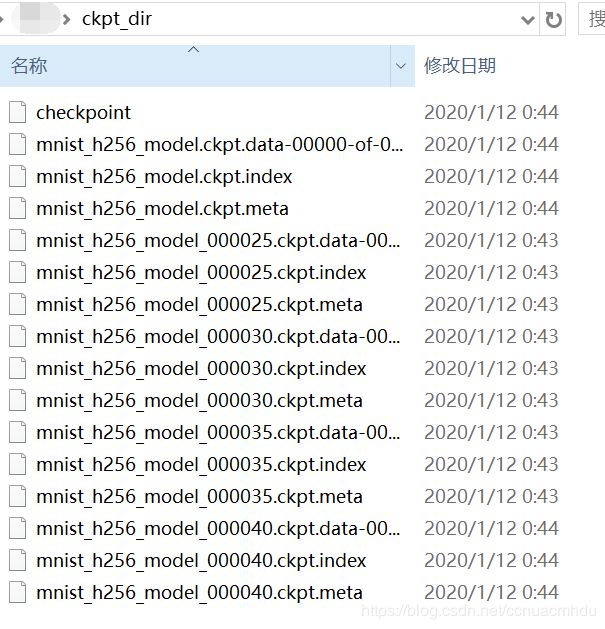

Saving the trained model

## Save the model

%matplotlib notebook

import tensorflow as tf

import tensorflow.examples.tutorials.mnist.input_data as input_data

from time import time

import matplotlib.pyplot as plt

import numpy as np

import os

tf.reset_default_graph()

logDir = "C:\\Users\\20191027\\Documents\\log"  # Log output directory, used for TensorBoard visualization

## 1. Prepare the data

mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

x = tf.placeholder(tf.float32, [None, 784], name="X")

y = tf.placeholder(tf.float32, [None, 10], name="Y")

## 2. Build the model

H1_NN = 256

W1 = tf.Variable(tf.truncated_normal([784, H1_NN], stddev=0.1))

b1 = tf.Variable(tf.zeros([H1_NN]))

Y1 = tf.nn.relu(tf.matmul(x, W1) + b1)

W2 = tf.Variable(tf.truncated_normal([H1_NN, 10], stddev=0.1))

b2 = tf.Variable(tf.zeros([10]))

forward = tf.matmul(Y1, W2) + b2

pred = tf.nn.softmax(forward)

loss_function = tf.reduce_mean(
    tf.nn.softmax_cross_entropy_with_logits(logits=forward, labels=y))

## 3. Train the model

train_epochs = 40

batch_size = 40

total_batch = int(mnist.train.num_examples / batch_size)

display_step = 1

learning_rate = 0.001

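save_step = 5  # Checkpoint interval (save once every save_step epochs); only the 5 most recent checkpoints are kept
ckpt_dir = "./ckpt_dir/"  # Directory where the checkpoints are saved
if not os.path.exists(ckpt_dir):
    os.makedirs(ckpt_dir)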

optimizer = tf.train.AdamOptimizer(learning_rate).minimize(loss_function)

correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(pred, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

# Create the Saver only after all variables have been declared; it is used to save the model

saver = tf.train.Saver()

startTime = time()

sess = tf.Session()

sess.run(tf.global_variables_initializer())

loss_list = []  # Loss recorded after each training epoch
acc_list = []   # Accuracy recorded after each training epoch

for epoch in range(train_epochs):
    for batch in range(total_batch):
        xs, ys = mnist.train.next_batch(batch_size)
        sess.run(optimizer, feed_dict={x: xs, y: ys})

    # After each epoch, evaluate loss and accuracy on the validation set
    loss, acc = sess.run([loss_function, accuracy],
                         feed_dict={x: mnist.validation.images,
                                    y: mnist.validation.labels})
    loss_list.append(loss)
    acc_list.append(acc)

    if (epoch + 1) % display_step == 0:
        print("Train Epoch:", "%02d" % (epoch + 1),
              "Loss=", "{:.9f}".format(loss), "Accuracy=", "{:.4f}".format(acc))

    # Save a checkpoint every save_step epochs
    if (epoch + 1) % save_step == 0:
        saver.save(sess, os.path.join(ckpt_dir, 'mnist_h256_model_{:06d}.ckpt'.format(epoch + 1)))
        print('mnist_h256_model_{:06d}.ckpt saved'.format(epoch + 1))

# Save the final model
saver.save(sess, os.path.join(ckpt_dir, 'mnist_h256_model.ckpt'))
print("Model saved!")

duration = time() - startTime

print("Train Finished takes:", "{:.2f}".format(duration))

plt.plot(loss_list)  # Plot the loss against the training epoch
plt.plot(acc_list)  # Plot the accuracy against the training epoch

## 4. Prediction

acc_test = sess.run(accuracy, feed_dict={x: mnist.test.images,
                                         y: mnist.test.labels})
print("Test Accuracy:", acc_test)

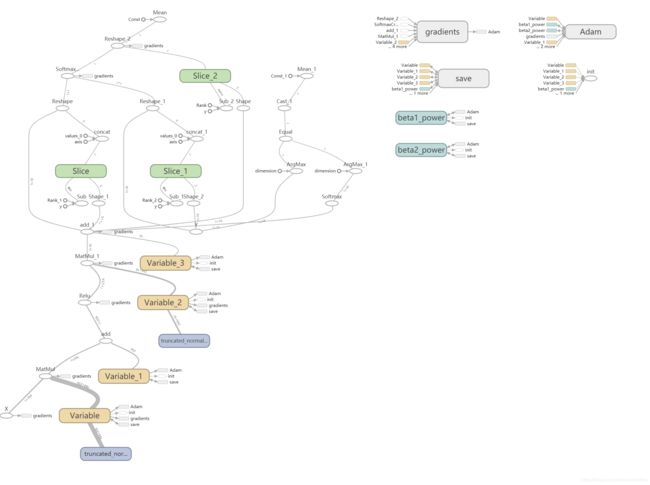
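The checkpointing schedule in the training loop can be traced without running TensorFlow. The sketch below is a plain-Python model of it, assuming the default `max_to_keep=5` of `tf.train.Saver` and the values `train_epochs = 40`, `save_step = 5` from the code; `simulate_checkpoints` is a hypothetical helper, not a TensorFlow function.

```python
from collections import deque

def simulate_checkpoints(train_epochs, save_step, max_to_keep=5):
    """Return (every name saved, names still kept) for the training loop."""
    saved = []
    kept = deque(maxlen=max_to_keep)  # the oldest entry drops out once the limit is reached
    for epoch in range(train_epochs):
        if (epoch + 1) % save_step == 0:
            name = "mnist_h256_model_{:06d}.ckpt".format(epoch + 1)
            saved.append(name)
            kept.append(name)
    final = "mnist_h256_model.ckpt"  # the extra save after the loop
    saved.append(final)
    kept.append(final)
    return saved, list(kept)

saved, kept = simulate_checkpoints(train_epochs=40, save_step=5)
print(len(saved), "saved; kept:", kept)
```

Under these assumptions nine checkpoints are written, and the ones left on disk are those for epochs 25, 30, 35, and 40 plus the final `mnist_h256_model.ckpt`.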

# Write the graph to a log for visualization in TensorBoard

writer = tf.summary.FileWriter(logDir, tf.get_default_graph())

writer.close()

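The log files used by TensorBoard to visualize the computation graph are written to logDir.

The files used to restore the model are written to ckpt_dir.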

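Restoring the saved model

I ran into a lot of problems here! Following the instructor's video, I could not restore the model. How can this be fixed?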

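- What if TensorBoard cannot display the computation graph?

Press Win+R, type cmd to open a command prompt, then run tensorboard --logdir=<log directory>

(For how to visualize the computation graph, see this blog post)

- Oddly enough, I searched for a long time without finding an answer, and after a lot of fiddling it simply started working on its own. StackOverflow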

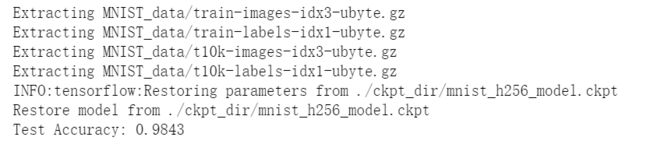

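## Restore the saved model (there is a strange bug: the cell has to be run once with tf.reset_default_graph(), then again with it removed)

# (Oddly, after all that searching I never found a solution; it just started working on its own.)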

import tensorflow as tf

import tensorflow.examples.tutorials.mnist.input_data as input_data

import numpy as np

import matplotlib.pyplot as plt

import cv2

tf.reset_default_graph()  # This line is required

mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

x = tf.placeholder(tf.float32, [None, 784], name="X")

y = tf.placeholder(tf.float32, [None, 10], name="Y")

H1_NN = 256

W1 = tf.Variable(tf.truncated_normal([784, H1_NN], stddev=0.1))

b1 = tf.Variable(tf.zeros([H1_NN]))

Y1 = tf.nn.relu(tf.matmul(x, W1) + b1)

W2 = tf.Variable(tf.truncated_normal([H1_NN, 10], stddev=0.1))

b2 = tf.Variable(tf.zeros([10]))

forward = tf.matmul(Y1, W2) + b2

pred = tf.nn.softmax(forward)

correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(pred, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

ckpt_dir = "./ckpt_dir/"  # Directory where the checkpoints were saved

saver = tf.train.Saver()

sess = tf.Session()

sess.run(tf.global_variables_initializer())

ckpt = tf.train.get_checkpoint_state(ckpt_dir)

if ckpt and ckpt.model_checkpoint_path:
    # Load the variable values from the most recent checkpoint
    saver.restore(sess, ckpt.model_checkpoint_path)
    print("Restore model from " + ckpt.model_checkpoint_path)

# Prediction
print("Test Accuracy:", accuracy.eval(session=sess,
                                      feed_dict={x: mnist.test.images, y: mnist.test.labels}))
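One plausible explanation for the restore trouble (an assumption, since the post does not show the error message) is variable naming. Variables created with `tf.Variable(...)` and no `name` argument get default names such as `Variable`, `Variable_1`, and so on, and the checkpoint stores values under those names. If the model is rebuilt in a notebook kernel whose default graph already holds an earlier copy, the new variables get shifted names that the checkpoint does not contain. The toy model below imitates only that naming rule; `ToyGraph` and `build_model` are hypothetical and are not TensorFlow code.

```python
class ToyGraph:
    """Tiny stand-in for a TF1 graph: hands out unique default variable names."""

    def __init__(self):
        self.names = []

    def new_variable(self, base="Variable"):
        # The first variable keeps the base name; later ones get _1, _2, ...
        name = base if not self.names else "{}_{}".format(base, len(self.names))
        self.names.append(name)
        return name

def build_model(graph):
    """Create W1, b1, W2, b2 in order, as the restore script does."""
    return [graph.new_variable() for _ in range(4)]

checkpoint_keys = build_model(ToyGraph())  # names stored when training in a fresh graph

stale = ToyGraph()
build_model(stale)            # an earlier cell already built the model
rebuilt = build_model(stale)  # same graph, no reset: the names shift

fresh = build_model(ToyGraph())  # the situation after tf.reset_default_graph()

print(rebuilt)
print(fresh == checkpoint_keys)
```

In this model only the freshly reset graph produces names that match the checkpoint, which is consistent with the comment that the `tf.reset_default_graph()` line is required.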

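Note

This post is based on the official China University MOOC course "Deep Learning Application Development: TensorFlow Practice" (《深度学习应用开发-TensorFlow实践》) by 吴明晖, 李卓蓉, and 金苍宏.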