MyBatis-Plus: Basic Usage

Introduction

Documentation

Official site

GitHub

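Features

- Non-invasive: it only enhances MyBatis and changes nothing, so adding it has no impact on an existing project.

- Low overhead: basic CRUD is injected automatically at startup with essentially no performance cost, and you work directly with objects.

- Powerful CRUD operations: a built-in generic Mapper and generic Service cover most single-table CRUD operations with very little configuration, and a powerful condition builder handles a wide range of query needs.

- Lambda-style calls: write query conditions with lambda expressions, so you no longer have to worry about misspelled field names.

- Automatic primary key generation: up to 4 primary key strategies (including a distributed unique ID generator, Sequence), freely configurable.

- ActiveRecord mode: an entity class only needs to extend Model to perform CRUD operations on itself.

- Custom global operations: supports injecting global generic methods (Write once, use anywhere).

- Built-in code generator: generate Mapper, Model, Service and Controller code from code or a Maven plugin, with template engine support and many customization options.

- Built-in pagination plugin: physical pagination on top of MyBatis; once the plugin is configured, a paged query is written just like an ordinary List query.

- The pagination plugin supports many databases: MySQL, MariaDB, Oracle, DB2, H2, HSQL, SQLite, PostgreSQL, SQL Server and more.

- Built-in performance analysis plugin: prints each SQL statement with its execution time; enable it during development and testing to catch slow queries quickly.

- Built-in global interception plugin: analyzes and blocks full-table delete and update operations, and supports custom interception rules to prevent accidental data loss.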

Configuration and basic usage

- pom.xml

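```xml
<!-- MySQL driver; no version given, so it is managed by the Spring Boot parent -->
<dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
</dependency>
<!-- Note: the strictInsertFill/strictUpdateFill calls used later require 3.3.0 or newer -->
<dependency>
    <groupId>com.baomidou</groupId>
    <artifactId>mybatis-plus-boot-starter</artifactId>
    <version>3.0.5</version>
</dependency>
<dependency>
    <groupId>org.projectlombok</groupId>
    <artifactId>lombok</artifactId>
    <optional>true</optional>
</dependency>
```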

- yml

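```yaml
spring:
  datasource:
    username: xxx
    password: xxx
    url: jdbc:mysql://120.79.18.7:32553/test_xf?characterEncoding=utf-8&useSSL=false
    # com.mysql.jdbc.Driver is the Connector/J 5.x class; with Connector/J 8.x use com.mysql.cj.jdbc.Driver
    driver-class-name: com.mysql.jdbc.Driver
```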

- Entity class

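```java
import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

/**
 * 1. The class name matches the table name (Employee -> employee)
 * 2. The fields match the columns (camelCase lastName maps to last_name by default)
 */
@Data
@AllArgsConstructor
@NoArgsConstructor
public class Employee {
    private Integer id;
    private String lastName;
    private String email;
    private String gender;
    private Integer age;
}
```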

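- Write the mapper interface

```java
import com.baomidou.mybatisplus.core.mapper.BaseMapper;
import org.springframework.stereotype.Repository;

// The mapper extends the generic base interface BaseMapper
@Repository
public interface EmployeeMapper extends BaseMapper<Employee> {
    /*
     * All basic CRUD methods are already available at this point
     */
}
```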

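- Main application class

```java
import org.mybatis.spring.annotation.MapperScan;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

// Scan the package that contains the mapper interfaces
@MapperScan("com.bnmzy.mybatisplus.mapper")
@SpringBootApplication
public class MybatisPlus01Application {

    public static void main(String[] args) {
        SpringApplication.run(MybatisPlus01Application.class, args);
    }
}
```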

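- Test class

```java
import org.junit.jupiter.api.Test;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;

import java.util.List;

/**
 * 1. EmployeeMapper extends BaseMapper, so all of these methods come from the parent interface
 * 2. You can also write your own extension methods
 */
@SpringBootTest
class MybatisPlus01ApplicationTests {

    @Autowired
    private EmployeeMapper employeeMapper;

    @Test
    void testList() {
        // The parameter is a Wrapper (condition builder); it is not used yet, so pass null
        // Query all employees
        List<Employee> employees = employeeMapper.selectList(null);
        employees.forEach(System.out::println);
    }
}
```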

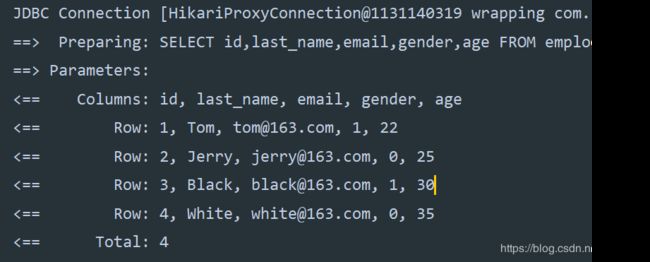

Configuring logging

Right now none of the executed SQL is visible. To see how each statement is executed, we need to enable logging.

- yml

```yaml
# Configure logging (here: MyBatis's standard-output logger)
mybatis-plus:
  configuration:
    log-impl: org.apache.ibatis.logging.stdout.StdOutImpl
```

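Extended CRUD operations

1. Insert

If the entity object has no id (primary key) set, one is generated automatically (see the primary key strategies below). The default generated value is a large 64-bit number, so with an Integer id field and an auto-increment INT column the insert fails; in that case put @TableId on the primary key field, as described below.

Testing an insert: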

```java
Employee employee = new Employee();
employee.setLastName("Acai");
employee.setAge(22);
employee.setEmail("[email protected]");
employee.setGender("1");
// employee.setId(5);
// No id is set, so one is generated automatically
int insert = employeeMapper.insert(employee);
// Number of affected rows
System.out.println(insert);
// The generated id is written back into this object
System.out.println(employee);
```

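1.1 Primary key generation strategies

Snowflake algorithm: a 64-bit ID made up of

1 bit (always 0, unused) + 41 bits (timestamp in milliseconds) + 5 bits (data center id) + 5 bits (machine id) + 12 bits (sequence number, i.e. up to 4096 IDs per millisecond on each machine)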
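The bit layout above can be illustrated in plain Java. This is a minimal sketch, not MyBatis-Plus's own Sequence/IdWorker code: the class SnowflakeLayout and its methods are hypothetical, and the timestamp is taken as milliseconds since some custom epoch chosen by the caller.

```java
/**
 * Hypothetical helper showing the Snowflake layout:
 * 1 sign bit (0) | 41-bit timestamp | 5-bit data center | 5-bit machine | 12-bit sequence.
 */
final class SnowflakeLayout {
    static final int SEQUENCE_BITS = 12;
    static final int MACHINE_BITS = 5;
    static final int DATACENTER_BITS = 5;
    static final int TIMESTAMP_BITS = 41;

    static final int MACHINE_SHIFT = SEQUENCE_BITS;                                    // 12
    static final int DATACENTER_SHIFT = SEQUENCE_BITS + MACHINE_BITS;                  // 17
    static final int TIMESTAMP_SHIFT = SEQUENCE_BITS + MACHINE_BITS + DATACENTER_BITS; // 22

    static final long MAX_SEQUENCE = (1L << SEQUENCE_BITS) - 1;     // 4095, so 4096 IDs per ms
    static final long MAX_MACHINE = (1L << MACHINE_BITS) - 1;       // 31
    static final long MAX_DATACENTER = (1L << DATACENTER_BITS) - 1; // 31
    static final long MAX_TIMESTAMP = (1L << TIMESTAMP_BITS) - 1;

    private SnowflakeLayout() {}

    /** Packs the four parts into one non-negative 64-bit ID. */
    static long compose(long timestamp, long datacenterId, long machineId, long sequence) {
        check(timestamp, MAX_TIMESTAMP, "timestamp");
        check(datacenterId, MAX_DATACENTER, "datacenterId");
        check(machineId, MAX_MACHINE, "machineId");
        check(sequence, MAX_SEQUENCE, "sequence");
        return (timestamp << TIMESTAMP_SHIFT)
                | (datacenterId << DATACENTER_SHIFT)
                | (machineId << MACHINE_SHIFT)
                | sequence;
    }

    // Unpack each part again by shifting and masking
    static long timestamp(long id)    { return id >>> TIMESTAMP_SHIFT; }
    static long datacenterId(long id) { return (id >>> DATACENTER_SHIFT) & MAX_DATACENTER; }
    static long machineId(long id)    { return (id >>> MACHINE_SHIFT) & MAX_MACHINE; }
    static long sequence(long id)     { return id & MAX_SEQUENCE; }

    private static void check(long value, long max, String name) {
        if (value < 0 || value > max) {
            throw new IllegalArgumentException(name + " out of range: " + value);
        }
    }
}
```

For example, SnowflakeLayout.compose(1, 0, 0, 0) equals 1L << 22, i.e. 4194304: a single millisecond after the epoch is already above four million, which is why such IDs belong in a Long/BIGINT column rather than an Integer/INT one.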

1.2 @TableId()

- Default (what applies when the annotation is absent)

```java
@TableId(type = IdType.ID_WORKER, value = "")
Integer id;
```

- Annotation source

```java
public @interface TableId {
    // Primary key column name
    String value() default "";
    // Primary key type
    IdType type() default IdType.NONE;
}
```

1.3 IdType

```java

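public enum IdType {
    // Database auto-increment ID
    AUTO(0),
    // No primary key type set
    NONE(1),
    /**
     * ID entered by the user
     * Can be filled by registering your own auto-fill plugin
     */
    INPUT(2),

    /* The following 3 types are filled automatically only when the inserted object's ID is null. */
    // Globally unique ID (idWorker)
    ID_WORKER(3),
    // Globally unique ID (UUID)
    UUID(4),
    // Globally unique ID as a string (string form of idWorker)
    ID_WORKER_STR(5);

    private int key;

    IdType(int key) {
        this.key = key;
    }
}
```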

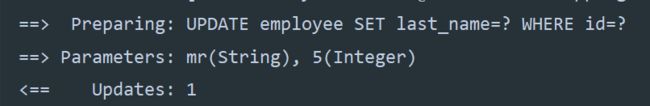
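The enum comment says ID_WORKER, UUID and ID_WORKER_STR only apply when the object's ID is null. A hypothetical sketch of that decision: the IdAssigner class is made up, the ID generator is passed in as a LongSupplier so any Snowflake-style source can be plugged in, and the UUID form (32 characters without dashes) is an assumption about the strategy's output format.

```java
import java.util.function.LongSupplier;

final class IdAssigner {
    /** Same constant names as the IdType enum above. */
    enum Strategy { AUTO, NONE, INPUT, ID_WORKER, UUID, ID_WORKER_STR }

    private IdAssigner() {}

    /**
     * Returns the ID to use for an insert. An existing non-null ID is always kept;
     * otherwise only ID_WORKER, UUID and ID_WORKER_STR generate one.
     * AUTO leaves it null so the database auto-increment assigns it; NONE and INPUT
     * are simplified to "leave it null" in this sketch.
     */
    static Object assign(Strategy strategy, Object currentId, LongSupplier idWorker) {
        if (currentId != null) {
            return currentId;
        }
        switch (strategy) {
            case ID_WORKER:
                return idWorker.getAsLong();
            case ID_WORKER_STR:
                return String.valueOf(idWorker.getAsLong());
            case UUID:
                // Fully qualified to avoid confusion with the enum constant UUID
                return java.util.UUID.randomUUID().toString().replace("-", "");
            default:
                return null;
        }
    }
}
```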

2. Update

- Code

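```java
Employee employee = new Employee();
employee.setId(5);
// The SQL is assembled dynamically from the fields that are set (non-null)
employee.setLastName("mr");
// The argument is of type T, the generic type parameter of the mapper
int result = employeeMapper.updateById(employee);
System.out.println(result);
```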

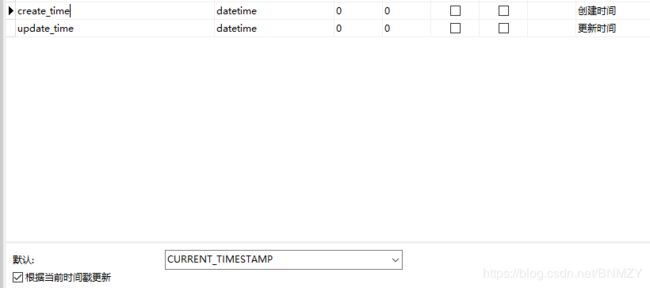
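To make "the SQL is assembled from the fields that are set" concrete, here is a simplified, reflection-based sketch of the kind of statement updateById ends up running. It is not MyBatis-Plus's implementation (which, roughly speaking, generates a MyBatis script with a null check per field); the UpdateByIdSketch class, the table name argument and the rule that the key property is called id are assumptions for illustration.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.List;

final class UpdateByIdSketch {
    private UpdateByIdSketch() {}

    /** camelCase property name to snake_case column name, e.g. lastName -> last_name. */
    static String toColumn(String property) {
        StringBuilder sb = new StringBuilder();
        for (char c : property.toCharArray()) {
            if (Character.isUpperCase(c)) {
                sb.append('_').append(Character.toLowerCase(c));
            } else {
                sb.append(c);
            }
        }
        return sb.toString();
    }

    /** Builds "UPDATE table SET col = ?, ... WHERE id = ?" from the non-null properties only. */
    static String buildUpdateById(String table, Object entity) {
        List<String> assignments = new ArrayList<>();
        Object id = null;
        try {
            for (Field field : entity.getClass().getDeclaredFields()) {
                // Skip compiler-generated and static fields; they are not columns
                if (field.isSynthetic() || Modifier.isStatic(field.getModifiers())) {
                    continue;
                }
                field.setAccessible(true);
                Object value = field.get(entity);
                if ("id".equals(field.getName())) {
                    id = value;
                } else if (value != null) {
                    assignments.add(toColumn(field.getName()) + " = ?");
                }
            }
        } catch (IllegalAccessException e) {
            throw new IllegalStateException(e);
        }
        if (id == null) {
            throw new IllegalArgumentException("id must be set for updateById");
        }
        if (assignments.isEmpty()) {
            throw new IllegalArgumentException("no non-null fields to update");
        }
        return "UPDATE " + table + " SET " + String.join(", ", assignments) + " WHERE id = ?";
    }
}
```

With the entity from the update code above (id = 5, lastName = "mr", everything else null) this yields UPDATE employee SET last_name = ? WHERE id = ?. The field order returned by getDeclaredFields is not guaranteed, so with several non-null fields the order of the SET list may vary.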

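3. Auto-fill

- Creation and modification timestamps should be maintained automatically; we don't want to update them by hand.

- Alibaba Java Development Manual: every database table should have gmt_create and gmt_modified columns; nearly all tables need them.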

Option 1: database level

Option 2: code level

- In the entity

```java
// Filled automatically on insert (when the property is null)
@TableField(fill = FieldFill.INSERT)
private LocalDateTime createTime;

// Filled automatically on insert and update (when the property is null)
@TableField(fill = FieldFill.INSERT_UPDATE)
private LocalDateTime updateTime;
```

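- Custom MetaObjectHandler implementation

```java
import com.baomidou.mybatisplus.core.handlers.MetaObjectHandler;
import lombok.extern.slf4j.Slf4j;
import org.apache.ibatis.reflection.MetaObject;
import org.springframework.stereotype.Component;

import java.time.LocalDateTime;

@Slf4j
@Component
public class MyMetaObjectHandler implements MetaObjectHandler {

    @Override
    public void insertFill(MetaObject metaObject) {
        log.info("start insert fill...");
        /*
         * Older style (superseded by the strict fill methods since 3.3.0):
         * default MetaObjectHandler setFieldValByName(String fieldName, Object fieldVal, MetaObject metaObject)
         * arguments: 1. field name  2. field value  3. the object being filled
         */
        this.setFieldValByName("createTime", LocalDateTime.now(), metaObject);
        this.setFieldValByName("updateTime", LocalDateTime.now(), metaObject);

        /*
         * Newer style (3.3.0+): only fills a field that is still null.
         * In practice pick one style; after the calls above these fields are already set.
         */
        this.strictInsertFill(metaObject, "createTime", LocalDateTime.class, LocalDateTime.now());
        // this.strictInsertFill(metaObject, "updateTime", LocalDateTime.class, LocalDateTime.now());
        this.fillStrategy(metaObject, "updateTime", LocalDateTime.now());
    }

    @Override
    public void updateFill(MetaObject metaObject) {
        log.info("start update fill...");
        // On update, use the update counterpart of strictInsertFill
        this.strictUpdateFill(metaObject, "updateTime", LocalDateTime.class, LocalDateTime.now());
    }
}
```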
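The strict fill methods only write a value when the property is still null, so a value the caller set explicitly is kept. A plain-Java sketch of that rule, using a Map of property values in place of MyBatis-Plus's MetaObject (the FillIfNullSketch class and its method are hypothetical):

```java
import java.util.Map;
import java.util.function.Supplier;

final class FillIfNullSketch {
    private FillIfNullSketch() {}

    /**
     * Writes the supplied value only when the property is missing or null.
     * The supplier is only called when a value is actually needed.
     * Returns true if the property was filled.
     */
    static boolean fillIfNull(Map<String, Object> properties, String property, Supplier<?> value) {
        if (properties.get(property) != null) {
            return false; // an explicitly set value wins
        }
        properties.put(property, value.get());
        return true;
    }
}
```

Taking a Supplier instead of a ready-made value is a choice made for this sketch, so that something like LocalDateTime.now() is evaluated only when the field really gets filled.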

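4. Optimistic locking

How optimistic locking works:

- When a record is read, its current version is read with it

- The update carries that version along

- The update executes set version = newVersion where version = oldVersion

- If the version no longer matches, the update fails

Setup:

- Add a version column to the table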

- Entity class

```java
// Marks this field as the optimistic-lock version
@Version
private Integer version;
```

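- Register the plugin

```java
import com.baomidou.mybatisplus.extension.plugins.OptimisticLockerInterceptor;
import org.mybatis.spring.annotation.MapperScan;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.transaction.annotation.EnableTransactionManagement;

@MapperScan("com.bnmzy.mybatisplus.mapper")
@EnableTransactionManagement
@Configuration
public class MybatisPlusConfig {

    // Register the optimistic lock plugin
    @Bean
    public OptimisticLockerInterceptor optimisticLockerInterceptor() {
        return new OptimisticLockerInterceptor();
    }
}
```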

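- Test

```java
// Test optimistic locking
@Test
void testOptimisticLocker() {
    // Reading the record also reads its current version
    Employee employee = employeeMapper.selectById(6);
    employee.setLastName("Acai");
    employee.setEmail("[email protected]");
    // The update sets version to old version + 1 and adds "AND version = old version" to the WHERE clause
    employeeMapper.updateById(employee);
}
```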

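- Triggering an optimistic lock conflict

```java
// First client reads record 8
Employee employee = employeeMapper.selectById(8);
employee.setLastName("Em1");
employee.setEmail("[email protected]");

// Second client reads the same record and updates it first, which bumps the version
Employee employee2 = employeeMapper.selectById(8);
employee2.setLastName("Em2");
employee2.setEmail("[email protected]");
employeeMapper.updateById(employee2);

// employee still carries the old version, so the optimistic lock rejects this update (0 rows affected);
// a retry loop can re-read the record and submit again
employeeMapper.updateById(employee);
```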
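The version check and the retry idea can be simulated without a database. In this hypothetical in-memory model (the class OptimisticRow and its methods are made up for illustration), updateIfVersionMatches stands in for UPDATE ... SET ..., version = version + 1 WHERE id = ? AND version = ?, and a false result corresponds to updateById affecting 0 rows.

```java
/** Hypothetical single row protected by a version number. */
final class OptimisticRow {
    private String lastName;
    private int version = 1;

    OptimisticRow(String lastName) {
        this.lastName = lastName;
    }

    synchronized int readVersion() {
        return version;
    }

    synchronized String readLastName() {
        return lastName;
    }

    /** Succeeds only if nobody changed the row since expectedVersion was read; then bumps the version. */
    synchronized boolean updateIfVersionMatches(String newLastName, int expectedVersion) {
        if (version != expectedVersion) {
            return false; // stale version: nothing is written
        }
        lastName = newLastName;
        version = expectedVersion + 1;
        return true;
    }

    /** Retry loop: re-read the current version and try again, at most maxAttempts times. */
    boolean updateWithRetry(String newLastName, int maxAttempts) {
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            int current = readVersion();
            // A real application would also re-read the data and re-apply its change here
            if (updateIfVersionMatches(newLastName, current)) {
                return true;
            }
        }
        return false;
    }
}
```

Replaying the conflict scenario: both reads see version 1, the second client's update moves the row to version 2, and the first client's update with version 1 is rejected until it re-reads the version.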

5. Query (select)

5.1 selectById

```java
Employee employee = employeeMapper.selectById(1);
System.out.println(employee);
```

5.2 selectBatchIds

Batch query by a list of IDs

```java
List<Employee> employees = employeeMapper.selectBatchIds(Arrays.asList(1, 2, 3));
employees.forEach(System.out::println);
```

5.3 selectByMap

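One form of conditional query: conditions supplied as a map

```java
HashMap<String, Object> map = new HashMap<>();
// Conditions to query by (keys are column names, not property names)
map.put("last_name", "Acai");
map.put("email", "[email protected]");
List<Employee> employees = employeeMapper.selectByMap(map);
employees.forEach(System.out::println);
```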
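Each map entry becomes an equality condition on the column named by its key, and the conditions are joined with AND. A rough sketch of the resulting SQL shape; the SelectByMapSketch class is hypothetical, it uses SELECT * for brevity whereas MyBatis-Plus typically lists the entity's columns, and the values are bound as parameters rather than concatenated.

```java
import java.util.Map;
import java.util.StringJoiner;

final class SelectByMapSketch {
    private SelectByMapSketch() {}

    /** Builds "SELECT * FROM table WHERE col1 = ? AND col2 = ?"; map keys are column names. */
    static String build(String table, Map<String, Object> columnMap) {
        StringBuilder sql = new StringBuilder("SELECT * FROM ").append(table);
        if (!columnMap.isEmpty()) {
            StringJoiner where = new StringJoiner(" AND ", " WHERE ", "");
            for (String column : columnMap.keySet()) {
                where.add(column + " = ?"); // the value is bound as a parameter, never concatenated
            }
            sql.append(where);
        }
        return sql.toString();
    }
}
```

A LinkedHashMap keeps the conditions in insertion order; with the HashMap used above the order of the AND terms may differ, which does not change the result.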

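6. Paginated queries

Other options: a raw LIMIT clause, PageHelper…

- Register the interceptor as a @Bean (placed here in the custom MetaObjectHandler class; a @Configuration class such as MybatisPlusConfig also works)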

```java
@Bean // taken from the official documentation
public PaginationInterceptor paginationInterceptor() {
    PaginationInterceptor paginationInterceptor = new PaginationInterceptor();
    // What to do when the requested page is past the last page: true jumps back to the first page, false keeps requesting; default false
    // paginationInterceptor.setOverflow(false);
    // Maximum number of rows per page, default 500; -1 means no limit
    // paginationInterceptor.setLimit(500);
    // Enable join optimization for the count query (only applies to some LEFT JOINs)
    paginationInterceptor.setCountSqlParser(new JsqlParserCountOptimize(true));
    return paginationInterceptor;
}
```

- Usage

```java
// Page 1, page size 3
Page<Employee> employeePage = new Page<>(1, 3);
employeeMapper.selectPage(employeePage, null);
// Records on this page
employeePage.getRecords().forEach(System.out::println);
// Total number of records
System.out.println(employeePage.getTotal());
// Current page number
System.out.println(employeePage.getCurrent());
// Page size
System.out.println(employeePage.getSize());
// Total number of pages
System.out.println(employeePage.getPages());
```
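The numbers the page object reports follow simple arithmetic. A minimal sketch (PageMath is a hypothetical helper, not a MyBatis-Plus class): the offset skips (current - 1) * size rows, and the page count rounds total / size up. The exact LIMIT syntax that gets emitted depends on the configured database dialect; the MySQL form here is an assumption.

```java
final class PageMath {
    private PageMath() {}

    /** Rows to skip for a 1-based page number: (current - 1) * size. */
    static long offset(long current, long size) {
        if (current < 1 || size < 1) {
            throw new IllegalArgumentException("current and size must be >= 1");
        }
        return (current - 1) * size;
    }

    /** Number of pages: total / size rounded up, using integer arithmetic only. */
    static long pages(long total, long size) {
        if (total < 0 || size < 1) {
            throw new IllegalArgumentException("total must be >= 0 and size >= 1");
        }
        return (total + size - 1) / size;
    }

    /** MySQL-style LIMIT clause for the page (assumed dialect). */
    static String mysqlLimit(long current, long size) {
        return "LIMIT " + offset(current, size) + "," + size;
    }
}
```

For new Page<>(1, 3) over 10 rows this gives offset 0, LIMIT 0,3 and 4 pages.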

7. Basic deletion

```java
// Delete by id
@Test
void testDeleteById() {
    employeeMapper.deleteById(9);
}

// Batch delete by ids
@Test
void testDeleteBatchId() {
    employeeMapper.deleteBatchIds(Arrays.asList(10, 11));
}

// Delete by a map of column name to value
@Test
void testDeleteMap() {
    HashMap<String, Object> columnMap = new HashMap<>();
    columnMap.put("last_name", "FF");
    employeeMapper.deleteByMap(columnMap);
}
```

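8. Logical deletion

Physical deletion: the row is removed from the database.

Logical deletion: the row stays in the database but is marked invalid through a flag column, e.g. deleted = 0 ==> deleted = 1.

Use case: an administrator can still view deleted records, which prevents data loss, much like a recycle bin.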
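Conceptually, with logical deletion enabled a delete turns into an update of the flag, and queries get an extra condition on it. A string-level sketch, assuming a flag column named deleted with 0 meaning live and 1 meaning deleted as in the text above; LogicDeleteSketch is hypothetical, and MyBatis-Plus configures the column and values through its own settings, which this sketch does not model.

```java
/**
 * Hypothetical sketch of how logical deletion rewrites SQL.
 * Assumes a flag column named "deleted" with 0 = live and 1 = deleted.
 */
final class LogicDeleteSketch {
    static final String FLAG_COLUMN = "deleted";
    static final int NOT_DELETED = 0;
    static final int DELETED = 1;

    private LogicDeleteSketch() {}

    /** A physical "DELETE FROM table WHERE id = ?" becomes an UPDATE of the flag. */
    static String deleteById(String table) {
        return "UPDATE " + table + " SET " + FLAG_COLUMN + " = " + DELETED
                + " WHERE id = ? AND " + FLAG_COLUMN + " = " + NOT_DELETED;
    }

    /** Queries only see rows whose flag is still NOT_DELETED. */
    static String selectById(String table) {
        return "SELECT * FROM " + table + " WHERE id = ? AND " + FLAG_COLUMN + " = " + NOT_DELETED;
    }
}
```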

How it works

How MP injects SQL at startup

I'll analyze this once I've leveled up!