Using IDEA to connect to remote Spark and work with Hive: steps and pitfalls

I ran into a few pitfalls while setting this up in IDEA and worked through them. Without further ado, here are the steps.

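1. Configure a local HADOOP_HOME

Download the Windows build of winutils.

URL: https://github.com/srccodes/hadoop-common-2.2.0-bin. Download the project as a zip; the file is named hadoop-common-2.2.0-bin-master.zip. Extract it to any directory, for example E:\hadoop-common\.

There are two ways to make the local winutils visible to the program.

The first is to add this line at the very start of the code: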

System.setProperty("hadoop.home.dir", "E:\\hadoop-common")

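The second is to set an environment variable (I tried this, but it did not take effect for me; note that environment variable changes are only seen by processes started afterwards, so IDEA has to be restarted).

Add a user variable HADOOP_HOME whose value is the directory the zip was extracted to (E:\hadoop-common), then add %HADOOP_HOME%\bin to the system Path variable.

Why this is needed: the program uses HADOOP_HOME to locate winutils.exe. Windows has no such variable configured by default, so the lookup yields null and the program fails with an error mentioning null\bin\winutils.exe.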

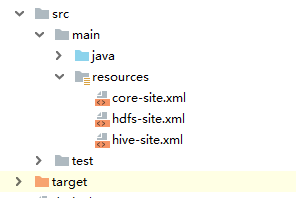
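To see where the null in that path comes from, here is a minimal sketch of the lookup in plain Scala. It assumes Hadoop checks the hadoop.home.dir system property first and falls back to the HADOOP_HOME environment variable; the object and method names (WinutilsCheck, resolveHadoopHome, winutilsPath) are made up for this illustration and are not part of Hadoop.

```scala
import java.io.File

object WinutilsCheck {
  /** System property first, then environment variable; blank values count as unset. */
  def resolveHadoopHome(sysProp: Option[String], envVar: Option[String]): Option[String] =
    sysProp.orElse(envVar).filter(_.trim.nonEmpty)

  /** Path where winutils.exe is expected. An unset home shows up as the literal text "null". */
  def winutilsPath(home: Option[String]): String =
    home.getOrElse("null") + "\\bin\\winutils.exe"

  def main(args: Array[String]): Unit = {
    val home  = resolveHadoopHome(sys.props.get("hadoop.home.dir"), sys.env.get("HADOOP_HOME"))
    val path  = winutilsPath(home)
    val found = home.isDefined && new File(path).isFile
    println(s"winutils.exe expected at: $path (found: $found)")
  }
}
```

Running this from IDEA before the Spark job should show quickly whether the environment variable is actually visible to the JVM.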

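2. Copy hive-site.xml (from hive/conf), core-site.xml (from the Hadoop conf directory) and hdfs-site.xml (from the HDFS conf directory) into the project's resources directory

The hive-site.xml file looks like this: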

    <configuration>
      <property>
        <name>javax.jdo.option.ConnectionURL</name>
        <value>jdbc:mysql://master:3306/hive?createDatabaseIfNotExist=true</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionDriverName</name>
        <value>com.mysql.jdbc.Driver</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionUserName</name>
        <value>root</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionPassword</name>
        <value>hadoop</value>
      </property>
      <property>
        <name>hive.metastore.warehouse.dir</name>
        <value>/usr/hive/warehouse</value>
      </property>
      <property>
        <name>hive.exec.scratchdir</name>
        <value>/usr/hive/tmp</value>
      </property>
      <property>
        <name>hive.metastore.uris</name>
        <value>thrift://master:9083</value>
      </property>
      <property>
        <name>hive.cli.print.current.db</name>
        <value>true</value>
      </property>
    </configuration>

3. Configure pom.xml

The pom.xml contents are listed below, with coordinates written as groupId:artifactId:version.

Project: com.badou:spark11Pro:1.0-SNAPSHOT (modelVersion 4.0.0).

Properties: the properties block sets, in order, the values UTF-8, UTF-8, 2.11, 64m, 512m, 2.0.0 and 2.11. Of these, the dependency list references scala.version (2.11) and spark.version (2.0.0).

Dependencies:

- org.apache.spark:spark-core_${scala.version}:${spark.version}
- org.apache.spark:spark-streaming_${scala.version}:${spark.version}
- org.apache.spark:spark-sql_${scala.version}:${spark.version}
- org.apache.spark:spark-hive_${scala.version}:${spark.version}
- org.apache.spark:spark-mllib_${scala.version}:${spark.version}
- org.apache.spark:spark-streaming-kafka-0-8_${scala.version}:${spark.version}
- com.alibaba:fastjson:1.2.17
- com.huaban:jieba-analysis:1.0.2
- mysql:mysql-connector-java:5.1.31
- org.scalatest:scalatest_2.11:3.2.0-SNAP5 (scope test)
- junit:junit:4.12 (scope test)
- org.apache.hadoop:hadoop-client:2.6.1
- org.apache.hadoop:hadoop-common:2.6.1
- org.apache.hadoop:hadoop-hdfs:2.6.1
- org.apache.hbase:hbase-server:1.3.1
- org.apache.hbase:hbase-client:1.3.1 (with the exclusions listed below)
- org.apache.hbase:hbase-protocol:1.3.1
- org.apache.hbase:hbase-annotations:1.3.1 (type test-jar, scope test)
- org.apache.hbase:hbase-hadoop-compat:1.3.1 (scope test, type test-jar, with the exclusions listed below)
- org.apache.hbase:hbase-hadoop2-compat:1.3.1 (scope test, type test-jar, with the exclusions listed below)
- org.apache.hbase:hbase-client:1.3.1 and org.apache.hbase:hbase-server:1.3.1 (listed a second time)

Exclusions applied to hbase-client, hbase-hadoop-compat and hbase-hadoop2-compat (groupId:artifactId):

- log4j:log4j
- org.apache.thrift:thrift
- org.jruby:jruby-complete (listed twice)
- org.slf4j:slf4j-log4j12
- org.mortbay.jetty:jsp-2.1
- org.mortbay.jetty:jsp-api-2.1
- org.mortbay.jetty:servlet-api-2.5
- com.sun.jersey:jersey-core
- com.sun.jersey:jersey-json
- com.sun.jersey:jersey-server
- org.mortbay.jetty:jetty
- org.mortbay.jetty:jetty-util
- tomcat:jasper-runtime
- tomcat:jasper-compiler
- org.jboss.netty:netty
- io.netty:netty

Build plugins:

- org.scala-tools:maven-scala-plugin:2.15.2 (goals compile and testCompile)
- maven-compiler-plugin:3.6.0 (two settings of 1.8, i.e. Java 1.8)
- org.apache.maven.plugins:maven-assembly-plugin:2.3 (jar-with-dependencies)
- org.apache.maven.plugins:maven-surefire-plugin:2.19 (one option set to true)

The build section ends with the value compile.
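Before running the job, it can help to confirm that the configuration files from step 2 are really on the classpath and that hive.metastore.uris has the expected value. The following is a minimal sketch using only JDK classes; the object name HiveSiteCheck and the helper readProperties are hypothetical, and the parser assumes the usual Hadoop-style layout of property elements with name and value children.

```scala
import java.io.InputStream
import javax.xml.parsers.DocumentBuilderFactory
import org.w3c.dom.Element

object HiveSiteCheck {
  /** Reads every <property><name>..</name><value>..</value></property> pair into a Map. */
  def readProperties(in: InputStream): Map[String, String] = {
    val doc   = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(in)
    val props = doc.getElementsByTagName("property")
    (0 until props.getLength).map { i =>
      val p = props.item(i).asInstanceOf[Element]
      def text(tag: String): String = p.getElementsByTagName(tag).item(0).getTextContent.trim
      text("name") -> text("value")
    }.toMap
  }

  def main(args: Array[String]): Unit = {
    val loader = getClass.getClassLoader
    // Report whether each file copied into resources is visible at runtime.
    Seq("hive-site.xml", "core-site.xml", "hdfs-site.xml").foreach { name =>
      val location = Option(loader.getResource(name)).map(_.toString).getOrElse("NOT FOUND on classpath")
      println(name + " -> " + location)
    }
    // Print the metastore URI that Spark will pick up, if hive-site.xml is present.
    Option(loader.getResourceAsStream("hive-site.xml")).foreach { in =>
      try println("hive.metastore.uris = " + readProperties(in).getOrElse("hive.metastore.uris", "<not set>"))
      finally in.close()
    }
  }
}
```

If hive-site.xml is reported as not found, Spark will not be able to see the remote metastore configuration at all.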

4. Write the code

import org.apache.spark.sql.SparkSession

object TestFunc {

def main(args: Array[String]): Unit = {

// 实例化sparksession 在client端自动实例化sparksession

// Spark session available as 'spark'.

System.setProperty("hadoop.home.dir", "E:\\大数据\\hadoop-common")

val spark = SparkSession

.builder()

.appName("test")

.master("local[2]")

.enableHiveSupport()

.getOrCreate()

val df = spark.sql("select * from hive.orders")

val priors = spark.sql("select * from hive.order_products_prior")

import spark.implicits._

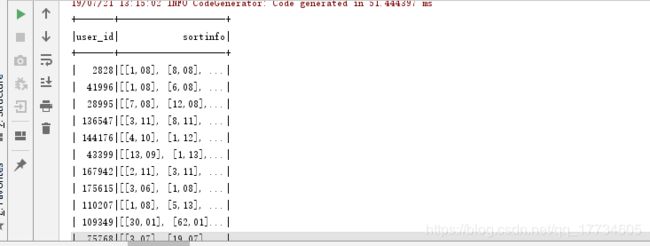

val orderNumbersort=df.select("user_id","order_number","order_hour_of_day").rdd

.map(x=>(x(0).toString,(x(1).toString,x(2).toString))).groupByKey()

orderNumbersort.mapValues(_.toArray.sortWith(_._2<_._2)).toDF("user_id","sortinfo").show()

}

}
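The group-then-sort step in the code above compares order_hour_of_day as strings, so "10" sorts before "9". The sketch below reproduces the same logic on an in-memory list with plain Scala collections (no Spark needed) and sorts numerically instead; the object name SortPerUser and the sample rows are made up for illustration.

```scala
object SortPerUser {
  // (user_id, order_number, order_hour_of_day), all strings, as in the RDD above.
  type Row = (String, String, String)

  /** Groups rows by user_id and sorts each user's (order_number, hour) pairs by hour as a number. */
  def sortPerUser(rows: Seq[Row]): Map[String, Seq[(String, String)]] =
    rows.groupBy(_._1).map { case (user, userRows) =>
      user -> userRows.map(r => (r._2, r._3)).sortBy(_._2.toInt)
    }

  def main(args: Array[String]): Unit = {
    // Hypothetical sample data, not taken from the real orders table.
    val rows = Seq(("1", "1", "10"), ("1", "2", "9"), ("2", "1", "8"))
    sortPerUser(rows).foreach { case (user, pairs) =>
      println(user + " -> " + pairs.mkString(", "))
    }
  }
}
```

In the Spark version, the equivalent change would be to compare `_._2.toInt` inside sortWith, assuming the column always holds integers.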

Problems encountered along the way

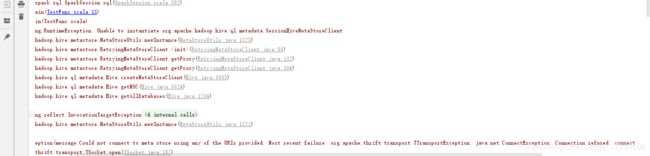

1.Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

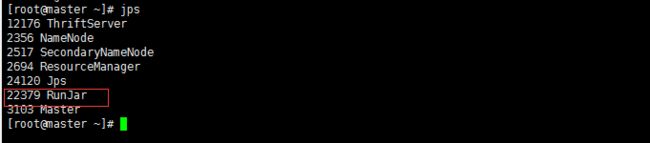

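Although this is the error reported, the stack trace further down shows that the real problem is a failed connection. The first thing to check is whether the Hive metastore service is running; here it was. (If it is not, start it with ./bin/hive --service metastore &.)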

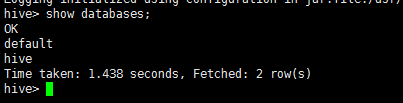
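Whether the metastore is reachable from the Windows machine can also be checked directly, independent of Spark. Here is a minimal sketch that opens a plain TCP connection with a timeout; the host master and port 9083 come from hive.metastore.uris in hive-site.xml, while the object name MetastorePortCheck and the 3-second default timeout are assumptions for this example.

```scala
import java.io.IOException
import java.net.{InetSocketAddress, Socket}

object MetastorePortCheck {
  /** Returns true if a TCP connection to host:port succeeds within timeoutMs. */
  def canConnect(host: String, port: Int, timeoutMs: Int = 3000): Boolean = {
    val socket = new Socket()
    try {
      socket.connect(new InetSocketAddress(host, port), timeoutMs)
      true
    } catch {
      // Covers refused connections, timeouts and unresolvable host names.
      case _: IOException => false
    } finally {
      socket.close()
    }
  }

  def main(args: Array[String]): Unit = {
    println("metastore reachable: " + canConnect("master", 9083))
  }
}
```

A false result points at the network, the hosts file, a firewall, or a metastore that is not running, rather than at the Spark code.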

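Since the service is running, the next thing to check is whether the database exists.

Comparing against hive-site.xml shows the mismatch: the connection URL in hive-site.xml pointed at a database named hives, which does not exist. After changing it to hive and running again, a different error appears: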

Access denied for user 'root'@'192.168.17.1' (using password: YES)

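The client IP has no permission to connect, so it has to be granted access in the database.

Looking at the user table confirms that this IP is not listed: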

    [root@master apache-hive-1.2.2-bin]# mysql -u root -p
    mysql> select host,user,password from mysql.user;
    +---------------+------+-------------------------------------------+
    | host          | user | password                                  |
    +---------------+------+-------------------------------------------+
    | localhost     | root | *B34D36DA2C3ADBCCB80926618B9507F5689964B6 |
    | master        | root | *B34D36DA2C3ADBCCB80926618B9507F5689964B6 |
    | %             | root | *B34D36DA2C3ADBCCB80926618B9507F5689964B6 |
    | ::1           | root |                                           |
    | localhost     |      |                                           |
    | master        |      |                                           |
    | slave1        | root | *FD571203974BA9AFE270FE62151AE967ECA5E0AA |
    | slave2        | root | *FD571203974BA9AFE270FE62151AE967ECA5E0AA |
    +---------------+------+-------------------------------------------+
    10 rows in set (0.04 sec)

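Add a row for that host. The value passed to PASSWORD() has to be the password the client actually sends, which here is the javax.jdo.option.ConnectionPassword from hive-site.xml:

    INSERT INTO mysql.user(Host,User,Password,ssl_cipher,x509_issuer,x509_subject) VALUES("192.168.17.1","root",PASSWORD("root"),"","","");

Then reload the privilege tables:

    mysql> flush privileges;

Restart MySQL so that the change takes effect:

    [root@Tony_ts_tian bin]# service mysqld restart

Then check the user table again: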

    mysql> select host,user,password from mysql.user;
    +---------------+------+-------------------------------------------+
    | host          | user | password                                  |
    +---------------+------+-------------------------------------------+
    | localhost     | root | *B34D36DA2C3ADBCCB80926618B9507F5689964B6 |
    | master        | root | *B34D36DA2C3ADBCCB80926618B9507F5689964B6 |
    | %             | root | *B34D36DA2C3ADBCCB80926618B9507F5689964B6 |
    | ::1           | root |                                           |
    | localhost     |      |                                           |
    | master        |      |                                           |
    | slave1        | root | *FD571203974BA9AFE270FE62151AE967ECA5E0AA |
    | slave2        | root | *FD571203974BA9AFE270FE62151AE967ECA5E0AA |
    | 192.168.17.1  | root | *B34D36DA2C3ADBCCB80926618B9507F5689964B6 |
    +---------------+------+-------------------------------------------+
    10 rows in set (0.04 sec)

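Once the host is allowed, a further error can appear:

Access denied for user 'xiaoqiu'@'%' to database 'hive'

This means the account can log in but has no privileges on the hive database. Grant them; the command below is for root, so substitute the account named in the error if it differs:

    grant all privileges on hive.* to 'root'@'%';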
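The two MySQL errors above can be told apart by their vendor error codes: 1045 is "Access denied for user ... (using password: ...)" and 1044 is "Access denied for user ... to database ...". Below is a minimal sketch that tries the metastore's JDBC connection from the Windows machine and prints a hint. The URL, user and password are the values from hive-site.xml; the object name MetastoreDbCheck and the hint texts are made up for this example, and it assumes mysql-connector-java from the pom is on the classpath.

```scala
import java.sql.{DriverManager, SQLException}

object MetastoreDbCheck {
  /** Maps the MySQL vendor error code to a short hint about what to fix. */
  def hint(e: SQLException): String = e.getErrorCode match {
    case 1045 => "Login rejected: check the host/user row in mysql.user and the password."
    case 1044 => "Login accepted but no rights on the database: run a GRANT on hive.* for this account."
    case _    => "Other failure: " + e.getMessage
  }

  def main(args: Array[String]): Unit = {
    // Values taken from hive-site.xml (javax.jdo.option.* properties).
    val url = "jdbc:mysql://master:3306/hive"
    try {
      val conn = DriverManager.getConnection(url, "root", "hadoop")
      try println("Connected to " + conn.getMetaData.getURL)
      finally conn.close()
    } catch {
      case e: SQLException => println(hint(e))
    }
  }
}
```

Running this after each change to the user table or grants shows whether the fix worked without having to start a full Spark job.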