Thoughts on how to view Greenplum logs

This post aims to make working with GP logs less cumbersome: when something goes wrong, how can you view the logs quickly and conveniently?

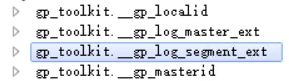

GP lets you query the database logs through external tables.

The external table for the segment logs is defined as follows:

CREATE READABLE EXTERNAL WEB TABLE gp_toolkit.__gp_log_segment_ext
(
logtime TIMESTAMP WITH TIME ZONE,
loguser TEXT,
logdatabase TEXT,
logpid TEXT,
logthread TEXT,
loghost TEXT,
logport TEXT,
logsessiontime TIMESTAMP WITH TIME ZONE,
logtransaction INTEGER,
logsession TEXT,
logcmdcount TEXT,
logsegment TEXT,
logslice TEXT,
logdistxact TEXT,
loglocalxact TEXT,
logsubxact TEXT,
logseverity TEXT,
logstate TEXT,
logmessage TEXT,
logdetail TEXT,
loghint TEXT,
logquery TEXT,
logquerypos INTEGER,
logcontext TEXT,
logdebug TEXT,
logcursorpos INTEGER,
logfunction TEXT,
logfile TEXT,
logline INTEGER,
logstack TEXT
)
EXECUTE E'cat $GP_SEG_DATADIR/pg_log/*.csv' ON ALL -- for the master log, use "ON MASTER"
FORMAT 'CSV' (delimiter ',' null '' escape '"' quote '"')
ENCODING 'UTF8';

For the meaning of each column, see: https://yq.aliyun.com/articles/698117

The gp_log_database view is built on a UNION ALL of the master and segment external tables.

In practice, GP csvlogs are huge, around 80 GB per day, so querying them directly is not feasible. Instead, use sed to pull out a short time window:

sed -n '/2019-12-01 14:07:20/,/2019-12-01 14:10:36/p' gpdb-2019-12-01_000000.csv

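The output is not very friendly, though. Could the location in the external table (the path in its EXECUTE command) be changed to point at one specific CSV file instead? TODO.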
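Part of why the sed output is unfriendly: a range such as /A/,/B/p starts at the first line matching A and stops at the next line matching B, so if no line carries exactly the end timestamp, sed keeps printing to the end of the file, and if no line carries the start timestamp, nothing is printed at all. A minimal sketch of a more forgiving filter compares timestamps as strings in awk instead. It assumes each log record starts on a line beginning with its logtime in YYYY-MM-DD hh:mm:ss form (the first column of the external table above) and that any other line is a continuation of a multi-line quoted field; a continuation line that happens to begin with a timestamp would be misread. The helper name log_window is hypothetical.

```shell
#!/bin/sh
# log_window FILE START END   (hypothetical helper, not a Greenplum tool)
# Print the CSV log records whose logtime falls in [START, END], where START
# and END use the 'YYYY-MM-DD hh:mm:ss' form. Only the first 19 characters of
# a record are compared, so END is inclusive to the second.
# Example (assumes the file sits in the master pg_log directory):
#   log_window "$MASTER_DATA_DIRECTORY/pg_log/gpdb-2019-12-01_000000.csv" \
#       '2019-12-01 14:07:20' '2019-12-01 14:10:36'
log_window() {
    awk -v start="$2" -v end="$3" '
        # A line starting with a timestamp begins a new record: decide
        # whether this record (and its continuation lines) is kept.
        /^[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9] [0-9][0-9]:[0-9][0-9]:[0-9][0-9]/ {
            ts = substr($0, 1, 19)
            keep = (ts >= start && ts <= end)
        }
        # Other lines are assumed to continue a multi-line quoted field,
        # so they inherit the decision made for the current record.
        keep { print }
    ' "$1"
}
```

Because the output is still raw CSV, a script like this could in principle replace cat in the external table's EXECUTE command (installed on every host), which is one possible route for the TODO above; this has not been verified.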

SELECT gp_log_system.logtime, gp_log_system.loguser, gp_log_system.logdatabase, gp_log_system.logpid, gp_log_system.logthread, gp_log_system.loghost, gp_log_system.logport, gp_log_system.logsessiontime, gp_log_system.logtransaction, gp_log_system.logsession, gp_log_system.logcmdcount, gp_log_system.logsegment, gp_log_system.logslice, gp_log_system.logdistxact, gp_log_system.loglocalxact, gp_log_system.logsubxact, gp_log_system.logseverity, gp_log_system.logstate, gp_log_system.logmessage, gp_log_system.logdetail, gp_log_system.loghint, gp_log_system.logquery, gp_log_system.logquerypos, gp_log_system.logcontext, gp_log_system.logdebug, gp_log_system.logcursorpos, gp_log_system.logfunction, gp_log_system.logfile, gp_log_system.logline, gp_log_system.logstack

FROM gp_toolkit.gp_log_system

WHERE gp_log_system.logdatabase = current_database()::text limit 1;

The gp_log_system view is defined as follows:

SELECT
__gp_log_segment_ext.logtime, __gp_log_segment_ext.loguser, __gp_log_segment_ext.logdatabase,
__gp_log_segment_ext.logpid, __gp_log_segment_ext.logthread, __gp_log_segment_ext.loghost,
__gp_log_segment_ext.logport, __gp_log_segment_ext.logsessiontime, __gp_log_segment_ext.logtransaction,
__gp_log_segment_ext.logsession, __gp_log_segment_ext.logcmdcount, __gp_log_segment_ext.logsegment,
__gp_log_segment_ext.logslice, __gp_log_segment_ext.logdistxact, __gp_log_segment_ext.loglocalxact,
__gp_log_segment_ext.logsubxact, __gp_log_segment_ext.logseverity, __gp_log_segment_ext.logstate,
__gp_log_segment_ext.logmessage, __gp_log_segment_ext.logdetail, __gp_log_segment_ext.loghint,
__gp_log_segment_ext.logquery, __gp_log_segment_ext.logquerypos, __gp_log_segment_ext.logcontext,
__gp_log_segment_ext.logdebug, __gp_log_segment_ext.logcursorpos, __gp_log_segment_ext.logfunction,
__gp_log_segment_ext.logfile, __gp_log_segment_ext.logline, __gp_log_segment_ext.logstack
FROM ONLY gp_toolkit.__gp_log_segment_ext
UNION ALL
SELECT
__gp_log_master_ext.logtime, __gp_log_master_ext.loguser, __gp_log_master_ext.logdatabase,
__gp_log_master_ext.logpid, __gp_log_master_ext.logthread, __gp_log_master_ext.loghost,
__gp_log_master_ext.logport, __gp_log_master_ext.logsessiontime, __gp_log_master_ext.logtransaction,
__gp_log_master_ext.logsession, __gp_log_master_ext.logcmdcount, __gp_log_master_ext.logsegment,
__gp_log_master_ext.logslice, __gp_log_master_ext.logdistxact, __gp_log_master_ext.loglocalxact,
__gp_log_master_ext.logsubxact, __gp_log_master_ext.logseverity, __gp_log_master_ext.logstate,
__gp_log_master_ext.logmessage, __gp_log_master_ext.logdetail, __gp_log_master_ext.loghint,
__gp_log_master_ext.logquery, __gp_log_master_ext.logquerypos, __gp_log_master_ext.logcontext,
__gp_log_master_ext.logdebug, __gp_log_master_ext.logcursorpos, __gp_log_master_ext.logfunction,
__gp_log_master_ext.logfile, __gp_log_master_ext.logline, __gp_log_master_ext.logstack
FROM ONLY gp_toolkit.__gp_log_master_ext
ORDER BY 1

ERROR: XX000: could not write 32768 bytes to temporary file: No space left on device (buffile.c:405)

Out of curiosity: why would a SQL query drive disk usage this high?

For reference, see this article by a more experienced colleague:

http://blog.sina.com.cn/s/blog_6b1dfc870102vj35.html
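One plausible explanation, offered as an assumption rather than a confirmed diagnosis: the gp_log_system definition above ends with ORDER BY 1, so a query through it has to sort the rows it reads from the CSV logs, and a sort that does not fit in memory spills to temporary files, which is where buffile.c raised the error. With roughly 80 GB of logs per day, such a spill can plausibly fill the disk. To gauge how much log data sits under one data directory, a hypothetical helper, csv_log_bytes, can add up the CSV sizes:

```shell
#!/bin/sh
# csv_log_bytes DIR   (hypothetical helper, not a Greenplum tool)
# Print the total size in bytes of DIR/*.csv, i.e. roughly how much data the
# log external tables would read from this directory.
# Example: csv_log_bytes "$MASTER_DATA_DIRECTORY/pg_log"
csv_log_bytes() {
    total=0
    for f in "$1"/*.csv; do
        [ -f "$f" ] || continue            # the glob matched nothing
        size=$(wc -c < "$f" | tr -d ' ')   # some wc versions pad with spaces
        total=$((total + size))
    done
    echo "$total"
}
```

On segment hosts the pg_log directories depend on the local layout; the /gpdata/*/pg_log pattern in the gplogfilter examples is one such layout, and each directory could be checked in a loop through gpssh.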

-----------------------------------update 2020-01-07 10:27:52------------------------------------------------

GP log-related parameters

Log rotation

- log_rotation_age Automatic log file rotation will occur after N minutes

- log_rotation_size Automatic log file rotation will occur after N kilobytes

- log_truncate_on_rotation Truncate existing log files of same name during log rotation

Log levels

- client_min_messages Sets the message levels that are sent to the client

- log_error_verbosity

- log_min_duration_statement

- log_min_error_statement

- log_min_messages

Log content

- debug_pretty_print

- debug_print_parse

- debug_print_plan

- debug_print_prelim_plan

- debug_print_rewritten

- debug_print_slice_table

- log_autostats

- log_connections

- log_disconnections

- log_dispatch_stats

- log_duration Logs the duration of each completed SQL statement.

- log_executor_stats

- log_hostname Logs the host name in the connection logs

- log_parser_stats

- log_planner_stats

- log_statement Sets the type of statements logged

- log_statement_stats Writes cumulative performance statistics to the server log

- log_timezone Sets the time zone to use in log messages

- gp_debug_linger

- gp_log_format Sets the format for log files.

- gp_max_csv_line_length Maximum allowed length of a csv input data row in bytes

- gp_reraise_signal Do we attempt to dump core when a serious problem occurs.
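The rotation parameters above are one lever on the 80 GB/day problem: smaller files leave less for sed, gplogfilter, or an external table pointed at a single file to scan. A sketch using gpconfig (-s shows a parameter; -c with -v sets it on the master and all segments) followed by gpstop -u to reload the configuration. The values are placeholders for illustration, not recommendations, and are worth checking against the Greenplum version in use.

```shell
# Show the current values on the master and all segments
gpconfig -s log_rotation_size
gpconfig -s log_rotation_age

# Example values only: rotate after 256 MB (value in kilobytes)
# or after 60 minutes (value in minutes), whichever comes first
gpconfig -c log_rotation_size -v 262144
gpconfig -c log_rotation_age -v 60

# Reload the configuration files without restarting the cluster
gpstop -u
```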

---------------------------------update 2020-02-19 22:36:40-----------------------------------------

gplogfilter manual

COMMAND NAME: gplogfilter

Searches through Greenplum Database log files for specified entries.

*****************************************************

SYNOPSIS

*****************************************************

gplogfilter [timestamp_options] [pattern_matching_options] [output_options] [input_options] [input_file]

gplogfilter --help

gplogfilter --version

*****************************************************

DESCRIPTION

*****************************************************

The gplogfilter utility can be used to search through a Greenplum Database log file for entries matching the specified criteria. If an input file is not supplied, then gplogfilter will use the $MASTER_DATA_DIRECTORY environment variable to locate the Greenplum master log file in the standard logging location. To read from standard input, use a dash (-) as the input file name. Input files may be compressed using gzip. In an input file, a log entry is identified by its timestamp in YYYY-MM-DD [hh:mm[:ss]] format.

You can also use gplogfilter to search through all segment log files at once by running it through the gpssh utility. For example, to display the last three lines of each segment log file:

gpssh -f seg_host_file

=> source /usr/local/greenplum-db/greenplum_path.sh

=> gplogfilter -n 3 /gpdata/*/pg_log/gpdb*.csv

By default, the output of gplogfilter is sent to standard output. Use the -o option to send the output to a file or a directory. If you supply an output file name ending in .gz, the output file will be compressed by default using maximum compression. If the output destination is a directory, the output file is given the same name as the input file.

*****************************************************

OPTIONS

*****************************************************

TIMESTAMP OPTIONS

******************

-b datetime | --begin=datetime

Specifies a starting date and time to begin searching for log entries in the format of YYYY-MM-DD [hh:mm[:ss]]. If a time is specified, the date and time must be enclosed in either single or double quotes. This example encloses the date and time in single quotes:

gplogfilter -b '2013-05-23 14:33'

-e datetime | --end=datetime

Specifies an ending date and time to stop searching for log entries in the format of YYYY-MM-DD [hh:mm[:ss]]. If a time is specified, the date and time must be enclosed in either single or double quotes. This example encloses the date and time in single quotes:

gplogfilter -e '2013-05-23 14:33'

-d time | --duration=time

Specifies a time duration to search for log entries in the format of [hh][:mm[:ss]]. If used without either the -b or -e option, will use the current time as a basis.

PATTERN MATCHING OPTIONS

*************************

-c i[gnore]|r[espect] | --case=i[gnore]|r[espect]

Matching of alphabetic characters is case sensitive by default unless preceded by the --case=ignore option.

-C 'string' | --columns='string'

Selects specific columns from the log file. Specify the desired columns as a comma-delimited string of column numbers beginning with 1, where the second column from left is 2, the third is 3, and so on. See the "Greenplum Database System Administrator Guide" for details about the log file format and for a list of the available columns and their associated number.

-f 'string' | --find='string'

Finds the log entries containing the specified string.

-F 'string' | --nofind='string'

Rejects the log entries containing the specified string.

-m regex | --match=regex

Finds log entries that match the specified Python regular expression. See http://docs.python.org/library/re.html for Python regular expression syntax.

-M regex | --nomatch=regex

Rejects log entries that match the specified Python regular expression. See http://docs.python.org/library/re.html for Python regular expression syntax.

-t | --trouble

Finds only the log entries that have ERROR:, FATAL:, or PANIC: in the first line.

OUTPUT OPTIONS

***************

-n integer | --tail=integer

Limits the output to the last integer of qualifying log entries found.

-s offset [limit] | --slice=offset [limit]

From the list of qualifying log entries, returns the limit number of entries starting at the offset entry number, where an offset of zero (0) denotes the first entry in the result set and an offset of any number greater than zero counts back from the end of the result set.

-o output_file | --out=output_file

Writes the output to the specified file or directory location instead of STDOUT.

-z 0-9 | --zip=0-9

Compresses the output file to the specified compression level using gzip, where 0 is no compression and 9 is maximum compression. If you supply an output file name ending in .gz, the output file will be compressed by default using maximum compression.

-a | --append

If the output file already exists, appends to the file instead of overwriting it.

INPUT OPTIONS

**************

input_file

The name of the input log file(s) to search through. If an input file is not supplied, gplogfilter will use the $MASTER_DATA_DIRECTORY environment variable to locate the Greenplum master log file. To read from standard input, use a dash (-) as the input file name.

-u | --unzip

Uncompress the input file using gunzip. If the input file name ends in .gz, it will be uncompressed by default.

--help

Displays the online help.

--version

Displays the version of this utility.

*****************************************************

EXAMPLES

*****************************************************

Display the last three error messages in the master log file:

gplogfilter -t -n 3

Display all log messages in the master log file timestamped in the last 10 minutes:

gplogfilter -d :10

Display log messages in the master log file containing the string '|con6 cmd11|':

gplogfilter -f '|con6 cmd11|'

Using gpssh, run gplogfilter on the segment hosts and search for log messages in the segment log files containing the string 'con6' and save output to a file:

gpssh -f seg_hosts_file -e 'source /usr/local/greenplum-db/greenplum_path.sh ; gplogfilter -f con6 /gpdata/*/pg_log/gpdb*.csv' > seglog.out

*****************************************************

SEE ALSO

*****************************************************

gpssh, gpscp
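Applied to the original problem of pulling a short time window out of an 80 GB/day log, the -b/-e/-t options above can stand in for the sed range. A sketch that reuses the file name from the sed example; the master pg_log location, seg_hosts_file, and the /gpdata layout (taken from the manual's own examples) are assumptions about the local setup.

```shell
# Master log: entries between 14:07:20 and 14:10:36 that have ERROR:, FATAL: or PANIC:
gplogfilter -t -b '2019-12-01 14:07:20' -e '2019-12-01 14:10:36' \
    "$MASTER_DATA_DIRECTORY/pg_log/gpdb-2019-12-01_000000.csv"

# Same time window on every segment host, collected into one local file
gpssh -f seg_hosts_file -e 'source /usr/local/greenplum-db/greenplum_path.sh ; gplogfilter -b "2019-12-01 14:07:20" -e "2019-12-01 14:10:36" /gpdata/*/pg_log/gpdb-2019-12-01_*.csv' > seglog_window.out
```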

-------------------------------------update 2020-03-11 18:19:45------------------------------------------

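pgbadger is a good option for visually analyzing GP logs.

It now works end to end in the test environment, and its reports are good enough for day-to-day database performance analysis.

Really happy to have finally gotten pgbadger working. Next step: change the parameters in production and roll it out. Once it is deployed, I will come back and write another post.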