ORBSLAM2理论与实战(12) 单目初始化initializer类

Initializer类主要负责SLAM系统的初始化,在ORBSLAM2中初始化非常重要。主要是单目初始化问题,这里同时计算两个模型:用于平面场景的单应性矩阵H和用于非平面场景的基础矩阵F,然后通过一个评分规则来选择合适的模型,恢复相机的旋转矩阵R和平移向量t。

/**

* @brief 单目的地图初始化

*

* 并行地计算基础矩阵和单应性矩阵,选取其中一个模型,恢复出最开始两帧之间的相对姿态以及点云

* 得到初始两帧的匹配、相对运动、初始MapPoints

*/

void Tracking::MonocularInitialization()

{

// 如果单目初始器还没有被创建,则创建单目初始器

if(!mpInitializer)

{

// Set Reference Frame

// 单目初始帧的特征点数必须大于100

if(mCurrentFrame.mvKeys.size()>100)

{

// 步骤1:得到用于初始化的第一帧,初始化需要两帧

mInitialFrame = Frame(mCurrentFrame);

// 记录最近的一帧

mLastFrame = Frame(mCurrentFrame);

// mvbPrevMatched最大的情况就是所有特征点都被跟踪上

//mvbPrevMatched 存放在前一个关键帧的特征点 2019.05.13 lishuwei

mvbPrevMatched.resize(mCurrentFrame.mvKeysUn.size());

for(size_t i=0; i(NULL);

fill(mvIniMatches.begin(),mvIniMatches.end(),-1);

return;

}

// Find correspondences

// 步骤3:在mInitialFrame与mCurrentFrame中找匹配的特征点对

// mvbPrevMatched为前一帧的特征点,存储了mInitialFrame中哪些点将进行接下来的匹配

// mvIniMatches存储mInitialFrame,mCurrentFrame之间匹配的特征点

ORBmatcher matcher(0.9,true);

int nmatches = matcher.SearchForInitialization(mInitialFrame,mCurrentFrame,mvbPrevMatched,mvIniMatches,100);

// Check if there are enough correspondences

// 步骤4:如果初始化的两帧之间的匹配点太少,重新初始化

if(nmatches<100)

{

delete mpInitializer;

mpInitializer = static_cast(NULL);

return;

}

cv::Mat Rcw; // Current Camera Rotation

cv::Mat tcw; // Current Camera Translation

vector vbTriangulated; // Triangulated Correspondences (mvIniMatches)

// 步骤5:通过H模型或F模型进行单目初始化,得到两帧间相对运动、初始MapPoints

if(mpInitializer->Initialize(mCurrentFrame, mvIniMatches, Rcw, tcw, mvIniP3D, vbTriangulated))

{

// 步骤6:删除那些无法进行三角化的匹配点

for(size_t i=0, iend=mvIniMatches.size(); i=0 && !vbTriangulated[i])

{

mvIniMatches[i]=-1;

nmatches--;

}

}

// Set Frame Poses

// 将初始化的第一帧作为世界坐标系,因此第一帧变换矩阵为单位矩阵

mInitialFrame.SetPose(cv::Mat::eye(4,4,CV_32F));

// 由Rcw和tcw构造Tcw,并赋值给mTcw,mTcw为世界坐标系到该帧的变换矩阵

cv::Mat Tcw = cv::Mat::eye(4,4,CV_32F);

Rcw.copyTo(Tcw.rowRange(0,3).colRange(0,3));

tcw.copyTo(Tcw.rowRange(0,3).col(3));

mCurrentFrame.SetPose(Tcw);

// 步骤6:将三角化得到的3D点包装成MapPoints

// Initialize函数会得到mvIniP3D,

// mvIniP3D是cv::Point3f类型的一个容器,是个存放3D点的临时变量,

// CreateInitialMapMonocular将3D点包装成MapPoint类型存入KeyFrame和Map中

CreateInitialMapMonocular();

}

}

}

大体分为五个步骤:

- 找到初始对应点

- 同时计算两个模型

- 模型选择

- 运动恢复(sfm)

- 集束调整

1.找到初始对应点

int nmatches = matcher.SearchForInitialization(mInitialFrame,mCurrentFrame,mvbPrevMatched,mvIniMatches,100);

在当前帧Fc中提取ORB特征点,与参考帧Fr进行匹配。初始化匹配对数少于100,重新构造参考帧。一直到到满足,实现较为鲁邦的初始化

2.同时计算两个模型

在找到对应点之后,开始调用Initializer.cc中的Initializer::Initialized函数进行初始化工作。为了计算R和t,ORB_SLAM为了针对平面和非平面场景选择最合适的模型,同时开启了两个线程,分别计算单应性矩阵Hcr和基础矩阵Fcr。如下所示:

3.模型选择

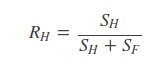

文中认为,当场景是一个平面、或近似为一个平面、或者视差较小的时候,可以使用单应性矩阵H,而使用基础矩阵F恢复运动,需要场景是一个非平面、视差大的场景。这个时候,文中使用下面所示的一个机制,来估计两个模型的优劣:

当RH大于0.45时,选择从单应性变换矩阵还原运动。不过ORB_SLAM2源代码中使用的是0.4作为阈值

4.运动恢复(sfm)

选择好模型后,就可以恢复运动。

5.集束调整

最后使用一个全局集束调整(BA),优化初始化结果。这一部分是在Tracking.cc中的CreateInitialMapMonocular()函数中,使用了如下语句:

Optimizer::GlobalBundleAdjustemnt(mpMap,20);Tracking.cc中的CreateInitialMapMonocular()如下

/**

* @brief CreateInitialMapMonocular

*

* 为单目摄像头三角化生成MapPoints

*/

void Tracking::CreateInitialMapMonocular()

{

// Create KeyFrames

KeyFrame* pKFini = new KeyFrame(mInitialFrame,mpMap,mpKeyFrameDB);

KeyFrame* pKFcur = new KeyFrame(mCurrentFrame,mpMap,mpKeyFrameDB);

// 步骤1:将初始关键帧的描述子转为BoW

pKFini->ComputeBoW();

// 步骤2:将当前关键帧的描述子转为BoW

pKFcur->ComputeBoW();

// Insert KFs in the map

// 步骤3:将关键帧插入到地图

// 凡是关键帧,都要插入地图

mpMap->AddKeyFrame(pKFini);

mpMap->AddKeyFrame(pKFcur);

// Create MapPoints and asscoiate to keyframes

// 步骤4:将3D点包装成MapPoints

for(size_t i=0; iAddMapPoint(pMP,i);

pKFcur->AddMapPoint(pMP,mvIniMatches[i]);

// a.表示该MapPoint可以被哪个KeyFrame的哪个特征点观测到

pMP->AddObservation(pKFini,i);

pMP->AddObservation(pKFcur,mvIniMatches[i]);

// b.从众多观测到该MapPoint的特征点中挑选区分读最高的描述子

pMP->ComputeDistinctiveDescriptors();

// c.更新该MapPoint平均观测方向以及观测距离的范围

pMP->UpdateNormalAndDepth();

//Fill Current Frame structure

mCurrentFrame.mvpMapPoints[mvIniMatches[i]] = pMP;

mCurrentFrame.mvbOutlier[mvIniMatches[i]] = false;

//Add to Map

// 步骤4.4:在地图中添加该MapPoint

mpMap->AddMapPoint(pMP);

}

// Update Connections

// 步骤5:更新关键帧间的连接关系

// 在3D点和关键帧之间建立边,每个边有一个权重,边的权重是该关键帧与当前帧公共3D点的个数

pKFini->UpdateConnections();

pKFcur->UpdateConnections();

// Bundle Adjustment

cout << "New Map created with " << mpMap->MapPointsInMap() << " points" << endl;

// 步骤5:BA优化

Optimizer::GlobalBundleAdjustemnt(mpMap,20);

// Set median depth to 1

// 步骤6:!!!将MapPoints的中值深度归一化到1,并归一化两帧之间变换

// 评估关键帧场景深度,q=2表示中值

float medianDepth = pKFini->ComputeSceneMedianDepth(2);

float invMedianDepth = 1.0f/medianDepth;

if(medianDepth<0 || pKFcur->TrackedMapPoints(1)<100)

{

cout << "Wrong initialization, reseting..." << endl;

Reset();

return;

}

// Scale initial baseline

cv::Mat Tc2w = pKFcur->GetPose();

// x/z y/z 将z归一化到1

Tc2w.col(3).rowRange(0,3) = Tc2w.col(3).rowRange(0,3)*invMedianDepth;

pKFcur->SetPose(Tc2w);

// Scale points

// 把3D点的尺度也归一化到1

vector vpAllMapPoints = pKFini->GetMapPointMatches();

for(size_t iMP=0; iMPSetWorldPos(pMP->GetWorldPos()*invMedianDepth);

}

}

// 这部分和SteroInitialization()相似

mpLocalMapper->InsertKeyFrame(pKFini);

mpLocalMapper->InsertKeyFrame(pKFcur);

mCurrentFrame.SetPose(pKFcur->GetPose());

mnLastKeyFrameId=mCurrentFrame.mnId;

mpLastKeyFrame = pKFcur;

mvpLocalKeyFrames.push_back(pKFcur);

mvpLocalKeyFrames.push_back(pKFini);

mvpLocalMapPoints=mpMap->GetAllMapPoints();

mpReferenceKF = pKFcur;

mCurrentFrame.mpReferenceKF = pKFcur;

mLastFrame = Frame(mCurrentFrame);

mpMap->SetReferenceMapPoints(mvpLocalMapPoints);

mpMapDrawer->SetCurrentCameraPose(pKFcur->GetPose());

mpMap->mvpKeyFrameOrigins.push_back(pKFini);

mState=OK;// 初始化成功,至此,初始化过程完成

}

initializer类

initializer.h

#ifndef INITIALIZER_H

#define INITIALIZER_H

#include

#include "Frame.h"

namespace ORB_SLAM2

{

// THIS IS THE INITIALIZER FOR MONOCULAR SLAM. NOT USED IN THE STEREO OR RGBD CASE.

/**

* @brief 单目SLAM初始化相关,双目和RGBD不会使用这个类

*/

class Initializer

{

typedef pair Match;

public:

// Fix the reference frame

// 用reference frame来初始化,这个reference frame就是SLAM正式开始的第一帧

Initializer(const Frame &ReferenceFrame, float sigma = 1.0, int iterations = 200);

// Computes in parallel a fundamental matrix and a homography

// Selects a model and tries to recover the motion and the structure from motion

// 用current frame,也就是用SLAM逻辑上的第二帧来初始化整个SLAM,得到最开始两帧之间的R t,以及点云

bool Initialize(const Frame &CurrentFrame, const vector &vMatches12,

cv::Mat &R21, cv::Mat &t21, vector &vP3D, vector &vbTriangulated);

private:

// 假设场景为平面情况下通过前两帧求取Homography矩阵(current frame 2 到 reference frame 1),并得到该模型的评分

void FindHomography(vector &vbMatchesInliers, float &score, cv::Mat &H21);

// 假设场景为非平面情况下通过前两帧求取Fundamental矩阵(current frame 2 到 reference frame 1),并得到该模型的评分

void FindFundamental(vector &vbInliers, float &score, cv::Mat &F21);

// 被FindHomography函数调用具体来算Homography矩阵

cv::Mat ComputeH21(const vector &vP1, const vector &vP2);

// 被FindFundamental函数调用具体来算Fundamental矩阵

cv::Mat ComputeF21(const vector &vP1, const vector &vP2);

// 被FindHomography函数调用,具体来算假设使用Homography模型的得分

float CheckHomography(const cv::Mat &H21, const cv::Mat &H12, vector &vbMatchesInliers, float sigma);

// 被FindFundamental函数调用,具体来算假设使用Fundamental模型的得分

float CheckFundamental(const cv::Mat &F21, vector &vbMatchesInliers, float sigma);

// 分解F矩阵,并从分解后的多个解中找出合适的R,t

bool ReconstructF(vector &vbMatchesInliers, cv::Mat &F21, cv::Mat &K,

cv::Mat &R21, cv::Mat &t21, vector &vP3D, vector &vbTriangulated, float minParallax, int minTriangulated);

// 分解H矩阵,并从分解后的多个解中找出合适的R,t

bool ReconstructH(vector &vbMatchesInliers, cv::Mat &H21, cv::Mat &K,

cv::Mat &R21, cv::Mat &t21, vector &vP3D, vector &vbTriangulated, float minParallax, int minTriangulated);

// 通过三角化方法,利用反投影矩阵将特征点恢复为3D点

void Triangulate(const cv::KeyPoint &kp1, const cv::KeyPoint &kp2, const cv::Mat &P1, const cv::Mat &P2, cv::Mat &x3D);

// 归一化三维空间点和帧间位移t

void Normalize(const vector &vKeys, vector &vNormalizedPoints, cv::Mat &T);

// ReconstructF调用该函数进行cheirality check,从而进一步找出F分解后最合适的解

int CheckRT(const cv::Mat &R, const cv::Mat &t, const vector &vKeys1, const vector &vKeys2,

const vector &vMatches12, vector &vbInliers,

const cv::Mat &K, vector &vP3D, float th2, vector &vbGood, float ¶llax);

// F矩阵通过结合内参可以得到Essential矩阵,该函数用于分解E矩阵,将得到4组解

void DecomposeE(const cv::Mat &E, cv::Mat &R1, cv::Mat &R2, cv::Mat &t);

// Keypoints from Reference Frame (Frame 1)

vector mvKeys1; ///< 存储Reference Frame中的特征点

// Keypoints from Current Frame (Frame 2)

vector mvKeys2; ///< 存储Current Frame中的特征点

// Current Matches from Reference to Current

// Reference Frame: 1, Current Frame: 2

vector mvMatches12; ///< Match的数据结构是pair,mvMatches12只记录Reference到Current匹配上的特征点对

vector mvbMatched1; ///< 记录Reference Frame的每个特征点在Current Frame是否有匹配的特征点

// Calibration

cv::Mat mK; ///< 相机内参

// Standard Deviation and Variance

float mSigma, mSigma2; ///< 测量误差

// Ransac max iterations

int mMaxIterations; ///< 算Fundamental和Homography矩阵时RANSAC迭代次数

// Ransac sets

vector > mvSets; ///< 二维容器,外层容器的大小为迭代次数,内层容器大小为每次迭代算H或F矩阵需要的点

};

} //namespace ORB_SLAM

#endif // INITIALIZER_H

initializer.cpp

/**

* This file is part of ORB-SLAM2.

*

* Copyright (C) 2014-2016 Raúl Mur-Artal (University of Zaragoza)

* For more information see

*

* ORB-SLAM2 is free software: you can redistribute it and/or modify

* it under the terms of the GNU General Public License as published by

* the Free Software Foundation, either version 3 of the License, or

* (at your option) any later version.

*

* ORB-SLAM2 is distributed in the hope that it will be useful,

* but WITHOUT ANY WARRANTY; without even the implied warranty of

* MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

* GNU General Public License for more details.

*

* You should have received a copy of the GNU General Public License

* along with ORB-SLAM2. If not, see

namespace ORB_SLAM2

{

/**

* @brief 给定参考帧构造Initializer

*

* 用reference frame来初始化,这个reference frame就是SLAM正式开始的第一帧

* @param ReferenceFrame 参考帧

* @param sigma 测量误差

* @param iterations RANSAC迭代次数

*/

Initializer::Initializer(const Frame &ReferenceFrame, float sigma, int iterations)

{

mK = ReferenceFrame.mK.clone();

mvKeys1 = ReferenceFrame.mvKeysUn;

mSigma = sigma;

mSigma2 = sigma*sigma;

mMaxIterations = iterations;

}

/**

* @brief 并行地计算基础矩阵和单应性矩阵,选取其中一个模型,恢复出最开始两帧之间的相对姿态以及点云

*/

bool Initializer::Initialize(const Frame &CurrentFrame, const vector &vMatches12, cv::Mat &R21, cv::Mat &t21,

vector &vP3D, vector &vbTriangulated)

{

// Fill structures with current keypoints and matches with reference frame

// Reference Frame: 1, Current Frame: 2

// Frame2 特征点

mvKeys2 = CurrentFrame.mvKeysUn;

// mvMatches12记录匹配上的特征点对

mvMatches12.clear();

mvMatches12.reserve(mvKeys2.size());

// mvbMatched1记录每个特征点是否有匹配的特征点,

// 这个变量后面没有用到,后面只关心匹配上的特征点

mvbMatched1.resize(mvKeys1.size());

// 步骤1:组织特征点对

for(size_t i=0, iend=vMatches12.size();i=0)

{

mvMatches12.push_back(make_pair(i,vMatches12[i]));

mvbMatched1[i]=true;

}

else

mvbMatched1[i]=false;

}

// 匹配上的特征点的个数

const int N = mvMatches12.size();

// Indices for minimum set selection

// 新建一个容器vAllIndices,生成0到N-1的数作为特征点的索引

vector vAllIndices;

vAllIndices.reserve(N);

vector vAvailableIndices;

for(int i=0; i >(mMaxIterations,vector(8,0));

DUtils::Random::SeedRandOnce(0);

for(int it=0; it vbMatchesInliersH, vbMatchesInliersF;

float SH, SF; // score for H and F

cv::Mat H, F; // H and F

// ref是引用的功能:http://en.cppreference.com/w/cpp/utility/functional/ref

// 计算homograpy并打分

//https://blog.csdn.net/TH_NUM/article/details/81385917 ref 2019.05.13 lishuwei

thread threadH(&Initializer::FindHomography,this,ref(vbMatchesInliersH), ref(SH), ref(H));

// 计算fundamental matrix并打分

thread threadF(&Initializer::FindFundamental,this,ref(vbMatchesInliersF), ref(SF), ref(F));

// Wait until both threads have finished

threadH.join();

threadF.join();

// Compute ratio of scores

// 步骤4:计算得分比例,选取某个模型

float RH = SH/(SH+SF);

// Try to reconstruct from homography or fundamental depending on the ratio (0.40-0.45)

// 步骤5:从H矩阵或F矩阵中恢复R,t

if(RH>0.40)

return ReconstructH(vbMatchesInliersH,H,mK,R21,t21,vP3D,vbTriangulated,1.0,50);

else //if(pF_HF>0.6)

return ReconstructF(vbMatchesInliersF,F,mK,R21,t21,vP3D,vbTriangulated,1.0,50);

return false;

}

/**

* @brief 计算单应矩阵

*

* 假设场景为平面情况下通过前两帧求取Homography矩阵(current frame 2 到 reference frame 1),并得到该模型的评分

*/

void Initializer::FindHomography(vector &vbMatchesInliers, float &score, cv::Mat &H21)

{

// Number of putative matches

const int N = mvMatches12.size();

// Normalize coordinates

// 将mvKeys1和mvKey2归一化到均值为0,一阶绝对矩为1,归一化矩阵分别为T1、T2

vector vPn1, vPn2;

cv::Mat T1, T2;

Normalize(mvKeys1,vPn1, T1);

Normalize(mvKeys2,vPn2, T2);

cv::Mat T2inv = T2.inv();

// Best Results variables

// 最终最佳的MatchesInliers与得分

score = 0.0;

vbMatchesInliers = vector(N,false);

// Iteration variables

vector vPn1i(8);

vector vPn2i(8);

cv::Mat H21i, H12i;

// 每次RANSAC的MatchesInliers与得分

vector vbCurrentInliers(N,false);

float currentScore;

// Perform all RANSAC iterations and save the solution with highest score

for(int it=0; itscore)

{

H21 = H21i.clone();

vbMatchesInliers = vbCurrentInliers;

score = currentScore;

}

}

}

/**

* @brief 计算基础矩阵

*

* 假设场景为非平面情况下通过前两帧求取Fundamental矩阵(current frame 2 到 reference frame 1),并得到该模型的评分

*/

void Initializer::FindFundamental(vector &vbMatchesInliers, float &score, cv::Mat &F21)

{

// Number of putative matches

const int N = vbMatchesInliers.size();

// Normalize coordinates

vector vPn1, vPn2;

cv::Mat T1, T2;

Normalize(mvKeys1,vPn1, T1);

Normalize(mvKeys2,vPn2, T2);

cv::Mat T2t = T2.t();

// Best Results variables

score = 0.0;

vbMatchesInliers = vector(N,false);

// Iteration variables

vector vPn1i(8);

vector vPn2i(8);

cv::Mat F21i;

vector vbCurrentInliers(N,false);

float currentScore;

// Perform all RANSAC iterations and save the solution with highest score

for(int it=0; itscore)

{

F21 = F21i.clone();

vbMatchesInliers = vbCurrentInliers;

score = currentScore;

}

}

}

// |x'| | h1 h2 h3 ||x|

// |y'| = a | h4 h5 h6 ||y| 简写: x' = a H x, a为一个尺度因子

// |1 | | h7 h8 h9 ||1|

// 使用DLT(direct linear tranform)求解该模型

// x' = a H x

// ---> (x') 叉乘 (H x) = 0

// ---> Ah = 0

// A = | 0 0 0 -x -y -1 xy' yy' y'| h = | h1 h2 h3 h4 h5 h6 h7 h8 h9 |

// |-x -y -1 0 0 0 xx' yx' x'|

// 通过SVD求解Ah = 0,A'A最小特征值对应的特征向量即为解

/**

* @brief 从特征点匹配求homography(normalized DLT)

*

* @param vP1 归一化后的点, in reference frame

* @param vP2 归一化后的点, in current frame

* @return 单应矩阵

* @see Multiple View Geometry in Computer Vision - Algorithm 4.2 p109

*/

cv::Mat Initializer::ComputeH21(const vector &vP1, const vector &vP2)

{

const int N = vP1.size();

cv::Mat A(2*N,9,CV_32F); // 2N*9

for(int i=0; i(2*i,0) = 0.0;

A.at(2*i,1) = 0.0;

A.at(2*i,2) = 0.0;

A.at(2*i,3) = -u1;

A.at(2*i,4) = -v1;

A.at(2*i,5) = -1;

A.at(2*i,6) = v2*u1;

A.at(2*i,7) = v2*v1;

A.at(2*i,8) = v2;

A.at(2*i+1,0) = u1;

A.at(2*i+1,1) = v1;

A.at(2*i+1,2) = 1;

A.at(2*i+1,3) = 0.0;

A.at(2*i+1,4) = 0.0;

A.at(2*i+1,5) = 0.0;

A.at(2*i+1,6) = -u2*u1;

A.at(2*i+1,7) = -u2*v1;

A.at(2*i+1,8) = -u2;

}

cv::Mat u,w,vt;

cv::SVDecomp(A,w,u,vt,cv::SVD::MODIFY_A | cv::SVD::FULL_UV);

return vt.row(8).reshape(0, 3); // v的最后一列

}

// x'Fx = 0 整理可得:Af = 0

// A = | x'x x'y x' y'x y'y y' x y 1 |, f = | f1 f2 f3 f4 f5 f6 f7 f8 f9 |

// 通过SVD求解Af = 0,A'A最小特征值对应的特征向量即为解

/**

* @brief 从特征点匹配求fundamental matrix(normalized 8点法)

* @param vP1 归一化后的点, in reference frame

* @param vP2 归一化后的点, in current frame

* @return 基础矩阵

* @see Multiple View Geometry in Computer Vision - Algorithm 11.1 p282 (中文版 p191)

*/

cv::Mat Initializer::ComputeF21(const vector &vP1,const vector &vP2)

{

const int N = vP1.size();

cv::Mat A(N,9,CV_32F); // N*9

for(int i=0; i(i,0) = u2*u1;

A.at(i,1) = u2*v1;

A.at(i,2) = u2;

A.at(i,3) = v2*u1;

A.at(i,4) = v2*v1;

A.at(i,5) = v2;

A.at(i,6) = u1;

A.at(i,7) = v1;

A.at(i,8) = 1;

}

cv::Mat u,w,vt;

cv::SVDecomp(A,w,u,vt,cv::SVD::MODIFY_A | cv::SVD::FULL_UV);

cv::Mat Fpre = vt.row(8).reshape(0, 3); // v的最后一列

cv::SVDecomp(Fpre,w,u,vt,cv::SVD::MODIFY_A | cv::SVD::FULL_UV);

w.at(2)=0; // 秩2约束,将第3个奇异值设为0

return u*cv::Mat::diag(w)*vt;

}

/**

* @brief 对给定的homography matrix打分

*

* @see

* - Author's paper - IV. AUTOMATIC MAP INITIALIZATION (2)

* - Multiple View Geometry in Computer Vision - symmetric transfer errors: 4.2.2 Geometric distance

* - Multiple View Geometry in Computer Vision - model selection 4.7.1 RANSAC

*/

float Initializer::CheckHomography(const cv::Mat &H21, const cv::Mat &H12, vector &vbMatchesInliers, float sigma)

{

const int N = mvMatches12.size();

// |h11 h12 h13|

// |h21 h22 h23|

// |h31 h32 h33|

const float h11 = H21.at(0,0);

const float h12 = H21.at(0,1);

const float h13 = H21.at(0,2);

const float h21 = H21.at(1,0);

const float h22 = H21.at(1,1);

const float h23 = H21.at(1,2);

const float h31 = H21.at(2,0);

const float h32 = H21.at(2,1);

const float h33 = H21.at(2,2);

// |h11inv h12inv h13inv|

// |h21inv h22inv h23inv|

// |h31inv h32inv h33inv|

const float h11inv = H12.at(0,0);

const float h12inv = H12.at(0,1);

const float h13inv = H12.at(0,2);

const float h21inv = H12.at(1,0);

const float h22inv = H12.at(1,1);

const float h23inv = H12.at(1,2);

const float h31inv = H12.at(2,0);

const float h32inv = H12.at(2,1);

const float h33inv = H12.at(2,2);

vbMatchesInliers.resize(N);

float score = 0;

// 基于卡方检验计算出的阈值(假设测量有一个像素的偏差)

const float th = 5.991;

//信息矩阵,方差平方的倒数

const float invSigmaSquare = 1.0/(sigma*sigma);

// N对特征匹配点

for(int i=0; ith)

bIn = false;

else

score += th - chiSquare1;

// Reprojection error in second image

// x1in2 = H21*x1

// 将图像1中的特征点单应到图像2中

const float w1in2inv = 1.0/(h31*u1+h32*v1+h33);

const float u1in2 = (h11*u1+h12*v1+h13)*w1in2inv;

const float v1in2 = (h21*u1+h22*v1+h23)*w1in2inv;

const float squareDist2 = (u2-u1in2)*(u2-u1in2)+(v2-v1in2)*(v2-v1in2);

const float chiSquare2 = squareDist2*invSigmaSquare;

if(chiSquare2>th)

bIn = false;

else

score += th - chiSquare2;

if(bIn)

vbMatchesInliers[i]=true;

else

vbMatchesInliers[i]=false;

}

return score;

}

/**

* @brief 对给定的fundamental matrix打分

*

* @see

* - Author's paper - IV. AUTOMATIC MAP INITIALIZATION (2)

* - Multiple View Geometry in Computer Vision - symmetric transfer errors: 4.2.2 Geometric distance

* - Multiple View Geometry in Computer Vision - model selection 4.7.1 RANSAC

*/

float Initializer::CheckFundamental(const cv::Mat &F21, vector &vbMatchesInliers, float sigma)

{

const int N = mvMatches12.size();

const float f11 = F21.at(0,0);

const float f12 = F21.at(0,1);

const float f13 = F21.at(0,2);

const float f21 = F21.at(1,0);

const float f22 = F21.at(1,1);

const float f23 = F21.at(1,2);

const float f31 = F21.at(2,0);

const float f32 = F21.at(2,1);

const float f33 = F21.at(2,2);

vbMatchesInliers.resize(N);

float score = 0;

// 基于卡方检验计算出的阈值(假设测量有一个像素的偏差)

const float th = 3.841;

const float thScore = 5.991;

const float invSigmaSquare = 1.0/(sigma*sigma);

for(int i=0; ith)

bIn = false;

else

score += thScore - chiSquare1;

// Reprojection error in second image

// l1 =x2tF21=(a1,b1,c1)

const float a1 = f11*u2+f21*v2+f31;

const float b1 = f12*u2+f22*v2+f32;

const float c1 = f13*u2+f23*v2+f33;

const float num1 = a1*u1+b1*v1+c1;

const float squareDist2 = num1*num1/(a1*a1+b1*b1);

const float chiSquare2 = squareDist2*invSigmaSquare;

if(chiSquare2>th)

bIn = false;

else

score += thScore - chiSquare2;

if(bIn)

vbMatchesInliers[i]=true;

else

vbMatchesInliers[i]=false;

}

return score;

}

// |0 -1 0|

// E = U Sigma V' let W = |1 0 0|

// |0 0 1|

// 得到4个解 E = [R|t]

// R1 = UWV' R2 = UW'V' t1 = U3 t2 = -U3

/**

* @brief 从F恢复R t

*

* 度量重构

* 1. 由Fundamental矩阵结合相机内参K,得到Essential矩阵: \f$ E = k'^T F k \f$

* 2. SVD分解得到R t

* 3. 进行cheirality check, 从四个解中找出最合适的解

*

* @see Multiple View Geometry in Computer Vision - Result 9.19 p259

*/

bool Initializer::ReconstructF(vector &vbMatchesInliers, cv::Mat &F21, cv::Mat &K,

cv::Mat &R21, cv::Mat &t21, vector &vP3D, vector &vbTriangulated, float minParallax, int minTriangulated)

{

int N=0;

for(size_t i=0, iend = vbMatchesInliers.size() ; i vP3D1, vP3D2, vP3D3, vP3D4;

vector vbTriangulated1,vbTriangulated2,vbTriangulated3, vbTriangulated4;

float parallax1,parallax2, parallax3, parallax4;

int nGood1 = CheckRT(R1,t1,mvKeys1,mvKeys2,mvMatches12,vbMatchesInliers,K, vP3D1, 4.0*mSigma2, vbTriangulated1, parallax1);

int nGood2 = CheckRT(R2,t1,mvKeys1,mvKeys2,mvMatches12,vbMatchesInliers,K, vP3D2, 4.0*mSigma2, vbTriangulated2, parallax2);

int nGood3 = CheckRT(R1,t2,mvKeys1,mvKeys2,mvMatches12,vbMatchesInliers,K, vP3D3, 4.0*mSigma2, vbTriangulated3, parallax3);

int nGood4 = CheckRT(R2,t2,mvKeys1,mvKeys2,mvMatches12,vbMatchesInliers,K, vP3D4, 4.0*mSigma2, vbTriangulated4, parallax4);

int maxGood = max(nGood1,max(nGood2,max(nGood3,nGood4)));

R21 = cv::Mat();

t21 = cv::Mat();

// minTriangulated为可以三角化恢复三维点的个数

int nMinGood = max(static_cast(0.9*N),minTriangulated);

int nsimilar = 0;

if(nGood1>0.7*maxGood)

nsimilar++;

if(nGood2>0.7*maxGood)

nsimilar++;

if(nGood3>0.7*maxGood)

nsimilar++;

if(nGood4>0.7*maxGood)

nsimilar++;

// If there is not a clear winner or not enough triangulated points reject initialization

// 四个结果中如果没有明显的最优结果,则返回失败

if(maxGood1)

{

return false;

}

// If best reconstruction has enough parallax initialize

// 比较大的视差角

if(maxGood==nGood1)

{

if(parallax1>minParallax)

{

vP3D = vP3D1;

vbTriangulated = vbTriangulated1;

R1.copyTo(R21);

t1.copyTo(t21);

return true;

}

}else if(maxGood==nGood2)

{

if(parallax2>minParallax)

{

vP3D = vP3D2;

vbTriangulated = vbTriangulated2;

R2.copyTo(R21);

t1.copyTo(t21);

return true;

}

}else if(maxGood==nGood3)

{

if(parallax3>minParallax)

{

vP3D = vP3D3;

vbTriangulated = vbTriangulated3;

R1.copyTo(R21);

t2.copyTo(t21);

return true;

}

}else if(maxGood==nGood4)

{

if(parallax4>minParallax)

{

vP3D = vP3D4;

vbTriangulated = vbTriangulated4;

R2.copyTo(R21);

t2.copyTo(t21);

return true;

}

}

return false;

}

// H矩阵分解常见有两种方法:Faugeras SVD-based decomposition 和 Zhang SVD-based decomposition

// 参考文献:Motion and structure from motion in a piecewise plannar environment

// 这篇参考文献和下面的代码使用了Faugeras SVD-based decomposition算法

/**

* @brief 从H恢复R t

*

* @see

* - Faugeras et al, Motion and structure from motion in a piecewise planar environment. International Journal of Pattern Recognition and Artificial Intelligence, 1988.

* - Deeper understanding of the homography decomposition for vision-based control

*/

bool Initializer::ReconstructH(vector &vbMatchesInliers, cv::Mat &H21, cv::Mat &K,

cv::Mat &R21, cv::Mat &t21, vector &vP3D, vector &vbTriangulated, float minParallax, int minTriangulated)

{

int N=0;

for(size_t i=0, iend = vbMatchesInliers.size() ; i(0);

float d2 = w.at(1);

float d3 = w.at(2);

// SVD分解的正常情况是特征值降序排列

if(d1/d2<1.00001 || d2/d3<1.00001)

{

return false;

}

vector vR, vt, vn;

vR.reserve(8);

vt.reserve(8);

vn.reserve(8);

//n'=[x1 0 x3] 4 posibilities e1=e3=1, e1=1 e3=-1, e1=-1 e3=1, e1=e3=-1

// 法向量n'= [x1 0 x3] 对应ppt的公式17

float aux1 = sqrt((d1*d1-d2*d2)/(d1*d1-d3*d3));

float aux3 = sqrt((d2*d2-d3*d3)/(d1*d1-d3*d3));

float x1[] = {aux1,aux1,-aux1,-aux1};

float x3[] = {aux3,-aux3,aux3,-aux3};

//case d'=d2

// 计算ppt中公式19

float aux_stheta = sqrt((d1*d1-d2*d2)*(d2*d2-d3*d3))/((d1+d3)*d2);

float ctheta = (d2*d2+d1*d3)/((d1+d3)*d2);

float stheta[] = {aux_stheta, -aux_stheta, -aux_stheta, aux_stheta};

// 计算旋转矩阵 R‘,计算ppt中公式18

// | ctheta 0 -aux_stheta| | aux1|

// Rp = | 0 1 0 | tp = | 0 |

// | aux_stheta 0 ctheta | |-aux3|

// | ctheta 0 aux_stheta| | aux1|

// Rp = | 0 1 0 | tp = | 0 |

// |-aux_stheta 0 ctheta | | aux3|

// | ctheta 0 aux_stheta| |-aux1|

// Rp = | 0 1 0 | tp = | 0 |

// |-aux_stheta 0 ctheta | |-aux3|

// | ctheta 0 -aux_stheta| |-aux1|

// Rp = | 0 1 0 | tp = | 0 |

// | aux_stheta 0 ctheta | | aux3|

for(int i=0; i<4; i++)

{

cv::Mat Rp=cv::Mat::eye(3,3,CV_32F);

Rp.at(0,0)=ctheta;

Rp.at(0,2)=-stheta[i];

Rp.at(2,0)=stheta[i];

Rp.at(2,2)=ctheta;

cv::Mat R = s*U*Rp*Vt;

vR.push_back(R);

cv::Mat tp(3,1,CV_32F);

tp.at(0)=x1[i];

tp.at(1)=0;

tp.at(2)=-x3[i];

tp*=d1-d3;

// 这里虽然对t有归一化,并没有决定单目整个SLAM过程的尺度

// 因为CreateInitialMapMonocular函数对3D点深度会缩放,然后反过来对 t 有改变

cv::Mat t = U*tp;

vt.push_back(t/cv::norm(t));

cv::Mat np(3,1,CV_32F);

np.at(0)=x1[i];

np.at(1)=0;

np.at(2)=x3[i];

cv::Mat n = V*np;

if(n.at(2)<0)

n=-n;

vn.push_back(n);

}

//case d'=-d2

// 计算ppt中公式22

float aux_sphi = sqrt((d1*d1-d2*d2)*(d2*d2-d3*d3))/((d1-d3)*d2);

float cphi = (d1*d3-d2*d2)/((d1-d3)*d2);

float sphi[] = {aux_sphi, -aux_sphi, -aux_sphi, aux_sphi};

// 计算旋转矩阵 R‘,计算ppt中公式21

for(int i=0; i<4; i++)

{

cv::Mat Rp=cv::Mat::eye(3,3,CV_32F);

Rp.at(0,0)=cphi;

Rp.at(0,2)=sphi[i];

Rp.at(1,1)=-1;

Rp.at(2,0)=sphi[i];

Rp.at(2,2)=-cphi;

cv::Mat R = s*U*Rp*Vt;

vR.push_back(R);

cv::Mat tp(3,1,CV_32F);

tp.at(0)=x1[i];

tp.at(1)=0;

tp.at(2)=x3[i];

tp*=d1+d3;

cv::Mat t = U*tp;

vt.push_back(t/cv::norm(t));

cv::Mat np(3,1,CV_32F);

np.at(0)=x1[i];

np.at(1)=0;

np.at(2)=x3[i];

cv::Mat n = V*np;

if(n.at(2)<0)

n=-n;

vn.push_back(n);

}

int bestGood = 0;

int secondBestGood = 0;

int bestSolutionIdx = -1;

float bestParallax = -1;

vector bestP3D;

vector bestTriangulated;

// Instead of applying the visibility constraints proposed in the Faugeras' paper (which could fail for points seen with low parallax)

// We reconstruct all hypotheses and check in terms of triangulated points and parallax

// d'=d2和d'=-d2分别对应8组(R t)

for(size_t i=0; i<8; i++)

{

float parallaxi;

vector vP3Di;

vector vbTriangulatedi;

int nGood = CheckRT(vR[i],vt[i],mvKeys1,mvKeys2,mvMatches12,vbMatchesInliers,K,vP3Di, 4.0*mSigma2, vbTriangulatedi, parallaxi);

// 保留最优的和次优的

if(nGood>bestGood)

{

secondBestGood = bestGood;

bestGood = nGood;

bestSolutionIdx = i;

bestParallax = parallaxi;

bestP3D = vP3Di;

bestTriangulated = vbTriangulatedi;

}

else if(nGood>secondBestGood)

{

secondBestGood = nGood;

}

}

if(secondBestGood<0.75*bestGood && bestParallax>=minParallax && bestGood>minTriangulated && bestGood>0.9*N)

{

vR[bestSolutionIdx].copyTo(R21);

vt[bestSolutionIdx].copyTo(t21);

vP3D = bestP3D;

vbTriangulated = bestTriangulated;

return true;

}

return false;

}

// Trianularization: 已知匹配特征点对{x x'} 和 各自相机矩阵{P P'}, 估计三维点 X

// x' = P'X x = PX

// 它们都属于 x = aPX模型

// |X|

// |x| |p1 p2 p3 p4 ||Y| |x| |--p0--||.|

// |y| = a |p5 p6 p7 p8 ||Z| ===>|y| = a|--p1--||X|

// |z| |p9 p10 p11 p12||1| |z| |--p2--||.|

// 采用DLT的方法:x叉乘PX = 0

// |yp2 - p1| |0|

// |p0 - xp2| X = |0|

// |xp1 - yp0| |0|

// 两个点:

// |yp2 - p1 | |0|

// |p0 - xp2 | X = |0| ===> AX = 0

// |y'p2' - p1' | |0|

// |p0' - x'p2'| |0|

// 变成程序中的形式:

// |xp2 - p0 | |0|

// |yp2 - p1 | X = |0| ===> AX = 0

// |x'p2'- p0'| |0|

// |y'p2'- p1'| |0|

/**

* @brief 给定投影矩阵P1,P2和图像上的点kp1,kp2,从而恢复3D坐标

*

* @param kp1 特征点, in reference frame

* @param kp2 特征点, in current frame

* @param P1 投影矩阵P1

* @param P2 投影矩阵P2

* @param x3D 三维点

* @see Multiple View Geometry in Computer Vision - 12.2 Linear triangulation methods p312

*/

void Initializer::Triangulate(const cv::KeyPoint &kp1, const cv::KeyPoint &kp2, const cv::Mat &P1, const cv::Mat &P2, cv::Mat &x3D)

{

// 在DecomposeE函数和ReconstructH函数中对t有归一化

// 这里三角化过程中恢复的3D点深度取决于 t 的尺度,

// 但是这里恢复的3D点并没有决定单目整个SLAM过程的尺度

// 因为CreateInitialMapMonocular函数对3D点深度会缩放,然后反过来对 t 有改变

cv::Mat A(4,4,CV_32F);

A.row(0) = kp1.pt.x*P1.row(2)-P1.row(0);

A.row(1) = kp1.pt.y*P1.row(2)-P1.row(1);

A.row(2) = kp2.pt.x*P2.row(2)-P2.row(0);

A.row(3) = kp2.pt.y*P2.row(2)-P2.row(1);

cv::Mat u,w,vt;

cv::SVD::compute(A,w,u,vt,cv::SVD::MODIFY_A| cv::SVD::FULL_UV);

x3D = vt.row(3).t();

x3D = x3D.rowRange(0,3)/x3D.at(3);

}

/**

* @brief 归一化特征点到同一尺度(作为normalize DLT的输入)

*

* [x' y' 1]' = T * [x y 1]' \n

* 归一化后x', y'的均值为0,sum(abs(x_i'-0))=1,sum(abs((y_i'-0))=1

*

* @param vKeys 特征点在图像上的坐标

* @param vNormalizedPoints 特征点归一化后的坐标

* @param T 将特征点归一化的矩阵

*/

void Initializer::Normalize(const vector &vKeys, vector &vNormalizedPoints, cv::Mat &T)

{

float meanX = 0;

float meanY = 0;

const int N = vKeys.size();

vNormalizedPoints.resize(N);

for(int i=0; i(0,0) = sX;

T.at(1,1) = sY;

T.at(0,2) = -meanX*sX;

T.at(1,2) = -meanY*sY;

}

/**

* @brief 进行cheirality check,从而进一步找出F分解后最合适的解

*/

int Initializer::CheckRT(const cv::Mat &R, const cv::Mat &t, const vector &vKeys1, const vector &vKeys2,

const vector &vMatches12, vector &vbMatchesInliers,

const cv::Mat &K, vector &vP3D, float th2, vector &vbGood, float ¶llax)

{

// Calibration parameters

const float fx = K.at(0,0);

const float fy = K.at(1,1);

const float cx = K.at(0,2);

const float cy = K.at(1,2);

vbGood = vector(vKeys1.size(),false);

vP3D.resize(vKeys1.size());

vector vCosParallax;

vCosParallax.reserve(vKeys1.size());

// Camera 1 Projection Matrix K[I|0]

// 步骤1:得到一个相机的投影矩阵

// 以第一个相机的光心作为世界坐标系

cv::Mat P1(3,4,CV_32F,cv::Scalar(0));

K.copyTo(P1.rowRange(0,3).colRange(0,3));

// 第一个相机的光心在世界坐标系下的坐标

cv::Mat O1 = cv::Mat::zeros(3,1,CV_32F);

// Camera 2 Projection Matrix K[R|t]

// 步骤2:得到第二个相机的投影矩阵

cv::Mat P2(3,4,CV_32F);

R.copyTo(P2.rowRange(0,3).colRange(0,3));

t.copyTo(P2.rowRange(0,3).col(3));

P2 = K*P2;

// 第二个相机的光心在世界坐标系下的坐标

cv::Mat O2 = -R.t()*t;

int nGood=0;

for(size_t i=0, iend=vMatches12.size();i(0)) || !isfinite(p3dC1.at(1)) || !isfinite(p3dC1.at(2)))

{

vbGood[vMatches12[i].first]=false;

continue;

}

// Check parallax

// 步骤4:计算视差角余弦值

cv::Mat normal1 = p3dC1 - O1;

float dist1 = cv::norm(normal1);

cv::Mat normal2 = p3dC1 - O2;

float dist2 = cv::norm(normal2);

float cosParallax = normal1.dot(normal2)/(dist1*dist2);

// 步骤5:判断3D点是否在两个摄像头前方

// Check depth in front of first camera (only if enough parallax, as "infinite" points can easily go to negative depth)

// 步骤5.1:3D点深度为负,在第一个摄像头后方,淘汰

if(p3dC1.at(2)<=0 && cosParallax<0.99998)

continue;

// Check depth in front of second camera (only if enough parallax, as "infinite" points can easily go to negative depth)

// 步骤5.2:3D点深度为负,在第二个摄像头后方,淘汰

cv::Mat p3dC2 = R*p3dC1+t;

if(p3dC2.at(2)<=0 && cosParallax<0.99998)

continue;

// 步骤6:计算重投影误差

// Check reprojection error in first image

// 计算3D点在第一个图像上的投影误差

float im1x, im1y;

float invZ1 = 1.0/p3dC1.at(2);

im1x = fx*p3dC1.at(0)*invZ1+cx;

im1y = fy*p3dC1.at(1)*invZ1+cy;

float squareError1 = (im1x-kp1.pt.x)*(im1x-kp1.pt.x)+(im1y-kp1.pt.y)*(im1y-kp1.pt.y);

// 步骤6.1:重投影误差太大,跳过淘汰

// 一般视差角比较小时重投影误差比较大

if(squareError1>th2)

continue;

// Check reprojection error in second image

// 计算3D点在第二个图像上的投影误差

float im2x, im2y;

float invZ2 = 1.0/p3dC2.at(2);

im2x = fx*p3dC2.at(0)*invZ2+cx;

im2y = fy*p3dC2.at(1)*invZ2+cy;

float squareError2 = (im2x-kp2.pt.x)*(im2x-kp2.pt.x)+(im2y-kp2.pt.y)*(im2y-kp2.pt.y);

// 步骤6.2:重投影误差太大,跳过淘汰

// 一般视差角比较小时重投影误差比较大

if(squareError2>th2)

continue;

// 步骤7:统计经过检验的3D点个数,记录3D点视差角

vCosParallax.push_back(cosParallax);

vP3D[vMatches12[i].first] = cv::Point3f(p3dC1.at(0),p3dC1.at(1),p3dC1.at(2));

nGood++;

if(cosParallax<0.99998)

vbGood[vMatches12[i].first]=true;

}

// 步骤8:得到3D点中较大的视差角

if(nGood>0)

{

// 从小到大排序

sort(vCosParallax.begin(),vCosParallax.end());

// trick! 排序后并没有取最大的视差角

// 取一个较大的视差角

size_t idx = min(50,int(vCosParallax.size()-1));

parallax = acos(vCosParallax[idx])*180/CV_PI;

}

else

parallax=0;

return nGood;

}

/**

* @brief 分解Essential矩阵

*

* F矩阵通过结合内参可以得到Essential矩阵,分解E矩阵将得到4组解 \n

* 这4组解分别为[R1,t],[R1,-t],[R2,t],[R2,-t]

* @param E Essential Matrix

* @param R1 Rotation Matrix 1

* @param R2 Rotation Matrix 2

* @param t Translation

* @see Multiple View Geometry in Computer Vision - Result 9.19 p259

*/

void Initializer::DecomposeE(const cv::Mat &E, cv::Mat &R1, cv::Mat &R2, cv::Mat &t)

{

cv::Mat u,w,vt;

cv::SVD::compute(E,w,u,vt);

// 对 t 有归一化,但是这个地方并没有决定单目整个SLAM过程的尺度

// 因为CreateInitialMapMonocular函数对3D点深度会缩放,然后反过来对 t 有改变

u.col(2).copyTo(t);

t=t/cv::norm(t);

cv::Mat W(3,3,CV_32F,cv::Scalar(0));

W.at(0,1)=-1;

W.at(1,0)=1;

W.at(2,2)=1;

R1 = u*W*vt;

if(cv::determinant(R1)<0) // 旋转矩阵有行列式为1的约束

R1=-R1;

R2 = u*W.t()*vt;

if(cv::determinant(R2)<0)

R2=-R2;

}

} //namespace ORB_SLAM