ORB-SLAM2代码阅读笔记(二):System.cc相关函数

目录

1.System在ORB-SLAM2系统中的作用

2.System类构造函数

3.System::TrackMonocular函数

4.System::Shutdown函数

5.System::SaveKeyFrameTrajectoryTUM函数

6.本部分在总体流程中的位置

1.System在ORB-SLAM2系统中的作用

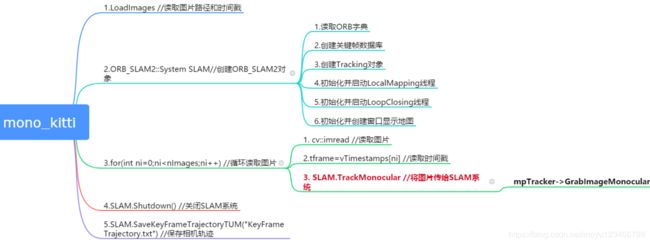

在ORB-SLAM2代码阅读笔记(一):从mono_kitti单目运行开始一篇的介绍中可以看到mono_kitti.cc中main函数里有创建System对象的语句:

ORB_SLAM2::System SLAM(argv[1],argv[2],ORB_SLAM2::System::MONOCULAR,true);这里的System对象冠名SLAM,颇有一些ORB-SLAM2之“大总管”的感觉,仔细看过System的构造函数后,你会觉得:还真是如此,作为“大总管”它是名副其实的。那么作为“大总管”,最基本的应该是要负责资源分配和人员调度。

那么我们这里的SLAM大总管又做了哪些工作呢?总结一下,主要是下面7件事情:

1)读取ORB字典,为后期的回环检测做准备;

2)创建关键帧数据库KeyFrameDatabase,用于存放关键帧相关数据;

3)初始化Tracking线程。其实Tracking线程是在main线程中运行的,可以简单的认为main线程就是Tracking线程;

4)初始化并启动LocalMapping线程;

5)初始化并启动LoopClosing线程;

6)初始化并启动窗口显示线程mptViewer;

7)在各个线程之间分配资源,方便线程彼此之间的数据交互。

怎么样?有没有觉得大总管干的都是些大事?具体这些事情,我们可以在System类构造函数中去仔细阅读代码进行一一对应。

2.System类构造函数

/**

* strVocFile 为词典文件

* strSettingsFile 为设置配置文件

* sensor sensor类型,单目、双目和RGBD

* bUseViewer 是否进行相机位姿和图片帧显示

*/

System::System(const string &strVocFile, const string &strSettingsFile, const eSensor sensor,

const bool bUseViewer):mSensor(sensor), mpViewer(static_cast(NULL)), mbReset(false),mbActivateLocalizationMode(false),

mbDeactivateLocalizationMode(false)

{

// Output welcome message

cout << endl <<

"ORB-SLAM2 Copyright (C) 2014-2016 Raul Mur-Artal, University of Zaragoza." << endl <<

"This program comes with ABSOLUTELY NO WARRANTY;" << endl <<

"This is free software, and you are welcome to redistribute it" << endl <<

"under certain conditions. See LICENSE.txt." << endl << endl;

cout << "Input sensor was set to: ";

if(mSensor==MONOCULAR)

cout << "Monocular" << endl;

else if(mSensor==STEREO)

cout << "Stereo" << endl;

else if(mSensor==RGBD)

cout << "RGB-D" << endl;

//Check settings file

cv::FileStorage fsSettings(strSettingsFile.c_str(), cv::FileStorage::READ);

if(!fsSettings.isOpened())

{

cerr << "Failed to open settings file at: " << strSettingsFile << endl;

exit(-1);

}

//Load ORB Vocabulary

cout << endl << "Loading ORB Vocabulary. This could take a while..." << endl;

//1.读取ORB字典

mpVocabulary = new ORBVocabulary();

bool bVocLoad = mpVocabulary->loadFromTextFile(strVocFile);

if(!bVocLoad)

{

cerr << "Wrong path to vocabulary. " << endl;

cerr << "Falied to open at: " << strVocFile << endl;

exit(-1);

}

cout << "Vocabulary loaded!" << endl << endl;

//Create KeyFrame Database 2.创建关键帧数据库

mpKeyFrameDatabase = new KeyFrameDatabase(*mpVocabulary);

//Create the Map 创建地图对象

mpMap = new Map();

//Create Drawers. These are used by the Viewer 创建两个显示窗口

mpFrameDrawer = new FrameDrawer(mpMap);

mpMapDrawer = new MapDrawer(mpMap, strSettingsFile);

//Initialize the Tracking thread

//(it will live in the main thread of execution, the one that called this constructor)

//3.这里初始化的Tracking线程是在main线程中运行的(其实也就是main线程),所以不需要调用new thread来创建

mpTracker = new Tracking(this, mpVocabulary, mpFrameDrawer, mpMapDrawer,

mpMap, mpKeyFrameDatabase, strSettingsFile, mSensor);

//Initialize the Local Mapping thread and launch

//4.初始化LocalMapping对象,并开启LocalMapping线程

mpLocalMapper = new LocalMapping(mpMap, mSensor==MONOCULAR);

mptLocalMapping = new thread(&ORB_SLAM2::LocalMapping::Run,mpLocalMapper);

//Initialize the Loop Closing thread and launch

//5.初始化LooClosing对象,并开启LoopClosing线程

mpLoopCloser = new LoopClosing(mpMap, mpKeyFrameDatabase, mpVocabulary, mSensor!=MONOCULAR);

mptLoopClosing = new thread(&ORB_SLAM2::LoopClosing::Run, mpLoopCloser);

//Initialize the Viewer thread and launch

//是否显示图像帧和相机位姿的窗口

if(bUseViewer)

{

//6.初始化显示窗口对象,并启动线程用于显示图像和地图点

mpViewer = new Viewer(this, mpFrameDrawer,mpMapDrawer,mpTracker,strSettingsFile);

mptViewer = new thread(&Viewer::Run, mpViewer);

mpTracker->SetViewer(mpViewer);

}

//Set pointers between threads

//7.给各个线程分配资源

//以下变量设置是为了在各个线程中可以访问其他线程中的数据,方便线程之间的数据交互

mpTracker->SetLocalMapper(mpLocalMapper);

mpTracker->SetLoopClosing(mpLoopCloser);

mpLocalMapper->SetTracker(mpTracker);

mpLocalMapper->SetLoopCloser(mpLoopCloser);

mpLoopCloser->SetTracker(mpTracker);

mpLoopCloser->SetLocalMapper(mpLocalMapper);

} 3.System::TrackMonocular函数

在mono_kitti.cc的main函数中可以看到调用了下面语句:

SLAM.TrackMonocular(im,tframe);这里其实就是将读取的图像im传给Tracking线程了,那么TrackMonocular中做了哪些操作呢?请看TrackMonocular函数代码。

/**

* 跟踪单目相机帧数据

* im 图片

* timestamp 图片对应的时间戳

*/

cv::Mat System::TrackMonocular(const cv::Mat &im, const double ×tamp)

{

//在单目相机跟踪的接口中判断当前输入的类型不为单目,则退出

if(mSensor!=MONOCULAR)

{

cerr << "ERROR: you called TrackMonocular but input sensor was not set to Monocular." << endl;

exit(-1);

}

// Check mode change

{

unique_lock lock(mMutexMode);

//初始化System的时候mbActivateLocalizationMode值为false

if(mbActivateLocalizationMode)

{

//LocalMapping线程停止运行

mpLocalMapper->RequestStop();

// Wait until Local Mapping has effectively stopped

//等待知道LocalMapping线程真正停止运行

while(!mpLocalMapper->isStopped())

{

usleep(1000);

}

mpTracker->InformOnlyTracking(true);

mbActivateLocalizationMode = false;

}

//初始化System的时候mbDeactivateLocalizationMode值为false

if(mbDeactivateLocalizationMode)

{

mpTracker->InformOnlyTracking(false);

mpLocalMapper->Release();

mbDeactivateLocalizationMode = false;

}

}

// Check reset

{

unique_lock lock(mMutexReset);

if(mbReset)

{

mpTracker->Reset();

mbReset = false;

}

}

//输入图片和时间戳给Tracking线程

cv::Mat Tcw = mpTracker->GrabImageMonocular(im,timestamp);

unique_lock lock2(mMutexState);

mTrackingState = mpTracker->mState;

mTrackedMapPoints = mpTracker->mCurrentFrame.mvpMapPoints;

mTrackedKeyPointsUn = mpTracker->mCurrentFrame.mvKeysUn;

return Tcw;

} 可以看到,其实这个函数里边其实就做了两件事情:

1)check mode、check reset,这个和窗口的视图显示有关,可以在显示相机位姿的窗口左侧点击按钮实际操作看看效果;

2)将图片和时间戳传给了Tracking,这里才是真正的调用了Tracking的接口GrabImageMonocular,那么这个函数肯定是后边要重点关注的。

4.System::Shutdown函数

这个函数也是在mono_kitti的main函数中调度的,当SLAM系统处理完所有的图片后,系统就要退出,此时调用该接口关闭除Tracking线程(也就是main线程)之外的所有线程。

/**

* 关闭SLAM系统

*

*/

void System::Shutdown()

{

//finish掉LocalMapping线程

mpLocalMapper->RequestFinish();

//finish掉LoopClosing线程

mpLoopCloser->RequestFinish();

if(mpViewer)

{

////finish掉地图显示线程

mpViewer->RequestFinish();

while(!mpViewer->isFinished())

usleep(5000);

}

// Wait until all thread have effectively stopped

while(!mpLocalMapper->isFinished() || !mpLoopCloser->isFinished() || mpLoopCloser->isRunningGBA())

{

usleep(5000);

}

if(mpViewer)

pangolin::BindToContext("ORB-SLAM2: Map Viewer");

}5.System::SaveKeyFrameTrajectoryTUM函数

SLAM的问题本质,其实是个贝叶斯后验概率问题,就是通过已经获取的一帧一帧的图像信息来判断相机的位姿变换,最后将所有获得的位姿图点坐标可视化后连起来其实就是相机运动的轨迹了(当然,这个说起来容易做起来难,后边慢慢体会)。这个函数就是对ORB-SLAM2系统运行所产生的相机位姿坐标数据存储到KeyFrameTrajectory.txt文件中了,当然也可以用可视化工具将该文件进行可视化,就能清晰看到相机运动的轨迹了。感兴趣的可以网上找相关资料可视化看看。

/**

* 保存相机位姿和走过的路径filename文件中

*

*/

void System::SaveKeyFrameTrajectoryTUM(const string &filename)

{

cout << endl << "Saving keyframe trajectory to " << filename << " ..." << endl;

vector vpKFs = mpMap->GetAllKeyFrames();

sort(vpKFs.begin(),vpKFs.end(),KeyFrame::lId);

// Transform all keyframes so that the first keyframe is at the origin.

// After a loop closure the first keyframe might not be at the origin.

//cv::Mat Two = vpKFs[0]->GetPoseInverse();

ofstream f;

f.open(filename.c_str());

f << fixed;

for(size_t i=0; iSetPose(pKF->GetPose()*Two);

if(pKF->isBad())

continue;

cv::Mat R = pKF->GetRotation().t();

vector q = Converter::toQuaternion(R);

cv::Mat t = pKF->GetCameraCenter();

f << setprecision(6) << pKF->mTimeStamp << setprecision(7) << " " << t.at(0) << " " << t.at(1) << " " << t.at(2)

<< " " << q[0] << " " << q[1] << " " << q[2] << " " << q[3] << endl;

}

f.close();

cout << endl << "trajectory saved!" << endl;

}