[PyTorch] A concise PyTorch implementation of softmax regression for classifying the Fashion MNIST dataset

Summary

A concise PyTorch implementation of softmax regression that classifies the Fashion MNIST dataset.

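Motivation

Someone has ported Dive into Deep Learning (《动手学深度学习》) to PyTorch (the link to the full book is in the references at the end). While working through it, I found that the code has a few small problems in my environment (PyTorch 0.4.0), possibly because the data I read by hand has a different format from what torchvision.datasets returns. I fixed and tidied the code and am posting it in case it helps others. The original section is linked in the references at the end.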

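Compared with the original section, the code here makes the following changes:

- Fixes the slow download of the Fashion MNIST dataset (because of the Great Firewall the download actually fails): the dataset is downloaded manually and read by hand instead.

- Fixes the incorrect accuracy computation in the book's PyTorch code.

- Fixes the error RuntimeError: multi-target not supported at ...

- No longer needs to import the d2lzh module. Once the basic environment is installed, the code runs as soon as you copy it to your machine; no other modules need to be downloaded.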

3.7 A concise implementation of softmax regression

import torch

from torch import nn

from torch.nn import init

import numpy as np

import sys

print(torch.__version__)

Output:

0.4.0

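3.7.1 Getting and reading the data

Downloading the dataset through the functions PyTorch provides is too slow (because of the Great Firewall), so I downloaded it manually from GitHub and read it by hand.

Fashion MNIST dataset download page: only the contents of data/fashion are needed.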
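Before loading, it can help to confirm that the four archives are where the loader expects them. The following is a minimal sketch that assumes the file names used by load_mnist in this post (train-* and t10k-*); missing_fashion_mnist_files is a hypothetical helper, not part of the original code.

```python
import os

# File-name pattern taken from the loader in this post; the helper itself is hypothetical.
EXPECTED_FILES = [
    '%s-%s.gz' % (kind, suffix)
    for kind in ('train', 't10k')
    for suffix in ('images-idx3-ubyte', 'labels-idx1-ubyte')
]

def missing_fashion_mnist_files(path):
    """Return the expected archive names that are not present in `path`."""
    path = os.path.expanduser(path)
    return [name for name in EXPECTED_FILES
            if not os.path.isfile(os.path.join(path, name))]
```

An empty list means all four files were found; anything else names the files that still need to be downloaded.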

def load_mnist(path, kind='train'):
    """Load the manually downloaded dataset from `path`.

    The MNIST file layout is described at http://yann.lecun.com/exdb/mnist/
    The label file is read from byte offset 8 and the image file from byte
    offset 16 in order to skip the header of each file.
    """
    import os
    import gzip
    import numpy as np

    labels_path = os.path.join(path, '%s-labels-idx1-ubyte.gz' % kind)
    images_path = os.path.join(path, '%s-images-idx3-ubyte.gz' % kind)

    with gzip.open(labels_path, 'rb') as lbpath:
        labels = np.frombuffer(lbpath.read(), dtype=np.uint8, offset=8)

    with gzip.open(images_path, 'rb') as imgpath:
        # one row of 784 = 28 * 28 pixel values per image
        images = np.frombuffer(imgpath.read(), dtype=np.uint8,
                               offset=16).reshape(len(labels), 784)

    return images, labels
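The offsets 8 and 16 follow from the IDX header described on the MNIST page: a label file starts with two big-endian 32-bit integers (magic number 2049, item count), and an image file with four (magic number 2051, image count, rows, columns). The sketch below illustrates this on a tiny synthetic label file built in memory; read_idx_header is a hypothetical helper and no real dataset file is touched.

```python
import gzip
import io
import struct

def read_idx_header(fileobj, num_fields):
    """Read `num_fields` big-endian 32-bit integers from the start of an IDX file."""
    return struct.unpack('>' + 'I' * num_fields, fileobj.read(4 * num_fields))

# Build a synthetic gzip-compressed label file: magic number 2049, 3 items, then the labels.
raw = struct.pack('>II', 2049, 3) + bytes([9, 0, 3])
buf = io.BytesIO()
with gzip.GzipFile(fileobj=buf, mode='wb') as f:
    f.write(raw)
buf.seek(0)

with gzip.GzipFile(fileobj=buf, mode='rb') as f:
    magic, count = read_idx_header(f, 2)   # label files: 2 fields = 8 header bytes
    labels = list(f.read())                # everything after the header

print(magic, count, labels)                # 2049 3 [9, 0, 3]
```

Reading four fields instead of two gives the 16-byte image header, which is why the image file is read with offset=16.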

def load_data_fashion_mnist(batch_size, resize=None, root='~/Datasets/FashionMNIST'):
    """Load the Fashion MNIST dataset into memory and return train/test iterators."""
    # Original approach: downloading is extremely slow because of the Great Firewall
    """
    trans = []
    if resize:
        trans.append(torchvision.transforms.Resize(size=resize))
    trans.append(torchvision.transforms.ToTensor())
    transform = torchvision.transforms.Compose(trans)
    mnist_train = torchvision.datasets.FashionMNIST(root=root, train=True, download=True, transform=transform)
    mnist_test = torchvision.datasets.FashionMNIST(root=root, train=False, download=True, transform=transform)
    """
    # My approach: use the dataset I downloaded myself.
    # `resize` and `root` are not used here; change the path below to your own data directory.
    X_train, y_train = load_mnist('/home/user_name/Datasets/FashionMNIST/raw', kind='train')
    X_test, y_test = load_mnist('/home/user_name/Datasets/FashionMNIST/raw', kind='t10k')

    # Images: float32, shape (N, 1, 28, 28), pixel values scaled to [0, 1]
    X_train_tensor = torch.from_numpy(X_train).to(torch.float32).view(-1, 1, 28, 28) * (1/255.0)
    X_test_tensor = torch.from_numpy(X_test).to(torch.float32).view(-1, 1, 28, 28) * (1/255.0)
    # Labels: int64, shape (N, 1); this is why y.squeeze(1) is needed later on
    y_train_tensor = torch.from_numpy(y_train).to(torch.int64).view(-1, 1)
    y_test_tensor = torch.from_numpy(y_test).to(torch.int64).view(-1, 1)

    import torch.utils.data as Data
    mnist_train = Data.TensorDataset(X_train_tensor, y_train_tensor)
    mnist_test = Data.TensorDataset(X_test_tensor, y_test_tensor)

    # Divider ============================================================
    if sys.platform.startswith('win'):
        num_workers = 0  # 0 means no extra worker processes are used to speed up data loading
    else:
        num_workers = 4
    train_iter = torch.utils.data.DataLoader(mnist_train, batch_size=batch_size, shuffle=True, num_workers=num_workers)
    test_iter = torch.utils.data.DataLoader(mnist_test, batch_size=batch_size, shuffle=False, num_workers=num_workers)

    return train_iter, test_iter

batch_size = 256

train_iter, test_iter = load_data_fashion_mnist(batch_size)

3.7.2 Defining and initializing the model

num_inputs = 784
num_outputs = 10

# Method 1
# class LinearNet(nn.Module):
#     def __init__(self, num_inputs, num_outputs):
#         super(LinearNet, self).__init__()
#         self.linear = nn.Linear(num_inputs, num_outputs)
#     def forward(self, x): # x shape: (batch, 1, 28, 28)
#         y = self.linear(x.view(x.shape[0], -1))
#         return y
# net = LinearNet(num_inputs, num_outputs)

# Method 2
# Move the reshaping of `x` into a custom `FlattenLayer`
class FlattenLayer(nn.Module):
    def __init__(self):
        super(FlattenLayer, self).__init__()
    def forward(self, x): # x shape: (batch, *, *, ...)
        return x.view(x.shape[0], -1)

from collections import OrderedDict
net = nn.Sequential(
    # FlattenLayer(),
    # nn.Linear(num_inputs, num_outputs)
    OrderedDict([
        ('flatten', FlattenLayer()),
        ('linear', nn.Linear(num_inputs, num_outputs))])
)

# Initialize the weights from a normal distribution with mean 0 and standard deviation 0.01,
# and set the bias to 0
init.normal_(net.linear.weight, mean=0, std=0.01)
init.constant_(net.linear.bias, val=0)
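To make the shapes concrete, here is a small sketch of what the flatten step and the linear layer do to a batch. The dummy batch of 4 images is an assumption for illustration; it does not use the real data or the trained net.

```python
import torch
from torch import nn

X = torch.zeros(4, 1, 28, 28)            # dummy batch: 4 single-channel 28x28 images
flat = X.view(X.shape[0], -1)            # keep the batch axis, merge all other axes
scores = nn.Linear(784, 10)(flat)        # one score per class for each image

print(flat.shape, scores.shape)          # torch.Size([4, 784]) torch.Size([4, 10])
```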

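3.7.3 Softmax and the cross-entropy loss function

If you did the exercises of the previous section (3.6), you may have noticed that defining the softmax operation and the cross-entropy loss separately can be numerically unstable.

PyTorch therefore provides a single function that combines the softmax operation with the cross-entropy loss computation, which is numerically more stable.

What does "unstable" mean here? See the article on numerical approximation error, overflow and underflow: the softmax numerical instability problem.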

loss = nn.CrossEntropyLoss()
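The instability can be illustrated in plain Python. The sketch below compares a naive softmax-then-log computation with the log-sum-exp trick (subtracting the largest logit first). It illustrates the general technique only and is not a description of how PyTorch implements nn.CrossEntropyLoss; both function names are hypothetical.

```python
import math

def naive_cross_entropy(logits, target):
    """Softmax followed by log, computed separately; overflows for large logits."""
    exps = [math.exp(z) for z in logits]
    return -math.log(exps[target] / sum(exps))

def stable_cross_entropy(logits, target):
    """Same quantity via the log-sum-exp trick: subtract the max logit first."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

small = [1.0, 2.0, 3.0]
large = [1000.0, 2000.0, 3000.0]

# On moderate logits the two versions agree.
print(naive_cross_entropy(small, 2), stable_cross_entropy(small, 2))

# On large logits only the stable version returns a finite value.
print(stable_cross_entropy(large, 2))
try:
    naive_cross_entropy(large, 2)
except OverflowError as err:
    print('naive version failed:', err)
```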

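3.7.4 Defining the optimization algorithm

# Use minibatch stochastic gradient descent with a learning rate of 0.1 as the optimization algorithm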

optimizer = torch.optim.SGD(net.parameters(), lr=0.1)

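3.7.5 Computing classification accuracy

# Evaluate the accuracy of the model `net` on the dataset `data_iter`
def evaluate_accuracy(data_iter, net):
    acc_sum, n = 0.0, 0
    for X, y in data_iter:
        # Original version:
        # gives a wrong accuracy on PyTorch 0.4.0 (other versions not tested), because the two sides
        # of "==" have different shapes, (batch,) and (batch, 1), and are broadcast against each other
        # acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
        # My version
        acc_sum += (net(X).argmax(dim=1) == y.squeeze(1)).float().sum().item()
        n += y.shape[0]
    return acc_sum / n

# Quick check: the model `net` was initialized randomly, so its accuracy
# should be close to 0.1, the reciprocal of the number of classes (10).
print("init evaluate_accuracy: ", evaluate_accuracy(test_iter, net))

Output:

init evaluate_accuracy: 0.0832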
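The broadcasting problem mentioned in the comments above is easy to reproduce on a toy example. This is a minimal sketch with made-up predictions and labels (4 samples), not the real data:

```python
import torch

pred = torch.tensor([0, 1, 2, 1])            # shape (4,), like net(X).argmax(dim=1)
y = torch.tensor([[0], [1], [1], [1]])       # shape (4, 1), like the labels in this post

wrong = (pred == y)                          # broadcasts to shape (4, 4)
right = (pred == y.squeeze(1))               # shape (4,)

print(wrong.shape, wrong.sum().item())       # torch.Size([4, 4]) 7
print(right.shape, right.sum().item())       # torch.Size([4]) 3
```

Without the squeeze, every prediction is compared with every label, so the count of "correct" predictions (7) can exceed the number of samples (4).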

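3.7.6 Training the model

def sgd(params, lr, batch_size):
    """
    In the book's version, the gradient computed by autograd is the sum of the gradients over a minibatch.
    To stay consistent with the original book, this function divides by batch_size, but that should not be
    necessary, because PyTorch loss functions usually already average over the batch dimension by default.
    """
    for param in params:
        # Note: param.data is modified here so that `autograd` does not record the update,
        # which would otherwise affect gradient backpropagation
        param.data -= lr * param.grad / batch_size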
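The remark in the docstring can be checked numerically. The sketch below compares the weight gradient under the default mean reduction with the gradient under a summed loss, on random toy data. It assumes a PyTorch version that accepts the reduction argument; on 0.4.0 the corresponding option was size_average=False. The helper weight_grad is hypothetical.

```python
import torch
from torch import nn

torch.manual_seed(0)
batch_size = 8
X = torch.randn(batch_size, 5)                  # toy inputs, not Fashion MNIST
y = torch.randint(0, 3, (batch_size,))          # toy labels, shape (batch,)
linear = nn.Linear(5, 3)

def weight_grad(loss_fn):
    """Gradient of the loss with respect to the weights of `linear`."""
    linear.zero_grad()
    loss_fn(linear(X), y).backward()
    return linear.weight.grad.clone()

grad_mean = weight_grad(nn.CrossEntropyLoss())                 # default: mean over the batch
grad_sum = weight_grad(nn.CrossEntropyLoss(reduction='sum'))   # sum over the batch

# The mean-reduced gradient equals the summed gradient divided by batch_size
# (up to floating-point error), so dividing it by batch_size a second time
# shrinks the effective learning rate to lr / batch_size.
print(torch.allclose(grad_sum / batch_size, grad_mean, atol=1e-6))
```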

num_epochs, lr = 5, 0.1

def train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size,
              params=None, lr=None, optimizer=None):
    """Train the network."""
    for epoch in range(num_epochs):
        train_l_sum, train_acc_sum, n = 0.0, 0.0, 0
        for X, y in train_iter:
            y_hat = net(X)
            # Original version, which fails with:
            # RuntimeError: multi-target not supported at /opt/conda/conda-bld/pytorch_1524590031827/work/aten/src/THNN/generic/ClassNLLCriterion.c:22
            # l = loss(y_hat, y).sum().float()
            # My version: the target must have shape (batch,), not (batch, 1)
            l = loss(y_hat, y.squeeze(1)).sum().float()

            # Zero the gradients
            if optimizer is not None:
                optimizer.zero_grad()
            elif params is not None and params[0].grad is not None:
                for param in params:
                    param.grad.data.zero_()

            l.backward()
            if optimizer is None:
                sgd(params, lr, batch_size)
            else:
                optimizer.step()  # used in the section "A concise implementation of softmax regression"

            # l is already the mean over the batch, so train_l_sum / n printed below
            # is roughly the mean loss divided by batch_size
            train_l_sum += l.item()
            # Original version:
            # gives a wrong accuracy on PyTorch 0.4.0 (other versions not tested), because the two sides
            # of "==" have different shapes and are broadcast against each other
            # train_acc_sum += (y_hat.argmax(dim=1) == y).sum().item()
            # My version
            train_acc_sum += (y_hat.argmax(dim=1) == y.squeeze(1)).sum().item()
            n += y.shape[0]
        test_acc = evaluate_accuracy(test_iter, net)
        print('epoch %d, loss %.4f, train acc %.3f, test acc %.3f'
              % (epoch + 1, train_l_sum / n, train_acc_sum / n, test_acc))

# Start training
train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size, None, None, optimizer)

Output:

epoch 1, loss 0.0031, train acc 0.749, test acc 0.786

epoch 2, loss 0.0022, train acc 0.813, test acc 0.808

epoch 3, loss 0.0021, train acc 0.826, test acc 0.812

epoch 4, loss 0.0020, train acc 0.832, test acc 0.823

epoch 5, loss 0.0019, train acc 0.837, test acc 0.802

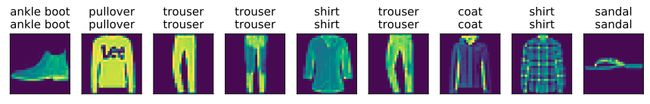
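The multi-target error fixed in train_ch3 comes from the shape of the labels. The following sketch reproduces it with random scores and made-up labels; the exact exception type and message are assumed to vary between PyTorch versions, so both RuntimeError and ValueError are caught.

```python
import torch
from torch import nn

loss = nn.CrossEntropyLoss()
y_hat = torch.randn(4, 10)                 # (batch, num_classes) scores
y = torch.tensor([[3], [0], [9], [1]])     # (batch, 1), as produced by .view(-1, 1)

try:
    loss(y_hat, y)                         # column-vector class indices
except (RuntimeError, ValueError) as err:
    print('column-vector target rejected:', err)

l = loss(y_hat, y.squeeze(1))              # a (batch,) target is accepted
print(l.shape)                             # a scalar: the mean over the batch
```

An alternative would be to store the labels with shape (batch,) from the start, which would make the squeeze(1) calls unnecessary; the code in this post keeps the (batch, 1) layout.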

3.7.7 Prediction

def get_fashion_mnist_labels(labels):
    """Convert numeric labels to the corresponding text labels."""
    text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
                   'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']
    return [text_labels[int(i)] for i in labels]

from IPython import display
from matplotlib import pyplot as plt

def use_svg_display():
    """Use svg format to display plot in jupyter"""
    display.set_matplotlib_formats('svg')

def show_fashion_mnist(images, labels):
    """Draw several images and their labels in a single row."""
    use_svg_display()
    # `_` marks a variable that we ignore (do not use)
    _, figs = plt.subplots(1, len(images), figsize=(12, 12))
    for f, img, lbl in zip(figs, images, labels):
        f.imshow(img.view((28, 28)).numpy())
        f.set_title(lbl)
        f.axes.get_xaxis().set_visible(False)
        f.axes.get_yaxis().set_visible(False)
    plt.show()

# Prediction
X, y = next(iter(test_iter))  # next(...) instead of .next(), which newer versions do not provide
# y has shape (batch, 1); squeeze it so that each label is a plain scalar
true_labels = get_fashion_mnist_labels(y.squeeze(1).numpy())
pred_labels = get_fashion_mnist_labels(net(X).argmax(dim=1).numpy())
titles = [true + '\n' + pred for true, pred in zip(true_labels, pred_labels)]

# Plot the first 9 predictions
show_fashion_mnist(X[0:9], titles[0:9])

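Related links and references

Dive into Deep Learning, PyTorch version (《动手学深度学习 PyTorch》), 3.7 A concise implementation of softmax regression

Dive into Deep Learning (《动手学深度学习》)

Fashion MNIST dataset download page; only the contents of data/fashion are needed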