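ORB-SLAM2 Source Code Study (1): System Structure and System.cc

I have started reading the ORB-SLAM2 source code. I am keeping these notes as a study record, and also to push myself to study it in more depth.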

Preface

ORB-SLAM2 is a complete SLAM system: the author provides both the paper and the corresponding source code. Its GitHub page:

https://github.com/raulmur/ORB_SLAM2

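The system includes monocular, stereo, and RGB-D implementations. I mainly study the monocular SLAM system here, so these notes follow the monocular pipeline.

Thanks also to Paopao Robot (泡泡机器人) for the well-annotated version of the code (annotated by 吴博):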

https://gitee.com/paopaoslam/ORB-SLAM2

I. System Structure

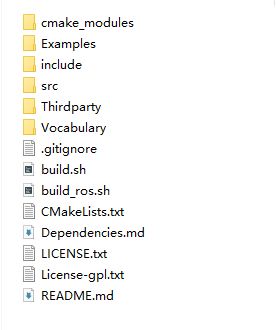

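The ORB-SLAM2 code is tidy, concise, and easy to read, and the author has packaged the project well. The files are organized as follows: src holds the SLAM system source code, Examples holds the example programs for the different sensors, Thirdparty holds the third-party libraries the system depends on, and Vocabulary holds the visual vocabulary used by BoW in loop closure detection.

First, let's see how ORB-SLAM2 is called by studying the example source mono_tum.cc.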

II. mono_tum.cc (monocular TUM dataset example)

Main body of the source, trimmed:

int main(int argc, char **argv)

{

if(argc != 4)

{

cerr << endl << "Usage: ./mono_tum path_to_vocabulary path_to_settings path_to_sequence" << endl;

return 1;

}

// Retrieve paths to images

vector<string> vstrImageFilenames;

vector<double> vTimestamps;

string strFile = string(argv[3])+"/rgb.txt";

LoadImages(strFile, vstrImageFilenames, vTimestamps);

int nImages = vstrImageFilenames.size();

// Create SLAM system. It initializes all system threads and gets ready to process frames.

ORB_SLAM2::System SLAM(argv[1],argv[2],ORB_SLAM2::System::MONOCULAR,true);

// Vector for tracking time statistics

vector<float> vTimesTrack;

vTimesTrack.resize(nImages);

cout << endl << "-------" << endl;

cout << "Start processing sequence ..." << endl;

cout << "Images in the sequence: " << nImages << endl << endl;

// Main loop

cv::Mat im;

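for(int ni=0; ni<nImages; ni++)

{

// Read image from file

im = cv::imread(string(argv[3])+"/"+vstrImageFilenames[ni],CV_LOAD_IMAGE_UNCHANGED);

double tframe = vTimestamps[ni];

std::chrono::steady_clock::time_point t1 = std::chrono::steady_clock::now();

// Pass the image to the SLAM system

SLAM.TrackMonocular(im,tframe);

std::chrono::steady_clock::time_point t2 = std::chrono::steady_clock::now();

double ttrack= std::chrono::duration_cast<std::chrono::duration<double> >(t2 - t1).count();

vTimesTrack[ni]=ttrack;

// Wait to load the next frame

double T=0;

if(ni<nImages-1)

T = vTimestamps[ni+1]-tframe;

else if(ni>0)

T = tframe-vTimestamps[ni-1];

if(ttrack<T)

usleep((T-ttrack)*1e6);

}

// Stop all threads

SLAM.Shutdown();

// Save camera trajectory

SLAM.SaveKeyFrameTrajectoryTUM("KeyFrameTrajectory.txt");

return 0;

}

As you can see, the external interface of the ORB-SLAM2 system is used in the following steps: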

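- Create the ORB_SLAM2::System object

ORB_SLAM2::System SLAM(argv[1],argv[2],ORB_SLAM2::System::MONOCULAR,true);

- Loop over the frames, passing each image and its timestamp to the system

SLAM.TrackMonocular(im,tframe);

- Shut down the SLAM system

SLAM.Shutdown();

- Save the camera trajectory to disk

SLAM.SaveKeyFrameTrajectoryTUM("KeyFrameTrajectory.txt");

For example: the first time I ran the compiled mono_tum, I wanted to try ORB-SLAM2 on my own data, but I did not think about the timestamps (my files were simply named with natural numbers), so the program ran at roughly one frame per second. The code above contains the call usleep((T-ttrack)*1e6), which means the running speed of this program depends on the timestamps you provide.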
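To make the timestamp dependence concrete, here is a minimal sketch of the wait computed at the end of each loop iteration. `frameWait` is a hypothetical helper written for this note, not part of ORB-SLAM2; it reproduces the T / ttrack logic of the example loop using only the standard library.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of ORB-SLAM2): seconds the example loop
// would sleep after processing frame `ni`, given the frame timestamps in
// seconds and the measured tracking time `ttrack` in seconds.
double frameWait(const std::vector<double>& timestamps, std::size_t ni, double ttrack)
{
    assert(ni < timestamps.size());

    // T is the gap to the next frame, or to the previous one for the last frame.
    double T = 0.0;
    if (ni + 1 < timestamps.size())
        T = timestamps[ni + 1] - timestamps[ni];
    else if (ni > 0)
        T = timestamps[ni] - timestamps[ni - 1];

    // Sleep only if tracking finished before the next frame is due.
    return ttrack < T ? T - ttrack : 0.0;
}
```

With timestamps 0, 1, 2, ... (files named with natural numbers) the gap T is one second, so each iteration sleeps for almost a full second no matter how fast tracking is. With timestamps spaced about 1/30 s apart, the wait shrinks to a few milliseconds or disappears.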

III. System.cc

As shown above, the main entry point of ORB-SLAM2 is defined by the System class. Let's look at this class's header file.

class System

{

public:

// Input sensor

enum eSensor{

MONOCULAR=0,

STEREO=1,

RGBD=2

};

public:

// Initialize the SLAM system. It launches the Local Mapping, Loop Closing and Viewer threads.

System(const string &strVocFile, const string &strSettingsFile, const eSensor sensor, const bool bUseViewer = true);

// Process the given stereo frame. Images must be synchronized and rectified.

// Input images: RGB (CV_8UC3) or grayscale (CV_8U). RGB is converted to grayscale.

// Returns the camera pose (empty if tracking fails).

cv::Mat TrackStereo(const cv::Mat &imLeft, const cv::Mat &imRight, const double &timestamp);

// Process the given rgbd frame. Depthmap must be registered to the RGB frame.

// Input image: RGB (CV_8UC3) or grayscale (CV_8U). RGB is converted to grayscale.

// Input depthmap: Float (CV_32F).

// Returns the camera pose (empty if tracking fails).

cv::Mat TrackRGBD(const cv::Mat &im, const cv::Mat &depthmap, const double &timestamp);

// Process the given monocular frame

// Input images: RGB (CV_8UC3) or grayscale (CV_8U). RGB is converted to grayscale.

// Returns the camera pose (empty if tracking fails).

cv::Mat TrackMonocular(const cv::Mat &im, const double &timestamp);

// This stops local mapping thread (map building) and performs only camera tracking.

void ActivateLocalizationMode();

// This resumes local mapping thread and performs SLAM again.

void DeactivateLocalizationMode();

// Reset the system (clear map)

void Reset();

// All threads will be requested to finish.

// It waits until all threads have finished.

// This function must be called before saving the trajectory.

void Shutdown();

// Save camera trajectory in the TUM RGB-D dataset format.

// Only for stereo and RGB-D. This method does not work for monocular.

// Call first Shutdown()

// See format details at: http://vision.in.tum.de/data/datasets/rgbd-dataset

void SaveTrajectoryTUM(const string &filename);

// Save keyframe poses in the TUM RGB-D dataset format.

// This method works for all sensor input.

// Call first Shutdown()

// See format details at: http://vision.in.tum.de/data/datasets/rgbd-dataset

void SaveKeyFrameTrajectoryTUM(const string &filename);

// Save camera trajectory in the KITTI dataset format.

// Only for stereo and RGB-D. This method does not work for monocular.

// Call first Shutdown()

// See format details at: http://www.cvlibs.net/datasets/kitti/eval_odometry.php

void SaveTrajectoryKITTI(const string &filename);

// TODO: Save/Load functions

// SaveMap(const string &filename);

// LoadMap(const string &filename);

private:

// Input sensor

eSensor mSensor;

// ORB vocabulary used for place recognition and feature matching.

ORBVocabulary* mpVocabulary;

// KeyFrame database for place recognition (relocalization and loop detection).

KeyFrameDatabase* mpKeyFrameDatabase;

// Map structure that stores the pointers to all KeyFrames and MapPoints.

Map* mpMap;

// Tracker. It receives a frame and computes the associated camera pose.

// It also decides when to insert a new keyframe, create some new MapPoints and

// performs relocalization if tracking fails.

Tracking* mpTracker;

// Local Mapper. It manages the local map and performs local bundle adjustment.

LocalMapping* mpLocalMapper;

// Loop Closer. It searches loops with every new keyframe. If there is a loop it performs

// a pose graph optimization and full bundle adjustment (in a new thread) afterwards.

LoopClosing* mpLoopCloser;

// The viewer draws the map and the current camera pose. It uses Pangolin.

Viewer* mpViewer;

FrameDrawer* mpFrameDrawer;

MapDrawer* mpMapDrawer;

// System threads: Local Mapping, Loop Closing, Viewer.

// The Tracking thread "lives" in the main execution thread that creates the System object.

std::thread* mptLocalMapping;

std::thread* mptLoopClosing;

std::thread* mptViewer;

// Reset flag

std::mutex mMutexReset;

bool mbReset;

// Change mode flags

std::mutex mMutexMode;

bool mbActivateLocalizationMode;

bool mbDeactivateLocalizationMode;

};

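The class members are:

- The ORB vocabulary (bag-of-words) object

- The keyframe database object

- The map object, which stores pointers to all KeyFrames and MapPoints

- The Tracking object

- The Local Mapping object

- The Loop Closing object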

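The main member functions do the following:

- The constructor initializes the SLAM system for the chosen sensor

- TrackMonocular feeds each frame into the SLAM system for processing

- Shutdown stops the SLAM threads

- SaveKeyFrameTrajectoryTUM writes the keyframe poses in the TUM dataset format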
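For reference, the TUM trajectory format stores one pose per line as `timestamp tx ty tz qx qy qz qw`. The sketch below formats such a line. `KeyFramePose` and `formatTumLine` are hypothetical names used only for illustration, and the precision settings are an assumption rather than a statement of what System.cc does.

```cpp
#include <iomanip>
#include <sstream>
#include <string>

// Hypothetical pose record: timestamp in seconds, translation (tx, ty, tz)
// and orientation as a unit quaternion (qx, qy, qz, qw).
struct KeyFramePose {
    double timestamp;
    double tx, ty, tz;
    double qx, qy, qz, qw;
};

// Format one pose as a TUM trajectory line: "timestamp tx ty tz qx qy qz qw".
std::string formatTumLine(const KeyFramePose& p)
{
    std::ostringstream out;
    out << std::fixed
        << std::setprecision(6) << p.timestamp   // assumed precision
        << std::setprecision(7)
        << ' ' << p.tx << ' ' << p.ty << ' ' << p.tz
        << ' ' << p.qx << ' ' << p.qy << ' ' << p.qz << ' ' << p.qw;
    return out.str();
}
```

Writing one such line per keyframe, in time order, gives a file that the TUM evaluation tools can read.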

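Next, let's look at what System.cc actually does.

First, the System constructor:

- Reads the system settings file

- Reads the ORB vocabulary file (txt or bin)

- Initializes the members

- Starts the LocalMapping, LoopClosing, and Viewer threads directly (the Tracking thread is actually the main thread)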

Second, the TrackMonocular function calls Tracking's GrabImageMonocular function to process each frame. This is where execution enters the tracking module; we will study Tracking.cc later.
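The mode-change flags guarded by mMutexMode in the header suggest how requests from other threads reach the tracking thread: the caller only records the request, and the tracking thread applies it at the start of the next frame. The following is a minimal sketch of that hand-off pattern under stated assumptions. `MiniSystem`, `trackFrame`, and `mLocalizationOnly` are hypothetical stand-ins, not the real System.cc code.

```cpp
#include <mutex>

// Hypothetical stand-in for System: other threads request a mode change,
// and the tracking thread applies it when the next frame arrives.
class MiniSystem {
public:
    // Callable from any thread: only records the request.
    void ActivateLocalizationMode()
    {
        std::lock_guard<std::mutex> lock(mMutexMode);
        mbActivateLocalizationMode = true;
    }

    void DeactivateLocalizationMode()
    {
        std::lock_guard<std::mutex> lock(mMutexMode);
        mbDeactivateLocalizationMode = true;
    }

    // Called from the tracking (main) thread once per frame.
    // Returns true if this frame is processed in localization-only mode.
    bool trackFrame()
    {
        {
            std::lock_guard<std::mutex> lock(mMutexMode);
            if (mbActivateLocalizationMode) {
                mLocalizationOnly = true;   // a real system would also pause local mapping
                mbActivateLocalizationMode = false;
            }
            if (mbDeactivateLocalizationMode) {
                mLocalizationOnly = false;  // a real system would resume local mapping
                mbDeactivateLocalizationMode = false;
            }
        }
        // The per-frame tracking call would go here.
        return mLocalizationOnly;
    }

private:
    std::mutex mMutexMode;
    bool mbActivateLocalizationMode = false;
    bool mbDeactivateLocalizationMode = false;
    bool mLocalizationOnly = false;  // only touched by the tracking thread
};
```

Keeping the lock to a short scope around the flags means a request never blocks for the duration of a frame, and the mode can only change between frames.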

Finally, the Shutdown function stops the LocalMapping, LoopClosing, and Viewer threads.
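The start-up and shutdown sequence can be sketched with plain std::thread. This is a simplified illustration, not the ORB-SLAM2 implementation: `Worker`, `MiniSlam`, `allFinished`, and the polling intervals are assumptions, with `RequestFinish` and `isFinished` standing in for the finish handshake of each module.

```cpp
#include <atomic>
#include <chrono>
#include <thread>

// Hypothetical stand-in for LocalMapping / LoopClosing / Viewer.
class Worker {
public:
    // Thread body: idle until a finish is requested, then report completion.
    void Run()
    {
        while (!mFinishRequested.load())
            std::this_thread::sleep_for(std::chrono::milliseconds(1));
        mFinished.store(true);
    }
    void RequestFinish() { mFinishRequested.store(true); }
    bool isFinished() const { return mFinished.load(); }

private:
    std::atomic<bool> mFinishRequested{false};
    std::atomic<bool> mFinished{false};
};

// Hypothetical stand-in for System: the constructor launches the worker
// threads, while tracking stays in the thread that created the object.
class MiniSlam {
public:
    MiniSlam()
        : mLocalMappingThread(&Worker::Run, &mLocalMapper),
          mLoopClosingThread(&Worker::Run, &mLoopCloser)
    {}

    // Ask every worker to stop, wait until all report finished, then join.
    void Shutdown()
    {
        mLocalMapper.RequestFinish();
        mLoopCloser.RequestFinish();
        while (!mLocalMapper.isFinished() || !mLoopCloser.isFinished())
            std::this_thread::sleep_for(std::chrono::milliseconds(5));
        if (mLocalMappingThread.joinable()) mLocalMappingThread.join();
        if (mLoopClosingThread.joinable()) mLoopClosingThread.join();
    }

    bool allFinished() const
    {
        return mLocalMapper.isFinished() && mLoopCloser.isFinished();
    }

    ~MiniSlam() { Shutdown(); }

private:
    // Workers are declared before the threads so they are constructed first.
    Worker mLocalMapper;
    Worker mLoopCloser;
    std::thread mLocalMappingThread;
    std::thread mLoopClosingThread;
};
```

Because Shutdown waits until every worker has reported that it finished, the map is no longer being modified when it returns, which is consistent with the header's note that Shutdown must be called before saving the trajectory.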